In late winter of 1975, a seismologist named Cao Xianqing tracked a series of small earthquakes near Haicheng, China, which he took to presage a much larger one to come. On the morning of February 3, officials ordered evacuations of the surrounding communities. Despite the subfreezing weather, many residents abandoned their homes, although others refused, dismissing the warning as another cry of “wolf” in a string of false alarms.

Yet this time, around dinnertime the very next day, Cao’s prognosis materialized in the form of a massive, magnitude 7.3 quake. Bridges collapsed, pipes ruptured, and buildings crumbled. But the accurate early alert—the first ever documented—spared thousands of lives: Of the 150,000 casualties predicted for a disaster of comparable size, only about 25,000 were tallied, including just over 2,000 deaths.

The successful forecast of the Haicheng quake seemed to justify the optimism felt by earthquake researchers around the world, who believed they were on the brink of unlocking the secrets of Earth’s tectonic motion. Just a few years earlier, in 1971, geophysicist Don Anderson, who headed the California Institute of Technology’s renowned Seismology Laboratory, had boasted that prediction science would soon pay big dividends. With enough funds, he told a local reporter, “it would in my opinion be possible to forecast a quake in a given area within a week.”

Those funds duly arrived. In 1978, the United States Geological Survey (USGS) allocated over half its research budget ($15.76 million) to earthquake prediction, a level of spending that continued for much of the next decade. Scientists deployed hundreds of seismometers and other sensors, hoping to observe telltale signals heralding the arrival of the next big one. They looked for these signs in subterranean fluids, crustal deformations, radon gas emissions, electric currents, even animal behavior. But every avenue they explored led to a dead end.

“In our long search for signals, we never saw anything that could be used in a reliable way,” says Ruth Harris, a geophysicist at the USGS. “Either the method wasn’t repeatable or it looked like the original thing was just a case of noise.” Even the famous Haicheng prediction turned out to be little more than fabulously good luck. Cao later admitted he had based his warning partly on foreshocks, which precede some large quakes by minutes to days, and mostly on superstition. According to a book he’d read called Serendipitous Historical Records of Yingchuan, the heavy autumn rains of 1974 would “surely be followed” by a winter earthquake.

Since the early 20th century, scientists have known that large quakes often cluster in time and space: 99 percent of them occur along well-mapped boundaries between plates in Earth’s crust and, in geological time, repeat almost like clockwork. But after decades of failed experiments, most seismologists came to believe that forecasting earthquakes in human time—on the scale of dropping the kids off at school or planning a vacation—was about as scientific as astrology. By the early 1990s, prediction research had disappeared as a line item in the USGS’s budget. “We got burned enough back in the 70s and 80s that nobody wants to be too optimistic about the possibility now,” says Terry Tullis, a career seismologist and chair of the National Earthquake Prediction Evaluation Council (NEPEC), which advises the USGS.

Defying the skeptics, however, a small cadre of researchers has held on to the faith that, with the right detectors and computational tools, it will be possible to predict earthquakes with the same precision and confidence as we do just about any other extreme natural event, including floods, hurricanes, and tornadoes. The USGS may have simply given up too soon. After all, the believers point out, advances in sensor design and data analysis could allow for the detection of subtle precursors that seismologists working a few decades ago might have missed.

And the stakes couldn’t be higher. The three biggest natural disasters in human history, measured in dollars and cents, have all been earthquakes, and there’s a good chance the next one will be too. According to the USGS, a magnitude 7.8 quake along Southern California’s volatile San Andreas fault would result in 1,800 deaths and a clean-up bill of more than $210 billion—tens of billions of dollars more than the cost of Hurricane Katrina and the Deepwater Horizon oil spill combined.

At a time when American companies and institutions are bankrolling “moonshot” projects like self-driving cars, space tourism, and genomics, few problems may be as important—and as neglected—as earthquake prediction.

David Schaff was a senior at Northwestern University when a magnitude 6.7 earthquake struck the upscale Los Angeles suburb of Northridge in January 1994. A devout Christian, Schaff saw a connection between the time the quake began (4:31 a.m.) and verse 4:31 in Acts of the Apostles, a book of the New Testament: “After they prayed, the place where they were meeting was shaken.”

“I was amazed that God would have complete control of the timing and location of something as chaotic, energetic, and unpredictable as an earthquake,” says Schaff, now a professor at Columbia University. He believed the Northridge quake was a divine message. “I thought, as a scientist who was a believer, He might use me to help warn people of impending earthquakes.”

After graduation, Schaff enrolled at Stanford University to pursue a Ph.D. in geophysics. “I was learning about earthquakes from my academic advisors and from my pastor in my church,” he says. His faith told him that “earthquakes serve many purposes, and one is to create awe and wonder and amazement in God—and also fear.” But he didn’t doubt they also had a physical origin.

As Schaff’s textbooks laid out, the dozen or so tectonic plates that make up Earth’s crust are always on the move, continuously slipping past or sliding over each other. They creep as fast as a few inches a year, deforming the rock at the seams until it can no longer withstand the strain. Then, in one sudden and violent motion, all that tension is released. The rock snaps apart, shifting the earth as much as several feet in a matter of seconds.

This “elastic rebound” generates two types of seismic waves. The fastest waves, and thus the first to hit nearby communities or infrastructure, are high-frequency body waves, which travel through Earth’s interior. Body waves come in two flavors: Primary “P” waves push and pull the ground as they race outward like sound through air, while secondary “S” waves rattle the rock up and down. The effects of these waves are typically so subtle that, although some animals can sense them, humans notice only a quick jolt or vibrations—or nothing at all. Virtually all of the damage caused by earthquakes is due to slower, low-frequency surface waves: Love waves, which wobble things side to side, and Rayleigh waves, which roll like breakers in the deep ocean, toppling power lines and lifting buildings off their foundations.

The vast majority of shaking that seismometers detect on a daily basis, however, has nothing to do with earthquakes. In fact, the earth is constantly awash in ambient noise—extremely low-amplitude Love and Rayleigh waves produced by road and rail traffic, industrial activity, and natural movements like wind. Ocean waves are a major source of this background rumbling: Striking the continental margin, they generate gentle reverberations that can propagate thousands of miles. Schaff’s advisors taught him to discard ambient noise, which they sometimes cursed for masking geological signals.

But in 2001, as Schaff was beginning a post-doctoral fellowship at Columbia, physicists at the University of Illinois published a paper showing that ambient noise had a secret utility: By correlating measurements from distant receivers, one could estimate how fast ambient waves propagated, and thus determine the composition of the material the waves had traveled through.1 Indeed, in a 2005 study in the journal Science, a team led by seismologist Nikolai Shapiro, then at the University of Colorado at Boulder, used ambient-noise recordings to map the ground beneath Southern California.2 Unusually slow waves, the team discovered, corresponded to sedimentary basins, while unusually fast waves indicated the igneous cores of mountains.
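The correlation idea can be illustrated with a minimal Python sketch. It is a toy model, not the method used in the cited studies: it assumes a single noise wavefield that passes receiver A and reaches receiver B after a fixed delay, with independent local noise added at each site. The sample rate, receiver spacing, wave speed, and the helper name `estimate_delay` are all hypothetical choices made for illustration.

```python
import numpy as np

def estimate_delay(rec_a, rec_b, sample_rate_hz):
    """Return the time shift (seconds) at which rec_b best lines up with rec_a."""
    n = len(rec_a)
    # Full cross-correlation; output index n - 1 corresponds to zero lag.
    xcorr = np.correlate(rec_b, rec_a, mode="full")
    lag_samples = int(np.argmax(xcorr)) - (n - 1)
    return lag_samples / sample_rate_hz

# Hypothetical setup: every number here is an illustrative assumption.
sample_rate_hz = 100.0
distance_m = 3000.0          # spacing between the two receivers
true_velocity = 3000.0       # m/s, speed of the simulated wave
delay_samples = int(round(distance_m / true_velocity * sample_rate_hz))

rng = np.random.default_rng(0)
n = 4000
wavefield = rng.standard_normal(n + delay_samples)

# Receiver B sees the same wavefield as A, but delay_samples later.
rec_a = wavefield[delay_samples:] + 0.5 * rng.standard_normal(n)
rec_b = wavefield[:n] + 0.5 * rng.standard_normal(n)

travel_time_s = estimate_delay(rec_a, rec_b, sample_rate_hz)
estimated_velocity = distance_m / travel_time_s

print(f"travel time: {travel_time_s:.2f} s")
print(f"estimated velocity: {estimated_velocity:.0f} m/s")
```

Neither recording looks like anything but random shaking on its own. The travel time only emerges when the two are compared, which is why noise that is useless at a single station becomes informative across a network.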

Scientists quickly realized that ambient noise could similarly provide a means to observe geologic turmoil brewing deep underground. In 2010, in the weeks before two consecutive eruptions of Piton de la Fournaise, a volcano on the French island of La Reunion, in the Indian Ocean, volcanologist Anne Obermann and her colleagues at the Swiss Seismological Service detected decreases in the velocity of ambient waves passing through the earth below.3 Their discovery bolstered a controversial theory called dilatancy, which posits that cracks opening up in stressed subterranean rock will cause it to expand. This gradual dilation slows any seismic waves traveling through, possibly warning that the rock is nearing its breaking point.

First proposed in the 1970s, dilatancy seemed to explain Soviet scientists’ apparent progress in predicting major earthquakes by tracking speed changes in S and P waves from minor quakes and other seismic activity. But when this approach was later discredited, dilatancy fell into disrepute.

In light of Obermann’s findings, Schaff, who had since become an associate professor, wondered whether the theory might have a kernel of truth. If one could measure shifts in the velocity of ambient waves preceding volcanic eruptions, maybe it was possible to detect similar shifts before large earthquakes. There was already a hint that it was. In a 2008 Nature paper, seismologists led by Fenglin Niu, at Rice University, in Texas, reported the slowing of seismic waves hours before two small quakes in the tiny farming community of Parkfield, California.4

Niu, however, had created his own noise. Using a buried piezoelectric transmitter, which converts electrical energy to motion, he had sent surface waves to a recorder a few meters away. Replicating this set-up on a practical scale, along the lengths of entire faults, would be ruinously expensive.

“The beauty of ambient noise is that it’s free,” Schaff says. In 2010, he began mining archival noise data from a network of USGS seismometers near Parkfield—a site long seen, ironically, as a monument to the folly of earthquake prediction.

Situated slap bang on top of the San Andreas fault separating the North American and Pacific Plates, Parkfield experienced an earthquake of magnitude 6.0 or greater about once every 22 years between 1857 and 1966. In 1985, the USGS confidently announced that the next one would occur before 1993. In anticipation, researchers blanketed the area with instruments, including seismometers, strain gauges, dilatometers, magnetometers, and GPS sensors.
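The arithmetic behind that forecast is simple enough to sketch. The years below are the commonly cited dates of the Parkfield sequence; they are not listed in this article, so treat them as an outside assumption. The sketch only reproduces the average-interval reasoning, not the USGS's actual statistical model.

```python
# Commonly cited Parkfield mainshock years (assumption: not given in the article).
quake_years = [1857, 1881, 1901, 1922, 1934, 1966]

# Gaps between consecutive quakes, in years.
intervals = [later - earlier for earlier, later in zip(quake_years, quake_years[1:])]
mean_interval = sum(intervals) / len(intervals)

# Naive expectation: last quake plus the average gap.
expected_next = quake_years[-1] + mean_interval

# The quake that actually arrived, per the article, came in 2004.
actual_gap = 2004 - quake_years[-1]

print(intervals)                 # gaps range from 12 to 32 years
print(round(mean_interval, 1))   # average gap
print(round(expected_next))      # naive expected year
print(actual_gap)                # gap that actually occurred
```

The individual gaps vary widely around the average, which hints at how fragile a forecast built on six events was.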

But it wasn’t until 2004 that another biggish one, a magnitude 6.0 quake, finally hit. And if the decades-long wait weren’t bad enough, the sensor results were worse. “Prior to the 2004 earthquake, the scores of instruments at Parkfield … recorded nothing out of the ordinary,” reports seismologist Susan Elizabeth Hough in her book Predicting the Unpredictable. “The fault did not start to creep in advance of the earthquake. The crust did not start to warp; no unusual magnetic signals were recorded. The earthquake wasn’t even preceded by the large foreshock scientists were expecting.”

Schaff, however, wondered if faint precursors of the 2004 quake might be hiding in ambient noise, where the USGS hadn’t thought to look. “One man’s signal is another man’s noise,” he says.

To get a clear speed reading of ambient waves, scientists typically must average data over periods as long as a month, making it hard to pinpoint precisely when a change occurred. But because Parkfield had such a high density of seismometers (13 within 20 kilometers of the quake’s epicenter), Schaff was able to get the resolution down to a single day. His calculations showed that ambient waves had slowed during the quake itself, then gradually sped up as the earth settled into a new shape. But try as he might, Schaff couldn’t tease out any significant speed changes in the days leading up to the main event.5
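One way to see why station density can substitute for long averaging windows is a small simulation. This is a sketch under strong assumptions, not a reconstruction of Schaff's analysis: it treats every reading as having independent random error, and the wave speed and error size are hypothetical. With 13 stations there are 78 possible station pairs, so one day of data from all pairs averages more readings than a month of data from a single pair.

```python
import math
import numpy as np

rng = np.random.default_rng(1)
true_velocity = 3000.0   # m/s, hypothetical
single_error = 30.0      # m/s scatter of one pair on one day, hypothetical

def scatter_of_mean(n_measurements, trials=2000):
    """Empirical standard deviation of the mean of n independent noisy readings."""
    noise = single_error * rng.standard_normal((trials, n_measurements))
    readings = true_velocity + noise
    return readings.mean(axis=1).std()

n_pairs = math.comb(13, 2)                   # station pairs among 13 seismometers
one_pair_month = scatter_of_mean(30)         # one pair, averaged over 30 days
all_pairs_one_day = scatter_of_mean(n_pairs) # all pairs, a single day

print(n_pairs)
print(f"one pair, 30 days: +/- {one_pair_month:.1f} m/s")
print(f"all pairs, 1 day:  +/- {all_pairs_one_day:.1f} m/s")
```

Under these assumptions the scatter shrinks roughly with the square root of the number of readings averaged. Real station pairs share noise sources and paths, so their errors are not fully independent and the actual gain would be smaller.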

“The conclusion for this particular earthquake was that, if there was a change, it was too small, too short, or might not have occurred in the area we were sampling,” he says. “It would be worth designing experiments with more stations to monitor areas where we suspect there might be an earthquake.” Schaff isn’t the only researcher who thinks this. At least a few dozen independent scientists in the U.S. and abroad have devoted their careers—and sometimes their own bank accounts—to the hunt for predictive signals, scrutinizing not just ambient noise but also thermal radiation and electromagnetic fields.

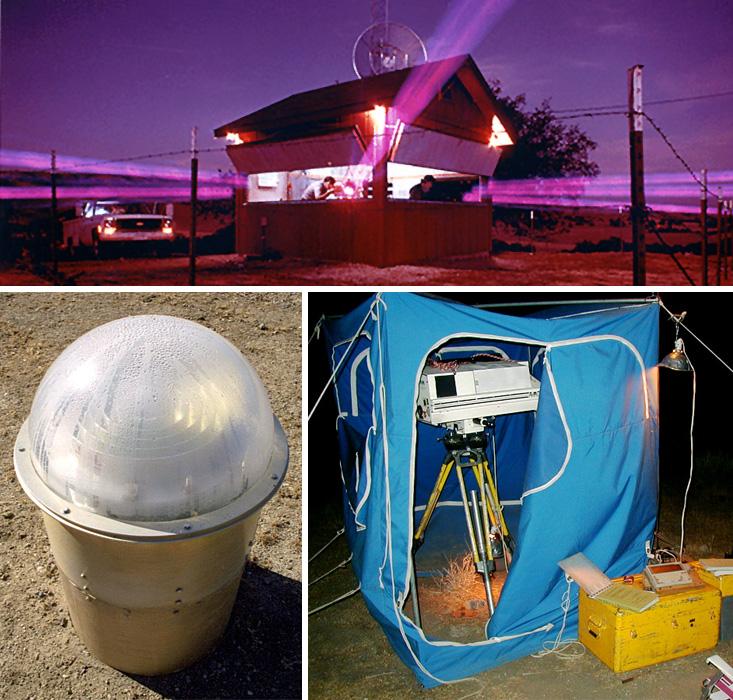

Tom Bleier, a former satellite engineer living about a thousand feet from the San Andreas fault, near Silicon Valley, operates a network of magnetometers called QuakeFinder. He got interested in earthquake precursors more than two decades ago, intrigued by scientists’ reporting of strange electromagnetic fluctuations days before a magnitude 6.9 quake struck Loma Prieta, south of San Francisco, in 1989. With $2 million from NASA, QuakeFinder has since expanded from a handful of DIY sensors to a global network of 165 stations, most of them installed along faults in California, including San Andreas, Hayward, and San Jacinto.

So far, QuakeFinder has captured data for seven medium to large earthquakes (greater than magnitude 5.0). In the weeks prior to several of them, Bleier says, the data show distinctive electromagnetic pulses between 0.1 and 10 nanotesla in size, as much as 100,000 times weaker than Earth’s natural field. It’s possible, he points out, that these faint blips occurred before all seven quakes, but in some cases were drowned out by other magnetic disturbances, such as from lightning, solar storms, or even passing cars.

Friedemann Freund, a physicist associated with the SETI Institute who has also attracted NASA funding, has a theory about what might be causing all this pulsing. Days before an earthquake, he believes, stressed underground rock generates large electric currents, which migrate to the surface, perturbing Earth’s magnetic field while simultaneously ionizing the air and emitting a burst of infrared energy. (On Freund’s advice, each of QuakeFinder’s $50,000 magnetometer stations also includes ion sensors.)

Most seismologists are highly skeptical of Freund’s theory. “NASA really likes to fund things that are a leap into the unknown,” says John Vidale, a professor of earth and space science at the University of Washington and the state’s official seismologist. “But there are leaps into the unknown and leaps into things that we know are not likely to work out. There’s no reason why what Freund is saying couldn’t be right. It’s just extremely unlikely that it is.”

Even Bleier concedes that QuakeFinder isn’t ready to publish its predictions just yet. “We wouldn’t go off and issue public forecasts without being under the guidance of USGS,” he says. Even in the throes of optimism, it’s important to be cautious. “You can imagine a scenario where you feel there’s going to be an earthquake in San Francisco, and you issue a public forecast, and the city empties out—and no earthquake happens.”

There’s no need to imagine. In the late 1970s, Brian Brady, a geophysicist with the U.S. Bureau of Mines, believed he had found a mathematical model that could predict earthquakes by analyzing stresses along a fault. He warned that a series of rumblings culminating in a gargantuan, magnitude 9.9 quake would strike Lima, Peru, in 1981. But when the alleged start date rolled around, Lima was nothing more than a perfectly intact ghost town.

Eight years later, in 1989, a self-taught climatologist named Iben Browning made a similar blunder when he proclaimed, based on tidal observations, that several earthquakes would rattle New Madrid, Missouri, on Dec. 3, 1990. Schools closed, emergency services geared up for disaster, and locals fled. But no quake ever came.

It’s no wonder, Schaff says, that funders such as the USGS and the National Science Foundation (NSF) are now gun-shy about anything that smacks of prediction. “They view this research as high-reward but also high-risk. Budgets are tight. The NSF did fund one of my projects, but the second one they didn’t.” Bleier, too, is scrambling to keep QuakeFinder going and expand its reach. “We’d love to do a network up in the Pacific Northwest, where they’re really concerned about a major earthquake and tsunami, but we just don’t have the money,” he says.

Most mainstream seismologists, however, aren’t interested in a moonshot. “Personally, I don’t think we’ll ever be able to say ‘There will be a magnitude 7.0 earthquake at this minute in this place’,” says the USGS’s Harris. “The earth is just so complicated. Putting a lot of money into understanding subduction zones and the mechanics of faults—and retrofitting buildings—would be great. But just putting money into earthquake prediction wouldn’t be worth it.”

The NEPEC’s Tullis agrees. “We don’t know enough,” he says. Even so, he admits, he’s willing to hold out hope that, like any deliberate venture into the unknown, the slow grind of seismology might eventually yield a breakthrough no one was expecting. “Over time, with enough measurements and careful analysis, maybe at some point, someone will stumble across something that has definitive predictive value,” he says, and then, perhaps wary of sounding too sanguine, quickly adds a caveat: “It will certainly have to be proven, assuming it’s even possible. It will need an awful lot of testing.”

Schaff, meanwhile, is putting his confidence in a power higher than the USGS. “After 22 years of study,” he says, “I have come to the conclusion that it is impossible for mankind to predict earthquakes without God giving insight into the secrets and mysteries of their occurrence.”

Mark Harris is an investigative science and technology reporter living near the Seattle Fault. In 2014, he was a Knight Science Journalism Fellow at MIT, and in 2015, he won a AAAS Kavli Science Journalism Gold Award for his article “How a Lone Hacker Shredded the Myth of Crowdsourcing,” published in Backchannel. He recently purchased earthquake insurance.

References

1. Lobkis, O.I. & Weaver, R.L. On the emergence of the Green’s function in the correlations of a diffuse field. The Journal of the Acoustical Society of America 110, 3011-3017 (2001).

2. Shapiro, N.M., Campillo, M., Stehly, L., & Ritzwoller, M.H. High-resolution surface-wave tomography from ambient seismic noise. Science 307, 1615-1618 (2005).

3. Obermann, A., Planès, T., Larose, E., & Campillo, M. Imaging preeruptive and coeruptive structural and mechanical changes of a volcano with ambient seismic noise. Journal of Geophysical Research 118, 6285-6294 (2013).

4. Niu, F., Silver, P.G., Daley, T.M., Cheng, X., & Majer, E.L. Preseismic velocity changes observed from active source monitoring at the Parkfield SAFOD drill site. Nature 454, 204-208 (2008).

5. Schaff, D.P. Placing an upper bound on preseismic velocity changes measured by ambient noise monitoring for the 2004 Mw 6.0 Parkfield earthquake (California). Bulletin of the Seismological Society of America 102, 1400-1416 (2012).