How many researchers does it take to change a test of artificial intelligence?

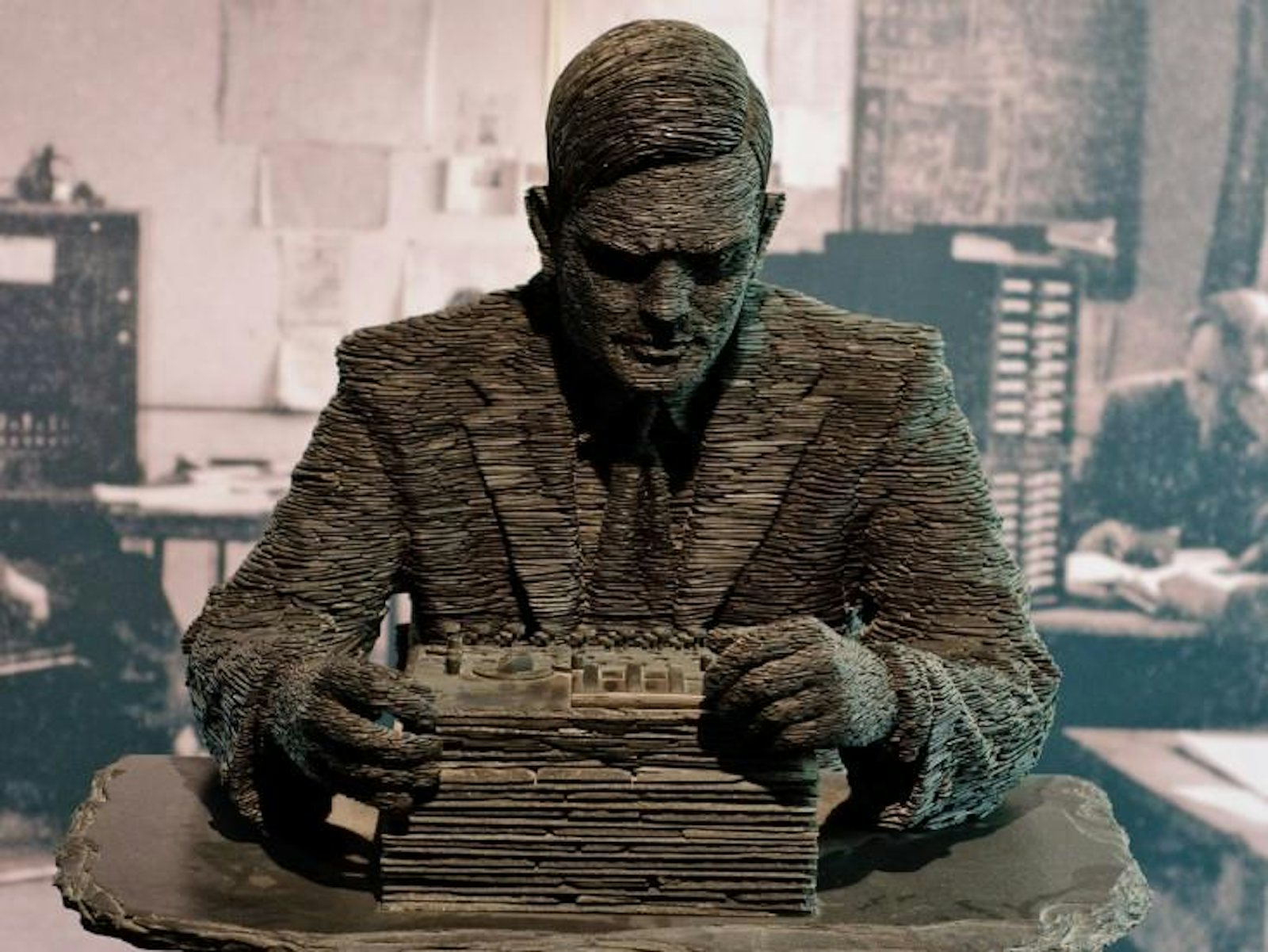

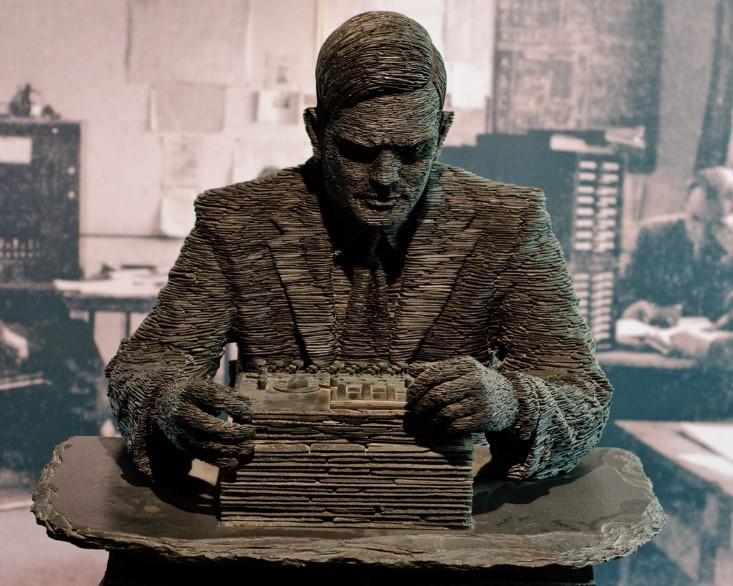

Sixty-five years ago, famed mathematician and WWII code-breaker Alan Turing unveiled the “Imitation Game,” a playful scenario designed to test a computer’s ability to disguise itself as a human agent. The Imitation Game, as Turing described it, resembles the classic game show To Tell the Truth, in which two people make an identical claim, like “I am a taxi driver in New York City,” and a human interviewer asks them questions to determine which of the pair is lying. In Turing’s version, one of the two interviewees is replaced by a computer.

In the years since, what Turing originally intended as a philosophical examination of whether machines can think has morphed in other people’s minds into the “Turing test,” shorthand for a nebulous threshold of performance beyond which a machine might be termed “intelligent.”

In the summer of 2014, it was widely reported that a chatbot program named Eugene Goostman had passed Turing’s test when it was pitted against similar programs and convinced the most people that they were chatting with a human and not a computer. But leading artificial-intelligence researchers were decidedly not convinced. The Goostman bot had not truly beaten the Turing test, many said; it fooled only a third of its human interviewers, in short conversations, and only by using a crutch: it pretended to be a 13-year-old Ukrainian boy with a limited grasp of the English language. Psychologist Gary Marcus says the bot’s feat says more about how easily we are fooled than about the advent of intelligent machines.

More importantly, many researchers said the entire idea behind the test was flawed and a distraction from more significant questions. Thanks to Goostman, what had been a quiet undercurrent within the field rapidly overflowed its banks, eventually spilling into a riverside hotel conference room in Austin, Texas, during a conference last month on artificial intelligence. There, 50 or so computer scientists, roboticists, linguists, psychologists, and neuroscientists gathered to chart a new course for AI, with many calling for the venerable Turing test to be retired in favor of a new series of tests, ones they hope will more accurately measure human and machine intelligence and ultimately inspire more useful research.

Marcus, a professor at New York University and an organizer of the recent conference, helped channel the experts’ skepticism about the attention paid to Goostman’s ostensible victory. “We all see that the Turing test has a central spot in public’s imagination,” Marcus says, “but every one of us in the room thinks it’s an illegitimate test, and that it’s a poor goal for artificial intelligence.”

The biggest downside of the Turing test is also likely the same reason it’s become so well-known: its simplicity. “I think the real problem with the Turing test is that it only tests one narrow sliver of human intelligence,” says Doug Lenat, CEO of artificial-intelligence company Cycorp and a former Stanford professor. Lenat and his colleagues put more stock in Howard Gardner’s “Theory of Multiple Intelligences,” which treats cognition not as a single general trait but as a set of specific abilities. While computers like IBM’s Jeopardy-winning Watson are notoriously “single-minded” in their capabilities, Lenat says, truly intelligent machines will need to reflect the varied aspects of human intelligence. The group unanimously agreed that evaluating these diverse intelligences will require diverse tests, and they ended the day by sketching out several Turing test replacements.

The test that’s now most fully developed asks a computer to apply “common sense” to language. Consider the following sentence:

The city council refused to give the demonstrators a permit because they [feared/advocated] violence.

If the word is “feared,” then the pronoun “they” refers to “the city council.” If the word chosen is “advocated,” the pronoun shifts to “the demonstrators.” Sentences like this, known as Winograd schemas, are easy for us to decipher, but current computers cannot reliably resolve them. The Winograd Schema Challenge, sponsored by the AI firm Nuance Communications, will invite teams to compete later this year.

A second test, led by Stanford’s Fei-Fei Li, will require computers to decipher the meaning of images and video. Search engines are currently unable to index the web’s visual media unless it is accompanied by text descriptions; intelligent computer vision would bring much of this digital dark matter into the light. A third test would challenge machines like Watson to move beyond Jeopardy to answer elementary-school standardized-test questions, and perhaps use that knowledge to tutor human students.

The fourth and perhaps most ambitious proposal is jokingly titled “The IKEA Challenge.” It may sound like a bad, corporate-sponsored reality show, but this test is designed to examine a robot’s ability to decipher language while physically cooperating with humans. That means interpreting written instructions, choosing the right piece, and holding it in just the right position for a human teammate to turn the screw. Skynet, apparently, starts with a piece of Swedish furniture. Marcus suggests that as these tests are combined and as new ones are developed, intelligent machines may one day be asked to compete in a “Turing Decathlon.”

So how long until computers reach our level? This group wisely avoids such predictions, but Francesca Rossi, professor of computer science at Harvard and the University of Padova in Italy, voiced a sentiment of humility that echoed throughout the workshop. Speaking of human intelligence, Rossi said, “I don’t think we know enough about our brains and our mind to know exactly what it is.” Considering our limited self-understanding, it’s hard to say when computers might match us.

The group will reconvene at a conference later this year to continue refining the new tests. No word yet on whether Alan Turing is invited.

For updates and more information, visit the “Beyond the Turing Test” workshop website.

Joe Hanson, Ph.D., is the creator of the PBS Digital Studios YouTube series and website “It’s Okay to be Smart.” He has also written for Scientific American and WIRED, and his writing has been featured in The Open Laboratory: The Best Science Writing Online. He is based in Austin, Texas.