In 2016, a Mercedes-Benz executive was quoted as saying that the company’s self-driving cars would put the safety of their own occupants first. The comment drew harsh reactions, with critics picturing luxury cars mowing down innocent bystanders, until the company walked back the statement. Yet protecting the driver at any cost is what drivers want: A recently published study in Science (available to read on arXiv) shows that, although people in principle want intelligent cars to save as many lives as possible (by avoiding a crowd of children, for example), they also want the car they ride in to protect its occupants first.

It would be hard to trust such an algorithm, because we, the humans nominally in charge of the A.I., don’t ourselves have the “right” ethical answer to this dilemma. Potentially worse, the algorithm itself might change. No matter how a car’s A.I. is initially programmed, if it is designed to learn and improve itself as it drives, it may act unpredictably in a complicated accident, perhaps in ways that would satisfy nobody. It would be unsettling as a driver to worry whether your car really wants to protect you.

Fortunately, ethically ambiguous driving situations are rare, and they might become rarer still if self-driving cars fulfill their touted promise of high competence and greatly reduce the frequency of auto accidents. That remains to be seen. Last month, a Tesla Model X with Autopilot engaged slammed into a California highway median and caught fire, killing the driver (whom the car had warned several times to put his hands on the wheel), and in Arizona, a self-driving Uber struck and killed a pedestrian.


The potential harm of A.I.s deliberately designed to kill in warfare is much more pressing. The U.S. and other countries are working hard to develop military A.I., in the form of automated weapons that enhance battlefield capabilities while exposing fewer soldiers to injury or death. For the U.S., this would be a natural extension of its existing, imperfect drone warfare program, in which failures in military intelligence have led to the mistaken killing of non-combatants in Iraq. The Pentagon says that it has no plans to remove humans from the decision process that approves the use of lethal force, but A.I. is outperforming humans in a growing number of domains, and improving so fast, that many fear a runaway global arms race that could easily accelerate toward completely autonomous weaponry: autonomous, but not necessarily endowed with good judgment.

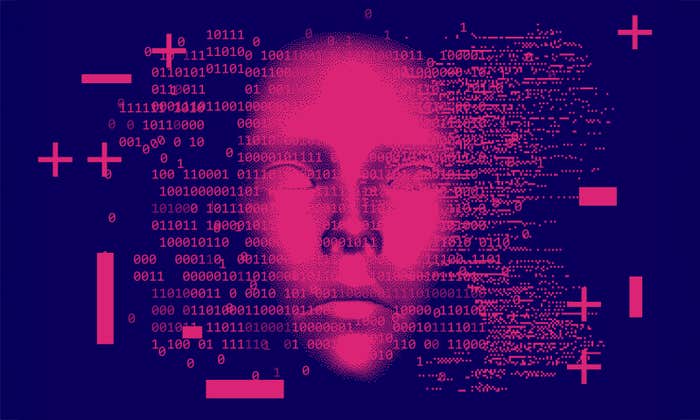

Thousands of employees at Google are worried about this outcome. Google has been lending its A.I. expertise to the Pentagon’s Project Maven, formally the “Algorithmic Warfare Cross-Functional Team,” established last year. According to Marine Corps Colonel Drew Cukor, the effort will eventually allow one human analyst “to do twice as much work, potentially three times as much, as they’re doing now” on tasks like recognizing targets (roadside bomb planters, for instance) in drone video footage. “In early December, just over six months from the start of the project,” according to the Bulletin of the Atomic Scientists, “Maven’s first algorithms were fielded to defense intelligence analysts to support real drone missions in the fight against ISIS.” According to the New York Times, more than 3,100 employees, including many senior engineers, signed a petition asking that Google stop assisting Project Maven, stating, “We believe Google should not be in the business of war.”

Even if Google pulls out, other companies developing A.I., like Microsoft and Amazon, will likely step in; Amazon is thought to be the favorite to win the Pentagon’s multibillion-dollar cloud services contract, which includes A.I. technology. It’s all too easy to imagine, in the not-so-distant future, one of these companies helping the military design an A.I. that chooses targets, aims, and fires with more speed and accuracy than any human soldier, but lacks the wider intelligence to decide whether the target is appropriate. Fears like this are why the U.N. is considering how to place international limits on lethal autonomous weapons. In 2015, thousands of researchers in A.I. and robotics signed an open letter calling for a ban on autonomous weapons that lack meaningful human control because, among other reasons, “autonomous weapons are ideal for tasks such as assassinations, destabilizing nations, subduing populations and selectively killing a particular ethnic group.”

These terrible disruptions would be within reach of specialized A.I. capabilities that exist now or soon will. Facial recognition, for instance, could be used to hunt down a particular person for assassination; worse, algorithms could pick out entire groups of human targets solely by external physical characteristics, like skin color. Add to that a clever dictator who tells his people, “My new A.I. weapons will kill them but not us,” and you have a potential high-tech crime against humanity that could come more cheaply than building rogue nuclear weapons. It’s certainly not far-fetched to suppose that a group like ISIS could acquire A.I. capabilities. Its weapons supply chain has been traced back to the U.S., and it has creatively repurposed some of those weapons for urban combat. “For ISIS to produce such sophisticated weapons marks a significant escalation of its ambition and ability,” Wired reported in December. “It also provides a disturbing glimpse of the future of warfare, where dark-web file sharing and 3-D printing mean that any group, anywhere, could start a homegrown arms industry of its own.” That is a tempting possibility for international bad actors looking to expand their impact.

We already have enough problems dealing with the large-scale weapons capabilities of nations like North Korea. Let’s hope we can keep A.I. out of any arsenal developed by smaller groups with evil intentions.

Sidney Perkowitz, Candler Professor Emeritus of Physics at Emory University, is the author of Digital People, editor of the just-published Frankenstein: How a Monster Became an Icon, and the author of other popular works about A.I., robots, and varied topics in science and technology. Follow him on Twitter @physp.
