When it comes to artificial intelligence, we may all be suffering from the fallacy of availability: thinking that creating intelligence is much easier than it is, because we see examples all around us. In a recent poll, machine intelligence experts predicted that computers would gain human-level ability around the year 2050, and superhuman ability less than 30 years after.1 But, like a tribe on a tropical island littered with World War II debris imagining that the manufacture of aluminum propellers or steel casings would be within their power, our confidence is probably inflated.

AI can be thought of as a search problem over an effectively infinite, high-dimensional landscape of possible programs. Nature solved this search problem by brute force, effectively performing a huge computation involving trillions of evolving agents of varying information processing capability in a complex environment (the Earth). It took billions of years to go from the first tiny DNA replicators to Homo sapiens. What evolution accomplished required tremendous resources. While silicon-based technologies are increasingly capable of simulating a mammalian or even human brain, we have little idea of how to find the tiny subset of all possible programs running on this hardware that would exhibit intelligent behavior.

But there is hope. By 2050, there will be another rapidly evolving and advancing intelligence besides that of machines: our own. The cost to sequence a human genome has fallen below $1,000, and powerful methods have been developed to unravel the genetic architecture of complex traits such as human cognitive ability. Technologies already exist which allow genomic selection of embryos during in vitro fertilization—an embryo’s DNA can be sequenced from a single extracted cell. Recent advances such as CRISPR allow highly targeted editing of genomes, and will eventually find their uses in human reproduction.

The potential for improved human intelligence is enormous. Cognitive ability is influenced by thousands of genetic loci, each of small effect. If all were simultaneously improved, it would be possible to achieve, very roughly, about 100 standard deviations of improvement, corresponding to an IQ of over 1,000. We can’t imagine what capabilities this level of intelligence represents, but we can be sure it is far beyond our own. Cognitive engineering, via direct edits to embryonic human DNA, will eventually produce individuals who are well beyond all historical figures in cognitive ability. By 2050, this process will likely have begun.
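The "100 standard deviations" figure can be reproduced with a back-of-the-envelope calculation. The sketch below is only an illustration under stated assumptions, not the derivation behind the estimate: it assumes a toy additive model with 10,000 loci (the essay says only "thousands"), each contributing an equal effect that is positive or negative with equal probability, and it uses the conventional IQ scale with mean 100 and standard deviation 15. The function names are hypothetical.

```python
import math

def max_gain_in_sd(n_loci: int, effect: float = 1.0) -> float:
    """Gain, in population standard deviations, from setting every locus
    to its favorable variant in a toy additive model.

    Assumption: each locus independently contributes +effect or -effect
    with probability 1/2, so the population mean is 0.
    """
    population_sd = effect * math.sqrt(n_loci)  # SD of a sum of n independent +/-effect terms
    best_genotype = effect * n_loci             # every locus favorable
    return best_genotype / population_sd        # simplifies to sqrt(n_loci)

def iq_from_sd(z: float, mean: float = 100.0, sd: float = 15.0) -> float:
    """Convert a deviation in SD units to the conventional IQ scale."""
    return mean + sd * z

N_LOCI = 10_000  # assumed locus count, chosen for illustration only

gain_sd = max_gain_in_sd(N_LOCI)
iq = iq_from_sd(gain_sd)
print(f"gain: {gain_sd:.0f} SD, nominal IQ: {iq:.0f}")
```

In this toy model the gain grows as the square root of the number of loci, which is why thousands of small effects can add up to such a large nominal figure. The resulting number is an extrapolation far outside the range where IQ scores are measured, so it is best read as "very roughly," as the text says.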

These two threads—smarter people and smarter machines—will inevitably intersect. Just as machines will be much smarter in 2050, we can expect that the humans who design, build, and program them will also be smarter. Naively, one would expect the rate of advance of machine intelligence to outstrip that of biological intelligence. Tinkering with a machine seems easier than modifying a living species, one generation at a time. But advances in genomics—both in our ability to relate complex traits to the underlying genetic codes, and the ability to make direct edits to genomes—will allow rapid advances in biologically-based cognition. Also, once machines reach human levels of intelligence, our ability to tinker starts to be limited by ethical considerations. Rebooting an operating system is one thing, but what about a sentient being with memories and a sense of free will?

Therefore, the answer to the question “Will AI or genetic modification have the greater impact in the year 2050?” is yes. Considering one without the other neglects an important interaction.

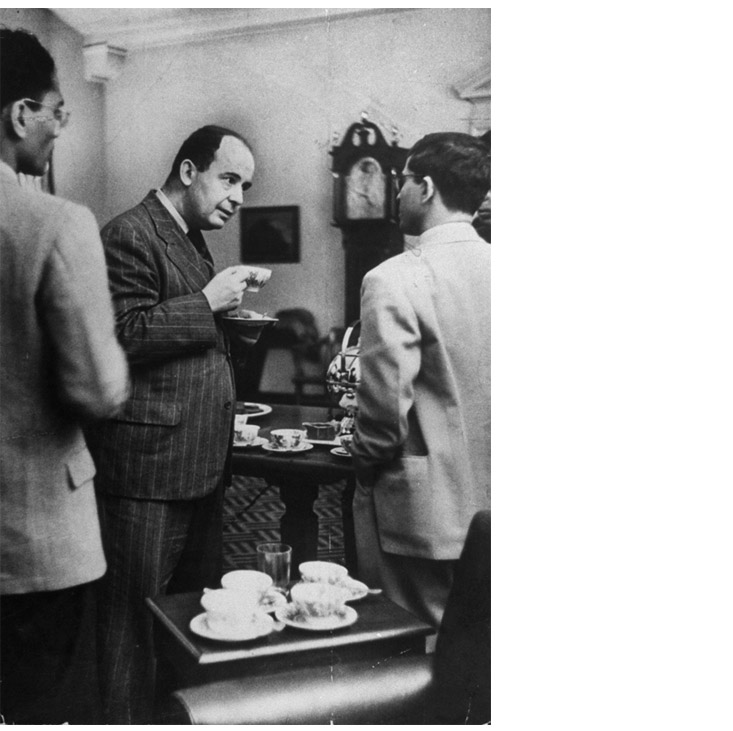

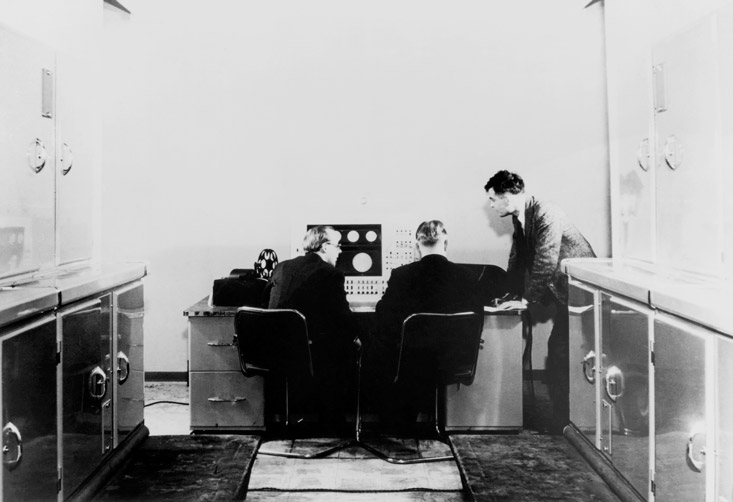

It has happened before. It is easy to forget that the computer revolution was led by a handful of geniuses: individuals with truly unusual cognitive ability. Alan Turing and John von Neumann both contributed to the realization of computers whose program is stored in memory and can be modified during execution. This idea appeared originally in the form of the Turing Machine, and was given practical realization in the so-called von Neumann architecture of the first electronic computers, such as the EDVAC. While this computing design seems natural, even obvious, to us now, it was at the time a significant conceptual leap.

Turing and von Neumann were special, and far beyond peers of their era. Both played an essential role in the Allied victory in WWII. Turing famously broke the German Enigma codes, but not before conceptualizing the notion of “mechanized thought” in his Turing Machine, which was to become the main theoretical construct in modern computer science. Before the war, von Neumann placed the new quantum theory on a rigorous mathematical foundation. As a frequent visitor to Los Alamos he made contributions to hydrodynamics and computation that were essential to the United States’ nuclear weapons program. His close colleague, the Nobel Laureate Hans A. Bethe, established the singular nature of his abilities, and the range of possibilities for human cognition, when he said “I always thought von Neumann’s brain indicated that he was from another species, an evolution beyond man.”

Today, we need geniuses like von Neumann and Turing more than ever before. That’s because we may already be running into the genetic limits of intelligence. In a 1983 interview, Noam Chomsky was asked whether genetic barriers to further progress have become obvious in some areas of art and science.2 He answered:

> You could give an argument that something like this has happened in quite a few fields … I think it has happened in physics and mathematics, for example … In talking to students at MIT, I notice that many of the very brightest ones, who would have gone into physics twenty years ago, are now going into biology. I think part of the reason for this shift is that there are discoveries to be made in biology that are within the range of an intelligent human being. This may not be true in other areas.

AI research also pushes even very bright humans to their limits. The frontier machine intelligence architecture of the moment uses deep neural nets: multilayered networks of simulated neurons inspired by their biological counterparts. Silicon brains of this kind, running on huge clusters of GPUs (graphics processing units made cheap by research and development and economies of scale in the video game industry), have recently surpassed human performance on a number of narrowly defined tasks, such as image or character recognition. We are learning how to tune deep neural nets using large samples of training data, but the resulting structures are mysterious to us. The theoretical basis for this work is still primitive, and it remains largely an empirical black art. The neural networks researcher and physicist Michael Nielsen puts it this way:3

> … in neural networks there are large numbers of parameters and hyper-parameters, and extremely complex interactions between them. In such extraordinarily complex systems it’s exceedingly difficult to establish reliable general statements. Understanding neural networks in their full generality is a problem that, like quantum foundations, tests the limits of the human mind.

The detailed inner workings of a complex machine intelligence (or of a biological brain) may turn out to be incomprehensible to our human minds—or at least the human minds of today. While one can imagine a researcher “getting lucky” by stumbling on an architecture or design whose performance surpasses her own capability to understand it, it is hard to imagine systematic improvements without deeper comprehension.

But perhaps we will experience a positive feedback loop: Better human minds invent better machine learning methods, which in turn accelerate our ability to improve human DNA and create even better minds. In my own work, I use methods from machine learning (so-called compressed sensing, or convex optimization in high-dimensional geometry) to extract predictive models from genomic data. Thanks to recent advances, we can predict a phase transition in the behavior of these learning algorithms, representing a sudden increase in their effectiveness. We expect this transition to happen within about a decade, when we reach a critical threshold of about 1 million human genomes’ worth of data. Several entities, including the U.S. government’s Precision Medicine Initiative and the private company Human Longevity Inc. (founded by Craig Venter), are pursuing plans to genotype 1 million individuals or more.
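The flavor of that phase transition can be seen in a small numerical experiment. What follows is a minimal sketch, not the method used in the genomic work: it assumes a random Gaussian matrix as a stand-in for genotype data, a noiseless trait driven by 10 of 400 predictors, and an L1-penalized least-squares fit solved by plain iterative soft thresholding (ISTA). All sizes, the penalty `lam`, and the function names are illustrative assumptions.

```python
import numpy as np

def soft_threshold(v, t):
    """Shrink each entry of v toward zero by t (proximal step for the L1 penalty)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def lasso_ista(A, y, lam, n_iter=3000):
    """Minimize 0.5*||A x - y||^2 + lam*||x||_1 by iterative soft thresholding."""
    lipschitz = np.linalg.norm(A, 2) ** 2  # squared largest singular value of A
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        grad = A.T @ (A @ x - y)
        x = soft_threshold(x - grad / lipschitz, lam / lipschitz)
    return x

def recovery_error(n_samples, n_features=400, n_causal=10, lam=0.05, seed=0):
    """Relative error in recovering a sparse effect vector from n_samples rows.

    Assumption: Gaussian predictors and a noiseless response, which is far
    simpler than real genotype and phenotype data.
    """
    rng = np.random.default_rng(seed)
    A = rng.standard_normal((n_samples, n_features)) / np.sqrt(n_samples)
    x_true = np.zeros(n_features)
    support = rng.choice(n_features, size=n_causal, replace=False)
    x_true[support] = rng.choice([-1.0, 1.0], size=n_causal)
    y = A @ x_true
    x_hat = lasso_ista(A, y, lam)
    return np.linalg.norm(x_hat - x_true) / np.linalg.norm(x_true)

err_few = recovery_error(n_samples=20)    # too few samples for 10 causal predictors
err_many = recovery_error(n_samples=200)  # comfortably past the threshold
print(f"relative error with 20 samples:  {err_few:.2f}")
print(f"relative error with 200 samples: {err_many:.2f}")
```

Sweeping `n_samples` between the two values shown would trace out the transition: below a threshold set by the number of causal predictors, the fit is mostly wrong; above it, the sparse signal is recovered almost exactly. The essay's threshold of about 1 million genomes is the analogous point for a trait influenced by thousands of loci.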

The feedback loop between algorithms and genomes will result in a rich and complex world, with myriad types of intelligences at play: the ordinary human (rapidly losing the ability to comprehend what is going on around them); the enhanced human (the driver of change over the next 100 years, but perhaps eventually surpassed); and all around them vast machine intellects, some alien (evolved completely in silico) and some strangely familiar (hybrids). Rather than the standard science-fiction scenario of relatively unchanged, familiar humans interacting with ever-improving computer minds, we will experience a future with a diversity of both human and machine intelligences. For the first time, sentient beings of many different types will interact collaboratively to create ever greater advances, both through standard forms of communication and through new technologies allowing brain interfaces. We may even see human minds uploaded into cyberspace, with further hybridization to follow in the purely virtual realm. These uploaded minds could combine with artificial algorithms and structures to produce an unknowable but humanlike consciousness. Researchers have recently linked mouse and monkey brains together, allowing the animals to collaborate—via an electronic connection—to solve problems. This is just the beginning of “shared thought.”

It may seem incredible, or even disturbing, to predict that ordinary humans will lose touch with the most consequential developments on planet Earth, developments that determine the ultimate fate of our civilization and species. Yet consider the early 20th-century development of quantum mechanics. The first physicists studying quantum mechanics in Berlin—men like Albert Einstein and Max Planck—worried that human minds might not be capable of understanding the physics of the atomic realm. Today, no more than a fraction of a percent of the population has a good understanding of quantum physics, although it underlies many of our most important technologies: Some have estimated that 10-30 percent of modern gross domestic product is based on quantum mechanics. In the same way, ordinary humans of the future will come to accept machine intelligence as everyday technological magic, like the flat screen TV or smartphone, but with no deeper understanding of how it is possible.

New gods will arise, as mysterious and familiar as the old.

Stephen Hsu is Vice-President for Research and Professor of Theoretical Physics at Michigan State University. He is also a scientific advisor to BGI (formerly, Beijing Genomics Institute) and a founder of its Cognitive Genomics Lab.

References

1. Müller, V.C. & Bostrom, N. Future progress in artificial intelligence: A survey of expert opinion. AI Matters 1, 9-11 (2014).

2. Gliedman, J. Things no amount of learning can teach. Omni Magazine 6, 112-119 (1983).

3. Bengio, Y., Goodfellow, I.J., & Courville, A. Deep Learning Book in preparation for publishing with MIT Press. (2015).