Have you heard the one about the biologist, the physicist, and the mathematician? They’re all sitting in a cafe watching people come and go from a house across the street. Two people enter, and then some time later, three emerge. The physicist says, “The measurement wasn’t accurate.” The biologist says, “They have reproduced.” The mathematician says, “If now exactly one person enters the house then it will be empty again.”

Hilarious, no? You can find plenty of jokes like this—many invoke the notion of a spherical cow—but I’ve yet to find one that makes me laugh. Still, that’s not what they’re for. They’re designed to show us that these academic disciplines look at the world in very different, perhaps incompatible ways.

There’s some truth in that. Many physicists, for example, will tell stories of how indifferent biologists were to their efforts in that field, regarding them as irrelevant and misconceived. It’s not just that the physicists were thought to be doing things wrong. Often the biologists’ view was that (outside perhaps of the well-established but tightly defined discipline of biophysics) there simply wasn’t any place for physics in biology.

But such objections (and jokes) conflate academic labels with scientific ones. Physics, properly understood, is not a subject taught at schools and university departments; it is a certain way of understanding how processes happen in the world. When Aristotle wrote his Physics in the fourth century B.C., he wasn’t describing an academic discipline, but a mode of philosophy: a way of thinking about nature. You might imagine that’s just an archaic usage, but it’s not. When physicists speak today (as they often do) about the “physics” of the problem, they mean something close to what Aristotle meant: neither a bare mathematical formalism nor a mere narrative, but a way of deriving process from fundamental principles.

This is why there is a physics of biology just as there is a physics of chemistry, geology, and society. But it’s not necessarily “physicists” in the professional sense who will discover it.

In the mid-20th century, the boundary between physics and biology was more porous than it is today. Several pioneers of 20th-century molecular biology, including Max Delbrück, Seymour Benzer, and Francis Crick, were trained as physicists. And the beginnings of the “information” perspective on genes and evolution, which found substance in James Watson and Francis Crick’s 1953 discovery of the structure of DNA and its capacity for genetic coding, are usually attributed to physicist Erwin Schrödinger’s 1944 book What Is Life? (Some of his ideas were anticipated, however, by the biologist Hermann Muller.)

A merging of physics and biology was welcomed by many leading biologists in the mid-century, including Conrad Hal Waddington, J. B. S. Haldane, and Joseph Needham, who convened the Theoretical Biology Club at Cambridge University. And an understanding of the “digital code” of DNA emerged at much the same time as applied mathematician Norbert Wiener was outlining the theory of cybernetics, which purported to explain how complex systems from machines to cells might be controlled and regulated by networks of feedback processes. In 1955 the physicist George Gamow published a prescient article in Scientific American called “Information transfer in the living cell,” and cybernetics gave biologists Jacques Monod and François Jacob a language for formulating their early theory of gene regulatory networks in the 1960s.

But then this “physics of biology” program stalled. Despite the migration of physicists toward biologically related problems, there remains a void separating most of their efforts from the mainstream of genomic data-collection and detailed study of genetic and biochemical mechanisms in molecular and cell biology. What happened?

Some of the key reasons for the divorce are summarized in Ernst Mayr’s 2004 book What Makes Biology Unique. Mayr was one of the most eminent evolutionary biologists of the modern age, and the title alone reflected a widely held conception of exceptionalism within the life sciences. In Mayr’s view, biology is too messy and complicated for the kind of general theories offered by physics to be of much help—the devil is always in the details.

Mayr made perhaps the most concerted attempt by any biologist to draw clear disciplinary boundaries around his subject, smartly isolating it from other fields of science. In doing so, he supplies one of the clearest demonstrations of the folly of that endeavor.

He identifies four fundamental features of physics that distinguish it from biology. It is essentialist (dividing the world into sharply delineated and unchanging categories, such as electrons and protons); it is deterministic (this always necessarily leads to that); it is reductionist (you understand a system by reducing it to its components); and it posits universal natural laws, which in biology are undermined by chance, randomness, and historical contingency. Any physicist will tell you that this characterization of physics is thoroughly flawed, as a passing familiarity with quantum theory, chaos, and complexity would reveal.

But Mayr’s argument gets more interesting—if not actually more valid—when he claims that what makes biology truly unique is that it is concerned with purpose: with the designs ingeniously forged by blind mutation and selection during evolution. Particles bumping into one another on their random walks don’t have to do anything. But the genetic networks and protein molecules and complex architectures of cells are shaped by the exigencies of survival: they have a kind of goal. And physics doesn’t deal with goals, right? As Massimo Pigliucci of City University of New York, an evolutionary biologist turned philosopher, recently stated, “It makes no sense to ask what is the purpose or goal of an electron, a molecule, a planet or a mountain.”1

Purpose and teleology are difficult words in biology: They all too readily suggest a deterministic goal for evolution’s “blind watchmaker,” and lend themselves to creationist abuse. But there’s no escaping the compulsion to talk about function in biology: An organism’s components and structures play a role in its survival and in the propagation of its genes.

The thing is, physical scientists aren’t deterred by the word either. When Norbert Wiener wrote his 1943 paper “Behaviour, purpose and teleology,” he was being deliberately provocative. And the Teleological Society that Wiener formed two years later with Hungarian mathematical physicist John von Neumann announced as its mission the understanding of “how purpose is realised in human and animal conduct.” Von Neumann’s abiding interest in replication—an essential ingredient for evolving “biological function”—as a computational process laid the foundations of the theory of cellular automata, which are now widely used to study complex adaptive processes including Darwinian evolution (even Richard Dawkins has used them).

Apparent purpose arises from Darwinian adaptation to the environment. But isn’t that then perfectly understood by Darwin’s random mutation and natural selection, without any appeal to a “physics” of adaptation?

Actually, no. For one thing, it isn’t obvious that these two ingredients (random inheritable mutation among replicating organisms, and selective pressure from the environment) will necessarily produce adaptation, diversity, and innovation. How does this depend on, say, the rate of replication, the fidelity of the copying process and the level of random noise in the system, the strength of selective pressure, the relationship between the inheritable information and the traits it governs (genotype and phenotype), and so on? Evolutionary biologists have mathematical models to investigate these things, but doing calculations tells you little without a general framework to relate them to.

That general framework is the physics of evolution. It might be mapped out in terms of, say, threshold values of the variables above which a qualitatively new kind of global behavior appears: what physicists call a phase diagram. The theoretical chemist Peter Schuster and his coworkers have found such a threshold in the error rate of genetic copying, below which the information contained in the replicating genome remains stable. In other words, above this error rate there can be no identifiable species as such: Their genetic identity “melts.” Schuster’s colleague, Nobel laureate chemist Manfred Eigen, argues that this switch is a phase transition entirely analogous to those like melting that physicists more traditionally study.
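The flavor of such a threshold can be seen in a minimal Python sketch. It is a toy, not Schuster and Eigen's actual calculation: it assumes a single favored "master" sequence that replicates `advantage` times faster than every mutant, a genome of `length` sites each copied with the same `error_rate`, and no mutations that restore the master sequence. All of those names and numbers are illustrative assumptions.

```python
def master_fraction(error_rate, length=100, advantage=10.0, generations=5000):
    """Long-run share of the population carrying the master sequence.

    Toy single-peak model (an assumption of this sketch): the master
    sequence replicates `advantage` times faster than any mutant, and
    back-mutation to the master sequence is ignored.
    """
    perfect_copy = (1.0 - error_rate) ** length  # chance a copy has no errors
    x = 1.0  # start with a population made up entirely of the master sequence
    for _ in range(generations):
        mean_fitness = advantage * x + (1.0 - x)
        x = advantage * perfect_copy * x / mean_fitness
    return x


def error_threshold(length=100, advantage=10.0):
    """Per-site error rate above which the master sequence is lost in this toy."""
    return 1.0 - advantage ** (-1.0 / length)


if __name__ == "__main__":
    print("threshold error rate:", error_threshold())
    for rate in (0.005, 0.01, 0.02, 0.03, 0.05):
        print(rate, master_fraction(rate))
```

Below the threshold the master sequence keeps a finite share of the population; above it the share collapses to zero, which is the "melting" of genetic identity described above. In this toy the threshold falls roughly as the logarithm of the advantage divided by the genome length, so longer genomes tolerate less copying error.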

Meanwhile, evolutionary biologist Andreas Wagner has used computer models to show that the ability of Darwinian evolution to innovate and generate qualitatively new forms and structures rather than just minor variations on a theme doesn’t follow automatically from natural selection. Instead, it depends on there being a very special “shape” to the combinatorial space of possibilities which describes how function (the chemical effect of a protein, say) depends on the information that encodes it (such as the sequences of amino acids in the molecular chain). Here again is the “physics” underpinning evolutionary variety.

And physicist Jeremy England of the Massachusetts Institute of Technology has argued that adaptation itself doesn’t have to rely on Darwinian natural selection and genetic inheritance, but may be embedded more deeply in the thermodynamics of complex systems.2 The very notions of fitness and adaptation have always been notoriously hard to pin down—they easily end up sounding circular. But England says that they might be regarded in their most basic form as an ability of a particular system to persist in the face of a constant throughput of energy by suppressing big fluctuations and dissipating that energy: you might say, by a capacity to keep calm and carry on.

“Our starting assumptions are general physical ones, and they carry us forward to a claim about the general features of nonequilibrium evolution of which the Darwinian story becomes a special case that obtains in the event that your system contains self-replicating things,” says England. “The notion becomes that thermally fluctuating matter gets spontaneously beaten into shapes that are good at work absorption from the external fields in the environment.” What’s exciting about this, he says, is that “when we give a physical account of the origins of some of the ‘adapted’-looking structures we see, they don’t necessarily have to have had parents in the usual biological sense.” Already, some researchers are starting to suggest that England’s ideas offer the foundational physics for Darwin’s.

Notice that there is really no telling where this “physics” of the biological phenomenon will come from—it could be from chemists and biologists as much as from “physicists” as such. There is nothing at all chauvinistic, from a disciplinary perspective, about calling these fundamental ideas and theories the physics of the problem. We just need to rescue the word from its departmental definition, and the academic turf wars that come with it.

You could regard these incursions into biology of ideas more familiar within physics as just another example of the way in which scientific ideas developed in one field can turn out to be relevant in another.

But the issue is deeper than that, and phrasing it as cross-talk (or border raids) between disciplines doesn’t capture the whole truth. We need to move beyond attempts like those of Mayr to demarcate and defend the boundaries.

Physicists’ habit of praising peers for their ability to see the “physics of the problem” might sound odd. What else would a physicist do but think about the “physics of the problem”? But therein lies a misunderstanding. What is being articulated here is an ability to look beyond mathematical descriptions or details of this or that interaction, and to work out the underlying concepts involved, often very general ones that can be expressed concisely in non-mathematical, perhaps even colloquial, language. Physics in this sense is not a fixed set of procedures, nor does it alight on a particular class of subject matter. It is a way of thinking about the world: a scheme that organizes cause and effect.

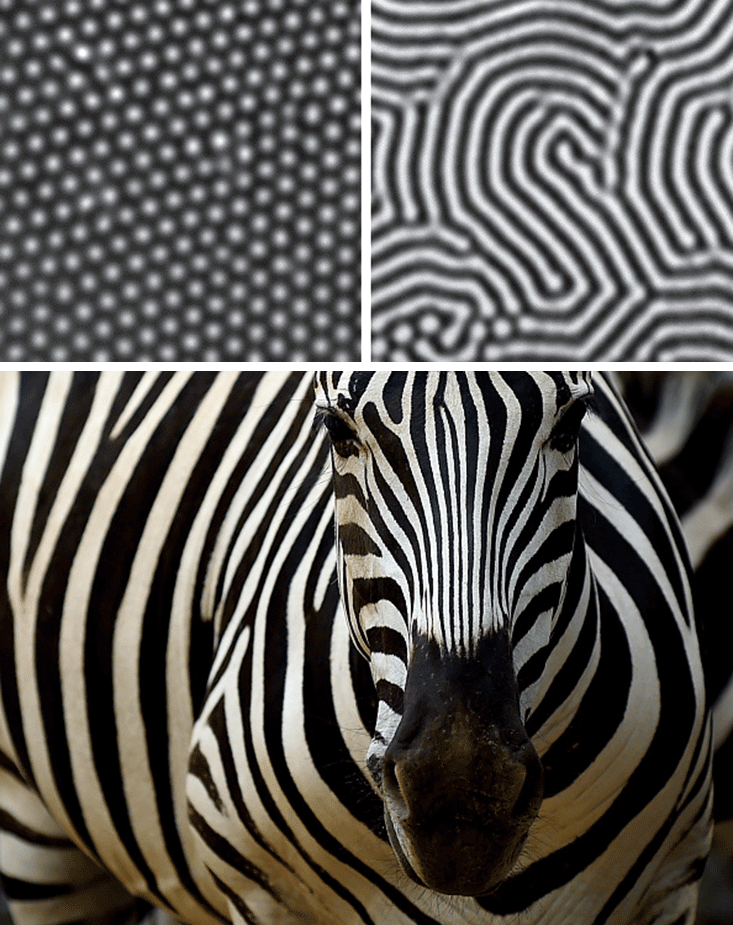

This kind of thinking can come from any scientist, whatever his or her academic label. It’s what Jacob and Monod displayed when they saw that feedback processes were the key to genetic regulation, and so forged a link with cybernetics and control theory. It’s what the developmental biologist Hans Meinhardt did in the 1970s when he and his colleague Alfred Gierer unlocked the physics of Turing structures. These are spontaneous patterns that arise in a mathematical model of diffusing chemicals, devised by mathematician Alan Turing in 1952 to account for the generation of form and order in embryos. Meinhardt and Gierer identified the physics underlying Turing’s maths: the interaction between a self-generating “activator” chemical and an ingredient that inhibits its behavior.
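The activator-inhibitor idea can be checked with a few lines of Python. The sketch below is not Gierer and Meinhardt's model itself. It takes a generic two-chemical system linearized around its uniform state, with made-up reaction coefficients `a`, `b`, `c`, `d` (activator boosts itself and the inhibitor, inhibitor suppresses the activator and decays) and diffusion constants `Du` and `Dv`, and asks whether small ripples of any wavelength grow. The standard result it illustrates is that a pattern appears only when the inhibitor spreads much faster than the activator.

```python
import math


def growth_rate(k, a, b, c, d, Du, Dv):
    """Largest real part of the growth rates of a ripple with wavenumber k.

    a, b, c, d are the linearized reaction coefficients (hypothetical values
    in this sketch); Du and Dv are the activator and inhibitor diffusion
    constants.
    """
    s = k * k
    trace = (a - Du * s) + (d - Dv * s)
    det = (a - Du * s) * (d - Dv * s) - b * c
    disc = trace * trace - 4.0 * det
    if disc >= 0.0:
        return 0.5 * (trace + math.sqrt(disc))
    return 0.5 * trace  # complex pair: the real part is half the trace


def forms_pattern(a, b, c, d, Du, Dv, k_max=5.0, steps=500):
    """True if the well-mixed state is stable but some ripple grows."""
    stable_when_mixed = growth_rate(0.0, a, b, c, d, Du, Dv) < 0.0
    some_ripple_grows = any(
        growth_rate(i * k_max / steps, a, b, c, d, Du, Dv) > 0.0
        for i in range(1, steps + 1)
    )
    return stable_when_mixed and some_ripple_grows


if __name__ == "__main__":
    # Illustrative coefficients: a > 0 (self-activation), b < 0 (inhibition).
    coeffs = dict(a=1.0, b=-2.0, c=3.0, d=-4.0)
    print("inhibitor diffuses 20x faster:", forms_pattern(Du=1.0, Dv=20.0, **coeffs))
    print("equal diffusion:", forms_pattern(Du=1.0, Dv=1.0, **coeffs))
```

With these illustrative numbers the fast-diffusing inhibitor case reports a pattern and the equal-diffusion case does not, which is the short-range activation and long-range inhibition picture in miniature.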

Once we move past the departmental definition of physics, the walls around other disciplines become more porous, to positive effect. Mayr’s argument that biological agents are motivated by goals in ways that inanimate objects are not was closely tied to a crude interpretation of biological information springing from the view that everything begins with DNA. As Mayr puts it, “there is not a single phenomenon or a single process in the living world which is not controlled by a genetic program contained in the genome.”

This “DNA chauvinism,” as it is sometimes now dubbed, leads to the very reductionism and determinism that Mayr wrongly ascribes to physics, and which the physics of biology is undermining. For even if we recognize (as we must) that DNA and genes really are central to the detailed particulars of how life evolves and survives, there’s a need for a broader picture in which information for maintaining life doesn’t just come from a DNA data bank. One of the key issues here is causation: In what directions does information flow? It’s now becoming possible to quantify these questions of causation—and that reveals the deficiencies of a universal bottom-up picture.

Neuroscientist Giulio Tononi and colleagues at the University of Wisconsin-Madison have devised a generic model of a complex system of interacting components—which could conceivably be neurons or genes, say—and they find that sometimes the system’s behavior is caused not so much in a bottom-up way, but by higher levels of organization among the components.3
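A toy calculation, in the spirit of that work but not taken from it, shows how a coarse description can carry more causal information than a fine-grained one. The Python sketch below assumes the usual definition of "effective information": the average, over all starting states set with equal probability, of how far each state's transition probabilities sit from the average transition probabilities, measured in bits. The four-state system and its two-state grouping are invented for illustration.

```python
import math


def effective_information(tpm):
    """Effective information (in bits) of a transition probability matrix.

    Computed as the mean Kullback-Leibler divergence of each row from the
    average row, which corresponds to intervening uniformly on the states.
    """
    n = len(tpm)
    mean_row = [sum(row[j] for row in tpm) / n for j in range(len(tpm[0]))]
    total = 0.0
    for row in tpm:
        for p, q in zip(row, mean_row):
            if p > 0.0:
                total += p * math.log2(p / q)
    return total / n


# Hypothetical micro system: states 0-2 hop randomly among themselves,
# state 3 always stays put.
micro = [
    [1 / 3, 1 / 3, 1 / 3, 0.0],
    [1 / 3, 1 / 3, 1 / 3, 0.0],
    [1 / 3, 1 / 3, 1 / 3, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]

# Macro description: lump states 0-2 into one group, keep state 3 separate.
# Each group then maps to itself with certainty.
macro = [
    [1.0, 0.0],
    [0.0, 1.0],
]

ei_micro = effective_information(micro)  # about 0.81 bits
ei_macro = effective_information(macro)  # exactly 1 bit

if __name__ == "__main__":
    print("micro:", ei_micro, "macro:", ei_macro)
```

The lumped description wins because it discards the noise of the random hopping among states 0 to 2 while keeping the one distinction that reliably determines what happens next.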

This picture is borne out in a recent analysis of information flow in yeast gene networks by Sara Walker, Paul Davies, and colleagues at Arizona State University in Tempe.4 The study suggests that indeed “downward” causation could be involved in this case. Davies and colleagues believe that top-down causation might be a general feature of the physics of life, and that it could have played a key role in some major shifts in evolution,5 such as the appearance of the genetic code, the evolution of complex compartmentalized cells (eukaryotes), the development of multicellular organisms, and even the origin of life itself.6 At such pivotal points, they say, information flow may have switched direction so that processes at higher levels of organization affected and altered those at lower levels, rather than everything being “driven” by mutations at the level of genes.

One thing this work, and that of Wagner, Schuster, and Eigen, suggests is that the way DNA and genetic networks connect to the maintenance and evolution of living organisms can only be fully understood once we have a better grasp of the physics of information itself.7

A case in point is the observation that biological systems often operate close to what physicists call a critical phase transition or critical point: a state poised on the brink of switching between two modes of organization, one of them orderly and the other disorderly. Critical points are well known in physical systems like magnetism, liquid mixtures, and superfluids. William Bialek, a physicist working on biological problems at Princeton University, and his colleague Thierry Mora at the École Normale Supérieure in Paris, proposed in 2010 that a wide variety of biological systems, from flocking birds to neural networks in the brain and the organization of amino-acid sequences in proteins, might also be close to a critical state.8

By operating close to a critical point, Bialek and Mora said, a system undergoes big fluctuations that give it access to a wide range of different configurations of its components. As a result, Mora says, “being critical may confer the necessary flexibility to deal with complex and unpredictable environments.” What’s more, a near-critical state is extremely responsive to disturbances in the environment, which can send rippling effects throughout the whole system. That can help a biological system to adapt very rapidly to change: A flock of birds or a school of fish can respond very quickly to the approach of a predator, say.
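The link between near-criticality and system-wide ripples can be illustrated with a standard toy, a branching process, which is a stand-in chosen for this sketch and not the analysis Bialek and Mora carried out. Each active unit (a neuron, say) can trigger two others, and `branching_ratio` is the average number it actually triggers. Below a ratio of 1, a single disturbance sets off an "avalanche" whose expected size is 1 / (1 - ratio), so it balloons as the ratio approaches the critical value of 1. The function names and run counts are illustrative assumptions.

```python
import random


def avalanche_size(branching_ratio, rng, max_size=100_000):
    """Total number of units activated after a single unit fires.

    Each active unit can trigger two others, each with probability
    branching_ratio / 2, so it triggers branching_ratio units on average.
    """
    p = branching_ratio / 2.0
    active, size = 1, 1
    while active and size < max_size:
        triggered = sum(1 for _ in range(2 * active) if rng.random() < p)
        size += triggered
        active = triggered
    return size


def mean_avalanche(branching_ratio, runs=2000, seed=0):
    """Average avalanche size over many independent disturbances."""
    rng = random.Random(seed)
    return sum(avalanche_size(branching_ratio, rng) for _ in range(runs)) / runs


if __name__ == "__main__":
    for ratio in (0.5, 0.8, 0.95):
        print(ratio, mean_avalanche(ratio), "theory:", 1.0 / (1.0 - ratio))
```

Far from the critical point a disturbance dies out almost at once; close to it, the same small nudge can spread through a large part of the system.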

Criticality can also provide an information-gathering mechanism. Physicist Amos Maritan at the University of Padova in Italy and coworkers have shown that a critical state in a collection of “cognitive agents” (they could be individual organisms, or neurons, for example) allows the system to “sense” what is going on around it: to encode a kind of “internal map” of its environment and circumstances, rather like a river network encoding a map of the surrounding topography.9 “Being poised at criticality provides the system with optimal flexibility and evolutionary advantage to cope with and adapt to a highly variable and complex environment,” says Maritan. There’s mounting evidence that brains, gene networks, and flocks of animals really are organized this way. Criticality may be everywhere.

Examples like these give us confidence that biology does have a physics to it. Bialek has no patience with the common refrain that biology is just too messy: that, as he puts it, “there might be some irreducible sloppiness that we’ll never get our arms around.”10 He is confident that there can be “a theoretical physics of biological systems that reaches the level of predictive power that has become the standard in other areas of physics.” Without it, biology risks becoming mere anecdote and contingency. And one thing we can be fairly sure about is that biology is not like that, because it would simply not work if it were.

We don’t yet know quite what a physics of biology will consist of. But we won’t understand life without it. It will surely have something to say about how gene networks produce both robustness and adaptability in the face of a changing environment—why, for example, a defective gene need not be fatal and why cells can change their character in stable, reliable ways without altering their genomes. It should reveal why evolution itself is both possible at all and creative.

Saying that physics knows no boundaries is not the same as saying that physicists can solve everything. They too have been brought up inside a discipline, and are as prone as any of us to blunder when they step outside. The issue is not who “owns” particular problems in science, but how to develop useful tools for thinking about how things work, which is what Aristotle tried to do over two millennia ago. Physics is not what happens in the Department of Physics. The world really doesn’t care about labels, and if we want to understand it then neither should we.

Philip Ball is the author of Invisible: The Dangerous Allure of the Unseen and many books on science and art.

References

1. Pigliucci, M. Biology vs. Physics: Two ways of doing science? www.ThePhilosophersMag.com (2015).

2. Perunov, N., Marsland, R., & England, J. Statistical physics of adaptation. arXiv:1412.1875 (2014).

3. Hoel, E.P., Albantakis, L., & Tononi, G. Quantifying causal emergence shows that macro can beat micro. Proceedings of the National Academy of Sciences 110, 19790-19795 (2013).

4. Walker, S.I., Kim, H., & Davies, P.C.W. The informational architecture of the cell. Philosophical Transactions of the Royal Society A 374 (2016). doi:10.1098/rsta.2015.0057

5. Walker, S.I., Cisneros, L., & Davies, P.C.W. Evolutionary transitions and top-down causation. arXiv:1207.4808 (2012).

6. Walker, S.I. & Davies, P.C.W. The algorithmic origins of life. Journal of the Royal Society Interface 10 (2012). doi:10.1098/rsif.2012.0869

7. “DNA as Information” Theme issue compiled and edited by Cartwright, J.H.E., Giannerini, S., & Gonzalez, D.L. Philosophical Transactions of the Royal Society A 374 (2016).

8. Mora, T. & Bialek, W. Are biological systems poised at criticality? Journal of Statistical Physics 144, 268-302 (2011).

9. Hidalgo, J., et al. Information-based fitness and the emergence of criticality in living systems. Proceedings of the National Academy of Sciences 111, 10095-10100 (2014).

10. Bialek, W. Perspectives on theory at the interface of physics and biology. arXiv:1512.08954 (2015).