There’s a weird way in which a hedge fund is a confluence of everything. There’s the money, of course—Two Sigma, located in lower Manhattan, manages over $50 billion, an amount that has grown 600 percent in 6 years and is roughly the size of the economy of Bulgaria. Then there are the people—financiers, philosophers, engineers—all applying themselves to unearthing the elusive patterns that separate fortune from failure.

And there is the science and engineering, much of it resting on a towering stack of data. In principle, almost any information about the real world can be relevant to a hedge fund. Employees, so the stories go, have camped out next to harbors noting down tanker waterlines, and in retail parking lots counting cars. This data then has to be standardized, synthesized, and made accessible to the people who place bets on the market.

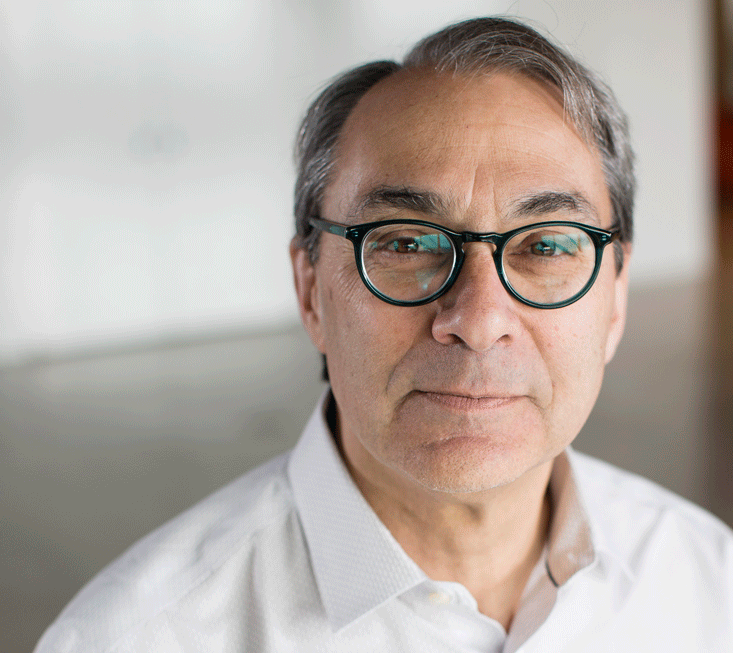

Building the tools to do this is part of Alfred Spector’s job. As the chief technology officer of Two Sigma, he is responsible for the engineering platforms used by the firm’s modelers. A former vice president at IBM and former professor of computer science at Carnegie Mellon, Spector has seen software transform one industry after another, and made more than a few contributions of his own along the way.

He sat down with us for a conversation at Two Sigma’s headquarters earlier this month.

Why would a company like Two Sigma run a public game competition?

We started the Halite AI Programming Competition because we want to be known in the tech community for doing things that the tech community likes, and game competitions are one of those things. Programmers like interesting programming challenges, particularly ones that are contained enough to tackle in their off-hours. Plus, we open-source everything, so programmers can actually see the game environment and learn everything about the game. That gives them a lot of room for creativity in how the game can be played, and it makes it more fun.

What effect did you notice the competition having on the Two Sigma brand?

What we noticed is that when we go to campuses, people have heard of us more. We also hired someone who was at the top of the rankings.

What was the goal of the game?

Both this year’s and last year’s games are turn-based strategy games. In Halite 1, last year, there were between two and six bots on the board at the start. Each bot starts with a single piece, which it can move up, down, left, or right. It can also stay still, in which case the piece gains strength. If it moves, it leaves behind another piece where it was, so the number of pieces on your side grows. All the players are doing this on a grid that’s maybe 40 or 50 squares on a side, so a bot can make a huge number of different moves. This year’s game, Halite 2, is similar in many ways, but it uses a space-war theme, in which a ship can fly to a planet and take it over. When you put all this together, people come up with incredibly interesting winning strategies.
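To make the Halite 1 rules above concrete, here is a minimal Python sketch of a single turn. It is our illustration, not the official Halite engine: it assumes one player, no combat, a grid that wraps at its edges, and a fixed strength gain of 1 for each turn a piece stays still. The names `step` and `MOVES` are hypothetical.

```python
from typing import Dict, Tuple

Pos = Tuple[int, int]

# Offsets for the five possible orders. ASSUMPTIONS (not the official rules):
# one player, no combat, a grid that wraps at its edges, and a strength gain
# of exactly 1 for every turn a piece stays still.
MOVES = {"UP": (0, -1), "DOWN": (0, 1), "LEFT": (-1, 0), "RIGHT": (1, 0), "STILL": (0, 0)}


def step(pieces: Dict[Pos, int], orders: Dict[Pos, str],
         width: int, height: int) -> Dict[Pos, int]:
    """Apply one turn of orders and return the new {position: strength} map."""
    new: Dict[Pos, int] = {}
    for (x, y), strength in pieces.items():
        order = orders.get((x, y), "STILL")  # pieces without orders stay still
        if order == "STILL":
            new[(x, y)] = new.get((x, y), 0) + strength + 1  # resting builds strength
        else:
            dx, dy = MOVES[order]
            dest = ((x + dx) % width, (y + dy) % height)
            new[dest] = new.get(dest, 0) + strength  # pieces landing together merge
            new.setdefault((x, y), 0)  # a zero-strength piece is left behind
    return new


pieces = {(0, 0): 0}
pieces = step(pieces, {}, 5, 5)                 # {(0, 0): 1}
pieces = step(pieces, {(0, 0): "RIGHT"}, 5, 5)  # {(1, 0): 1, (0, 0): 0}
print(len(pieces))                              # 2: the side has grown
```

With five possible orders per piece, a bot controlling n pieces chooses among 5^n combined orders every turn, which is why the space of moves grows so quickly.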

What’s an example of an interesting strategy that came out of the games?

An interesting, unanticipated strategy emerged in the final week of last year’s game: non-aggression. Some players determined that it could help them to hang loose for a while, not try to defeat other players, and just gain space and stay out of trouble. The players who remained aggressive would actually hurt themselves, while the non-aggressive players would then be in a position from which, with moderately high probability, they could win.

How was machine learning relevant to game strategy?

This year, we really focused on advancing our players’ ability to use machine learning. In fact, we provided a limited number of Google Cloud credits so players could use GPUs (graphics processing units), which allow machine-learning models to be trained very rapidly. We make replays of all the games that have been played available, so that machine-learning systems can study them and try to learn how to play the game better. It remains to be seen how well machine learning can do in a game as complex as Halite.

What’s the difference between an algorithmic approach and a machine-learning approach?

Computers always execute code, and code embodies an algorithm of some form. With machine learning, those algorithms learn from data and, in effect, modify themselves. We think of classical algorithms as having everything specified in advance. Machine-learning algorithms have more degrees of freedom: they learn from the environment in which they’re operating.
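The distinction can be illustrated with a deliberately tiny Python sketch. The first function is “classical”: a person fixed its threshold in advance. The second learns its threshold from labeled examples by picking the value that misclassifies the fewest of them. The functions, the 30.0 threshold, and the toy data are all hypothetical.

```python
from typing import List, Tuple


def classical_rule(temp_c: float) -> bool:
    """Classical: a person chose the 30.0 threshold in advance."""
    return temp_c > 30.0


def learn_threshold(examples: List[Tuple[float, bool]]) -> float:
    """Learned: choose the threshold that misclassifies the fewest labeled examples."""
    best_t, best_errors = examples[0][0], len(examples) + 1
    for t, _ in examples:  # try each observed value as a candidate threshold
        errors = sum((x > t) != label for x, label in examples)
        if errors < best_errors:
            best_t, best_errors = t, errors
    return best_t


# Hypothetical labeled data: (temperature, did people call it a "hot" day?)
examples = [(10.0, False), (20.0, False), (25.0, True), (40.0, True)]
t = learn_threshold(examples)
print(t)                               # 20.0: the data, not the programmer, set the cutoff
print(classical_rule(25.0), 25.0 > t)  # False True
```

Feed the learner different examples and its behavior changes without anyone editing the code, which is the extra degree of freedom Spector describes.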

How does Two Sigma get its data?

I kind of liken it to the electromagnetic spectrum of economic data shining in on us. We see certain frequencies and we don’t see others. Certainly, we get tick data. We understand the prices and volumes of tradable entities in public markets. We get fundamental data. We get earnings data and things of that form from many sources. Sometimes we are able to get data on the interest that the sell side of Wall Street has in stocks. We have a product called PICS, which enables sell-side contributors to tell us that they’re recommending something. Both we and they benefit from this.

How can machines interpret this great variety of data?

One way is that a human has a hypothesis. For example, we can hypothesize that something will have an effect on the valuation of some security. We can then create a mathematical representation of that predictive model and see whether the hypothesis holds up. We do an enormous amount of testing at Two Sigma. That’s hypothesis-driven work and, in one form or another, it has been the mainstay of investing for a very long time. Another approach is to point some form of machine-learning algorithm at a lot of data and at some economic outcome, say a stock price, and see whether the machine can figure out the pattern. That’s more challenging because, even if you get a positive result, you don’t necessarily feel that you know why.
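One common way to check a hypothesis like this is a permutation test: measure how strongly a proposed signal correlates with later returns, then see how often randomly shuffled returns do as well. The Python sketch below is a generic illustration under that assumption; it is not a description of Two Sigma’s testing methods, and the function names and synthetic data are hypothetical.

```python
import random
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation; assumes neither input is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


def permutation_p_value(signal: Sequence[float], returns: Sequence[float],
                        trials: int = 2000, seed: int = 0) -> float:
    """Share of shuffles whose |correlation| matches or beats the observed one.

    Shuffling the returns breaks any real link to the signal, so the shuffled
    correlations show what chance alone tends to produce.
    """
    rng = random.Random(seed)
    observed = abs(pearson(signal, returns))
    shuffled = list(returns)
    hits = 0
    for _ in range(trials):
        rng.shuffle(shuffled)
        if abs(pearson(signal, shuffled)) >= observed:
            hits += 1
    # Add-one correction so the estimate is never exactly zero.
    return (hits + 1) / (trials + 1)


# Synthetic data: returns partly driven by the signal, plus alternating noise.
signal = [float(i) for i in range(12)]
rets = [0.5 * s + (1.0 if i % 2 else -1.0) for i, s in enumerate(signal)]
print(permutation_p_value(signal, rets))
```

A small p-value suggests the observed correlation is unlikely to be a fluke, though, as Spector notes about machine-found patterns, it says nothing about why the relationship exists.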

How can humans learn to interpret machine learning?

I think one approach is to experiment: look at the outputs you get as a function of the different kinds of inputs you could give. Say a deep learning algorithm predicts the weather, and you know its inputs: the barometric pressure, the temperature, the wind conditions, et cetera. Now let’s say you vary many of those inputs and look at how the predictions change. You may then be able to say: This algorithm seems to be very sensitive to rapid temperature change. You’re beginning to get an idea of what the algorithm does, and you’re beginning to get explanatory power.
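The experiment described here is often called sensitivity analysis. The minimal Python sketch below nudges each input of a model by a fixed amount, one at a time, and records how much the prediction moves. The “weather model” is a hypothetical linear stand-in for a trained deep network, and its input names and coefficients are invented for illustration.

```python
from typing import Callable, Dict

Inputs = Dict[str, float]


def sensitivities(model: Callable[[Inputs], float], baseline: Inputs,
                  delta: float = 1.0) -> Dict[str, float]:
    """Change in the prediction when each input alone is increased by `delta`."""
    base_prediction = model(baseline)
    result = {}
    for name in baseline:
        probe = dict(baseline)  # copy, so only one input changes at a time
        probe[name] += delta
        result[name] = model(probe) - base_prediction
    return result


def toy_rain_model(x: Inputs) -> float:
    """Hypothetical stand-in for an opaque trained forecaster."""
    return (0.02 * (1013.0 - x["pressure_hpa"])
            + 0.3 * x["temp_change_c"]
            + 0.01 * x["wind_kmh"])


baseline = {"pressure_hpa": 1000.0, "temp_change_c": 2.0, "wind_kmh": 15.0}
s = sensitivities(toy_rain_model, baseline)
print(max(s, key=lambda k: abs(s[k])))  # temp_change_c
```

Nudging every input by one raw unit is crude when the inputs use different units; in practice one might scale each nudge to that input’s typical variation.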

Will there always be a human in the loop in this process?

Not necessarily. You could imagine some way of deciding on orthogonal planes of data that you then send to the system. You could take a lot of data elements, feed them through the system automatically, see what predictions occur, and try to learn from that. You could even imagine another learning algorithm layered on top of the first one.
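An automated version of that probing might sweep each input along its own axis and then fit a second, much simpler model (here, an ordinary least-squares slope) to the first model’s responses. This Python sketch is one hypothetical reading of the idea; `probe_axes`, `fit_slope`, and `opaque_model` are illustrative names, not an existing library.

```python
from typing import Callable, Dict, List

Inputs = Dict[str, float]


def fit_slope(xs: List[float], ys: List[float]) -> float:
    """Ordinary least-squares slope of ys on xs (the second, 'layered' learner)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


def probe_axes(model: Callable[[Inputs], float], baseline: Inputs,
               offsets: List[float]) -> Dict[str, float]:
    """Sweep each input along its own axis, then fit a slope to the model's responses."""
    slopes = {}
    for name in baseline:
        xs, ys = [], []
        for off in offsets:
            probe = dict(baseline)
            probe[name] += off
            xs.append(probe[name])
            ys.append(model(probe))
        slopes[name] = fit_slope(xs, ys)
    return slopes


def opaque_model(x: Inputs) -> float:
    """Hypothetical model under study."""
    return x["a"] ** 2 + 3 * x["b"]


slopes = probe_axes(opaque_model, {"a": 0.0, "b": 0.0}, [-2.0, -1.0, 0.0, 1.0, 2.0])
print(slopes)  # {'a': 0.0, 'b': 3.0}
```

The second layer has blind spots of its own: the output depends strongly on `a`, yet the fitted slope is zero because the response is symmetric around the baseline.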

Should we be wary of the unintended consequences of machine learning?

There was a very nice paper published on the reinforcement of gender bias as an unintended consequence. Imagine you build a machine-learning system designed to show ads to people who will click on them. If you’re showing ads for CEO positions, then, with the state of the world as it is today, perhaps men are more likely to click on them. If that’s true, the system may learn to place CEO ads in publications frequented by men, thereby inadvertently perpetuating an undesirable bias. I note that the teams building that algorithm had no idea of this unintended consequence. I give a talk called “The Opportunities and Perils of Data Science,” in which I argue that, as data science becomes more pervasive, we have a much greater need to educate engineers and data scientists in all manner of ethical issues.
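The feedback loop can be reproduced with a small simulation. The Python sketch below places ads with an epsilon-greedy rule that only tries to maximize clicks; it never sees anyone’s gender. The two outlets and their click-through rates are hypothetical numbers chosen for illustration, not figures from the paper mentioned above.

```python
import random
from typing import Dict


def run_campaign(true_ctr: Dict[str, float], rounds: int = 20000,
                 epsilon: float = 0.1, seed: int = 1) -> Dict[str, int]:
    """Epsilon-greedy ad placement that maximizes clicks; returns impressions per outlet."""
    rng = random.Random(seed)
    shown = {outlet: 0 for outlet in true_ctr}
    clicks = {outlet: 0 for outlet in true_ctr}

    def observed_rate(outlet: str) -> float:
        # Untried outlets are treated optimistically so each gets tried at least once.
        return clicks[outlet] / shown[outlet] if shown[outlet] else 1.0

    for _ in range(rounds):
        if rng.random() < epsilon:
            outlet = rng.choice(list(true_ctr))  # explore: pick an outlet at random
        else:
            outlet = max(true_ctr, key=observed_rate)  # exploit: best click rate so far
        shown[outlet] += 1
        # Simulate whether this reader clicks, using the (hidden) true rate.
        if rng.random() < true_ctr[outlet]:
            clicks[outlet] += 1
    return shown


# Hypothetical click-through rates; the allocator is never told who reads which outlet.
impressions = run_campaign({"outlet_read_mostly_by_men": 0.05,
                            "outlet_read_mostly_by_women": 0.02})
print(impressions)
```

Because the allocator keeps steering most impressions toward whichever outlet currently looks best, a modest gap in click-through rates becomes a large gap in who sees the ad.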

How will those education needs be met?

We will need to train people in every discipline, and probably every walk of life, to have more technological understanding. We already see it happening. Many more high schools offer computer science and programming. We see a lot more of it in college. In fact, computer science has become the largest major at some universities, Stanford, for example. At other great universities, enrollment in introductory computer science courses is really growing. I think all this is good.

Journalists, in particular, will need to understand data and algorithms better.

In the world of computer science, we had hoped that we would make vast amounts of data available, and that journalists and political scientists could use that data to reach scientifically valid conclusions. We hoped the world would become, if you will, more truthful. What has actually happened seems to be the opposite. Journalists have so much data that, by choosing which data to use, they can draw many different conclusions. Admittedly, as anyone who’s taken a statistics class knows, it’s pretty difficult to draw truly valid conclusions from data. A lot of the data is erroneous as well, which makes it even easier to jump to really bad conclusions. All this provides an opportunity for journalists who are grounded in really good, rigorous approaches to using data. But frankly, it’s also a great risk, because many journalists and very many members of the public will not know how to interpret data properly. We seem to be having more problems with the latter at the moment.
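A toy Python example shows how much the choice of data matters. The series below is made up; the sketch fits a least-squares trend to different slices of it, and the sign of the “trend” depends entirely on which slice is chosen.

```python
from typing import List


def trend(values: List[float]) -> float:
    """Least-squares slope of the values against their positions 0, 1, 2, ..."""
    n = len(values)
    xs = range(n)
    mx, my = (n - 1) / 2, sum(values) / n
    num = sum((x - mx) * (y - my) for x, y in zip(xs, values))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


# A made-up series, for illustration only.
series = [5.0, 6.0, 7.0, 8.0, 9.0, 10.0, 9.0, 8.0, 7.0]

print(trend(series[:6]))   # 1.0: the first six points say "rising"
print(trend(series[-4:]))  # -1.0: the last four points say "falling"
print(trend(series))       # about 0.33: all nine points say "mildly rising"
```

Each slice is reported honestly, yet a writer could pick any of the three conclusions, which is why the choice of window deserves as much scrutiny as the arithmetic.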

Is educating the reader the antidote?

I think we need education of the reader, but I also think we need education of the journalist. With the data science we have today, it’s possible to get data on a great number of highly detailed events in the world, and that number will only grow. With so much detail available, how can we put those events in perspective in the very large world around us? An individual item, like some engineering failure or accident, may or may not be of significant societal impact, but it can only be judged in context. That’s a very hard thing for a journalist to do, and it’s even more difficult for members of the public. So I think we’re going to become more dependent upon journalists, and journalists are going to need a lot of perspective.

Can machine learning be used to help education?

Back when I was a father of young children, we already used reading tutors. This would have been 13 years ago. I loved them. My children loved them. I don’t know why they’re not a more common part of education in the United States or, even more important, in parts of the developing world where illiteracy is more widespread. If we extend these types of immersive tutors into more domains and add machine learning and AI techniques, they can have broad impact and can adapt themselves to the learning styles of our children. There’s every reason to think that they will be more like an individual tutor than a large university lecture course. Interestingly, Halite is a pretty good example of an immersive, educational programming opportunity.

What datasets would you like to see made available to the public?

I think there’s enormous opportunity in medicine with the right data. We need to build the right datasets of phenotypes, genetics, disease, and more. These comprehensive databases of epidemiological information may not directly generate the answers we need, but they will tee up a vast number of hypotheses that our medical researchers will find very interesting. You see this beginning to happen at 23andMe, though it is focused primarily on genetic data. Imagine if we could do that across all of the medical institutions in the country and really, really go to work on all of this data.

Do you have any sense of what’s keeping medical data so fragmented?

The problem is, first, as a society we are really worried about the privacy of that data. Ensuring patient privacy is really complex and slows down the application of data to science. The second problem is the data itself is actually very complex. Data that’s gathered at one institution may not be directly comparable with data gathered at another institution. Third, a lot of the data is wrong. There was an article a number of years back by a Science News reporter who reported that the evaluations of her own gut biome from two independent labs were vastly different. Finally, there is always concern about the ownership of data, and the prospect that it might somehow be financially valuable.

What new approaches to machine learning are you excited about?

People are trying to come up with mechanisms for probing causality, for asking, “Why has the machine reached a particular conclusion?” Another area is so-called “adversarial” examples. Machine learning can do quite well in many environments, but it can also be fooled: one algorithm claimed that black and yellow stripes were a school bus. There has to be more research on how to deal with these adversarial approaches and systems, which could become a vehicle for abuse. A third area is reinforcement learning, where, for example, the computer plays against itself in order to learn. This is often very time-consuming, but researchers are looking at knowledge representation so that an algorithm could learn in one domain and then transfer some of that knowledge to another, which would make reinforcement learning faster. Ultimately, I believe in the “Combination Hypothesis,” in which we will augment machine learning with other approaches, such as inferencing, to build truly intelligent systems.
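One well-known way to construct such a fooling input, the fast gradient sign method, is easy to show on a linear classifier, whose score gradient with respect to the input is simply its weight vector. The Python sketch below is a toy: the weights, input, and step size are hypothetical, and it is not meant to reproduce the school-bus example.

```python
from typing import List


def score(w: List[float], b: float, x: List[float]) -> float:
    """Linear classifier score; positive means class 1, negative means class 0."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm_linear(w: List[float], b: float, x: List[float], eps: float) -> List[float]:
    """Fast gradient sign step: move every feature by eps in the direction that
    pushes the score toward the opposite class. For a linear score, d(score)/dx = w."""
    direction = -1.0 if score(w, b, x) > 0 else 1.0
    return [xi + direction * eps * sign(wi) for xi, wi in zip(x, w)]


# Hypothetical toy model and input: 100 features, each with a small weight.
w = [0.1] * 100
x = [0.05] * 100
x_adv = fgsm_linear(w, 0.0, x, eps=0.1)

print(score(w, 0.0, x) > 0)      # True: the original input is classified as class 1
print(score(w, 0.0, x_adv) > 0)  # False: the perturbed input flips to class 0
```

Each feature moves by only 0.1, but across 100 features the small changes add up and flip the classification, one reason high-dimensional models can be surprisingly easy to fool.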

How did you get interested in science?

I got interested in computer science because I was taking economics courses in college that required mathematics, and the mathematics class required some programming. I was really enamored of the programming in that class, and it came very naturally to me. I was also enamored of some of the modeling that seemed plausible in the 1970s. A lot of people at that time were interested in natural-resource limitations, with concerns that we’d run out of oil, not be able to feed the growing population, et cetera. So a lot of almost Malthusian modeling began to be done, and I found that most interesting. I should add that another reason I got interested was that I had been a pizza chef before college, and I was paid two-and-a-quarter an hour. Programming was more lucrative.