I sometimes worry that many who would enjoy a scientific career are put off by a narrow and outdated conception of what’s involved. The word “scientist” still conjures up an unworldly image of an Einstein lookalike (male and elderly) or else a youthful geek. There’s still too little racial and gender diversity among scientists. But there’s a huge variety in the intellectual and social styles of work the sciences involve. They require speculative theorists, lone experimenters, ecologists gaining data in the field, and quasi-industrial teams working on giant particle accelerators or big space projects.

Scientists are widely believed to think in a special way, following what’s called the “scientific method.” It would be truer to say that scientists follow the same rational style of reasoning as lawyers or detectives in categorizing phenomena, forming hypotheses, and testing evidence. A related and damaging misperception is that there’s something elite about the quality of scientists’ thought, and that they must be especially clever. Academic ability is only one facet of the far wider concept of intellectual ability, which the best journalists, lawyers, engineers, and politicians possess in equal measure.

The great ecologist E.O. Wilson avers that to be effective in some scientific fields it’s actually best not to be too bright. He’s not disparaging the insights and eureka moments that punctuate (albeit rarely) scientists’ working lives. But Wilson, the world expert on tens of thousands of ant species, has built his research on decades of hard slog: Armchair theorizing is not enough. Yes, there is a risk of boredom. And he’s right that those with short attention spans (with “grasshopper minds”) may find happier (and less worthwhile) employment as “millisecond traders” on Wall Street.

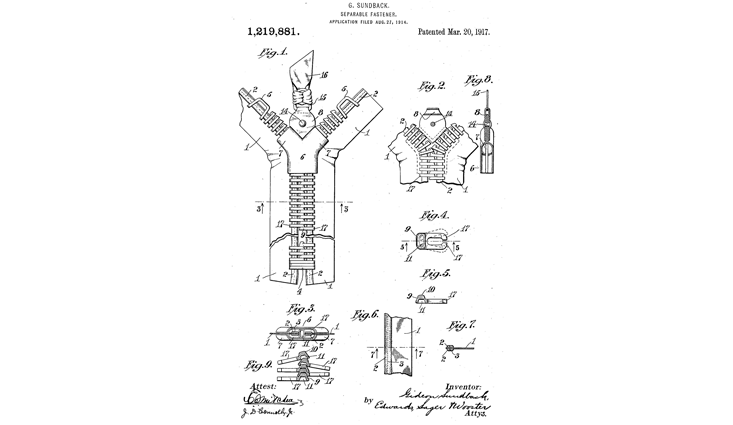

There’s no justification for treating pure work as superior to applied work. Harnessing a scientific concept for practical goals can be a greater challenge than the initial discovery. A favorite cartoon of my engineering friends shows two beavers looking up at a vast hydroelectric dam. One beaver says to the other, “I didn’t actually build it, but it’s based on my idea.” And I like to remind my theorist colleagues that the Swedish engineer Gideon Sundback, who invented the zipper, made a bigger intellectual leap than most of us ever will. There are few more inspiring goals than helping to provide clean energy, better health, and enough food for the 9 billion people who will populate the world by mid-century.

Aspiring scientists do best when they choose a field and type of research (fieldwork or computer modeling, say) that suits their personalities, skills, and tastes. It’s especially gratifying to enter a field where things are advancing fast, where you have access to novel techniques, more powerful computers, or bigger data sets. And there’s no need to stick with the same field for an entire career, nor indeed to spend your entire career as a scientist. The typical field advances through surges, interspersed with periods of relative stagnation. And those who shift their focus mid-career often bring a new perspective. The most vibrant fields often cut across traditional disciplinary boundaries.

And another thing: Only geniuses (or cranks) head straight for the grandest and most fundamental problems. You should multiply the importance of the problem by the probability that you’ll solve it and maximize that product. Aspiring scientists shouldn’t all swarm into the unification of cosmos and quantum, even though it’s plainly one of the intellectual peaks we aspire to reach. They should realize that the great challenges in cancer research and brain science need to be tackled in a piecemeal fashion, rather than head-on.

Odd though it may seem, it’s the most familiar questions that sometimes baffle us most, while some of the best-understood phenomena are far away in the cosmos. Astronomers confidently explain black holes crashing together a billion light years away. In contrast, our grasp of everyday matters that interest us all—diet and child care, for instance—is still so meager that “expert” advice changes from year to year. But it isn’t paradoxical that we’ve understood some arcane cosmic phenomena while being flummoxed by everyday things. What challenges us is complexity, not mere size. The smallest insect is structured far more intricately than a star or a galaxy, and offers deeper mysteries.

It’s conventional wisdom that scientists, especially theorists, don’t improve with age, that they burn out. The physicist Wolfgang Pauli had a famous put-down for scientists past 30: “Still so young, and already so unknown.” (I hope it’s not just wishful thinking on the part of an aging scientist to be less fatalistic.) Despite some “late flowering” exceptions, there are few whose last works are their greatest. That’s not the case for many artists. Artists, influenced in their youth (like scientists) by the then-prevailing culture and style, can improve and deepen solely through internal development. Scientists, by contrast, need continually to absorb new concepts and new techniques if they want to stay on the frontier. That doesn’t mean productivity can’t continue into old age. John Goodenough, co-inventor of the lithium-ion battery, is still working at age 97. In 2019, he became the oldest-ever winner of a Nobel Prize.

Some of our greatest scientists have been seduced down a path that should nevertheless be avoided: an unwise and overconfident diversification into other fields. Those who follow this route are still, in their own eyes, “doing science” (they want to understand the world and the cosmos), but they no longer get satisfaction from research done in the traditional piecemeal way. They overreach themselves, sometimes to the embarrassment of their admirers.

Arthur Eddington was perhaps the leading astrophysicist of his generation. In his later years (the 1930s) he developed a “fundamental theory” in which he claimed, via elaborate mathematics, to predict the exact number of atoms in the universe. Once, when Eddington presented his ideas in a lecture in Holland, a young scientist in the audience asked his older colleague, “Do all old physicists go off on crazy tangents when they get old?” No, the older scientist answered, “A genius like Eddington may perhaps go nuts but a fellow like you just gets dumber and dumber.” That’s at least some consolation for non-geniuses.

Scientists tend to be severe critics of other people’s work. They have more incentive than anyone else to uncover errors. That’s because the greatest esteem in their profession goes to those who contribute something unexpected and original, and who overturn a consensus. But they should be equally critical of their own work. They must not become too enamored of pet theories, nor influenced by wishful thinking. Unsurprisingly, many find that hard. Someone who has invested years of their life in a project is bound to be committed to its importance, so much so that it is a traumatic wrench if the whole effort comes to naught. Seductive theories get destroyed by harsh facts. Only those robust enough to survive intense scrutiny become part of public knowledge: for instance, the link between smoking and lung cancer, and between HIV and AIDS. The great sociologist Robert Merton described science as “organized skepticism.”

The path toward a consensual scientific understanding is often winding, with many blind alleys explored along the way. Occasionally a maverick is vindicated. We all enjoy seeing this happen, but such instances are rarer than is commonly supposed, and perhaps rarer than a reader of the popular press would infer. Sometimes, too, a prior consensus is overturned. But most advances transcend and generalize the concepts that went before, rather than contradicting them. For instance, Einstein didn’t “overthrow” Newton. He transcended Newton, offering a new perspective with broader scope and deeper insights into space, time, and gravity.

When rival theories fight it out, there is just one winner. Sometimes, one crucial piece of evidence clinches the case. That happened in 1965 for Big Bang cosmology, when weak microwaves were discovered which pervaded all of space and had no plausible interpretation other than as an afterglow of a hot, dense “beginning.” And the discovery of “sea-floor spreading,” again in the 1960s, converted almost all geophysicists to a belief in continental drift.

In other cases, an idea gains only a gradual ascendancy: Alternative views get marginalized until their leading proponents die off. Sometimes, the subject moves on, and what once seemed an epochal issue is bypassed or sidelined.

The cumulative advance of science requires new technology and new instruments, in symbiosis, of course, with theory and insight. Some instruments are still tabletop in scale. At the other extreme, the pan-European Large Hadron Collider at CERN in Geneva is currently the world’s most elaborate scientific instrument. Many astronomical instruments are likewise run by multinational consortia, and some are truly global projects. For instance, the ALMA radio telescope in Chile (Atacama Large Millimeter/Submillimeter Array) has participation from Europe, the United States, and Japan.

But even if we work in a small localized group, we benefit from the fact that science is a truly global culture. Our skills (unlike those of a lawyer) are transferable worldwide. That’s why scientists can straddle boundaries of nationality and ideology more readily than other groups in tackling both intellectual and practical problems. For many of us, that’s an important plus in our career.

The best laboratories, like the best start-ups, should be optimal incubators of original ideas and young talent. But it’s only fair to highlight an insidious demographic trend that militates against an optimum creative atmosphere.

Fifty years ago, my generation benefited from the fact that the science profession was still growing exponentially, riding on the expansion of higher education. Then, the young outnumbered the old; moreover, it was normal (and generally mandatory) to retire by one’s mid-60s. The academic community, at least in the West, isn’t now expanding much (and in many areas has reached saturation level), and there is no enforced retirement age. In earlier decades, it was reasonable to aspire to lead a group by one’s early 30s, but in the U.S. biomedical community, for example, it’s now unusual to get your first research grant before the age of 40. This is a bad augury. Science will always attract nerds who can’t envisage any other career. And laboratories can be staffed with those content to spend their time writing grant applications that usually fail to get funding.

But the profession needs to attract a share of those with flexible talent and the ambition to achieve something by their 30s. If that prospect seems to have evaporated, some will shun academia and perhaps attempt a start-up. This route offers great satisfaction and public benefit (many should take it), but in the long run it’s important that some such people dedicate themselves to the fundamental frontiers. The advances in IT and computing can be traced back to basic research done in leading universities, in some cases nearly a century ago. And many of the stumbling blocks encountered in medical research stem from uncertain fundamentals. The depressing failure of anti-Alzheimer drugs in clinical trials suggests that not enough is yet known about how the brain functions, and that effort should refocus on basic science.

But I’m hopeful this logjam will be temporary, and that new opportunities are opening up for aspiring scientists. The expansion of wealth and leisure, coupled with the connectivity offered by IT, will give millions of educated amateurs and citizen scientists anywhere in the world greater scope than ever before to follow their interests. These trends will enable leading researchers to do cutting-edge work outside a traditional academic or governmental laboratory. If enough make this choice, it will erode the primacy of research universities and raise the importance of independent scientists to the level that prevailed before the 20th century. It may also encourage the flowering of the genuinely original ideas the world needs for a sustainable future.

Martin Rees is an astrophysicist and cosmologist, and the United Kingdom’s Astronomer Royal. He is based at Cambridge University, where he has been Director of the Institute of Astronomy and Master of Trinity College. He is a member of the U.K.’s House of Lords, and was President of the Royal Society from 2005 to 2010. In addition to his research publications, he has written extensively for a general readership. He has been increasingly concerned about long-term global issues. His most recent book is On the Future: Prospects for Humanity.

Lead image: Visual Generation / Shutterstock