“The history of Artificial Intelligence,” said my computer science professor on the first day of class, “is a history of failure.” This harsh judgment summed up 50 years of trying to get computers to think. Sure, they could crunch numbers a billion times faster in 2000 than they could in 1950, but computer science pioneer and genius Alan Turing had predicted in 1950 that machines would be thinking by 2000: Capable of human levels of creativity, problem solving, personality, and adaptive behavior. Maybe they wouldn’t be conscious (that question is for the philosophers), but they would have personalities and motivations, like Robbie the Robot or HAL 9000. Not only did we miss the deadline, but we don’t even seem to be close. And this is a double failure, because it also means that we don’t understand what thinking really is.

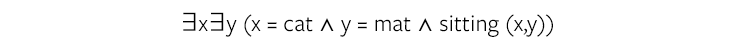

Our approach to thinking, from the early days of the computer era, focused on the question of how to represent the knowledge about which thoughts are thought, and the rules that operate on that knowledge. So when advances in technology made artificial intelligence a viable field in the 1940s and 1950s, researchers turned to formal symbolic processes. After all, it seemed easy to represent “There’s a cat on the mat” in terms of symbols and logic:

$$\exists x \, \exists y \, \big(\mathrm{Cat}(x) \land \mathrm{Mat}(y) \land \mathrm{SittingOn}(x, y)\big)$$

Literally translated, this reads as “there exists variable x and variable y such that x is a cat, y is a mat, and x is sitting on y.” Which is no doubt part of the puzzle. But does this get us close to understanding what it is to think that there is a cat sitting on the mat? The answer has turned out to be “no,” in part because of those constants in the equation. “Cat,” “mat,” and “sitting” aren’t as simple as they seem. Stripping them of their relationship to real-world objects, and all of the complexity that entails, dooms the project of making anything resembling a human thought.

This lack of context was also the Achilles heel of the final attempted moonshot of symbolic artificial intelligence. The Cyc Project was a decades-long effort, begun in 1984, that attempted to create a general-purpose “expert system” that understood everything about the world. A team of researchers under the direction of Douglas Lenat set about manually coding a comprehensive store of general knowledge. What it boiled down to was the formal representation of millions of rules, such as “Cats have four legs” and “Richard Nixon was the 37th President of the United States.” Using formal logic, the Cyc (from “encyclopedia”) knowledge base could then draw inferences. For example, it could conclude that the author of Ulysses was less than 8 feet tall:

    (implies
      (writtenBy Ulysses-Book ?SPEAKER)
      (equals ?SPEAKER JamesJoyce))
    (isa JamesJoyce IrishCitizen)
    (isa JamesJoyce Human)
    (implies
      (isa ?SOMEONE Human)
      (maximumHeightInFeet ?SOMEONE 8))
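
To make the inference step concrete, here is a minimal sketch of how a rule like the one above could be applied mechanically. It is not Cyc's actual representation or API: the tuple encoding and the helper names `match`, `substitute`, and `forward_chain` are assumptions made for illustration, and the authorship rule is simplified into a plain `writtenBy` fact.

```python
# Facts are (predicate, subject, object) tuples. Encoding is hypothetical,
# chosen only to mirror the names in the listing above.
facts = {
    ("writtenBy", "Ulysses-Book", "JamesJoyce"),  # simplified from the first rule
    ("isa", "JamesJoyce", "IrishCitizen"),
    ("isa", "JamesJoyce", "Human"),
}

# Each rule is (premise, conclusion); terms starting with "?" are variables.
rules = [
    (("isa", "?SOMEONE", "Human"), ("maximumHeightInFeet", "?SOMEONE", 8)),
]


def match(pattern, fact):
    """Return variable bindings if the fact fits the pattern, else None."""
    if len(pattern) != len(fact):
        return None
    bindings = {}
    for p, f in zip(pattern, fact):
        if isinstance(p, str) and p.startswith("?"):
            # A variable must bind to the same value everywhere it appears.
            if bindings.setdefault(p, f) != f:
                return None
        elif p != f:
            return None
    return bindings


def substitute(pattern, bindings):
    """Replace variables in the pattern with their bound values."""
    return tuple(bindings.get(term, term) for term in pattern)


def forward_chain(facts, rules):
    """Apply every rule repeatedly until no new facts appear."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in rules:
            for fact in list(derived):
                bindings = match(premise, fact)
                if bindings is None:
                    continue
                new_fact = substitute(conclusion, bindings)
                if new_fact not in derived:
                    derived.add(new_fact)
                    changed = True
    return derived


kb = forward_chain(facts, rules)
# Look up the author of the book, then the height limit derived for that author.
author = next(a for (pred, book, a) in kb
              if pred == "writtenBy" and book == "Ulysses-Book")
limit = next(h for (pred, who, h) in kb
             if pred == "maximumHeightInFeet" and who == author)
```

The sketch works only because every fact it touches is clear-cut; nothing in it knows what a human or a book actually is.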

Unfortunately, not all facts are so clear-cut. Take the statement “Cats have four legs.” Some cats have three legs, and perhaps there is some mutant cat with five legs out there. (And Cat Stevens only has two legs.) So Cyc needed a more complicated rule, like “Most cats have four legs, but some cats can have fewer due to injuries, and it’s not out of the realm of possibility that a cat could have more than four legs.” Specifying both rules and their exceptions led to a snowballing programming burden.

After more than 25 years, Cyc now contains 5 million assertions. Lenat has said that 100 million would be required before Cyc would be able to reason like a human does. No significant applications of its knowledge base currently exist, but in a sign of the times, the project in recent years has begun developing a “Terrorist Knowledge Base.” Lenat announced in 2003 that Cyc had “predicted” the anthrax mail attacks six months before they had occurred. This feat is less impressive when you consider the other predictions Cyc had made, including the possibility that Al Qaeda might bomb the Hoover Dam using trained dolphins.

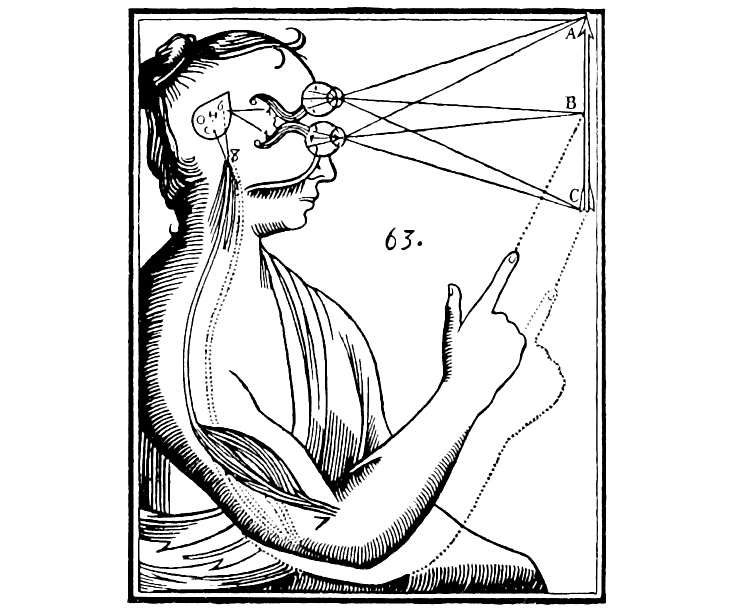

Cyc, and the formal symbolic logic on which it rested, implicitly make a crucial and troublesome assumption about thinking. By gathering together in a single virtual “space” all of the information and relationships relevant to a particular thought, the symbolic approach pursues what Daniel Dennett has called a “Cartesian theater”—a kind of home for consciousness and thinking. It is in this theater that the various strands necessary for a thought are gathered, combined, and transformed in the right kinds of ways, whatever those may be. In Dennett’s words, the theater is necessary to the “view that there is a crucial finish line or boundary somewhere in the brain, marking a place where the order of arrival equals the order of ‘presentation’ in experience because what happens there is what you are conscious of.” The theater, he goes on to say, is a remnant of a mind-body dualism which most modern philosophers have sworn off, but which subtly persists in our thinking about consciousness.

The impetus to believe in something like the Cartesian theater is clear. We humans, more or less, behave like unified rational agents, with a linear style of thinking. And so, since we think of ourselves as unified, we tend to reduce ourselves not to a single body but to a single thinker, some “ghost in the machine” that animates and controls our biological body. It doesn’t have to be in the head (the Greeks put the spirit, thymos, in the chest and the breath), but it remains a single, indivisible entity, our soul living in the house of the senses and memory. Therefore, if we can be boiled down to an indivisible entity, surely that entity must be contained or located somewhere.

This has prompted much research looking for “the area” where thought happens. Descartes hypothesized that our immortal soul interacted with our animal brain through the pineal gland. Today, studies of brain-damaged patients (as Oliver Sacks has chronicled in his books) have shown how functioning is corrupted by damage to different parts of the brain. We know, for instance, that language processing occurs in Broca’s area in the frontal lobe of the left hemisphere. But some patients with their Broca’s area destroyed can still understand language, due to the immense neuroplasticity of the brain. And language, in turn, is just a part of what we call “thinking.” If we can’t even pin down where the brain processes language, we are a long way from locating that mysterious entity, “consciousness.” That may be because it doesn’t exist in a spot you can point at.

Symbolic artificial intelligence, the Cartesian theater, and the shadows of mind-body dualism plagued the early decades of research into consciousness and thinking. But eventually researchers began to throw the yoke off. Around 1960, linguistics pioneer Noam Chomsky made a bold argument: Forget about meaning, forget about thinking, just focus on syntax. He claimed that linguistic syntax could be represented formally, was a computational problem, and was universal to all humans and hard-coded into every baby’s head. The process of exposure to language caused certain switches to be flipped on or off to determine what particular form the grammar would take (English, Chinese, Inuit, and so on). But the process was one of selection, not acquisition. The rules of grammar, however they were implemented, became the target of research programs around the world, supplanting a search for “the home of thought.”

Chomsky made progress by abandoning the attempt to directly explain meaning and thought. But he remained firmly inside the Cartesian camp. His theories were symbolic in nature, postulating relationships among a variety of potential vocabularies rooted in native rational faculties, and never making any predictions that proved true without exception. Modern artificial intelligence programs have gone one step further, by giving up on the idea of any form of knowledge representation. These so-called subsymbolic approaches, which also go under such names as connectionism, neural networks, and parallel distributed processing, take a unique approach. Rather than going from the inside out, injecting symbolic “thoughts” into computer code and praying that the program will exhibit sufficiently human-like thinking, subsymbolic approaches proceed from the outside in: Trying to get computers to behave intelligently without worrying about whether the code actually “represents” thinking at all.

Subsymbolic approaches were pioneered in the late 1950s and 1960s, but lay fallow for years because they initially seemed to generate worse results than symbolic approaches. In 1957, Frank Rosenblatt pioneered what he called the “perceptron,” which used a re-entrant feedback algorithm in order to “train” itself to compute various logical functions correctly, and thereby “learn” in the loosest sense of the term. This approach was also called “connectionism” and gave rise to the term “neural networks,” though a perceptron is vastly simpler than an actual neuron. Rosenblatt was drawing on oddball cybernetic pioneers like Norbert Wiener, Warren McCulloch, Ross Ashby, and Grey Walter, who theorized and even experimented with homeostatic machines that sought equilibrium with their environment, such as Grey Walter’s light-seeking robotic “turtles” and Claude Shannon’s maze-running “rats.”
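
What “training” means here can be shown in a few lines. The following is a minimal sketch of the classic perceptron learning rule, applied to the logical functions AND and OR. It is not Rosenblatt's original implementation: the function names `predict` and `train_perceptron`, the learning rate, and the epoch limit are all assumptions chosen for illustration.

```python
def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum of the inputs exceeds zero, else stay silent (0)."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, epochs=100, learning_rate=1.0):
    """Nudge weights toward the target whenever the output is wrong.

    samples is a list of (inputs, target) pairs with targets of 0 or 1.
    """
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            if error != 0:
                mistakes += 1
                # Feedback step: move each weight in the direction that
                # would have reduced the error on this sample.
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, inputs)]
                bias += learning_rate * error
        if mistakes == 0:  # every sample is classified correctly
            break
    return weights, bias


AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
OR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

and_weights, and_bias = train_perceptron(AND_SAMPLES)
or_weights, or_bias = train_perceptron(OR_SAMPLES)
```

Nowhere does the code state what AND or OR means. The final weights are simply whatever numbers survived the corrections.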

In 1969, Rosenblatt was met with a scathing attack by symbolic artificial intelligence advocate Marvin Minsky. The attack was so successful that subsymbolic approaches were more or less abandoned during the 1970s, a time which has been called the AI Winter. As symbolic approaches continued to flail in the 1970s and 1980s, people like Terrence Sejnowski and David Rumelhart returned to subsymbolic artificial intelligence, modeling it after learning in biological systems. They studied how simple organisms relate to their environment, and how the evolution of these organisms gradually built up increasingly complex behavior. Biology, genetics, and neuropsychology are what figured here, rather than logic and ontology.

This approach more or less abandons knowledge as a starting point. In contrast to Chomsky, a subsymbolic approach to grammar would say that grammar is determined and conditioned by environmental and organismic constraints (what psychologist Joshua Hartshorne calls “design constraints”), not by a set of hardcoded computational rules in the brain. These constraints aren’t expressed in strictly formal terms. Rather, they are looser contextual demands such as, “There must be a way for an organism to refer to itself” and “There must be a way to express a change in the environment.”

By abandoning the search for a Cartesian theater containing a library of symbols and rules, researchers made the leap from instilling machines with data to instilling them with knowledge. The essential truth behind subsymbolism is that language and behavior exist in relation to an environment, not in a vacuum, and they gain meaning from their usage in that environment. To use language is to use it for some purpose. To behave is to behave for some end. In this view, any attempt to generate a universal set of rules will always be riddled with exceptions, because contexts are constantly shifting. Without the drive toward concrete environmental goals, representation of knowledge in a computer is meaningless and fruitless. It remains locked in the realm of data.

For certain classes of problems, modern subsymbolic approaches have proved far more generalizable and ubiquitous than any previous symbolic approach to the same problems. This success speaks to the advantage of not worrying about whether a computer “knows” or “understands” the problem it is working on. For example, genetic approaches represent algorithms with varying parameters as chromosomal “strings,” and “breed” successful algorithms with one another. These approaches do not improve through better understanding of the problem. All that matters is the fitness of the algorithm with respect to its environment—in other words, how the algorithm behaves. This black-box approach has yielded successful applications in everything from bioinformatics to economics, yet one can never give a concise explanation of just why the fittest algorithm is the most fit.
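
A toy version of the genetic approach makes the point. The sketch below breeds bit strings toward a made-up goal, where a chromosome scores one point for every 1 it contains. The fitness function, population size, mutation rate, and every function name are assumptions for illustration, not a description of any particular system.

```python
import random


def fitness(chromosome):
    """Toy environment: one point for every 1 bit in the string."""
    return sum(chromosome)


def crossover(parent_a, parent_b, rng):
    """Splice the head of one parent onto the tail of the other."""
    point = rng.randrange(1, len(parent_a))
    return parent_a[:point] + parent_b[point:]


def mutate(chromosome, rate, rng):
    """Flip each bit independently with a small probability."""
    return [bit ^ 1 if rng.random() < rate else bit for bit in chromosome]


def evolve(length=20, pop_size=30, generations=40, mutation_rate=0.02, seed=0):
    """Return the fittest chromosome and the best fitness seen per generation."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)]
                  for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        parents = population[: pop_size // 2]  # the fitter half gets to breed
        children = [population[0]]             # carry the champion over unchanged
        while len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            children.append(mutate(crossover(a, b, rng), mutation_rate, rng))
        population = children
    best = max(population, key=fitness)
    history.append(fitness(best))
    return best, history


best, history = evolve()
```

The loop never inspects why one string beats another. It only compares scores, which is why the winner comes with no explanation attached.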

Neural networks are another successful subsymbolic technology, and are used for image, facial, and voice recognition applications. No representation of concepts is hardcoded into them, and the factors that they use to identify a particular subclass of images emerge from the operation of the algorithm itself. They can also be surprising: Pornographic images, for instance, are frequently identified not by the presence of particular body parts or structural features, but by the dominance of certain colors in the images.

These networks are usually “primed” with training data, so that they can refine their recognition skills on carefully selected samples. Humans are often involved in assembling and labeling this training data, in which case the learning environment is called “supervised learning.” But even the requirement for training is being left behind. Influenced by theories arguing that parts of the brain are specifically devoted to identifying particular types of visual imagery, such as faces or hands, a 2012 paper by Stanford and Google computer scientists showed some progress in getting a neural network to identify faces without priming data, among images that both did and did not contain faces. Nowhere in the programming was any explicit designation made of what constituted a “face.” The network evolved this category on its own. It did the same for “cat faces” and “human bodies” with similar success rates (about 80 percent).

While the successes behind subsymbolic artificial intelligence are impressive, there is a catch that is very nearly Faustian: The terms of success may prohibit any insight into how thinking “works,” but instead will confirm that there is no secret to be had—at least not in the way that we’ve historically conceived of it. It is increasingly clear that the Cartesian model is nothing more than a convenient abstraction, a shorthand for irreducibly complex operations that somehow (we don’t know how) give the appearance, both to ourselves and to others, of thinking. New models for artificial intelligence ask us to, in the words of philosopher Thomas Metzinger, rid ourselves of an “Ego Tunnel,” and understand that, while our sense of self dominates our thoughts, it does not dominate our brains.

Instead of locating where in our brains we have the concept of “face,” we have made a computer whose code also seems to lack the concept of “face.” Surprisingly, this approach succeeds where others have failed, giving the computer an inkling of the very idea whose explicit definition we gave up on trying to communicate. In moving out of our preconceived notion of the home of thought, we have gained in proportion not just a new level of artificial intelligence, but perhaps also a kind of self-knowledge.

David Auerbach is a writer and software engineer who lives in New York. He writes the Bitwise column for Slate.