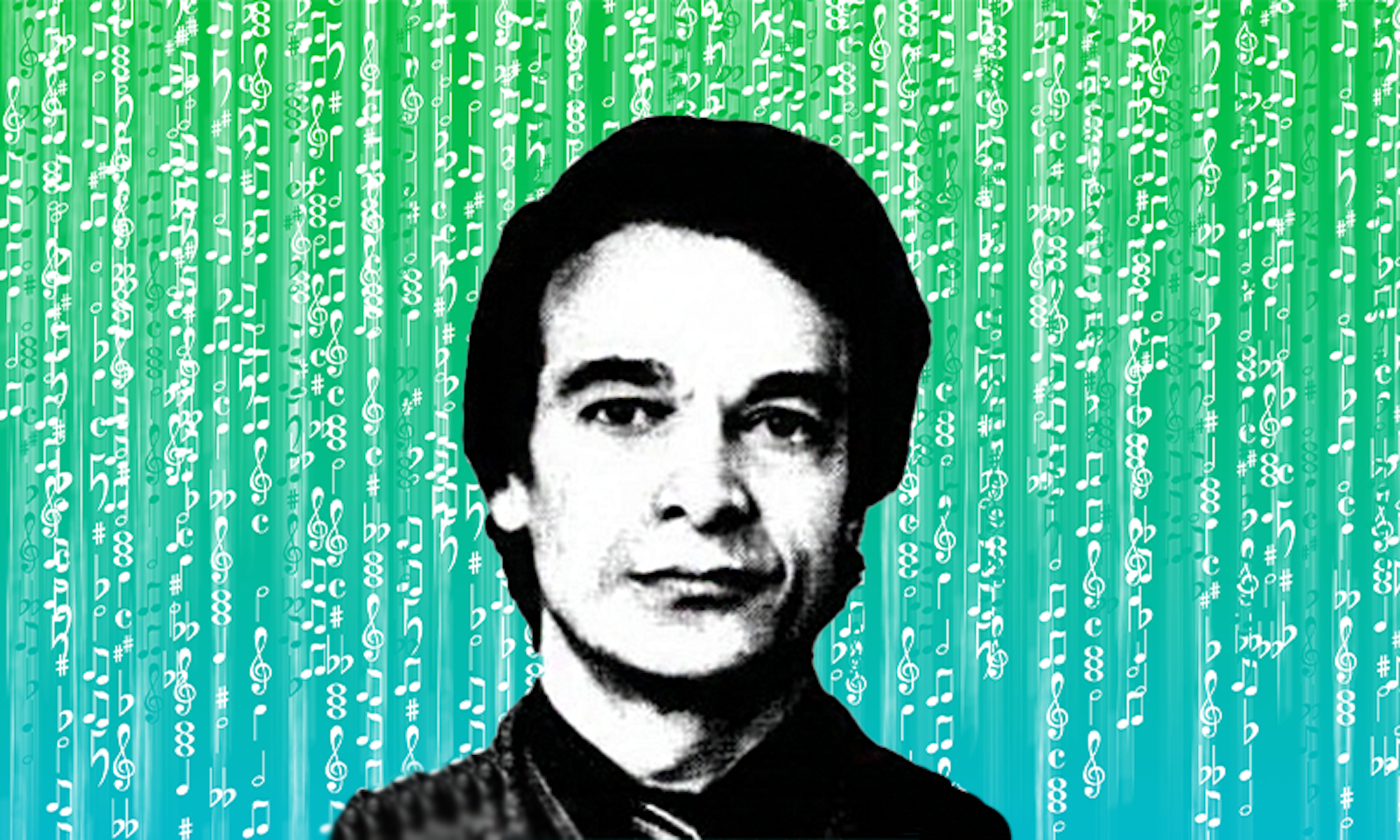

On a summer evening in 1959, as the sun dipped below the horizon of the Moscow skyline, Rudolf Zaripov was ensconced in a modest dormitory at Moscow State University. Zaripov had just defended his Ph.D. in physics at Rostov University in southern Russia when he was sent to Moscow to program one of the country’s early mainframe computers and train a new generation of programmers for military projects.

The small room in Sparrow Hills—one of the seven hills on which the city was founded—was a crucible of creativity for Zaripov. Working on state-sanctioned cryptography projects by day, Zaripov chipped away at an algorithm for musical composition on the computer by night, determined to translate the elusive language of music into the rigid syntax of mathematical commands.

Zaripov’s foray into algorithmic composition had begun in 1947, when he was 18. In high school, he studied music and played the cello, though he also loved math and physics. His classmate, Bulat Galeev, remembered Zaripov as kind. “By nature, he was a very gentle person, and this was often abused by those who liked to skip lectures.” Zaripov would cover for his friends’ absences, which led the dean to remove him from his post as “head boy.”

One day, preparing for a music exam, Zaripov observed that the patterns of harmonization of a melody could be described by mathematical rules.

“He figured out several ways to calculate chords and realized that this task was accessible to any calculating machine,” wrote Gleb Anfilov in a 1962 book, Physics and Music. “But then another thought flashed across the inquisitive young man’s mind: What if we tried to calculate not only chords, but also the most important component of music—the melody? After all, melodies also obey laws. And their laws can probably also be expressed mathematically!”

At that time, the Soviet political apparatus had engulfed science. Work on any project that linked computer and human systems—then known as the field of cybernetics—was anathema to Soviet philosophy. Cybernetics was viewed as a weapon of the capitalistic West, a bourgeois pseudoscience and a powerful tool that threatened to leave human workers behind.

By the late 1950s, though, Soviet restrictions on cybernetics had softened, and Zaripov was working in a less fearful climate. In a late hour of that summer night in 1959, as he punched codes into the lumbering Ural computer, with its blinking lights and whirring tapes, Zaripov heard the sound he had been pursuing for years. His algorithm worked! His “Ural Chants” emerged from the computer like an eerie, even beautiful, organ rendition of early sacred music.

It was an incredible achievement for the 30-year-old scientist and musician. The music he generated behind the Iron Curtain echoes today in debates over the value of music and art generated by AI. And there’s no false note about it: Zaripov was thinking about artificial intelligence in a dangerous time.

The field of cybernetics nominally relates to problems of control and the use of feedback signals to guide computational processes. It’s the brainchild of the American mathematician Norbert Wiener (although, as is often true, these ideas had been floating around at the time, and were based on earlier work).

Wiener’s 1948 book, Cybernetics: Or Control and Communication in the Animal and the Machine, was a treatise on ideas he first came across while working on military technologies during WWII, using feedback mechanisms to improve the aim of weapons. Wiener saw applications of the principles of feedback systems practically everywhere, and expanded them to encompass biological, economic, political, psychological, and social systems. This breadth was one of the issues the Soviets latched onto: How could one system explain everything?

MIT historian Slava Gerovitch writes in his 2002 book on Soviet cybernetics that Wiener was damned by his reference to Erwin Schrödinger. Schrödinger’s book, What Is Life?, was translated into Russian the same year that Zaripov first contemplated algorithmic composition, 1947. What Is Life? came to be highly regarded in the West for its theoretical description of the chemical makeup of genetic material. (Francis Crick cited it as an inspiration.)

The Soviet animosity came from the fact that, starting in the 1930s, genetics was vilified as Western propaganda, due to the efforts of Trofim Lysenko, an uneducated but politically well-placed proponent of Lamarckian evolution. Lysenko’s ideas on how crops could quickly adapt to harsh winters led to famine and mass starvation. Lysenko believed that biology should conform to Soviet ideology, under which adaptability and strength were more patriotic than the fate of inheritance. “Schrödinger’s defense of the chromosome theory brought the authority of modern physics explicitly to the geneticists’ side, and this immediately made him a mortal enemy of the Lysenkoites,” Gerovitch writes.

The attacks on cybernetics were relentless in the 1950s. Soviet journalist Boris Agapov mocked the Mark III, the Harvard computer, listed Wiener among the “charlatans and obscurantists, whom capitalists substitute for genuine scientists,” and called the computer hype in the United States a “giant-scale campaign of mass delusion of ordinary people.” Another article claimed, “these fabrications of the learned lackeys of imperialism have nothing in common with science and only testify to the degeneration of modern bourgeois science.” In 1953, an anonymous author in the Soviet journal Problems of Philosophy wrote, “We will be left with computers of perfect technical design, ‘giant brains’ that will control all other machines … The need for workers will disappear.”

They say that science progresses one funeral at a time. The funeral that rocketed cybernetics from pseudoscience to a subject worthy of investigation in the Soviet Union was Stalin’s. The Soviet ruler had a lot to say about science, writing on philosophy, linguistics, economics, and even physiology and physics, despite having no formal education in these topics. That of course didn’t stop him from dictating the practice of science. In Stalin and the Soviet Science Wars, Ethan Pollock writes that Stalin was called “the coryphaeus”—leader of the Greek chorus—“of science.” After Stalin’s death, things for cybernetics researchers became easier. By 1955, mathematician Alexey Lyapunov, who had close ties with leading Soviet scientists in the 1940s, was conducting an official seminar at Moscow State University on cybernetics and physiology.

The idea of algorithmic music wasn’t Zaripov’s alone. It can be traced to the visionary 19th-century mathematician Ada Lovelace, who envisioned in the 1840s that music could be captured with mathematical rules and composed with a machine. Nor was Zaripov the first to compose on a computer. In 1957, the American composers Lejaren Hiller and Leonard Isaacson created the “Illiac Suite.” The duo used the Illiac computer, which contained 2,800 vacuum tubes (not many more than the 2,100 in the Ural 1 that Zaripov used for his work), to create a string quartet through algorithmic methods.

Zaripov, though, was the first to publish an academic paper on algorithmic composition; it appeared in 1960 in the Proceedings of the Academy of Sciences of the USSR. The paper describes the painstaking rules of melody that Zaripov had to articulate in mathematical language for the computer to follow: Each musical phrase must end on one of the three main notes of the scale; two large intervals (a fifth or a sixth) are not allowed in a row; the number of notes moving in one direction (up or down in pitch) must not exceed six, and so on.
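To make those three rules concrete, here is a minimal sketch in Python of how a program might draw a random melody while respecting them. It is an illustration under stated assumptions, not a reconstruction of Zaripov's program: the "three main notes" are assumed to be the first, third, and fifth degrees of a seven-note scale; the direction rule is read as a cap of six consecutive moves the same way; and the two-octave range, the starting note, and every name in the code are hypothetical. The technique used here is simple candidate filtering, chosen for brevity.

```python
import random

MAIN_DEGREES = {0, 2, 4}   # assumption: tonic, third, fifth of a 7-note scale
LARGE_INTERVALS = {4, 5}   # a fifth or a sixth, measured in scale steps
MAX_RUN = 6                # assumed cap on consecutive moves in one direction
LOW, HIGH = 0, 14          # hypothetical two-octave range of scale-step indices


def sign(x):
    return (x > 0) - (x < 0)


def is_large(a, b):
    """True if the move from a to b is a fifth or a sixth."""
    return abs(a - b) in LARGE_INTERVALS


def violates_rules(phrase, candidate):
    """Return True if appending candidate would break a stated rule."""
    prev = phrase[-1]
    # Rule: two large intervals are not allowed in a row.
    if len(phrase) >= 2 and is_large(phrase[-2], prev) and is_large(prev, candidate):
        return True
    # Rule: limit how many moves in a row go the same direction.
    direction = sign(candidate - prev)
    if direction != 0:
        run = 1  # the move being proposed
        for i in range(len(phrase) - 1, 0, -1):
            if sign(phrase[i] - phrase[i - 1]) != direction:
                break
            run += 1
        if run > MAX_RUN:
            return True
    return False


def generate_phrase(length, rng=None):
    """Draw a phrase note by note, keeping only candidates that obey the rules."""
    rng = rng or random.Random()
    phrase = [7]  # assumption: start on the tonic in the middle of the range
    while len(phrase) < length:
        prev = phrase[-1]
        is_last = len(phrase) == length - 1
        candidates = [
            n for n in range(max(LOW, prev - 5), min(HIGH, prev + 5) + 1)
            if not violates_rules(phrase, n)
            # Rule: the phrase must end on one of the main notes of the scale.
            and (not is_last or n % 7 in MAIN_DEGREES)
        ]
        phrase.append(rng.choice(candidates))
    return phrase


if __name__ == "__main__":
    print(generate_phrase(16, random.Random(1959)))
```

Each number in the printed list is a position in the scale rather than a pitch, so the same phrase could be rendered in any key. A fuller system would need many more rules, as the paper's "and so on" suggests.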

Despite the apparent rigidity of the rules, one of the reasons “Ural Chants” engages listeners is that Zaripov had the mind of a musician. In the 1970s, he conducted experiments broadcast over the radio. He played listeners a series of melodies he generated on a computer along with similar melodies played by professional musicians. Often, listeners preferred the computer melodies.

“Zaripov was drawn to questions of aesthetics and taste,” says Ianina Prudenko, a Ukrainian culturologist and author of Cybernetics in the Humanities and Arts in the USSR. “You can’t say that he was a ‘dry mathematician’ who did not care what effect algorithmic music had on perception.”

Elements of Zaripov’s simple algorithms survive in the more sophisticated systems that followed, such as David Cope’s Experiments in Musical Intelligence project, developed in the 1980s, which uses AI to analyze musical styles, like those of Bach and Mozart, and compose new works in those styles.

Recent incarnations of the composing computer have relied on advances in deep learning and neural networks. These modern approaches typically use a transformer architecture (the same kind of neural network behind ChatGPT and other large language models) trained to fill in blanks in a vast corpus of music represented in the MIDI format, a digital language for musical notation. They take natural-language descriptions of music as input and generate outputs based on the statistical structure of music learned from their training data.

For all the advances in technology, though, the specter of the giant machine brain hovering over humanity is still with us. John Supko, a composer and professor of music at Duke University, is no Luddite. Supko has created and used computational tools for composition, but his experience with music LLMs has left him disheartened.

“It is extremely dispiriting to open Twitter and see the deployment of powerful technology like ChatGPT to create music that sounds like schlock,” Supko says. “If such music were composed by a human, it would not be worth listening to. For these systems to regurgitate third-rate imitations of Bach and other composers is a criminal underuse of the technology.”

The composer and technologist Ed Newton-Rex led a team at the startup Stability AI that released a generative music model called Stable Audio, a neural network similar to OpenAI’s DALL-E, which works by gradually turning random noise into structured data, in this case, music. Newton-Rex says he started out thinking, “Wouldn’t it be cool if AI could generate music? And of course the answer is yes. It would be impressive and magical.”

But for Newton-Rex, the magic is gone. “We’re at the point where generative AI today is literally starting to impact musicians. What I’m talking about is not some hypothetical bad. It is definitive that AI is competing with human creators.” Disillusioned by Silicon Valley, Newton-Rex left Stability AI in 2023 to found a nonprofit that certifies the source of training data for AI models.

For his part, Supko is optimistic about the ways technology can improve composition. “When I compose music,” he explains, “my inspiration is powered by tiny explosions of connections. I listen to see what happens when I combine two little fragments of music. I don’t have a full-fledged idea of what I’m working toward but work with my ability to be surprised. That’s what I want for an audience—to be surprised.”

Supko says he’s unlikely to compose with today’s generative AI, but he sees possible uses for it. He imagines he could take the result of generative AI music, “chop it up and manipulate it as just another sample of something I found in the world, and work on it in the hope of making it more interesting.”

With this approach, the responsibility and intention for creating music remain with the human composer. Zaripov would have likely agreed. In his 1963 book, Cybernetics and Music, he wrote, “The machine and cybernetic methods are powerful aids to man in the investigation of the mysteries of nature, the secrets of creativity, in the clarification of the laws and principles of intellectual processes.” Despite the progress in AI, these laws and principles are still a mystery, and the art of making music remains human, for now.

Lead image: Bragapictures / Shutterstock