In the early 1990s, Iris Murdoch was writing a new novel, as she’d done 25 times before in her life. But this time something was terribly off. Her protagonist, Jackson, an English manservant who has a mysterious effect on a circle of friends, once meticulously realized in her head, had become a stranger to her. As Murdoch later told Joanna Coles, a Guardian journalist who visited her in her house in North Oxford in 1996, a year after the publication of the book, Jackson’s Dilemma, she was suffering from a bad case of writer’s block. It began with Jackson and now the shadows had suffused her life. “At the moment I’m just falling, falling … just falling as it were,” Murdoch told Coles. “I think of things and then they go away forever.”

Jackson’s Dilemma was a flop. Some reviewers were respectful, if confused, calling it “an Indian Rope Trick, in which all the people … have no selves,” and “like the work of a 13-year-old schoolgirl who doesn’t get out enough.” Compared to her earlier works, which showcase a rich command of vocabulary and a keen grasp of grammar, Jackson’s Dilemma is rife with sentences that forge blindly ahead, lacking delicate shifts in structure, the language repetitious and deadened by indefinite nouns. In the book’s final chapter, Jackson sits sprawled on grass, thinking that he has “come to a place where there is no road,” as lost as Lear wandering on the heath after the storm.

Two years after Jackson’s Dilemma was published, Murdoch saw a neurologist who diagnosed her with Alzheimer’s disease. That discovery brought about a small supernova of media attention, spurred the next year by the United Kingdom publication of Iris: A Memoir of Iris Murdoch (called Elegy for Iris in the United States), an incisive and haunting memoir by her husband John Bayley, and a subsequent film adaptation starring Kate Winslet and Judi Dench. “She is not sailing into the dark,” Bayley writes toward the end of the book. “The voyage is over, and under the dark escort of Alzheimer’s, she has arrived somewhere.”

In 2003, Peter Garrard, a professor of neurology with expertise in dementia, took a unique interest in the novelist’s work. He had studied for his Ph.D. under John Hodges, the neurologist who had diagnosed Murdoch with Alzheimer’s. One day Garrard’s wife handed him her copy of Jackson’s Dilemma, commenting, “You’re interested in language and Alzheimer’s; why don’t you analyze this?” He resolved he would do just that: analyze the language in Murdoch’s fiction for signs of the degenerative effects of Alzheimer’s.

Prior to his interest in medicine, Garrard had studied ancient literature at Oxford, at a time when the discipline of computational language analysis, or computational linguistics, was taking root. Devotees of the field had developed something they called the Oxford Concordance Program, a computer program that created lists of all of the word types and word tokens in a text. (The token count is the total number of words in a given text; the type count is the number of different words that appear in it.) Garrard was intrigued by the idea that such lists could give ancient literature scholars insight into texts whose authorship was in dispute. Much as a Rembrandt expert might examine paint layers in order to assign a painting to a forger or to the Old Master himself, a computational linguist might count word types and tokens in a text and use that information to identify a work of ambiguous authorship.
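
To make the type and token distinction concrete, here is a minimal Python sketch of that kind of count. It is not the Oxford Concordance Program itself; the function name `type_token_counts` and the simple word pattern are assumptions made for illustration, and the sample sentence is borrowed from Jackson’s Dilemma.

```python
import re
from collections import Counter

def type_token_counts(text):
    """Return (tokens, types, type-token ratio) for a piece of text.

    Hypothetical helper for illustration only: words are lowercased
    runs of letters, optionally with one straight apostrophe.
    """
    words = re.findall(r"[a-z]+(?:'[a-z]+)?", text.lower())
    counts = Counter(words)        # one entry per distinct word
    tokens = len(words)            # total number of words
    types = len(counts)            # number of different words
    ratio = types / tokens if tokens else 0.0
    return tokens, types, ratio

sample = "He got out of bed and pulled back the curtains. The sun blazed in."
tokens, types, ratio = type_token_counts(sample)
print(tokens, types, round(ratio, 2))  # 14 13 0.93
```

Because longer texts naturally repeat more words, a ratio like this is only comparable across samples of similar length.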

Garrard had the idea to apply a similar computational technique to books by Murdoch. Alzheimer’s researchers believe cognitive impairment begins well before signs of dementia are obvious to outsiders. Garrard thought it might be possible to sift through three of Murdoch’s novels, written at different points in her life, to see if signs of dementia could be read between the lines.

Scientists believe Alzheimer’s disease is caused by cell death and tissue loss as a result of abnormal buildup of plaques and tangles of protein in the brain. Language is impacted when the brain’s Wernicke’s and Broca’s areas, responsible for language comprehension and production, are affected by the spread of disease. Language, therefore, provides an exceptional window on the onset and development of pathology. And a masterful writer like Murdoch puts bountiful language in high relief, offering a particularly rich field of study.

The artist, in fact, could serve science. If computer analysis could help pinpoint the earliest signs of mild cognitive impairment, before the onset of obvious symptoms, this might be valuable information for researchers looking to diagnose the disease before too much damage has been done to the brain.

Barbara Lust, a professor of human development, linguistics, and cognitive science at Cornell University, who researches topics in language acquisition and early Alzheimer’s, explains that understanding changes in language patterns could be a boon to Alzheimer’s therapies. “Caregivers don’t usually notice very early changes in language, but this could be critically important both for early diagnosis and also in terms of basic research,” Lust says. “A lot of researchers are trying to develop drugs to halt the progression of Alzheimer’s, and they need to know what the stages are in order to halt them.”

Before Garrard and his colleagues published their Murdoch paper in 2005, researchers had already identified language impairment as a hallmark of Alzheimer’s disease. As Garrard explains, a patient’s vocabulary becomes restricted, and they use fewer words that are specific labels and more words that are general labels. For example, it’s not incorrect to call a golden retriever an “animal,” though it is less precise than calling it a retriever or even a dog. Alzheimer’s patients would be far more likely to call a retriever a “dog” or an “animal” than “retriever” or “Fred.” In addition, Garrard adds, the words Alzheimer’s patients lose tend to appear less frequently in everyday English than words they keep; an abstract noun like “metamorphosis” might be replaced by “change” or “go.”

Researchers also found the use of specific words decreases and the noun-to-verb ratio changes as more “low image” verbs (be, come, do, get, give, go, have) and indefinite nouns (thing, something, anything, nothing) are used in place of their more unusual brethren. The use of the passive voice falls off markedly as well. People also use more pauses, Garrard says, as “they fish around for words.”
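
As a rough illustration of how such markers might be counted, the sketch below reports how often the generic words named above appear per 100 words of text. The function name `generic_word_rates` is hypothetical, and the matching is a deliberate simplification: it only catches the base forms listed, so “got” or “went” would be missed unless the text were first reduced to dictionary forms.

```python
import re

# Word lists taken from the examples in the text above.
LOW_IMAGE_VERBS = {"be", "come", "do", "get", "give", "go", "have"}
INDEFINITE_NOUNS = {"thing", "something", "anything", "nothing"}

def generic_word_rates(text):
    """Return occurrences per 100 words for each generic word list.

    Illustrative assumption: exact matches on base forms only,
    with no lemmatization or part-of-speech tagging.
    """
    words = re.findall(r"[a-z]+(?:'[a-z]+)?", text.lower())
    total = len(words)
    if total == 0:
        return {"low_image_verbs": 0.0, "indefinite_nouns": 0.0}
    verbs = sum(w in LOW_IMAGE_VERBS for w in words)
    nouns = sum(w in INDEFINITE_NOUNS for w in words)
    return {
        "low_image_verbs": 100 * verbs / total,
        "indefinite_nouns": 100 * nouns / total,
    }

rates = generic_word_rates("I have nothing to do and something to give you")
print(rates)  # {'low_image_verbs': 30.0, 'indefinite_nouns': 20.0}
```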

In their 2005 paper, Garrard and colleagues point out that the assessment of language changes in Alzheimer’s patients was based in many cases on standardized tasks such as word fluency and picture naming, the kind of tests criticized for lacking a “real-world validity.” But writing novels is a more naturalistic activity, one done voluntarily and without knowledge of the disease. That eliminates any negative or compensatory response that a standardized test might induce in a patient. With Murdoch, he and his colleagues could analyze language, “the products of cognitive operations,” over the natural course of her novel-writing life, which stretched from her 30s to 70s. “I thought it would be fascinating to be able to show that language could be affected before the patient or anyone else was aware of symptoms,” Garrard says.

For his analysis of Murdoch, Garrard used a program called Concordance to count word tokens and types in samples of text from three of her novels: her first published effort, Under the Net; a mid-career highlight, The Sea, The Sea, which won the Booker prize in 1978; and her final effort, Jackson’s Dilemma. He found that Murdoch’s vocabulary was significantly reduced in her last book—“it had become very generic,” he says—as compared to the samples from her two earlier books.

The Murdoch paper by Garrard and his colleagues proved influential. In Canada, Ian Lancashire, an English professor at the University of Toronto, was conducting his own version of textual analysis. Though he’d long toiled in the fields of Renaissance drama, Lancashire had been inspired by the emergence of a field called corpus linguistics, which involves the study of language through specialized software. In 1985, he founded the Center for Computing in the Humanities at the University of Toronto. (Today Lancashire is an emeritus professor, though he maintains a lab at the University of Toronto.)

In trying to determine some sort of overarching theory on the genesis of creativity, Lancashire had directed the development of software for the purpose of studying language through the analysis of text. The software was called TACT, short for Textual Analysis Computing Tools. The software created an interactive concordance and allowed Lancashire to count types and tokens in books by several of his favorite writers, including Shakespeare and Milton.

Lancashire had been an Agatha Christie fan in his youth, and decided to apply the same treatment to two of Christie’s early books, as well as Elephants Can Remember, her second-to-last novel. What he discovered astounded him: Christie’s use of vocabulary had “completely tanked” at the end of her career, by about 20 percent. “I was shocked, because it was so obvious,” he says. Even though the length of Elephants was comparable to her other works, there was a marked decrease in the variety of words she used in it, and a good deal more phrasal repetition. “It was as if she had given up trying to find le mot juste, exactly the right word,” he says.

Lancashire presented his findings at a talk at the University of Toronto in 2008. Graeme Hirst, a computational linguist in Toronto’s computer science department, was in the audience. He suggested to Lancashire that they collaborate on statistical analysis of texts. The team employed a wider array of variables and much larger samples of text from Christie and Murdoch, searching for linguistic markers for Alzheimer’s disease. (Unlike Murdoch, Christie was never formally diagnosed with Alzheimer’s.)

The Toronto team, which included Regina Jokel, an assistant professor in the department of Speech-Language Pathology at the University of Toronto, and Xuan Le, at the time one of Hirst’s graduate students, settled on P.D. James—a writer who would die with her cognitive powers seemingly intact—as their control subject. Using a program called Stanford Parser, they fed books by all three writers through the algorithm, focusing on things like vocabulary size, the ratio of the size of the vocabulary to the total number of words used, repetition, word specificity, fillers, grammatical complexity, and the use of the passive voice.
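
One of the measures listed above, repetition, can be approximated in a few lines of Python. This is a sketch under stated assumptions, not the Toronto team’s method: the function name `repeated_ngram_fraction` and the choice of three-word phrases are illustrative, and measures such as grammatical complexity or passive voice would need a real parser.

```python
import re
from collections import Counter

def repeated_ngram_fraction(text, n=3):
    """Fraction of n-word phrases in the text that occur more than once.

    Illustrative assumption: a phrase is any run of n consecutive
    words, ignoring punctuation and sentence boundaries.
    """
    words = re.findall(r"[a-z]+(?:'[a-z]+)?", text.lower())
    ngrams = [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]
    if not ngrams:
        return 0.0
    counts = Counter(ngrams)
    # Count every occurrence of any phrase that appears at least twice.
    repeated = sum(c for c in counts.values() if c > 1)
    return repeated / len(ngrams)

print(round(repeated_ngram_fraction("He did not look. He did not speak."), 2))  # 0.33
```

A higher fraction in a late novel than in an early one of similar length would be consistent with the phrasal repetition Lancashire describes, though a single number like this proves nothing on its own.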

“Each type of dementia has its own language pattern, so if someone has vascular dementia, their pattern would look different than someone who has progressive aphasia or Alzheimer’s,” says Jokel. “Dementia of any kind permeates all modalities, so if someone has problems expressing themselves, they will have trouble expressing themselves both orally and in writing.”

To the researchers, evidence of Murdoch’s decline was apparent in Jackson’s Dilemma. A passage from The Sea, The Sea illustrates her rich language:

The chagrin, the ferocious ambition which James, I am sure quite unconsciously, prompted in me was something which came about gradually and raged intermittently.

In Jackson’s Dilemma, her vocabulary seems stunted:

He got out of bed and pulled back the curtains. The sun blazed in. He did not look out of the window. He opened one of the cases, then closed it again. He had been wearing his clothes in bed, except for his jacket and his shoes.

It seems that after conceiving of her character, Murdoch had trouble climbing back inside of his head. According to Lancashire, this was likely an early sign of dementia. “Alzheimer’s disease … damages our ability to see identity in both ourselves and other people, including imagined characters,” Lancashire later wrote. “Professional novelists with encroaching Alzheimer’s disease will forget what their characters look like, what they have done, and what qualities they exhibit.”

The Toronto team’s “Three British Novelists” paper, as it came to be called, influenced a number of other studies, including one at Arizona State University. Using similar software, researchers examined non-scripted news conferences of former presidents Ronald Reagan and George Herbert Walker Bush. President Reagan, they wrote, showed “a significant reduction in the number of unique words over time and a significant increase in conversational fillers and non-specific nouns over time,” while there was no such pattern for Bush. The researchers conclude that during his presidency, Reagan was showing a reduction in linguistic complexity consistent with what others have found in patients with dementia.

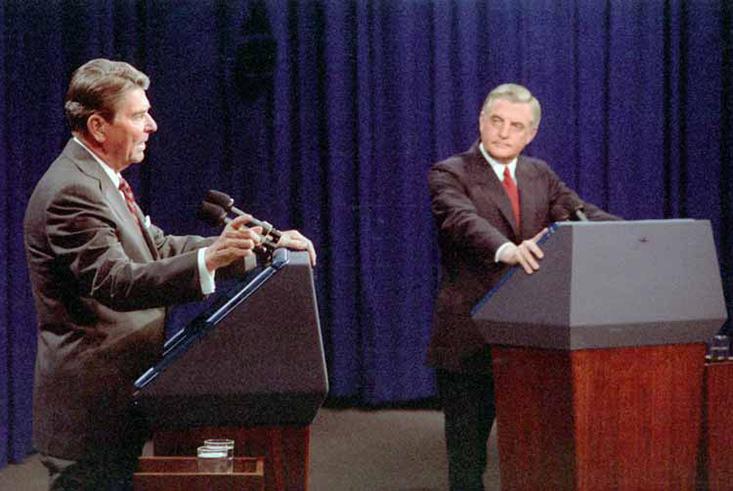

Brian Butterworth, a professor emeritus of cognitive neuropsychology at the Institute of Cognitive Neuropsychology at University College London, also “diagnosed” Reagan in the mid ’80s, years before Reagan was clinically diagnosed with Alzheimer’s disease. Butterworth wrote a report comparing Reagan’s 1980 debate performance against then-President Jimmy Carter with his performance against Democratic presidential nominee Walter Mondale four years later.

“With Carter, Reagan was more or less flawless, but in 1984, he was making mistakes of all sorts, minor slips, long pauses, and confusional errors,” Butterworth says. “He referred to the wrong year in one instance.” If one forgets a lot of facts, as Reagan did, Butterworth says, that might be an effect of damage to the frontal lobes; damage to the temporal lobes and Broca’s area affects speech. “The change from 1980 to 1984 was not stylistic, in my opinion,” Butterworth says. Reagan “got much worse, probably because his brain had changed in a significant way. He had been shot. He had been heavily rehearsed. Even with all that, he was making a lot of mistakes.”

Thanks in part to the literary studies, the idea of language as a biomarker for Alzheimer’s has continued to gain credibility. In 2009, the National Institute on Aging and the Alzheimer’s Association charged a group of prominent neurologists with revising the criteria for Alzheimer’s disease, previously updated in 1984. The group sought to include criteria that general healthcare providers, who might not have access to diagnostic tools like neuropsychological testing, advanced imaging, and cerebrospinal fluid measures, could use to diagnose dementia. Part of their criteria included impaired language functions in speaking, reading, and writing; a difficulty in thinking of common words while speaking; hesitations; and speech, spelling, and writing errors.

The embrace of language as a diagnostic strategy has spurred a host of diagnostic tools. Hirst has begun working on programs that use speech by real patients in real time. Based on Hirst’s work, Kathleen Fraser, a Ph.D. student, and Frank Rudzicz, an assistant professor of computer science at the University of Toronto, and a scientist at the Toronto Rehabilitation Institute, who focuses on machine learning and natural language processing in healthcare settings, have developed software that analyzes short samples of speech, 1 to 5 minutes in length, to see if an individual might be showing signs of cognitive impairment. They are looking at 400 or so variables right now, says Rudzicz, such as pitch variance, pitch emphasis, pauses or “jitters,” and other qualitative aspects of speech.

Rudzicz and Fraser have co-founded a startup called Winterlight Labs, and they are working on similar software to be used by clinicians. Some organizations are already piloting their technology. They hope to interest pharmaceutical companies in using their program to quickly identify the best candidates for clinical trials, a selection process that tends to be very expensive and laborious, or to help track people’s cognitive states once they’ve been clinically diagnosed. They also hope one day to be able to use language as a lens to peer into people’s general cognitive states, so that researchers might gain a clearer understanding of everything from depression to autism.

Lust and other researchers agree, however, that the idea of using language as a biomarker for Alzheimer’s and other forms of cognitive impairment is still in its early stages. “We ultimately need some kind of low-cost, easy-to-use and noninvasive tool that can identify someone who should go on for more intensive follow-up, such as a cuff on your arm can detect high blood pressure that could be indicative of heart disease,” says Heather Snyder, a molecular biologist and Senior Director of Medical and Scientific Operations at the Alzheimer’s Association. “At this point we don’t have that validated tool that tells us that something is predictive, at least to my knowledge.”

Howard Fillit, the founding executive director and chief scientific officer of the Alzheimer’s Drug Discovery Foundation, says language is a valid way to test for Alzheimer’s disease and other forms of dementia. “If someone comes in complaining of cognitive impairment, and you want to do a diagnostic evaluation and see how serious their language problem is, I can see that [such software] would be useful,” he says. But he says the language analysis would have to be performed with other tests that measure cognitive function. “Otherwise,” Fillit says, “you might end up scaring a lot of people unnecessarily.”

One of the main reasons Garrard undertook the Murdoch study in the early 2000s was that he saw her novels as a kind of large, unstructured dataset of language. He loved working with datasets, he says, “to see whether they tell a story or otherwise support a hypothesis.” Now, with computer programs that analyze language for cognitive deficits on the horizon, the future of Alzheimer’s diagnosis looks both beneficial and unnerving. Few of us are prolific novelists, but most of us are leaving behind large, unstructured datasets of language, courtesy of email, social media, and the like. There are such large volumes of data in that trail, Garrard says, “that it’s going to be usable in predicting all sorts of things, possibly including dementia.”

Garrard agrees a computer program that aids medical scientists in diagnosing cognitive diseases like Alzheimer’s holds great promise. “It’s like screening people for diabetes,” he says. “You wouldn’t want to have the condition, but better to know and treat it than not.”

Adrienne Day is a Bay Area-based writer and editor. She covers issues in science, culture, and social innovation.