The hallmark of intelligence is the ability to learn. As decades of research have shown, our brains exhibit a high degree of “plasticity,” meaning that neurons can rewire their connections in response to new stimuli. But researchers at Carnegie Mellon University and the University of Pittsburgh have recently discovered surprising constraints on our learning abilities. The brain may be highly flexible and adaptive overall, but at least over short time frames, it learns by inefficiently recycling tricks from its neural repertoire rather than rewiring from scratch.

“Whenever I play squash, I look like a tennis player,” said Byron Yu, a biomedical engineer and neuroscientist at Carnegie Mellon and one of the leaders of the research. Yu has played tennis for many years. His problem with squash is that it uses a shorter racquet and can demand quicker, harder shots, with a different sort of follow-through than what he’s used to on the tennis court. Yet in a squash match, he slips into the style of racquet use that long experience with tennis has drilled into him. The brain doesn’t easily let go of what it already knows.

Now, while observing activity in the brain during learning, Yu and his colleagues have seen evidence of a similar lack of plasticity at the neural level. That discovery and the team’s related research may help to explain why some things are harder to learn than others.

Several years ago, Yu, Aaron Batista of the University of Pittsburgh, and members of their labs began using brain-computer interfaces (BCIs) as tools for neuroscience discovery. These devices have a chip roughly the size of a fingernail that can track the electrical activity of 100 neurons at once in the brain’s motor cortex, which controls movement. By monitoring the sequences of voltage spikes that run through the individual neurons over time, a BCI can calculate a “spike rate” to characterize the behavior of each neuron during the performance of a task.
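The "spike rate" idea can be made concrete with a minimal sketch. It assumes a spike rate is simply the number of spikes in a time window divided by the window's length. The function name `spike_rate` and the spike times below are hypothetical, chosen only for illustration, and are not taken from the researchers' software.

```python
from bisect import bisect_left

def spike_rate(spike_times, start, stop):
    """Mean firing rate, in spikes per second, over the window [start, stop)."""
    if stop <= start:
        raise ValueError("stop must be greater than start")
    times = sorted(spike_times)
    # Count the spikes whose timestamps fall inside the half-open window.
    count = bisect_left(times, stop) - bisect_left(times, start)
    return count / (stop - start)

# Hypothetical spike times (in seconds) recorded from one neuron.
spikes = [0.012, 0.105, 0.230, 0.410, 0.455, 0.730, 0.980, 1.200]
rate = spike_rate(spikes, 0.0, 1.0)  # 7 spikes in a 1-second window
```

Repeating this calculation for each of the roughly 100 recorded neurons would give one number per neuron per time window, which is the kind of summary the later analyses work with.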

“You can imagine the challenge of trying to dig through all this data to see what the brain is doing,” Yu said. (Editor’s note: Yu receives funding from the Simons Foundation, which publishes Quanta.) “Our eyes are not well-trained to pick up on subtle patterns here.” But advanced statistical analytics built into the chip can do this, and the patterns can be used to identify the neural activity associated with a test subject’s intent to make specific movements. The system can distinguish, for example, between a subject wanting to reach an arm to the left, to the right, up, or down.

Researchers can then use the output of a BCI to translate the neural activity for specific movements into directional controls for a cursor on a computer screen. Through trial and error, people or animals using the interface can learn that by imagining their arm moving to the left, for example, they can push a cursor that way.
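One simple way to picture this translation step is a linear decoder, in which each neuron's spike rate nudges the cursor by a fixed amount. The sketch below is an assumption for illustration only: the article does not describe the decoder the team used, and the names `decode_velocity`, `baseline`, and `weights`, along with all of the numbers, are hypothetical.

```python
def decode_velocity(rates, baseline, weights):
    """Map per-neuron spike rates to a 2-D cursor velocity (vx, vy).

    `weights` holds two rows, one per axis, with one weight per neuron.
    """
    # Express each neuron's activity relative to its resting rate.
    centered = [r - b for r, b in zip(rates, baseline)]
    vx = sum(w * c for w, c in zip(weights[0], centered))
    vy = sum(w * c for w, c in zip(weights[1], centered))
    return vx, vy

# Three hypothetical neurons, each with a resting rate of 10 spikes per second.
baseline = [10.0, 10.0, 10.0]
weights = [
    [1.0, -1.0, 0.0],  # contribution of each neuron to horizontal velocity
    [0.0, 0.0, 1.0],   # contribution of each neuron to vertical velocity
]

# The second neuron fires above baseline, so the cursor is pushed left.
velocity = decode_velocity([10.0, 20.0, 10.0], baseline, weights)
```

In this toy setup, a negative horizontal velocity means "left," so a subject who learns to drive the second neuron harder is in effect learning to push the cursor that way.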

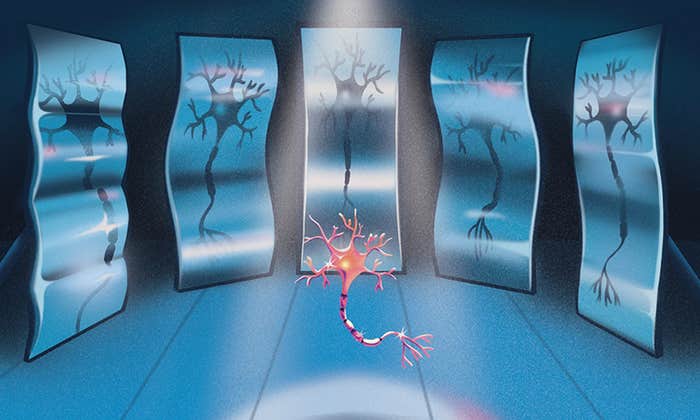

When Yu, Batista, and their colleagues monitored the motor cortex of a monkey while it repeatedly performed simple arm-waving tasks, they found that the neurons were not firing independently. Rather, the behavior of the 100 neurons being measured could be described statistically in terms of about 10 neurons, which were variously exciting or inhibiting the others. In the researchers’ analysis, this result showed up as a set of plotted points that filled only a small volume of a 100-dimensional data space.

“We’ve been calling [that volume] the intrinsic manifold because we think it’s something really intrinsic to the brain,” said Steven Chase, a professor of biomedical engineering at Carnegie Mellon. “The dimensionality of this space is highly predictive of what these neurons can do.”
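To see how 100 recorded neurons can occupy only a small part of a 100-dimensional space, consider a simulated example. The sketch below generates synthetic spike rates driven by 10 shared hidden factors, then uses principal component analysis (computed with NumPy's singular value decomposition) to count how many dimensions account for nearly all of the variability. PCA is a stand-in chosen here for simplicity and is not necessarily the method the researchers used. Every number is made up for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n_trials, n_neurons, n_latents = 500, 100, 10

# Synthetic data: 10 hidden factors drive all 100 neurons, plus a little noise.
latents = rng.normal(size=(n_trials, n_latents))
loading = rng.normal(size=(n_latents, n_neurons))
noise = 0.1 * rng.normal(size=(n_trials, n_neurons))
rates = latents @ loading + noise

# PCA via SVD of the mean-centered data matrix.
centered = rates - rates.mean(axis=0)
singular_values = np.linalg.svd(centered, compute_uv=False)
variance = singular_values ** 2
explained = np.cumsum(variance) / variance.sum()

# Smallest number of components that capture at least 99% of the variance.
n_components = int(np.searchsorted(explained, 0.99) + 1)
```

With data built this way, `n_components` should come out at or below the number of hidden factors, far fewer than the 100 neurons measured. Real recordings are much noisier, so the cutoff between "structure" and "noise" is a judgment call rather than a fixed threshold.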

In 2014, the researchers observed that test subjects could learn new tasks more easily if the tasks involved patterns of neural activity within the intrinsic manifold rather than outside it. That outcome makes sense, according to Yu, because tasks that fall within the intrinsic manifold make demands on the brain that are consistent with the underlying neural structure. After completing this study, the group turned its attention to the question of how neural activity changes during learning, as described in a recent paper in Nature Neuroscience.

To find out, the researchers first let primates equipped with BCIs become adept at moving the cursor left and right. Then the team switched the neural activity requirements for moving the cursor and waited to see what new patterns of neural activity, corresponding to new points in the intrinsic manifold, the animals would use to meet them.

The researchers expected to see evidence of a learning strategy they call “realignment,” in which the animal would start using whatever new activity pattern worked most naturally. “Realignment is the best strategy the animal could use, subject to these constraints in the intrinsic manifold,” said Matthew Golub, who collaborated on the project as a postdoc with Yu and Chase but now works at Stanford University. Alternatively, the monkeys’ brains might have learned through a process of “rescaling,” in which neurons involved in the original learning task would increase or decrease their spike rates until they stumbled onto a pattern that worked.

But to the researchers’ surprise, neither realignment nor rescaling occurred. Instead, the researchers observed a highly inefficient approach called “reassociation.” The animal subjects learned the new tasks simply by repeating the original neural activity patterns and swapping their assignments. Patterns that had previously moved the cursor left now moved it right, and vice versa. “It’s recycling what they used to do,” Golub said, but under new circumstances.
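Reassociation can be illustrated with a toy model, offered here as a loose sketch and not a reconstruction of the study's analysis. It assumes the animal has a fixed repertoire of two activity patterns and that, after the cursor mapping changes, it picks whichever existing pattern best produces the intended movement. The names `decode_x` and `reassociate`, the two-neuron patterns, and the weights are all hypothetical.

```python
def decode_x(pattern, weights):
    """Horizontal cursor velocity produced by one activity pattern."""
    return sum(w * p for w, p in zip(weights, pattern))

def reassociate(repertoire, weights, targets):
    """For each intended direction, reuse the existing pattern whose
    decoded velocity lands closest to the target velocity."""
    return {
        intent: min(
            repertoire,
            key=lambda name: abs(decode_x(repertoire[name], weights) - target),
        )
        for intent, target in targets.items()
    }

# A fixed repertoire of two patterns across two hypothetical neurons.
repertoire = {"A": [20.0, 5.0], "B": [5.0, 20.0]}
targets = {"left": -15.0, "right": 15.0}

old_weights = [-1.0, 1.0]  # original cursor mapping
new_weights = [1.0, -1.0]  # mapping after the experimenters' switch

before = reassociate(repertoire, old_weights, targets)
after = reassociate(repertoire, new_weights, targets)
```

In this toy case, pattern A moves the cursor left before the switch and right after it, while the patterns themselves never change. Realignment or rescaling would instead mean generating new patterns, or the same patterns at different firing rates, which this sketch deliberately rules out.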

Why would the brain use less than the best strategy for learning? The group’s findings suggest that, just as the neural architecture constrains activity to the intrinsic manifold, some further constraint limits how the neurons reorganize their activity during the experiments. Batista suggests that the changes in the synaptic connections between neurons that would be required for realignment may be too hard to accomplish quickly. “Plasticity must be more limited in the short term than we thought,” he said. “Learning entails forgetting. The brain might be reluctant to let go of things it already knows how to do.”

Chase likened the motor cortex to an old-fashioned telephone switchboard, with neural connections like cables linking inputs from other cortical areas to outputs in the brain’s cerebellum. During their experiments, he said, the brain “just rearranges all the cables”—though the nuances of what that means are still unknown.

“The quick-and-dirty strategy is to change the inputs to the cortex,” Yu said. But he also noted that their experiments only tracked the brain’s activity for one or two hours. The researchers can’t yet rule out the possibility that reassociation is a fast interim way for the brain to learn new tasks; over a longer time period, realignment or rescaling might still show up.

If so, it might explain differences in how novices and experts process new information related to a shared interest. “Beginners make do with what they have, and experts consolidate knowledge,” Batista said. “This might be a neural basis for that well-known phenomenon.”

Lead image: The brain’s adaptability can sometimes seem endless. But observations of the brain during learning suggest that its networks of neurons can be surprisingly inflexible and inefficient. Credit: Liu zishan