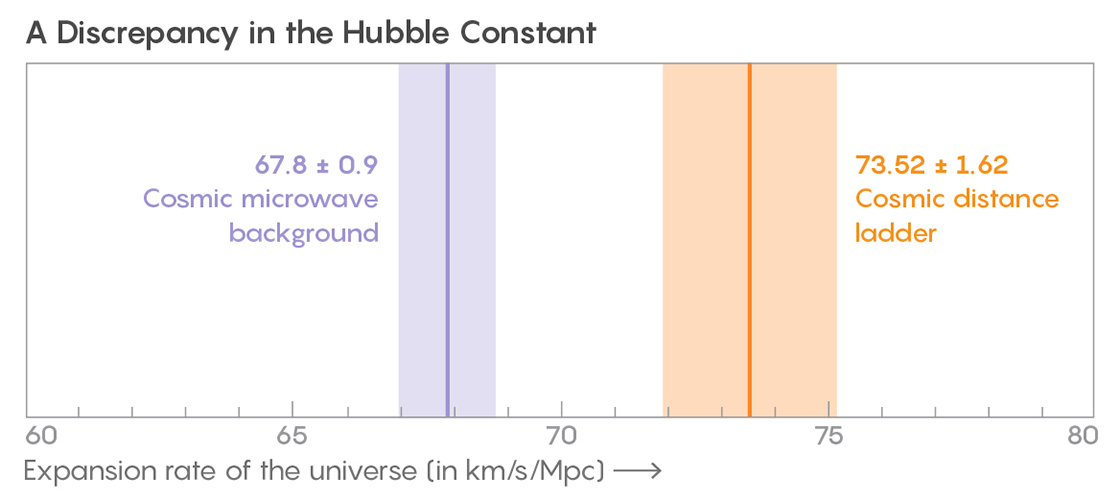

Cosmologists have wielded every tool at their disposal to measure exactly how fast the universe is expanding, a rate known as the Hubble constant. But these measurements have returned contradictory results.

The conflicting measurements have vexed astrophysicists and inspired rampant speculation as to whether unknown physical processes might be causing the discrepancy. Maybe dark matter particles are interacting strongly with the regular matter of planets, stars, and galaxies? Or perhaps an exotic particle not yet detected, such as the so-called sterile neutrino, might be playing a role. The possibilities are as boundless as the imaginations of theoretical physicists.

Yet a new study by John Peacock, a cosmologist at the University of Edinburgh and a leading figure in the cosmology community, takes a profoundly more conservative view of the conflict. Along with his co-author, José Luis Bernal, a graduate student at the University of Barcelona, he argues that it’s possible there’s no tension in the measurements after all. Just one gremlin in one telescope’s instrument, for example, or one underestimated error, is all it takes to explain the gap between the Hubble values. “When you make these measurements, you account for everything that you know of, but of course there could be things we don’t know of. Their paper formalizes this in a mathematical way,” said Wendy Freedman, an astronomer at the University of Chicago.

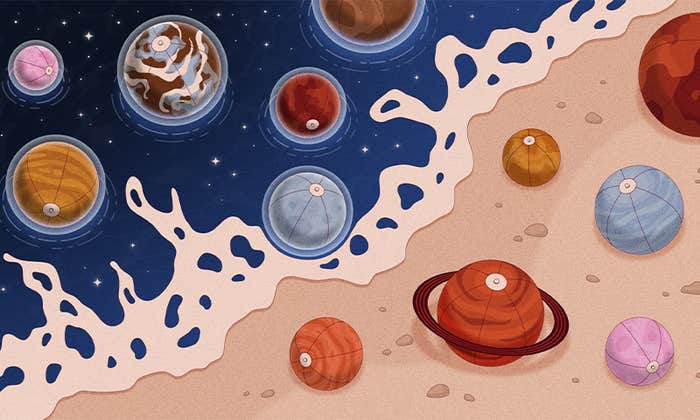

Freedman is a pioneer in measuring the Hubble constant with Cepheid stars, pulsating stars whose intrinsic brightness can be inferred from how quickly they pulse. Compare that intrinsic brightness with how bright such stars appear in the sky, and you can precisely calculate the distance to nearby galaxies that contain them. Measure how fast these galaxies are moving away from us, and the Hubble constant follows. This method can be extended to the more-distant universe by climbing the “cosmic distance ladder”: using the brightness of Cepheids to calibrate the brightness of supernovas that can be seen from billions of light-years away.
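For readers who want to see the arithmetic behind that chain of reasoning, here is a minimal sketch. It assumes a star’s intrinsic brightness (its absolute magnitude) is already known and uses the standard distance-modulus relation; the function names and the input numbers are illustrative choices, not values from Freedman’s work.

```python
def distance_mpc(apparent_mag, absolute_mag):
    """Distance in megaparsecs from the distance modulus m - M = 5*log10(d_pc) - 5."""
    parsecs = 10 ** ((apparent_mag - absolute_mag + 5) / 5)
    return parsecs / 1e6


def hubble_constant(velocity_km_s, dist_mpc):
    """Hubble's law, v = H0 * d, solved for H0 in km/s/Mpc."""
    return velocity_km_s / dist_mpc


# Illustrative (made-up) inputs: a star that appears at magnitude 25
# but is intrinsically magnitude -5, in a galaxy receding at 700 km/s.
d = distance_mpc(25.0, -5.0)
h0 = hubble_constant(700.0, d)
print(f"distance = {d:.1f} Mpc, H0 = {h0:.1f} km/s/Mpc")
```

A real measurement averages over many stars and galaxies and must correct for dust, galaxy motions unrelated to cosmic expansion, and calibration offsets, which is where the uncertainties discussed next come in.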

All of these measurements have uncertainties, of course. Each research group first makes raw measurements, then attempts to account for the vagaries of individual telescopes, astrophysical unknowns, and countless other sources of uncertainty that can keep night-owl astronomers up all day. Then all the individual published studies get combined into a single number for the expansion rate, along with an estimate of how uncertain this number is.

In the new work, Peacock argues that unknown errors can creep in at any stage of these calculations, and in ways that are far from obvious to the astronomers working on them. He and Bernal provide a meta-analysis of the disparate measurements with a “Bayesian” statistical approach. It sorts measurements into classes that are independent of one another, meaning that they don’t use the same telescope or share the same implicit assumptions. It can also be easily updated when new measurements come out. “There’s a clear need, which you would’ve thought statisticians would’ve provided years ago, for how you combine measurements in such a way that you’re not likely to lose your shirt if you start betting on the resulting error bars,” said Peacock. He and Bernal then consider the possibility of underestimated errors and biases that could systematically shift a measured expansion rate up or down. “It’s kind of the opposite of the normal legal process: All measurements are guilty until proven innocent,” he said. Take these unknown unknowns into account, and the Hubble discrepancy melts away.
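A toy calculation shows why underestimated errors matter so much. The sketch below is not Bernal and Peacock’s Bayesian method; it is the simpler textbook approach of weighting each measurement by its inverse variance, plus a hypothetical `extra` term that adds an unrecognized error to each measurement in quadrature. All numbers are invented for illustration.

```python
import math


def combine(values, sigmas):
    """Inverse-variance weighted mean and its uncertainty."""
    weights = [1.0 / s**2 for s in sigmas]
    mean = sum(w * v for w, v in zip(weights, values)) / sum(weights)
    return mean, math.sqrt(1.0 / sum(weights))


def tension(v1, s1, v2, s2, extra=0.0):
    """Gap between two measurements in units of their combined uncertainty.

    `extra` is a hypothetical unrecognized error, added in quadrature
    to each measurement's quoted uncertainty.
    """
    s1_total = math.hypot(s1, extra)
    s2_total = math.hypot(s2, extra)
    return abs(v1 - v2) / math.hypot(s1_total, s2_total)


# Two made-up expansion-rate measurements, each quoted as +/- 1.
print(combine([70.0, 74.0], [1.0, 1.0]))          # naive combined value and error
print(tension(70.0, 1.0, 74.0, 1.0))              # quoted errors taken at face value
print(tension(70.0, 1.0, 74.0, 1.0, extra=2.0))   # with a hidden error of 2 in each
```

With the quoted error bars taken at face value, the two invented measurements sit nearly three standard deviations apart; allow each a hidden error of 2 and the gap shrinks to a little over one. The full analysis treats the size of such hidden errors as unknown and lets the data constrain it, rather than fixing it by hand as done here.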

Other researchers agree that such mundane factors could be at work, and that the excitement over the Hubble constant is driven in part by a hunger to find something new in the universe. “I have a very bad feeling that we are somehow stuck with a cosmological model that works but that we cannot either understand or explain from first principles, and then there is a lot of frustration,” said Andrea Macciò, an astrophysicist at New York University, Abu Dhabi. “This pushes people to jump onto any possibility for new physics, no matter how thin the evidence is.”

Meanwhile, researchers continue to improve their measurements of the Hubble constant. In a paper appearing today on the scientific preprint site arxiv.org, researchers used measurements of 1.7 billion stars taken by the European Space Agency’s Gaia satellite to more precisely calibrate the distance to nearby Cepheid stars. They then climbed the cosmic distance ladder to recalculate the value of the Hubble constant. With the new data, the disagreement between the competing Hubble measurements has grown even worse; the researchers estimate that there’s less than a 0.01 percent chance that the discrepancy is a statistical fluke. A simple fix would be welcome, but don’t count on it coming anytime soon.

Lead image: The Cepheid variable star RS Puppis as seen by the Hubble Space Telescope. These types of stars are used along with supernovas to measure the expansion rate of the universe. Credit: Hubble Space Telescope