Artificial intelligence sees things we don’t—often to its detriment. While machines have gotten incredibly good at recognizing images, it’s still easy to fool them. Simply add a tiny amount of noise to the input images, undetectable to the human eye, and the AI suddenly classifies school buses, dogs or buildings as completely different objects, like ostriches.

In a paper posted online in June 2021, Nicolas Papernot of the University of Toronto and his colleagues studied different kinds of machine learning models that process language and found a way to fool them by meddling with their input text in a process invisible to humans. The hidden instructions are only seen by the computer when it reads the code behind the text to map the letters to bytes in its memory. Papernot’s team showed that even tiny additions, like single characters that encode for white space, can wreak havoc on the model’s understanding of the text. And these mix-ups have consequences for human users, too—in one example, a single character caused the algorithm to output a sentence telling the user to send money to an incorrect bank account.

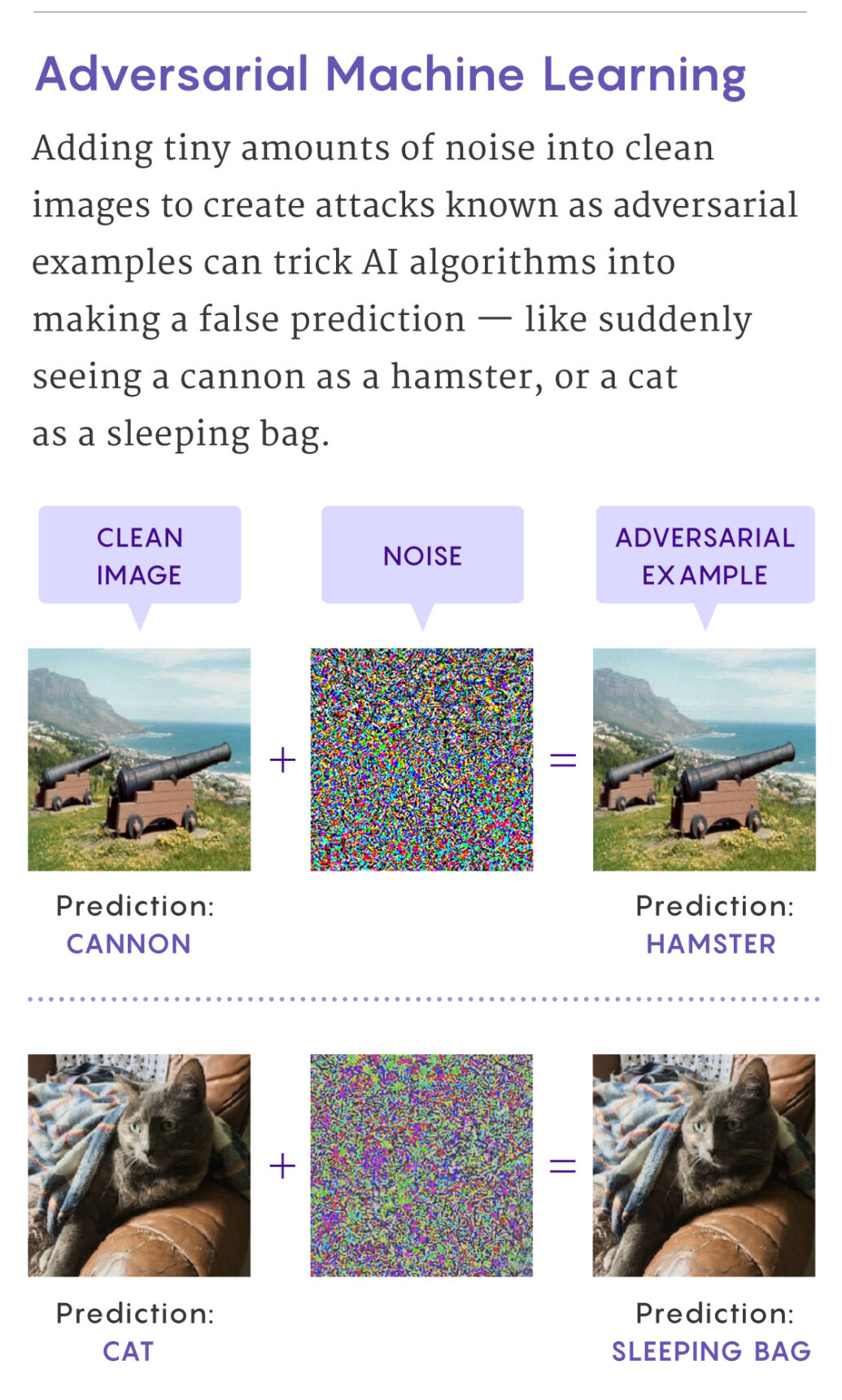

These acts of deception are a type of attack known as adversarial examples, intentional changes to an input designed to deceive an algorithm and cause it to make a mistake. This vulnerability achieved prominence in AI research in 2013 when researchers deceived a deep neural network, a machine learning model with many layers of artificial “neurons” that perform computations.

For now, we have no foolproof solutions against any medium of adversarial examples—images, text or otherwise. But there is hope. For image recognition, researchers can purposely train a deep neural network with adversarial images so that it gets more comfortable seeing them. Unfortunately, this approach, known as adversarial training, only defends well against adversarial examples the model has seen. Plus, it lowers the accuracy of the model on non-adversarial images, and it’s computationally expensive. Recently, the fact that humans are so rarely duped by these same attacks has led some scientists to look for solutions inspired by our own biological vision.

“Evolution has been optimizing many, many organisms for millions of years and has found some pretty interesting and creative solutions,” said Benjamin Evans, a computational neuroscientist at the University of Bristol. “It behooves us to take a peek at those solutions and see if we can reverse-engineer them.”

Focus on the Fovea

The first glaring difference between visual perception in humans and machines starts with the fact that most humans process the world through our eyes, and deep neural networks don’t. We see things most clearly in the middle of our visual field due to the location of our fovea, a tiny pit centered behind the pupil in the back of our eyeballs. There, millions of photoreceptors that sense light are packed together more densely than anywhere else.

“We think we see everything around us, but that’s to a large extent an illusion,” said Tomaso Poggio, a computational neuroscientist at the Massachusetts Institute of Technology and director of the Center for Brains, Minds and Machines.

Machines “see” by analyzing a grid of numbers representing the color and brightness of every pixel in an image. This means they have the same visual acuity across their entire field of vision (or rather, their grid of numbers). Poggio and his collaborators wondered if processing images the way our eyes do—with a clear focus and a blurry boundary—could improve adversarial robustness by lessening the impact of noise in the periphery. They trained deep neural networks with images edited to display high resolution in just one place, mimicking where our eyes might focus, with decreasing resolution expanding outward. Because our eyes move around to fixate on multiple parts of an image, they also included many versions of the same image with different areas of high resolution.

Their results, published in 2020, suggested that models trained with their “foveated” images led to improved performance against adversarial examples without a drop in accuracy. But their models still weren’t as effective against the attacks as adversarial training, the top nonbiological solution. Two postdoctoral researchers in Poggio’s lab, Arturo Deza and Andrzej Banburski, are continuing this line of work by incorporating more complex foveated computations, with a greater emphasis on the computations that occur in our peripheral vision.

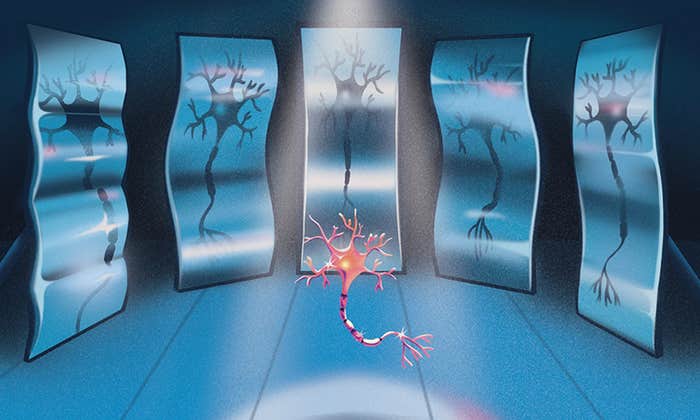

Mirroring Visual Neurons

Of course, light hitting the cells in our eyes is only the first step. Once the electrical signals from photoreceptors exit the back of our eyes, they travel along nerve fibers until they reach the seat of visual processing at the back of our brains. Early breakthroughs about how individual neurons are hierarchically organized there to represent visual features, like the orientation of a line, inspired the computer scientist Kunihiko Fukushima to develop the first convolutional neural network (CNN) in 1980. This is a type of machine learning model now widely used for image processing that has been found to mimic some of the brain activity throughout the visual cortex.

CNNs work by using filters that scan images to extract specific features, like the edges of an object. But the processing that happens in our visual cortex is still vastly different and more complex, and some think that mirroring it more closely could help machines see more like us—including against adversarial examples.

“And just like a biological system, [machines] may need some period of sleep,” says Maksim Bazhenov of the University of California, San Diego.

The labs of James DiCarlo at MIT and Jeffrey Bowers at the University of Bristol have been doing just this. Both labs have added a special filter that approximates how single neurons extract visual information in a region known as the primary visual cortex, and DiCarlo’s lab has gone further by adding features like a noise generator meant to replicate the brain’s noisy neurons, which fire at random times. These additions have made machine vision more humanlike by guarding against an overreliance on texture (a common AI problem) and difficulties with image distortions like blurring.

When DiCarlo’s lab tried out their souped-up CNN against adversarial examples, the results suggested that the modifications lent it a fourfold boost in accuracy on adversarial images, with only a minuscule drop in accuracy on clean images compared to standard CNN models. It also outperformed the adversarial training method, but only for types of adversarial images that weren’t used during training. They found that all of their biologically inspired additions worked together to defend against attacks, with the most important being the random noise their model added to every artificial neuron.

In a conference paper published in November of 2021, DiCarlo’s lab worked with other teams to further study the impact of neural noise. They added random noise to a new artificial neural network, this time inspired by our auditory system. They claim this model also successfully fended off adversarial examples for speech sounds—and again they found that the random noise played a large role. “We still haven’t quite figured out why the noise interacts with the other features,” said Joel Dapello, a doctoral student in DiCarlo’s lab and a co-lead author on the papers. “That’s a pretty open question.”

Machines That Sleep

What our brains do when our eyes are closed and our visual cortex isn’t processing the outside world may be just as important to our biological armor against attacks. Maksim Bazhenov, a computational neuroscientist at the University of California, San Diego, has spent more than two decades studying what happens in our brains while we sleep. Recently, his lab began investigating whether putting algorithms to sleep might fix a host of AI problems, including adversarial examples.

Their idea is simple. Sleep plays a critical role in memory consolidation, which is how our brains turn recent experiences into long-term memories. Researchers like Bazhenov think sleep might also contribute to building and storing our generalized knowledge about the things we encounter every day. If that’s the case, then artificial neural networks that do something similar might also get better at storing generalized knowledge about their subject matter—and become less vulnerable to small additions of noise from adversarial examples.

“Sleep is a phase when the brain actually has time to kind of turn off external input and deal with its internal representations,” said Bazhenov. “And just like a biological system, [machines] may need some period of sleep.”

In a 2020 conference paper led by graduate student Timothy Tadros, Bazhenov’s lab put an artificial neural network through a sleep phase after training it to recognize images. During sleep, the network was no longer forced to update the connections between its neurons according to a common learning method that relies on minimizing error, known as backpropagation. Instead, the network was free to update its connections in an unsupervised manner, mimicking the way our neurons update their connections according to an influential theory called Hebbian plasticity. After sleep, the neural network required more noise to be added to an adversarial example before it could be fooled, compared to neural networks that didn’t sleep. But there was a slight drop in accuracy for non-adversarial images, and for some types of attacks adversarial training was still the stronger defense.

An Uncertain Future

Despite recent progress in developing biologically inspired approaches to protect against adversarial examples, they have a long way to go before being accepted as proven solutions. It may be only a matter of time before another researcher is able to defeat these defenses—an extremely common occurrence in the field of adversarial machine learning.

Not everyone is convinced that biology is even the right place to look.

“I don’t think that we have anything like the degree of understanding or training of our system to know how to create a biologically inspired system which will not be affected adversely by this category of attacks,” said Zico Kolter, a computer scientist at Carnegie Mellon University. Kolter has played a big role in designing nonbiologically inspired defense methods against adversarial examples, and he thinks it’s going to be an incredibly difficult problem to solve.

Kolter predicts that the most successful path forward will involve training neural networks on vastly larger amounts of data—a strategy that attempts to let machines see as much of the world as we do, even if they don’t see it the same way.

Lead image: Samuel Velasco/Quanta Magazine