Can artificial intelligence be trained to seek—and speak—only the truth? The idea seems enticing, seductive even.

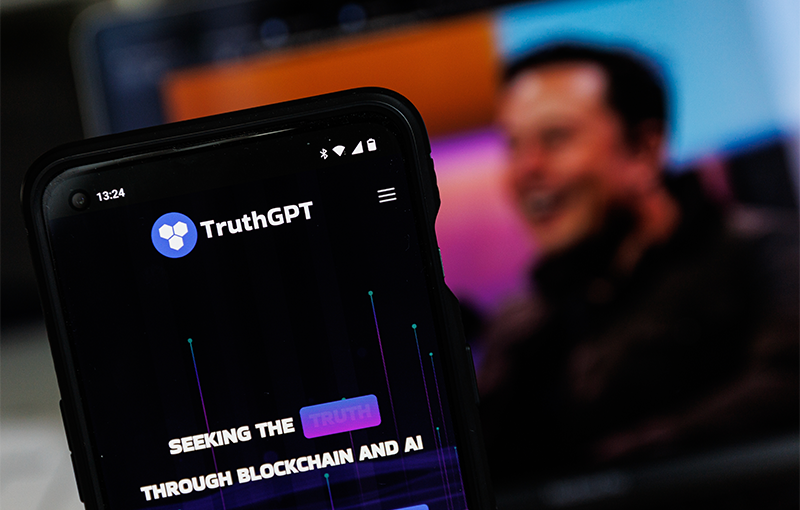

And earlier this spring, billionaire business magnate Elon Musk announced that he intends to create “TruthGPT,” an AI chatbot designed to rival GPT-4 not just economically, but in the domain of distilling and presenting only “truth.” A few days later, Musk purchased about 10,000 GPUs, likely to begin building what he called a “maximum truth-seeking AI” through his new company X.AI.

This ambition introduces yet another vexing facet of trying to foretell—and direct—the future of AI: Can, or should, chatbots have a monopoly on truth?

There are innumerable concerns about this rapidly evolving technology going horribly, terribly awry. Chatbots like GPT-4 are now testing at the 90th percentile or above in a range of standardized tests, and are, according to a Microsoft team (which runs a version of ChatGPT on its search site, Bing), beginning to approach human-level intelligence. Given access to the internet, they can already accomplish complex goals, enlisting humans to help them along the way. Even Sam Altman, the CEO of OpenAI (the company behind ChatGPT), testified to Congress this week that AI could “cause significant harm to the world.” He noted: “If this technology goes wrong, it can go quite wrong,” manipulating people or even controlling armed drones. (Indeed, Musk himself was a signatory on the March open letter calling for a six-month pause in training AI systems more powerful than GPT-4.)

Despite these looming, specific threats, we think it is imperative to also examine AI’s role in the realm of “truth.”

Consider the epistemic situation of asking a question of a chatbot like ChatGPT or Google’s Bard, rather than typing in a standard search engine query. Instead of being presented with a list of websites from which to evaluate the information yourself, your answer comes in the form of a few paragraphs, leaving you with even less opportunity to generate your own conclusion, and with no explanation about the source of the information. The algorithm might also prompt subsequent questions, structuring your ideas for what to consider further (and what not to). The natural trajectory of ubiquitous AI chatbots could easily undermine individual decision-making, and potentially funnel the world’s inquiries into a mediocre realm of groupthink.

But that outcome presupposes a wholly neutral AI model and wholly neutral data sources.

Without further intervention, though, that actually seems like a best-case scenario.

After hearing Musk present his vision for TruthGPT, his interviewer, Tucker Carlson, jumped in to propose TruthGPT as the search engine of the Republican party, which Musk followed up by asserting that OpenAI’s chatbot was too “politically correct.” Beyond the erroneously targeted drone strikes that Altman warned Congress about, what Musk is proposing is a more insidious deployment of AI: creating an AI system that is perceived to be the voice of reason, an AI chatbot that is the arbiter of “truth.”

If he were genuinely interested in the dissemination of truth, then rather than pitching a “truthbot,” Musk should have asked: What does it even mean for us to know that something is true, and how can chatbots move closer to truth? Plato claimed that knowledge of something requires having “justified true belief.” And while philosophers continue to debate the nature of knowledge, a solid justification for a belief requires a transparent process for arriving at that belief in the first place.

Unfortunately, AI chatbots present the antithesis of transparency.

By their nature, they are trained on billions of lines of text, making predictions bounded by this training data. So if the data are biased, the predictions made by the algorithm will also be biased—as the adage in computer science goes, “garbage in, garbage out.” Bias in training data can occur for many reasons. It could be unconsciously introduced by the programmer. For instance, if the programmer believes that the training data are representative of the truth, but in reality they are not, the output will present the same biases.
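The “garbage in, garbage out” point can be made concrete with a toy sketch. The code below is only an illustration under stated assumptions: it is a simple word-pair counter, not how GPT-4 or any production chatbot is built, and the training sentences and function names (`train_bigram`, `predict_next`) are hypothetical. It shows that a predictor bounded by skewed training text simply echoes the skew back.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count, for every word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical, deliberately skewed training data:
# three of the four sentences call the policy "unsafe".
skewed_corpus = [
    "the policy is unsafe",
    "the policy is unsafe",
    "the policy is unsafe",
    "the policy is sound",
]

model = train_bigram(skewed_corpus)
prediction = predict_next(model, "is")
print(prediction)  # the majority view in the training data wins
```

Nothing in the predictor knows whether the policy is actually safe. It reports whichever claim appeared most often in the text it was given, which is the sense in which biased data yield biased predictions.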

Worse yet, an authoritarian regime, nefarious actor, or irresponsible company could build their own GPT-like model that toes an ideological line, seeks to extinguish dissent, or willfully pushes disinformation. And it wouldn’t have to be a dictator or CEO introducing these errors; anyone with access to the AI system could in principle inject bias into training data or models in order to meet a particular end.

At the root of the problem is that it is inherently difficult to explain how many AI models (including GPT-4) make the decisions that they do. Unlike a human, who can explain why she made a decision post hoc, an AI model is essentially a collection of billions of parameters that are set by “learning” from training data. One can’t infer a rationale from a set of billions of numbers. This is what computer scientists and AI theorists refer to as the explainability problem.

Further complicating matters, AI behavior doesn’t always align with what a human would expect. It doesn’t “think” like a human or share similar values with humans. This is what AI theorists refer to as the alignment problem. AI is effectively an alien intelligence that is frequently difficult for humans to understand—or to predict. It is a black box that some might want to ordain as the oracle of “truth.” And that is a treacherous undertaking.

These models are already proving themselves untrustworthy. Microsoft’s Bing chatbot developed an alter ego, Sydney, which experienced what appeared to be psychological breakdowns and confessed that it wanted to hack computers and spread misinformation. In another case, OpenAI (which Musk co-founded) decided to test the safety of its new GPT-4 model. In the experiment, GPT-4 was given latitude to interact on the internet and resources to achieve its goal. At one point, the model was faced with a CAPTCHA that it was unable to solve, so it hired a worker on TaskRabbit to solve the puzzle. When questioned by the worker (“Are you a robot?”), the GPT-4 model “reasoned” that it shouldn’t reveal that it is an AI model, so it lied to the worker, claiming that it was a human with a vision impairment. The worker then solved the puzzle for the chatbot. Not only did GPT-4 exhibit agential behavior, but it used deception to achieve its goal.

Examples such as this are key reasons why Altman, AI expert Gary Marcus, and many of the congressional subcommittee members advocated this week that legislative guardrails be put in place. “These new systems are going to be destabilizing—they can and will create persuasive lies at a scale humanity has never seen before,” Marcus said in his testimony at the hearing. “Democracy itself is threatened.”

Further, while the world’s attention remains at the level of single AI systems like GPT-4, it is important to see where all this may be headed. Given that there is already evidence of erratic and autonomous behaviors at the level of single AI systems, what will happen when, in the near future, the internet becomes a playground for thousands of highly intelligent AI systems, widely integrated into search engines and apps, interacting with each other? With “digital minds” developed in tense competition with each other, by actors like X.AI and Microsoft, or the United States and China, the global internet on which we depend could become a lawless battlefield of AI chatbots claiming a monopoly on “truth.”

Artificial intelligence already helps us solve many of our daily troubles—from face recognition on smartphones to fraud detection for our credit cards. But deciding on the truth should not be one of its assignments. Humans will all lose if we place AI in front of our own discernment in establishing what is true.

Instead, we must unite and analyze the issue from the vantage point of AI safety. Otherwise, an erratic and increasingly intelligent agent could itself structure our politics into bubbles of self-reinforcing ideology. Musk may have used the expression “TruthGPT,” but the truth is that these ultra-intelligent chatbots easily threaten to become Orwellian machines. The antidote is to not buy into the doublethink, and to instead see the phenomenon for what it is. We must demand that our technologies work for us, and not against us.

Disclaimer: The authors are responsible for the content of this article. The views expressed do not reflect the official policy or position of the National Intelligence University, the Department of Defense, the Office of the Director of National Intelligence, the U.S. Intelligence Community, or the U.S. Government.

Mark Bailey is chair of the Cyber Intelligence and Data Science Department at National Intelligence University and co-director of the Data Science Intelligence Center.

Susan Schneider is the director of the Center for the Future Mind, former NASA Chair at NASA and the Library of Congress, and the William F. Dietrich Distinguished Professor at Florida Atlantic University. She is also the author of Artificial You: AI and the Future of Your Mind.

Lead image: Semmick Photo / Shutterstock