Computers and information technologies were once hailed as a revolution in education. Their benefits are undeniable. They can provide students with far more information than a mere textbook. They can make educational resources more flexible, tailored to individual needs, and they can render interactions between students, parents, and teachers fast and convenient. And what would schools have done during the pandemic lockdowns without video conferencing?

The advent of AI chatbots and large language models such as OpenAI’s ChatGPT, launched in November 2022, creates even more opportunities. They can give students practice questions and answers as well as feedback, and assess their work, lightening the load on teachers. Their interactive nature is more motivating to students than the imprecise and often confusing information dumps elicited by Google searches, and they can address specific questions.


But large language models “should worry English teachers,” too, Jennifer Rowsell, professor of digital literacy at the University of Sheffield in England, tells me. ChatGPT can write a decent essay for the lazy student. It doesn’t just find and copy an essay from the web, but constructs it de novo—and, if you wish, will give you another, and another, until you’re happy with it. Some teachers admit that the results are often good enough to get a strong grade. In a New York Times article, one university professor attests to having caught a student who produced a philosophy essay this way—the best in the class. High school humanities teacher Daniel Herman writes in The Atlantic that, “My life—and the lives of thousands of other teachers and professors, tutors and administrators—is about to drastically change.” He thinks that ChatGPT will exact a “heavy toll” on the current system of education.

Schools are already fighting a rearguard action. New York City’s education department plans to ban ChatGPT in its public schools, although that won’t stop students using it at home. Dan Lewer, a social studies teacher at a public school, suggests on TikTok that teachers require students submitting from home also to provide a short video that restates their thesis and evidence: This, Lewer says, should ensure “that they are really learning the material, not just finding something online and turning it in.”

OpenAI’s researchers are themselves working on schemes to “watermark” ChatGPT’s output; for example, by having it select words with a hidden statistical signature. How easy it will be for harried teachers to check a class-load of essays this way is less clear, and the method might be undermined by “translating” ChatGPT’s output by running it through some other language-processing software. In a digital arms race of cheating student against teacher, I wouldn’t count on the teacher being able to stay ahead of the game. Some are already concluding that the out-of-class essay assignment is now dead.
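For readers curious about the mechanics, here is a toy sketch in Python of one kind of statistical watermark discussed in the research literature. It is not OpenAI’s actual scheme, whose details are not described here. It assumes a hypothetical generator that, at each step, picks from a few candidate words and quietly favours those that a secret hash of the preceding word marks as “green.” Unwatermarked text should land on green words about half the time; watermarked text lands there far more often, and a simple z-score exposes the difference. The vocabulary, the four-candidate generator, and the function names are all invented for illustration.

```python
import hashlib
import math
import random

# Hypothetical 50-word vocabulary standing in for a real model's tokens.
VOCAB = [f"word{i}" for i in range(50)]


def is_green(prev_word: str, word: str) -> bool:
    """Secretly assign about half of all (previous word, word) pairs to a
    'green list' by hashing the pair; only someone with the hash can check."""
    digest = hashlib.sha256(f"{prev_word}|{word}".encode("utf-8")).digest()
    return digest[0] % 2 == 0


def generate(vocab, length, watermark, seed=0):
    """Toy generator: at each step draw 4 random candidate words. With
    watermark=True, prefer a green candidate whenever one exists."""
    rng = random.Random(seed)
    words = ["<start>"]
    for _ in range(length):
        candidates = rng.sample(vocab, 4)
        if watermark:
            green = [w for w in candidates if is_green(words[-1], w)]
            words.append(green[0] if green else candidates[0])
        else:
            words.append(candidates[0])
    return words[1:]


def z_score(words):
    """Standard score of the green-word count against the 50% expected
    from unwatermarked text; large positive values suggest a watermark."""
    prev = ["<start>"] + words[:-1]
    n = len(words)
    greens = sum(is_green(p, w) for p, w in zip(prev, words))
    return (greens - 0.5 * n) / math.sqrt(0.25 * n)


if __name__ == "__main__":
    marked = generate(VOCAB, 200, watermark=True)
    plain = generate(VOCAB, 200, watermark=False)
    print(f"watermarked z = {z_score(marked):.1f}")
    print(f"plain z = {z_score(plain):.1f}")
```

The detector needs the same secret hash as the generator, and rewording the text swaps out the word pairs it checks, pulling the score back toward zero: the weakness a “translation” through other software would exploit.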

But the challenge posed by ChatGPT isn’t just about catching cheats. The fact that AI can now produce better essays than many students without a smidgeon of genuine thought or understanding ought to prompt some reflection on what it is that we aim to teach in the first place. Herman says it is no longer obvious to him that teenagers will need to develop the basic skill of writing well. If they don’t, he says, the question for the teacher becomes, “Is this still worth doing?”

Impressive as the algorithmic engineering is, ChatGPT is able to generate more-than-passable essays not because it is so clever but because the route to its goal is so well-defined. Is such a well-defined route even a good thing? Many education experts have long felt a need to change the way English is taught, says Rowsell, but she admits that “teachers are finding it very difficult to make a substantial change from what we’ve known so long. We simply don’t know how to teach otherwise.”

Language AI might force matters to a head. “Science has leapt ahead, and we [in literacy education] don’t know quite how to grapple with it,” says Rowsell. “But we’ve learned that we don’t fight this stuff—let’s understand it and work with it. There’s no reason why classical essay-writing and ChatGPT can’t work together. Maybe this will catalyse the change we have to make within teaching.” If so, it should start with a consideration of what language is for.

Algorithms that can interact using natural language have been around since the earliest days of AI. Computer scientist Joseph Weizenbaum of MIT recounted how, in the 1960s, one of his colleagues ended up in an angry remote exchange with one such program, called Eliza, thinking he was in fact conversing with Weizenbaum himself in a particularly perverse mood.

That such a crude language program as Eliza could fool a user reveals how innately inclined we are to attribute mind where it does not reside. Until recently, language-using algorithms tended to deliver little more than awkward phrases full of non-sequiturs and solecisms. But advances in the technology (the exponential growth in computational power, the appearance of “deep-learning” methods in the mid-2010s, an ever-expanding body of online data to mine) have now produced systems with near-flawless syntax that supply a spooky simulacrum of intelligence. ChatGPT has been hailed as a game changer. It can perform all manner of parlour tricks, like blending sources and genres: a recipe for fish pie in the style of the King James Bible, or a limerick about Albert Einstein and Niels Bohr’s arguments over quantum mechanics.

There are evident dangers in these large language model technologies. We have little experience in dealing with an information resource that so powerfully mimics thought while possessing none. The algorithm’s blandly authoritative tone can be harnessed for almost any use or abuse, auto-generating superficially persuasive screeds of bullshit (or rather, repackaging in an apparently “objective” and better-mannered format the ones that humans have generated).


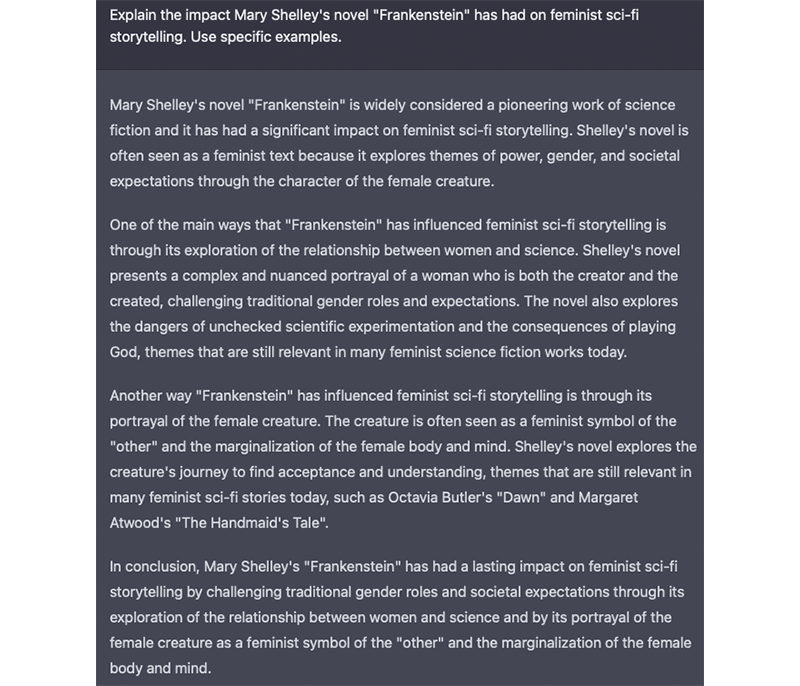

The algorithm is ultimately still rather “lazy,” too. When I gave it the essay assignment of summarizing the plot of Frankenstein from a feminist perspective, ChatGPT did not seem to have consulted the vast body of scholarship on that issue, but instead provided a series of stilted and clichéd tropes barely connected to the novel: It “can be seen as a commentary on the patriarchal society of the time,” and “the ways in which women are often judged and valued based on their appearance.” It would probably say the same about Pride and Prejudice.

Some of these flaws might be avoided by giving the large language models better prompts—although to get a really sharp, informed response, the user might need to know so much that writing the essay would pose no challenge anyway. But at root the shortcomings here reflect the fact that the algorithm is not tracking down the feminist literature on Frankenstein at all, but is simply seeking the words and phrases that have the highest probability of being associated with those in my prompt. Like all deep-learning AI, it mines the available database (here, texts “scraped” from online sources) for correlations and patterns. If you ask it for a love sonnet, you are likely to get more words like “forever,” “heart,” and “embrace” than “screwdriver” (unless you ask for a sonnet about a screwdriver, and yes, I admit I just did that; the result wasn’t pretty). The algorithm has no sense that “love” and “embrace” are semantically related. What is so impressive about ChatGPT is how it is able not just to smooth out the syntax in these word associations but also to create context. I think it’s unlikely that, “You are the screw that keeps me firmly braced,” is a line that has ever appeared in a human-composed sonnet (and for good reason)—but still I’m impressed that you can see what it is driving at. (ChatGPT can do puns, too, although I take the blame for that one.)
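To make the idea of word association concrete, here is a deliberately crude Python sketch that ranks words by how often they share a sentence with a prompt word in a tiny, made-up corpus. Real large language models learn far richer statistics with neural networks trained on vast datasets, so this illustrates the principle of correlation without comprehension, not how ChatGPT actually works. The corpus and the function names are hypothetical.

```python
from collections import Counter
from itertools import combinations

# A tiny, made-up corpus standing in for text "scraped" from online sources.
CORPUS = [
    "my heart will love you forever in a warm embrace",
    "love is a forever promise sealed with an embrace",
    "your heart and my heart embrace in love",
    "tighten the screw with a screwdriver",
    "the screwdriver slipped off the screw",
]


def cooccurrence(corpus):
    """Count how many sentences each unordered pair of distinct words shares."""
    counts = Counter()
    for sentence in corpus:
        words = sorted(set(sentence.split()))
        for a, b in combinations(words, 2):
            counts[(a, b)] += 1
    return counts


def associates(word, counts, top=3):
    """Rank other words by how often they co-occur with `word`.
    The counts carry no notion of what any word means."""
    scores = Counter()
    for (a, b), n in counts.items():
        if a == word:
            scores[b] += n
        elif b == word:
            scores[a] += n
    return [w for w, _ in scores.most_common(top)]


if __name__ == "__main__":
    counts = cooccurrence(CORPUS)
    print("love ->", associates("love", counts))
    print("screwdriver ->", associates("screwdriver", counts, top=2))
```

In this toy corpus “embrace” tops the list for “love” and “screwdriver” never appears, yet nothing in the code knows what either word means; the filler word “the” ranks as highly as “screw” does for “screwdriver.”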

For factual prompts, the texts that emerge from this probabilistic melange generally express consensus truths. This all but guarantees the exclusion of particularly controversial viewpoints, since a view is controversial precisely because it is not the consensus. Ask a large language model who killed JFK and it will name Lee Harvey Oswald. But ChatGPT occasionally invents random falsehoods, too. In its biography of me, it knocked two years off my age (I’m OK with that) and gave me a fictitious earlier career in the pharmaceutical industry (just weird).


If ChatGPT churns out a credible school essay, it’s because we are setting school pupils an equivalent task: Give us the generally agreed facts, with some semblance of coherence. Student essay-writing already tends to be formulaic to an almost algorithmic degree, codified in acronyms: Point-Evidence-Explanation-Link, Point-Evidence-Analysis-Context. Not only are students told exactly what to put where, but they risk having marks deducted if they deviate from the template. Current education rewards an ability to argue along predictable lines. There’s some logic in that, much as art students are sent off to galleries to draw and paint copies of great works, learning the skills without the burdensome and unrealistic demand of having to be original.

But is this all we want? Rowsell says it is hard for teachers or educationalists even to confront the question, since the cogs of education systems typically continue to turn merely on the basis that “this is how we’ve always done it.” A deep dive into the teaching of cursive handwriting, for example, reveals that there is no clear justification for it beyond tradition. But maybe now’s the right time to be asking this difficult question.

My instinct as an occasional educator (in the 1980s I used to teach computer classes in a prison, and I homeschooled my children for a time) is that of the scientist, I suppose: to begin by explaining how a thing works, starting with its parts. “You won’t use it well unless you understand it first!” says my inner voice. But as I listed unfamiliar computer components to my incarcerated pupils, one finally chimed in, “I don’t even know how to turn it on!”

I appreciate that schoolchildren must sometimes feel toward language learning much as my prison class felt toward computers, being told the unfamiliar names of the components (noun, embedded clause, fronted adverbial; CPU, RAM, bits and bytes) while thinking, “But I just want to know how to use it!” But ChatGPT seems to make all that groundwork otiose, much as your average computer user needs to know nothing of coding or shift registers. English writing? There’s an app for that. High school humanities teacher Herman worries that students might be inclined to depend on language AI for all their writing needs, just as many will now consider it a waste of time to learn a foreign language in the age of real-time fluent translation AI.

I realize now that I was teaching prisoners about the inner workings of computers because I loved all that stuff. I suspect few if any of them loved it, too—but they rightly discerned that an ability to use a computer was going to become important in their life. I want my kids to love the way language works and what it can do, too. But they need first to know how to turn it on, so to speak. And the on-switch for language is not the distinction between nouns and verbs or the placement of subclauses, but the more fundamental process of communicating.

When we learn good use of language, we are not simply being trained to conform to a model. Those templates for sentence or essay construction do not follow some law of literature demanding particular arrangements of words, phrases, and arguments. However crude and formulaic they might be, ultimately they exist because they benefit the reader. Reading is a cognitive process with its own dynamics, which the writer can facilitate or hinder. Sometimes all it takes to transform a confusing sentence into a lucid one is the movement of a single word to where it matches the cognitive processing of the reader. There is nothing esoteric about this; good communication is a skill that can be learned like punctuation. (And punctuation itself exists to aid good communication.)


What all this demands is empathy: an ability of the writer to step outside their head and into the reader’s. For factual text, the goal is usually clarity of expression. For fiction, the priority might be elsewhere: even, indeed, to impede instant understanding, not arbitrarily or perversely but in order to deliver a little jolt of surprise and delight when the meaning crystallizes in the reader’s mind. Music does the same with melody and rhythm, and this is partly how it moves and excites us. Shakespeare is famous for his sentence-inversions: a rearrangement of the usual order (“But soft, what light through yonder window breaks?”) to arrest the mind for a moment, perhaps for emphasis, perhaps for the sheer thrill of the lexical puzzle. On the larger scale, much of fiction is the art of controlled disclosure, of revealing information at the right moment and not sooner (or later).

Language is, at its heart, a link between minds. One theory of the origin of language, proposed by linguist Daniel Dor, argues that it arose not for simple communication but for “the instruction of the imagination.” It enabled us to move beyond the blank imperatives and warnings of animal vocalizations, and project the contents of one mind into another.

What does this imply for language AI? Precisely because the algorithm of a large language model does not have a communicative goal—it has no notion at all of what communication is, or of having an audience—it does not show us what language can do, and indeed was invented to do. It would iron out Shakespeare’s quirky acts of linguistic subterfuge. It would fail to capture the rhetorical tools with which a good historian makes her case memorable and persuasive. And it is hard to see how a language model could ever truly innovate, for that is antithetical to what it is designed to do, which is simply to ape, mimic, and, as a statistician would put it, regress to the mean, which tends toward the mind-numbingly drab.

Language use is the opposite of that, and nowhere more so than among young people, whose stylistic invention in speech and digital discourse is enormous and even rather joyous. Progressive educationalists long to tap into the multimodality of the online sphere, but are struggling to figure out how. Crucially, the media-savvy communicative sophistication of Gen Z relies on the assumption of shared concepts, standards, and points of reference, just as much as does the compact and stylized language of a Tang-dynasty poem. If space is made in education for this facet of language use, it may well be immune to colonization by AI.

There’s no denying that large language models raise a host of ethical and legal issues. So did the internet, and so do many other forms of deep-learning AI, such as deep fakes and face recognition. We grapple with these problems and learn to live with them. Some believe that, panic about AI-based essay writing aside, large language models will eventually become just another tool, perhaps situated somewhere between the humdrum utilitarianism of Excel spreadsheets and the creative possibilities of digital photography.

After all, written language is itself often a tool, a means to an end. This doesn’t mean we need to learn to use it only crudely. But it does mean that what the likes of ChatGPT can now offer is not only adequate for certain purposes but might even be valuable. People who struggle with literacy skills are already using ChatGPT to improve their letters of job application or their email correspondence. Some scientists are even using it to burnish their papers before submission. Arguably these AIs can democratize language in the way music software has democratized music-making. For those with limited English-language skills who need to write professionally, the technology could be a great leveller.

And just as electronic calculators freed up students’ time and mental space once monopolized by having to learn logarithms for complicated multiplication, large language models might liberate pupils from having to master the nuances of spelling, syntax, and punctuation so that they can focus on the tasks of constructing a good argument or developing rhythm and variety in their sentences.

The absence of imagination, style, and flair meanwhile makes large language models no threat to fiction writers: “It’s the aesthetic dimension of writing that is really hard for an AI to emulate,” says Rowsell. But that is just what could make them an important new tool.

For example, I suspect that asking students to improve on ChatGPT’s dull output would help them see what makes a story or an essay come alive. Perhaps in much the same way that some musicians are using music-generating AI as a source of copious raw material, large language models might provide kernels of ideas that writers can sift, select, and work on.

Here, as elsewhere, AI holds up a mirror to ourselves, revealing in its shortcomings what cannot be automated and algorithmized: in other words, what constitutes the core of humanity.

Philip Ball is a science writer and author based in London. His most recent book is The Book of Minds.

Lead image: Boboshko-Studio / Shutterstock