A few key data points about Amelia Sloan: She likes to suck her own toes. She wears pink tutus. She doesn’t do interviews with journalists. She was born in Falls Church, Va. on April 25, 2013, at 6:54 p.m., weighing in at 8 pounds, 2 ounces. Amelia entered the world right on schedule, but not all her fellow Tauruses did, a fact that raises other key data points: In the United States, some 500,000 babies are born prematurely every year. At birth, each weighs less than a two-liter soda bottle. They cost the healthcare system an estimated annual $26 billion. More than 330,000 of them don’t survive the first year, and many of the rest suffer life-long health and cognitive problems. And doctors don’t know why.

To unravel the mystery, scientists at the hospital where Amelia was born are combing through yet another dataset: The billions of molecules of her healthy newborn genome compared to the genomes of preemies. Taken together, these are themselves only one small point in a vast constellation of genomic data being collected by medical scientists on a scale impossible only a few years ago. A new cadre of medical researchers believes that examining the full genetic information of as many people as possible will reveal not just the cures for health problems ranging from pre-term birth to cancer and autism, but the predictive insight to prevent them from happening altogether.

Everything about your body, from the basic structure and function of your vital organs to your eye and skin color, is encoded in a unique strand of 3 billion molecules called nucleotides, which come in four flavors represented by the letters A, T, C, and G. In aggregate, the nucleotides spell out a blueprint, your DNA, that is packed identically into each of your trillions of cells. Together, the DNA and the RNA that decodes it so your body can manufacture proteins and other biomolecules make up your genome. Your genome, in turn, is divided into functional chunks called genes. When the blueprint is written properly, you might forget it’s there: You grow four limbs and digest food and produce healthy children and do all the other things our human bodies do. But just as the manuscript of a novel can contain typos that confuse its meaning, misprints in your genome, inherited from your parents or introduced at conception, can give you diseases like diabetes or a predisposition to obesity. For the scientists working with Amelia, finding cures starts with identifying those misprints.

When molecular biologist Joe Vockley, the keeper of Amelia’s genome, started studying genetics 20 years ago, scientists hunted for genetic glitches the way you might use a flashlight to hunt for a missing sock in a dark room: Shine a light over here and over there until you find it. But now Vockley, chief scientific officer at the Inova Translational Medicine Institute (ITMI), a nonprofit within one of the country’s largest networks of hospitals, hopes that massive amounts of data will reveal the roots of disease without endless hit-or-miss testing of suspect genes. Using so-called “big data” is like looking for your sock with the floodlights thrown on. With the right genetic markers identified, clinicians could sample a woman’s genome, predict her chances for a pre-term birth, and then take steps to prevent it.

“We’re trying to get from the practice of medicine to the science of medicine,” Vockley says. “And I think that genomic medicine, with its principles of prediction and prevention, is going to redefine how medicine is practiced in this country.”

But efforts like this have raised the hackles of privacy experts—some of the same folks already up in arms about the collection of millions of phone records and Facebook connections by the National Security Agency. The fear: A future in which your genetic information is as readily available as your Google searches to marketers, police departments, and identity thieves, not to mention health insurance companies and employers looking to lower their risk at your expense. While one of the first things Vockley does with a new genome is strip it of identifiers like a name, there’s no guarantee that the data can or will stay anonymous forever. In January, Yaniv Erlich, a data scientist at MIT, “re-identified the genomes” (legally “hacked” the identities) of nearly 50 people who had participated in studies like Vockley’s by connecting the anonymous genomes to surnames and partial genome data from distant family members publicly available on a family-tree website. “We’re going to enter into this era of ubiquitous genetic information,” Erlich says.

Pre-term birth is just the beginning: Today, ITMI is gathering tens of thousands of genomes from other patients to find the root causes of cancer, diabetes, osteoarthritis, and cardiovascular disease. It’s also running a study that repeatedly samples babies over the first two years of life to see how genes’ functions change over this time. Vockley estimates that altogether, his lab has generated more than 10 percent of all the human genome data in the world. With a new in-house DNA sequencing facility currently under construction, he hopes to boost that figure to 15 percent by the end of next year.

If Vockley succeeds, he could change the lives of countless preemies. But for Amelia and the others, are the potential gains worth the risk of standing as naked, data-wise, as the day they were born?

Riding the Data Wave

We’re well on our way to a future where massive data processing will power not just medical research, but nearly every aspect of society. Viktor Mayer-Schönberger, a data scholar at the University of Oxford’s Oxford Internet Institute, says we are in the midst of a fundamental shift from a culture in which we make inferences about the world based on a small amount of information to one in which sweeping new insights are gleaned by steadily accumulating a virtually limitless amount of data on everything.

To make the point, Mayer-Schönberger looks to the mid-19th century discovery of a cache of salt-crusted logbooks by Midshipman Matthew Fontaine Maury, a bright young naval officer crippled in a stagecoach accident in 1839. Confined to a desk at the Depot of Charts and Instruments in Washington, D.C., Maury noticed that the Navy’s standard routes, navigated mainly by tradition and superstition, were often meandering and nonsensical. Frustrated, he pored over long-ignored logbooks, and was amazed to find countless entries on water and wind conditions in different times and places—data—that in aggregate clearly revealed when and where the most efficient routes would be. By the end of his investigation, Maury had plotted 1.2 million data points (literally by hand) and permanently transformed U.S. military navigation.

Now imagine if Maury had aggregated not just what he found in the logbooks but every single piece of navigation-relevant data, every wind speed and water depth everywhere on Earth. Ever. His results would have been immaculate, but his feather pen was probably not up to the task. The statistical concept of a sample size is only necessary because gathering all the data on, say, voting behavior, was long impossible. Thanks to recent advances in digital storage and processing, that is no longer the case.

The value of collecting all the information, says Mayer-Schönberger, who published an exhaustive treatise entitled Big Data in March, is that “you don’t have to worry about biases or randomization. You don’t have to worry about having a hypothesis, a conclusion, beforehand.” If you look at everything, the landscape will become apparent and patterns will naturally emerge.

In 2009, with the swine flu sweeping the globe, Google got a chance to put this theory to the test. Analysts there found they could predict where outbreaks were about to happen using nothing but search queries. The results proved accurate enough to arm public health officials with advance knowledge of where the disease would crop up next. The predictive power ended up hinging on 45 search terms, including “influenza complication,” “cold/flu remedy,” and “antibiotic medication,” that began appearing in clusters on Google servers. In aggregate, the terms correlated strongly with an impending flu outbreak in the location of the searchers themselves.

While it’s not surprising that you’ll find the flu where a bunch of people are Googling “flu remedy,” the significance of Google’s research was in how it identified these terms out of the 50 million most common search queries in the U.S. Rather than hypothesizing suspect terms, testing for correlation, and slowly building up a list, researchers developed an algorithm (stepwise instructions for a computer to filter data) that looked simultaneously at all queries on any topic and found those that correlated in time and place to public health records on flu outbreaks. The right terms, virtually all of them, found themselves. With the floodlights on, all the guesswork was gone.
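
For readers who want to see the idea in miniature, here is a rough sketch of that kind of screening. It is an illustration only, not Google's actual method: the weekly numbers, the two non-flu queries, and the function names `pearson` and `rank_queries` are all invented for the example, and a plain Pearson correlation stands in for whatever statistics the real system used.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation between two equal-length series."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)

def rank_queries(query_counts, flu_cases, top_n=2):
    """Score every query against the flu records, then keep the best matches."""
    scores = {q: pearson(counts, flu_cases) for q, counts in query_counts.items()}
    return sorted(scores, key=scores.get, reverse=True)[:top_n]

# Hypothetical weekly figures for one region (made-up numbers).
flu_cases = [10, 20, 40, 80, 60]
query_counts = {
    "cold/flu remedy": [100, 210, 390, 820, 590],
    "influenza complication": [5, 9, 22, 41, 30],
    "pizza delivery": [300, 310, 290, 305, 300],
    "movie times": [80, 60, 75, 50, 70],
}

top_terms = rank_queries(query_counts, flu_cases)
```

No one tells the code which queries are about the flu. Every query is scored the same way, and the flu-related ones rise to the top because their volume tracks the case counts.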

Data in the Age of Genomics

None of this has been lost on Vockley and his team. They are sifting through nearly 1,000 newborn genomes, including Amelia’s, from deep within Inova’s Fairfax Hospital, a sprawling brick-and-concrete labyrinth in Falls Church, Va., on Washington, D.C.’s suburban fringe. Here, doctors treat cancer, replace joints, and transplant hearts (including, last year, Dick Cheney’s heart).

Vockley grew up in hospitals like this. Some of his earliest memories from a childhood on the outskirts of Pittsburgh are of visiting family members who had to undergo repeated surgeries to remove painful bone growths, which can turn into fatal cancers, caused by a genetic disorder called multiple cartilaginous exostoses. While Vockley didn’t inherit the disease, it has run through generations of his family. So even as a child, Vockley understood that a family’s misfortune can be caused by mysterious malfunctions deep within their cells.

“It’s the hard way to learn about genetics,” he says.

The experience lit a spark, leading him to a postdoctoral fellowship and clinical genetics residency at UCLA. By the early 1990s, he’d landed a job at SmithKline Beecham (now GlaxoSmithKline, the world’s tenth-largest pharmaceutical giant by revenue), where he searched a small database of partial genomes for genes linked to cancer. The first complete human genome wouldn’t be sequenced until 2003, but Vockley already understood the potential. The more data he had, the more he would find.

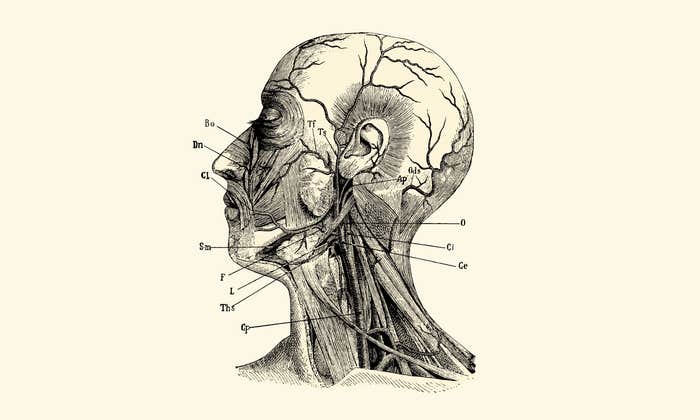

By the time he arrived at Inova in 2010, whole genome sequencing technology had truly arrived. To convert a patient’s blood sample to a digital file, lab technicians remove white blood cells and chemically melt away everything but the DNA and RNA, the genome itself. They break this into pieces and affix lab-generated non-human DNA to each end. This DNA binds to complementary DNA on a glass plate, locking the human sample in place. Then they use a polymerase, the same enzyme that makes copies of your genome every time one of your cells divides, and churn out an exact replica. The polymerase is modified to add a fluorescent marker onto each nucleotide it copies, so that each new A, T, C, and G is color-coded. The colors are picked up by a super-high-resolution camera, and a genome data file is born. This process is repeated up to 30 times for each different sample, to correct for mistakes or misreads.

When Vockley was at SmithKline Beecham, sequencing just 10,000 nucleotides—only 0.00033 percent of a full genome—took 18 hours. Today, sequencing machines can get through 45 billion nucleotides (about half of what’s needed for one sample, given the repeated testing) in the same amount of time, thanks to engineering advances that make it possible to process many samples at once. As efficiency has increased, the cost has dropped by orders of magnitude: Sequencing one full human genome cost around $100 million in 2001; today the price tag is less than $10,000.
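
The figures in that comparison can be checked with a few lines of arithmetic. This is only a back-of-the-envelope sketch using the numbers quoted in this article (a 3-billion-nucleotide genome, up to 30 passes per sample); the variable names are made up for the example.

```python
GENOME_SIZE = 3_000_000_000   # nucleotides in one genome
PASSES_PER_SAMPLE = 30        # upper bound on repeat reads per sample

early_run = 10_000            # nucleotides per 18-hour run in the early 1990s
modern_run = 45_000_000_000   # nucleotides per 18-hour run today

# Share of a single genome covered by one early run, in percent.
share_of_genome_pct = early_run / GENOME_SIZE * 100

# Share of one fully repeated sample covered by one modern run.
share_of_sample = modern_run / (GENOME_SIZE * PASSES_PER_SAMPLE)

# How many times more nucleotides a run handles now, and how far cost fell.
speedup = modern_run / early_run
cost_drop = 100_000_000 / 10_000
```

Under those assumptions, one early run covered about 0.00033 percent of a genome, one modern run covers half of a 30-pass sample, and throughput per run is 4.5 million times higher.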

With the technology in place, Vockley found a community of scientists at Inova keen to apply big-data analysis to medical problems. He also found a lot of babies—around 10,000 are born there every year, making it one of the country’s busiest neonatal facilities, and the opportunity he had been waiting for. In Inova’s home state, Virginia, around 12 percent of babies are born prematurely every year, an approximate microcosm of the nationwide average. Pre-term birth has been a thorny disease for decades, Vockley says; the incidence rate hasn’t budged in nearly 30 years, even as prenatal care has improved by leaps and bounds.

“Everything that people are doing, it’s not having an impact,” he says. “So the question is, can we come up with something in the world of genomics that will change the outcome?”

Preventing Preemies

By 2010, Vockley and his team had devised a plan to dig up the genetic roots of pre-term birth. He would amass thousands of genomes from mothers, fathers, and babies and find patterns the same way Google Flu Trends found its predictive search terms: by looking across all the data at once.

Two years ago his team began to roam the hospital’s maternity ward looking for pregnant women willing to divulge not only their own genomes, but their baby’s as well. Technicians then collected blood and saliva samples from participating mothers and fathers and, within a day or two after birth, the babies, from which to sequence the genomes.

Vockley now has 2,710 genomes in the study, including 881 each of mothers and fathers and 948 babies, and is still collecting samples from any willing preemies who come along. The DNA alone comprises a staggering amount of data: Three billion nucleotides times 2,710 participants equals 8.1 trillion A, C, T, and Gs.
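
As a quick check on that tally, using only the counts given above (the variable names are invented for the example):

```python
NUCLEOTIDES_PER_GENOME = 3_000_000_000

mothers = 881
fathers = 881
babies = 948

participants = mothers + fathers + babies
total_nucleotides = NUCLEOTIDES_PER_GENOME * participants
```

The sum comes to 2,710 participants and 8.13 trillion nucleotides, which rounds to the 8.1 trillion cited.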

Compare any two humans, and the vast majority of their DNA sequence will be the same; we are, after all, the same species. But roughly 10,000 multi-letter chunks of the 3 billion-letter DNA string differ from person to person, mostly based on ancestry (two white guys from upstate New York—the demographic of the first human genomes ever sequenced—will have more in common with each other than they would with a woman from Zimbabwe, for example). These divergent chunks are known as “variants,” and Vockley’s goal is to tease out the disease-causing minority from the benign majority.

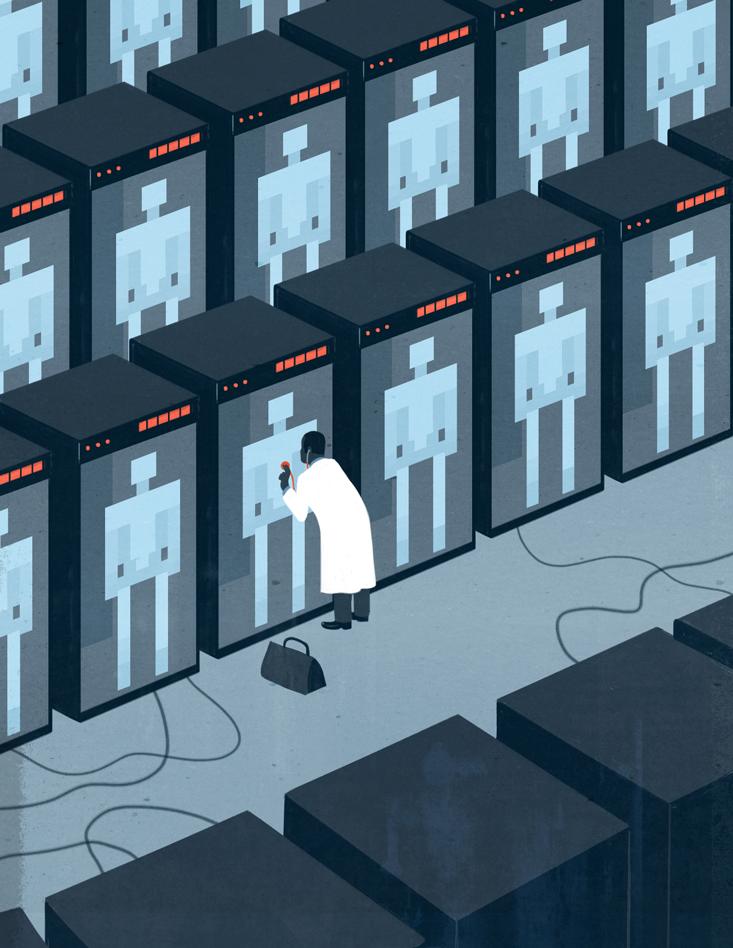

To do this, he employs a team of 40 bioinformaticists, a specialized breed of software engineer with training in computer programming and biology, who start by dropping every new genome in the pre-term birth study into one of two digital buckets: “diseased” (pre-term), and “non-diseased” (full-term). Then their job is to chip away at the staggering pile of genetic data to carve out variants that are strongly associated with the diseased population but not the healthy one.

They start by writing an algorithm that stacks all 2,710 genomes atop one another and looks at them all simultaneously. That allows the computer to filter out sequences that are the same in everyone. This is where the benefit of a large dataset comes into play: If there were only a handful of genomes to work with, any similarities or differences between them could be the product of chance. But if the exact same sequence appears in all 2,710 genomes, there is a stronger likelihood that it is something common to all humans, and therefore not a factor in the disease.

The next step is to filter out ancestral variations, differences between a Parisian, say, and a Zimbabwean. Here, Vockley took advantage of the ethnic diversity of the D.C. metro area; participants in the pre-term birth study hail from 77 different countries, making it possible to account for and filter out many of the ancestry-based differences.

“All of a sudden you go from 10,000 variants” that could relate to any number of differences between participants, says Vockley, “to 100” that are much more likely to relate to the disease.

At the same time, different algorithms search through the genomes for commonalities within each bucket, variants shared by all pre-term families but not found in any full-term families. Pinning down variants that directly cause pre-term birth is only part of the challenge: Vockley is also interested in variants correlated with the disease; these correlations may not cause pre-term birth directly, but could serve as red flags to watch that pregnancy with a special level of care. Altogether, this process of elimination has yielded 20 genomic variants that Vockley believes play a role in pre-term birth, a result he plans to publish in the peer-reviewed journal Nature Genetics this winter. These include variants that impact physical factors like the development of the placenta and the length of the mother’s cervix, as well as biochemical imbalances between the mother and her fetus. If a woman tests positive for any combination of these variants, it could signal an increase in her risk for pre-term birth.
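
The process of elimination can be sketched in a few lines. This is a toy illustration, not ITMI's software: it assumes each genome has already been reduced to a set of variant labels, the labels and the function name `candidate_variants` are hypothetical, and it skips the ancestry filtering step entirely.

```python
def candidate_variants(preterm, fullterm):
    """Each argument is a list of sets of variant labels, one set per genome."""
    # Step 1: drop anything present in every genome. It is common to all
    # participants, so it cannot separate the two buckets.
    shared_by_all = set.intersection(*preterm, *fullterm)
    preterm = [genome - shared_by_all for genome in preterm]
    fullterm = [genome - shared_by_all for genome in fullterm]

    # Step 2: keep variants found in every pre-term genome
    # and in no full-term genome.
    in_every_preterm = set.intersection(*preterm)
    in_any_fullterm = set.union(*fullterm)
    return in_every_preterm - in_any_fullterm

# Hypothetical buckets with made-up variant labels.
preterm = [{"v1", "v2", "v7"}, {"v1", "v2", "v9"}]
fullterm = [{"v1", "v3"}, {"v1", "v7"}]

suspects = candidate_variants(preterm, fullterm)
```

Here `v1` is discarded because everyone carries it, `v7` because a full-term genome carries it too, and `v9` because only one pre-term genome has it, leaving `v2` as the lone suspect. Real data is rarely this clean, which is one reason correlated variants matter as well as all-or-nothing ones.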

With their predictive power, these findings suggest the possibility of a massive shift from today’s practice, which relies mainly on guesswork. Doctors empowered by the data and the story it tells could prescribe custom treatment and greatly increase the odds of a safe and successful delivery. New medicines could be developed to correct imbalances in advance, and delivery nurses alerted to risk could prepare for complications rather than scrambling to accommodate them at the last minute.

“Reactive medicine is what we currently have,” Vockley says. “You get sick, we treat you. The goal here is to keep you from getting sick.”

Clues to Cancer

One of the world’s most advanced data mining projects applies this same kind of analysis to cancer. Ilya Shmulevich, a lead genomicist who directs a Genome Data Analysis Center at the National Institutes of Health’s The Cancer Genome Atlas, says the project was born out of a shared frustration among cancer researchers at being forced, by a dearth of data, to study cancer one defective gene at a time, even while suspecting that the disease is actually the result of many genomic malfunctions, all happening at once.

“In order to understand where that system is broken in cancer,” he says, “we have to measure everything about that system.”

Over the past several years, the NIH team has derived complete genomic data from 20 different types of cancers from 10,000 cancer patients across the world. In order to see what has caused the disease, the scientists have sequenced the genomes found in the patients’ healthy cells and the skewed genomes of their tumors as well. Already, results are rolling in. In mid-October, scientists at Washington University’s School of Medicine in St. Louis worked with The Cancer Genome Atlas data to uncover 127 genes that are commonly mutated across 12 major cancer types. The finding sets the stage for a single test that could catch more cancers earlier on. Such research also paves the way for targeted drugs designed to attack tumors based on their unique molecular traits.

And sequencing genes, which stay the same throughout life, is only the first step in predicting and preventing genetic disease. Also important: RNA molecules that read the genes’ DNA blueprint, creating functional proteins that do everything from forming tissue to fighting bacterial disease. Over time, these translational molecules change in response to environmental conditions like diet and stress. Sampling the same person today and then again a year from now can show a very different picture. Shmulevich sees great potential in comparing you to yourself as you go from a state of health to a state of disease. Big genomic data could reveal previously unseen patterns in the behavior of cells just as large-scale traffic data can help your GPS navigator find the most efficient route home. Using the data, doctors could tell that you’re “coming down with” cancer long before any symptoms appear.

Big Data Future

Data aficionados like Shmulevich say we’re close to a future where getting whole genome information for every patient who walks into a clinic will be cheap, fast, and easy; a gaggle of biotech startups are racing toward the first $100 genome, which could be only a few years away. For Vockley, this will afford an unprecedented strategic advantage to physicians fighting disease, one that obviates any need to take shots in the dark. And unlike small statistical samples that carefully select data to answer a pre-defined question, large-scale data collection faces no limit in what it can reveal; the same genomic data being collected today by ITMI and The Cancer Genome Atlas could be used tomorrow to study other diseases, like diabetes or obesity.

But big data’s long shelf life also exposes it to unforeseen risks: Unscrupulous players could use it to jack up healthcare premiums before you develop a disease, deny credit before you’ve ever defaulted on a loan, or, in Mayer-Schönberger’s personal favorite apocalyptic scenario, punish “criminals” before they ever commit a crime, all based on your big data profile. Think Minority Report, but with prognostications issued by sheer data instead of freaky floating clairvoyants. In other words, the real risk is not that the NSA will know you made a long-distance call to your boyfriend during work, per se, but that you might never be hired in the first place because people with your data profile are likely to make such a call.

Adding genome data into the mix of what’s out there about you increases the risk that someday it could be used for a purpose other than the original research. With big data, “it isn’t that we as human beings necessarily become more naked and more surveilled,” Mayer-Schönberger says. “We as a community of humans become more naked. It’s the interactions, the dynamics in society that will become exposed.”

Amelia Sloan became a citizen of the big data age on her second day of life. Still in the hospital, she gave up samples of blood and saliva for the pre-term birth study, which her mother, Holly, had volunteered for. Holly had good reason to sign on: Herself a delivery nurse for five years in this same hospital, she had been on deck for dozens of pre-term births, and had too often watched what should have been a joyous family occasion turn funereal.

“In the very beginning it is so scary,” she says, “because they come out and they’re teensy weensy, and there’s all the equipment, and then all of the people, and you’re wondering, ‘How in the world is that little baby gonna make it?’ ”

Holly wanted to stop asking herself that question. So she, her new baby, and a few family members offered their genomes to Vockley’s study, with the promise that the data would be made anonymous before being shared with other researchers. But that could be wishful thinking. In fact, it might already be too late to protect your genome from prying eyes. As MIT’s Erlich showed, the comfort that participants might draw from having their genomes “anonymized” is diminished when that data is placed in the context of all the other data that is out there about them.

Erlich started his career being paid by banks to hack into their systems, looking for security weaknesses. The experience left him skeptical of how secure any dataset can really be, so late last year he decided to test the locks on genome data. He took 10 full, supposedly anonymized genomes from public research databases and matched them to partial DNA snippets of Y chromosomes people had submitted, along with their surnames, to a commercial genetic family-tree reconstruction website. Because Y chromosomes transmit from father to son like surnames, making a match didn’t require the same individual to be in both sets—it was enough to infer the anonymous genomes’ surname, then narrow down to an individual using age and state-of-residency information that wasn’t legally considered identifying in the research database. Erlich reconstructed nearly 50 identities, and the process, he says, was “much easier than what has been estimated.”
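
The two-step logic is simple enough to sketch with toy data. What follows is not Erlich's code or data: every marker value, surname, and record is invented, the function name `reidentify` is hypothetical, and exact matching stands in for the statistical matching a real analysis would need.

```python
def reidentify(anon_genome, genealogy_db, public_records):
    """Return names consistent with an 'anonymous' genome's metadata."""
    # Step 1: infer a surname from Y-chromosome markers that a relative
    # (not necessarily the same person) posted alongside the family name.
    surname = genealogy_db.get(anon_genome["y_markers"])
    if surname is None:
        return []
    # Step 2: narrow the surname down using age and state of residence.
    return [
        person["name"]
        for person in public_records
        if person["surname"] == surname
        and person["age"] == anon_genome["age"]
        and person["state"] == anon_genome["state"]
    ]

# Invented example data.
genealogy_db = {(12, 24, 15): "Example", (13, 22, 14): "Sample"}
public_records = [
    {"name": "Pat Example", "surname": "Example", "age": 41, "state": "VA"},
    {"name": "Sam Example", "surname": "Example", "age": 67, "state": "VA"},
    {"name": "Lee Sample", "surname": "Sample", "age": 41, "state": "VA"},
]
anon_genome = {"y_markers": (12, 24, 15), "age": 41, "state": "VA"}

matches = reidentify(anon_genome, genealogy_db, public_records)
```

Neither dataset names the donor on its own. The match appears only when the two are joined, which is the heart of the privacy worry.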

Similar results could be achieved by combining research genomes with any other genomic data source, like another medical study or DNA collected for a police investigation or parenthood test. Beyond that, any number of combinations is possible: Genome data could be combined with, say, Amazon purchasing habits, to target marketing campaigns toward people with a certain genetic profile or disease. All it would take is the accidental or intentional release of genomic data through a snafu (Netflix faced a $5 billion suit in 2009 when it released supposedly anonymous movie review data that was quickly re-identified by two University of Texas researchers) or a Wikileaks-style vigilante data dump. And since so much genomic data is shared by family members, your sister taking part in a leaked genome study would reveal secrets about you as well.

In the big data age, Mayer-Schönberger says, “If one person opts to have his genome sequenced, in essence he is compromising the genome information of all his relatives, too,” which opens up an ethical crevasse for anyone thinking of donating their genome to science, or of consenting on behalf of their newborn baby.

Vockley and Shmulevich both point to the Health Insurance Portability and Accountability Act (HIPAA), the standard of medical privacy since President Bill Clinton signed it into law in 1996, as an adequate safeguard against nonconsensual sharing of medical data. But information and privacy law experts like Katherine J. Strandburg of New York University’s School of Law think that big data, from genomes to Facebook likes, requires a new kind of legal protection that looks beyond any guarantees that can be made at the time of data collection (such as the traditional notice-and-consent contract you agree to when signing up for, say, an email provider) and instead specifically prohibits future misuse.

“People don’t know what’s going to be done with the information that’s collected about them,” she says. “So we really need more direct regulation, where certain kinds of practices, certain uses of information, are simply not allowed.”

That could be why, last fall, the Presidential Commission for the Study of Bioethical Issues, created by President Barack Obama in 2009 to advise him on ethical complications arising from advances in biomedical technology, sent a memo to the president urging the creation of state and federal laws that expand privacy protection for genomic data regardless of its origin. Fortunately for Holly and Amelia, one of the more progressive laws on this front is the Genetic Information Nondiscrimination Act (GINA), signed by President George W. Bush in 2008, which specifically forbids discrimination by insurers or employers on the basis of genetic information. Unlike HIPAA, GINA works on the assumption that all data could someday wind up in the public sphere, and so prohibits abuse itself rather than the sharing of data.

For Vockley, the promise of genomic medicine outweighs the risk. He sees a not-so-distant future where genomic data will allow doctors to spend more time warding off the disease you’ll probably get than beating back the one you already have. “What would a hospital look like,” he wonders, “if everybody had a prediction, instead of having a disease?”

Because technology always outpaces regulation, how much data to share is still a decision only you, or maybe your mom, can make.

“I thought it would be cool to get my genome mapped,” Holly Sloan says. “Ask me again in 20 years if I still think it was a good idea.”

Tim McDonnell is an associate producer at Mother Jones magazine, where he covers environmental science and policy.