On October 23, Google researchers officially made computing history. Or not, depending on whom you ask.

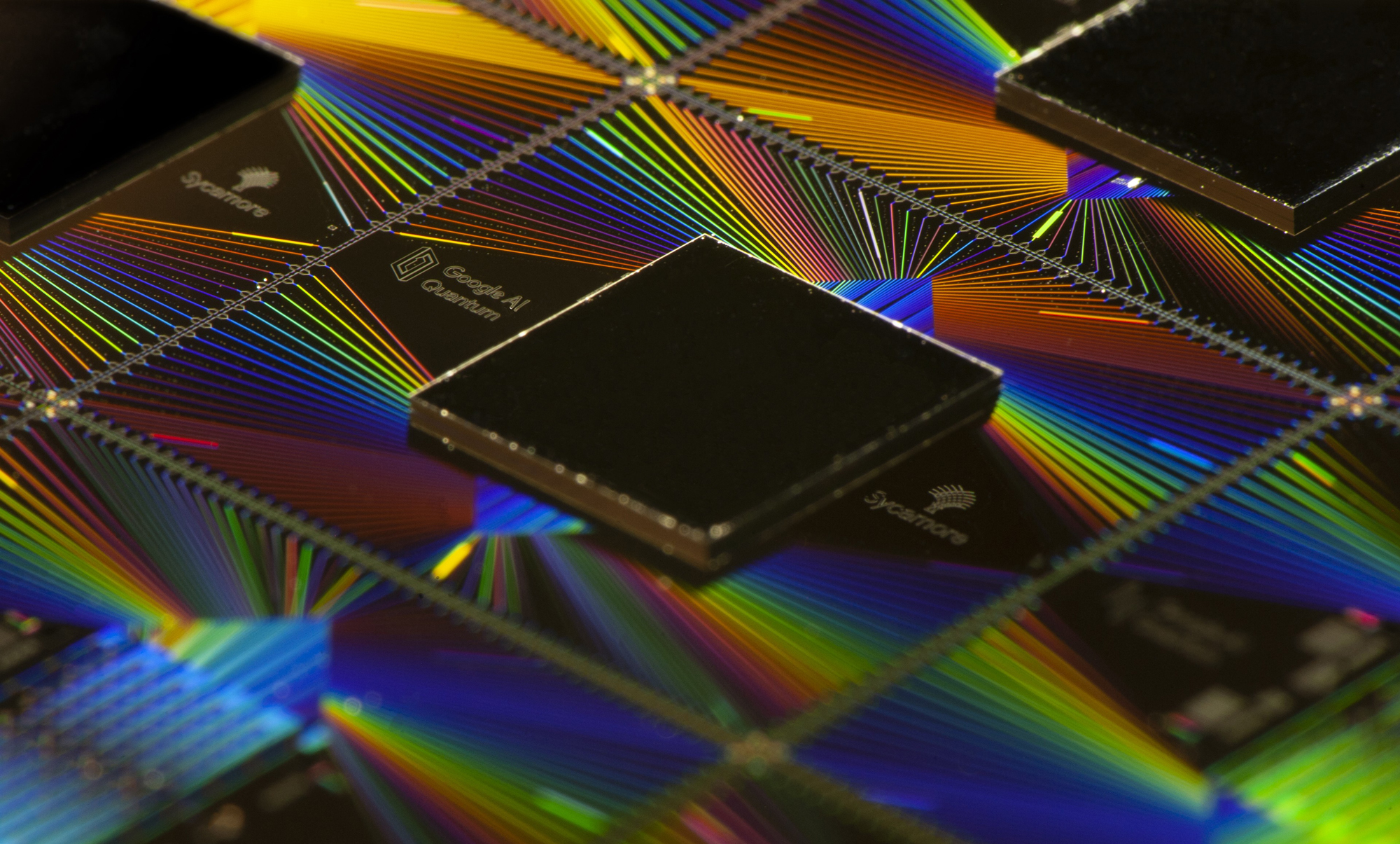

The tech giant announced it had reached a long-anticipated milestone known as “quantum supremacy”—a watershed moment in which a quantum computer executes a calculation that no ordinary computer can match. In a related paper in Nature, Google described just such a feat performed on their state-of-the-art quantum machine, code named “Sycamore.” While quantum computers are not yet at a point where they can do useful things, this result demonstrates that they have an inherent advantage over ordinary computers for some tasks.

Yet in an eleventh-hour objection, Google’s chief quantum-computing rival asserted that the quantum supremacy threshold has not yet been crossed. In a paper posted online on October 21, IBM provided evidence that the world’s most powerful supercomputer can nearly keep pace with Google’s new quantum machine. As a result, IBM argued that Google’s claim should be received “with a large dose of skepticism.”

So why all the confusion? Aren’t major milestones supposed to be big, unambiguous achievements? The episode reminds us that not all scientific revolutions arrive as a thunderclap—and that quantum supremacy in particular involves more nuance than fits in a headline.

Quantum computers have been under development for decades. While ordinary, or classical, computers perform calculations using bits—strings of 1s and 0s—quantum computers encode information using quantum bits, or qubits, that behave according to the strange rules of quantum mechanics. Quantum computers aim to harness those features to rapidly perform calculations far beyond the capacity of any ordinary computer. But for years, quantum computers struggled to match the computing power of a handheld calculator.

In 2012, John Preskill, a theoretical physicist at the California Institute of Technology, coined the phrase “quantum supremacy” to describe the moment when a quantum computer finally surpasses even the best supercomputer. The term caught on, but experts came to hold different ideas about what it means.

And that’s how you end up in a situation where Google says it has achieved quantum supremacy, but IBM says it hasn’t.

Before explaining what quantum supremacy means, it’s worth clarifying what it doesn’t mean: the moment a quantum computer performs a calculation that’s impossible for a classical computer. This is because a classical computer can, in fact, perform any calculation a quantum computer can perform — eventually.

“Given enough time … classical computers and quantum computers can solve the same problems,” said Thomas Wong of Creighton University.

Instead, most experts interpret quantum supremacy to mean the moment a quantum computer performs a calculation that, for all practical purposes, a classical computer can’t match. This is the crux of the disagreement between Google and IBM, because “practical” is a fuzzy concept.

In their Nature paper, Google claims that their Sycamore processor took 200 seconds to perform a calculation that the world’s best supercomputer—which happens to be IBM’s Summit machine—would need 10,000 years to match. That’s not a practical time frame. But IBM now argues that Summit, which fills an area the size of two basketball courts at the Oak Ridge National Laboratory in Tennessee, could perform the calculation in 2.5 days.

Google stands by their 10,000 year estimate, though several computer experts interviewed for this article said IBM is probably right on that point. “IBM’s claim looks plausible to me,” emailed Scott Aaronson of the University of Texas, Austin.

So assuming they’re right, is 2.5 days a practical amount of time? Maybe it is for some tasks, but certainly not for others. For that reason, when computer scientists talk about quantum supremacy, they usually have a more precise idea in mind.

Computer scientists distinguish between programs that run in “fast” polynomial time and “slow” exponential time. Fast programs remain fast even when you ask them to chew on a really big number. Slow programs grind down exponentially quickly as the size of the problem you’re asking them to solve gets bigger.

In their recent paper, Google demonstrated that their 53-qubit quantum computer performs a certain specialized computation (called “random circuit sampling”—see our recent explainer for more details) in fast polynomial time. Meanwhile, there’s no evidence that any classical computer can perform the same task in anything better than slow exponential time. That’s far more important than the time frame involved, said William Fefferman of the University of Chicago, whether it’s 2.5 days or 10,000 years.

“The actual time estimate is not very important,” Fefferman said. “I don’t think that [IBM’s paper] should invalidate any of the core claims Google is making, other than the 10,000-year estimate.”

What matters is that Google’s machine is solving a computational problem in a fundamentally different way than a classical computer can. This difference means that every time its quantum computer grows by even a single qubit, a classical computer will have to double in size to keep pace. By the time a quantum computer gets to 70 qubits—likely by the early 2020s—a classical supercomputer would need to occupy the area of a city to keep up.

Aaronson—borrowing an analogy from a friend—said the relationship between classical and quantum computers following Google’s announcement is a lot like the relationship in the 1990s between chess champion Garry Kasparov and IBM’s Deep Blue supercomputer. Kasparov could keep up for a bit, but it was clear he was soon going to be hopelessly outstripped by his algorithmic foe.

“Kasparov can make a valiant stand during a ‘transitional era’ that lasts for maybe a year or two,” Aaronson said. “But the fundamentals of the situation are that he’s toast.”