Reprinted with permission from Quanta Magazine’s Abstractions blog.

When he talks about where his fields of neuroscience and neuropsychology have taken a wrong turn, David Poeppel of New York University doesn’t mince words. “There’s an orgy of data but very little understanding,” he said to a packed room at the American Association for the Advancement of Science annual meeting in February. He decried the “epistemological sterility” of experiments that do piecework measurements of the brain’s wiring in the laboratory but are divorced from any guiding theories about behaviors and psychological phenomena in the natural world. It’s delusional, he said, to think that simply adding up those pieces will eventually yield a meaningful picture of complex thought.

He pointed to the example of Caenorhabditis elegans, the roundworm that is one of the most studied lab animals. “Here’s an organism that we literally know inside out,” he said, because researchers have identified every one of its 302 neurons, mapped all of their connections and sequenced the worm’s full genome. “But we have no satisfying model for the behavior of C. elegans,” he said. “We’re missing something.”

Poeppel is more than a gadfly attacking the status quo: Recently, his laboratory used real-world behavior to guide the design of a brain-activity study that led to a surprising discovery in the neuroscience of speech.

Critiques like Poeppel’s go back decades. In the 1970s, the influential computational neuroscientist David Marr argued that brains and other information-processing systems needed to be studied in terms of the specific problems they face and the solutions they find (what he called a computational level of analysis) to explain why they behave as they do. Looking only at the procedures the systems use (an algorithmic analysis) or at how they physically carry those procedures out (an implementational analysis) is not enough. As Marr wrote in his posthumously published book, Vision: A Computational Investigation into the Human Representation and Processing of Visual Information, “… trying to understand perception by understanding neurons is like trying to understand a bird’s flight by understanding only feathers. It cannot be done.”

Poeppel and his co-authors carried on this tradition in a paper that appeared in Neuron last year. In it, they review ways in which overreliance on the “compelling” tools for manipulating and measuring the brain can lead scientists astray. Many types of experiments, for example, try to map specific patterns of neural activity to specific behaviors—by showing, say, that when a rat is choosing which way to run in a maze, neurons fire more often in a certain area of the brain. But those experiments could easily overlook what’s happening in the rest of the brain when the rat is making that choice, which might be just as relevant. Or they could miss that the neurons fire in the same way when the rat is stressed, so maybe it has nothing to do with making a choice. Worst of all, the experiment could ultimately be meaningless if the studied behavior doesn’t accurately reflect anything that happens naturally: A rat navigating a laboratory maze may be in a completely different mental state than one squirming through holes in the wild, so generalizing from the results is risky. Good experimental designs can go only so far to remedy these problems.

The common rebuttal to his criticism is that neuroscience owes its huge advances largely to the kinds of studies he faults. Poeppel acknowledges this but maintains that neuroscience would know more about complex cognitive and emotional phenomena (as opposed to neural and genomic minutiae) if research more often started with a systematic analysis of the goals behind relevant behaviors, instead of jumping straight to manipulating the neurons that produce them. At the very least, that analysis could help steer research in productive directions.

That is what Poeppel and M. Florencia Assaneo, a postdoc in his laboratory, accomplished recently, as they described in a paper in Science Advances. Their laboratory studies language processing: “how sound waves put ideas into your head,” in Poeppel’s words.

When people listen to speech, their ears translate the sound waves into neural signals that are then processed and interpreted by various parts of the brain, starting with the auditory cortex. Years of neurophysiological studies have observed that the waves of neural activity in the auditory cortex lock onto the audio signal’s “envelope,” essentially the slow rise and fall of the sound’s loudness over time. (As Poeppel put it, “The brain waves surf on the sound waves.”) By “entraining” this faithfully on the audio signal, the brain presumably segments the speech into manageable chunks for processing.
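For readers who want the idea of an envelope made concrete, here is a minimal sketch in Python. It is not how the researchers analyzed their recordings: the synthetic signal (a 200 Hz tone whose loudness swells 4.5 times per second), the 1,000 Hz sampling rate and the crude rectify-and-average envelope are all illustrative assumptions, and speech studies typically use more careful methods such as a Hilbert transform or a filter bank. The point is only that the envelope is a slow signal riding on a fast one, and that its dominant rate can be read off directly.

```python
import cmath
import math


def synthetic_speech_like_signal(mod_hz=4.5, carrier_hz=200.0, fs=1000, seconds=4.0):
    """A tone whose loudness rises and falls mod_hz times per second.

    Illustrative stand-in for speech (an assumption): real speech has a far
    richer spectrum, but its loudness contour also fluctuates a few times a second.
    """
    n = int(fs * seconds)
    return [(1 + 0.8 * math.sin(2 * math.pi * mod_hz * i / fs))
            * math.sin(2 * math.pi * carrier_hz * i / fs) for i in range(n)]


def envelope(signal, block=10):
    """Rectify, then average non-overlapping blocks (a crude low-pass filter)."""
    return [sum(abs(x) for x in signal[i:i + block]) / block
            for i in range(0, len(signal) - block + 1, block)]


def dominant_rate(env, env_fs, candidates):
    """Return the candidate frequency (Hz) with the largest DFT magnitude."""
    mean = sum(env) / len(env)  # remove the constant (DC) level first

    def magnitude(f):
        return abs(sum((v - mean) * cmath.exp(-2j * math.pi * f * k / env_fs)
                       for k, v in enumerate(env)))

    return max(candidates, key=magnitude)


sig = synthetic_speech_like_signal()
env = envelope(sig)                         # 1,000 Hz / 10 -> 100 Hz envelope
rates = [1.0 + 0.5 * i for i in range(19)]  # candidate rates, 1 to 10 Hz
print(dominant_rate(env, env_fs=100, candidates=rates))
```

Even though the tone itself vibrates 200 times per second, the block-averaged envelope recovers the 4.5 Hz swell in loudness; that slow contour is the kind of signal the auditory cortex is reported to track.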

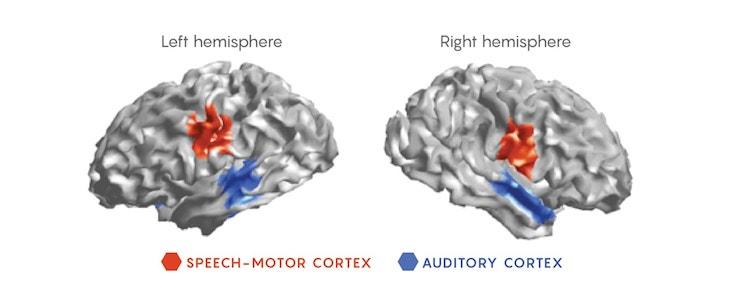

More curiously, some studies have seen that when people listen to spoken language, an entrained signal also shows up in the part of the motor cortex that controls speech. It is almost as though they are silently speaking along with the heard words, perhaps to aid comprehension—although Assaneo emphasized to me that any interpretation is highly controversial. Scientists can only speculate about what is really happening, in part because the motor-center entrainment doesn’t always occur. And it’s been a mystery whether the auditory cortex is directly driving the pattern in the motor cortex or whether some combination of activities elsewhere in the brain is responsible.

Assaneo and Poeppel took a fresh approach with a hypothesis that tied the real-world behavior of language to the observed neurophysiology. They noticed that the frequency of the entrained signals in the auditory cortex is commonly about 4.5 hertz—which also happens to be the mean rate at which syllables are spoken in languages around the world.

In her experiments, Assaneo had people listen to nonsensical strings of syllables played at rates between 2 and 7 hertz while she measured the activity in their auditory and speech motor cortices. (She used nonsense syllables so that the brain would have no semantic response to the speech, in case that might indirectly affect the motor areas. “When we perceive intelligible speech, the brain network being activated is more complex and extended,” she explained.) If the signals in the auditory cortex drive those in the motor cortex, then they should stay entrained to each other throughout the tests. If the motor cortex signal is independent, it should not change.

But what Assaneo observed was rather more interesting and surprising, Poeppel said: The auditory and speech motor activities did stay entrained, but only up to about 5 hertz. Once the audio changed faster than spoken language typically does, the motor cortex dropped out of sync. A computational model later confirmed that these results were consistent with the idea that the motor cortex has its own internal oscillator that naturally operates at around 4 to 5 hertz.
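The general idea of an oscillator with its own preferred rhythm can be illustrated with a textbook toy: a phase oscillator nudged by a periodic input (an Adler-type equation). This is a minimal sketch, not the computational model Assaneo and Poeppel used; the 4.5 Hz natural frequency, the coupling strength K and the phase-locking measure are illustrative assumptions. The oscillator’s phase phi obeys dphi/dt = 2*pi*f0 + K*sin(theta - phi), where theta is the phase of the input, and it can lock to the input only while |2*pi*(f_input - f0)| <= K.

```python
import cmath
import math


def phase_locking_value(drive_hz, natural_hz=4.5, coupling=2 * math.pi * 1.0,
                        duration_s=20.0, dt=1e-3):
    """Drive a phase oscillator at drive_hz and measure how tightly it follows.

    Toy model (an illustrative assumption, not the study's model):
        dphi/dt = 2*pi*natural_hz + coupling * sin(theta_drive - phi)
    Returns the phase-locking value over the second half of the run:
    1.0 means a constant phase lag (entrained); lower values mean the
    two phases drift past each other.
    """
    phi = 0.0                         # oscillator ("motor") phase, radians
    omega0 = 2 * math.pi * natural_hz
    omega_drive = 2 * math.pi * drive_hz
    n_steps = int(duration_s / dt)
    acc = 0j                          # running sum of unit phasors
    count = 0
    for step in range(n_steps):
        theta = omega_drive * step * dt   # phase of the rhythmic input
        if step >= n_steps // 2:          # skip the initial transient
            acc += cmath.exp(1j * (theta - phi))
            count += 1
        # forward-Euler update of the oscillator phase
        phi += dt * (omega0 + coupling * math.sin(theta - phi))
    return abs(acc / count)


for rate in (3.0, 4.0, 4.5, 5.0, 6.0, 7.0):
    print(f"{rate:.1f} Hz input -> PLV {phase_locking_value(rate):.2f}")
```

With these settings the oscillator stays in step with inputs between roughly 3.5 and 5.5 Hz and slips out of step beyond them. One caveat: in this toy the locking window is symmetric around the natural frequency, so it also fails to follow slow inputs well below 4.5 Hz; it should not be read as a reproduction of the study’s result, only as an illustration of why an internal oscillator stops following a rhythm that is too far from its own.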

These complex results vindicate the researchers’ behavior-linked approach in several ways, according to Poeppel and Assaneo. Their equipment monitors 160 channels in the brain, sampling each one many times per second; it produces so much neurophysiological data that if they had simply looked for correlations in it, they would undoubtedly have found spurious ones. Only by starting from linguistics and language behavior, specifically the observation that signals in the 4-to-5-hertz range are special because that rhythm shows up across the world’s spoken languages, did the researchers know to narrow their search for meaningful data to that range. And the specific interactions between the auditory and motor cortices that they found are so nuanced that the researchers would never have thought to look for them without that guidance.

According to Assaneo, they are continuing to investigate how the rhythms of the brain and speech interact. Among other questions, they are curious about whether more-natural listening experiences might lift the limits on the association they saw. “It could be possible that intelligibility or attention increases the frequency range of the entrainment,” she said.

John Rennie joined Quanta Magazine as deputy editor in 2017. Previously, he spent 20 years at Scientific American, where he served as editor in chief between 1994 and 2009. He created and hosted Hacking the Planet, an original 2013 TV series for The Weather Channel, and has appeared frequently on television and radio on programs such as PBS’s Newshour, ABC’s World News Now, NPR’s Science Friday, the History Channel special Clash of the Cavemen and the Science Channel series Space’s Deepest Secrets. John has also been an adjunct professor of science writing at New York University since 2009. Most recently, he was editorial director of McGraw-Hill Education’s online science encyclopedia AccessScience.
