In the 1940s, trailblazing physicists stumbled upon the next layer of reality. Particles were out, and fields—expansive, undulating entities that fill space like an ocean—were in. One ripple in a field would be an electron, another a photon, and interactions between them seemed to explain all electromagnetic events.

There was just one problem: The theory was glued together with hopes and prayers. Only by using a technique dubbed “renormalization,” which involved carefully concealing infinite quantities, could researchers sidestep bogus predictions. The process worked, but even those developing the theory suspected it might be a house of cards resting on a tortured mathematical trick.

“It is what I would call a dippy process,” Richard Feynman later wrote. “Having to resort to such hocus-pocus has prevented us from proving that the theory of quantum electrodynamics is mathematically self-consistent.”

Justification came decades later from a seemingly unrelated branch of physics. Researchers studying magnetization discovered that renormalization wasn’t about infinities at all. Instead, it spoke to the universe’s separation into kingdoms of independent sizes, a perspective that guides many corners of physics today.

Renormalization, writes David Tong, a theorist at the University of Cambridge, is “arguably the single most important advance in theoretical physics in the past 50 years.”

A Tale of Two Charges

By some measures, field theories are the most successful theories in all of science. The theory of quantum electrodynamics (QED), which forms one pillar of the Standard Model of particle physics, has made theoretical predictions that match up with experimental results to an accuracy of one part in a billion.

But in the 1930s and 1940s, the theory’s future was far from assured. Approximating the complex behavior of fields often gave nonsensical, infinite answers that made some theorists think field theories might be a dead end.

Feynman and others sought whole new perspectives—perhaps even one that would return particles to center stage—but came back with a hack instead. The equations of QED made respectable predictions, they found, if patched with the inscrutable procedure of renormalization.

The exercise goes something like this. When a QED calculation leads to an infinite sum, cut it short. Stuff the part that wants to become infinite into a coefficient—a fixed number—in front of the sum. Replace that coefficient with a finite measurement from the lab. Finally, let the newly tamed sum go back to infinity.
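As a rough illustration of the recipe above, consider a toy observable (not QED itself) whose second-order correction grows logarithmically with a cutoff Λ. The bare coefficient g_0, the value g_R measured at a reference energy μ, and the constant c are all symbols assumed for this sketch.

```latex
\begin{align*}
F(E) &= g_0 + c\,g_0^2 \ln\frac{\Lambda}{E} + O(g_0^3)
  && \text{(sum cut short at } \Lambda\text{)} \\
g_R \equiv F(\mu) &= g_0 + c\,g_0^2 \ln\frac{\Lambda}{\mu} + O(g_0^3)
  && \text{(coefficient fixed by a lab measurement)} \\
g_0 &= g_R - c\,g_R^2 \ln\frac{\Lambda}{\mu} + O(g_R^3)
  && \text{(bare value absorbs the divergence)} \\
F(E) &= g_R + c\,g_R^2 \ln\frac{\mu}{E} + O(g_R^3)
  && \text{(no } \Lambda \text{ left, so } \Lambda \to \infty \text{ is harmless)}
\end{align*}
```

At this order the prediction depends only on the measured g_R and the ratio μ/E. The cutoff survives only inside g_0, which is never observed directly.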

To some, the prescription felt like a shell game. “This is just not sensible mathematics,” wrote Paul Dirac, a groundbreaking quantum theorist.

The core of the problem—and a seed of its eventual solution—can be seen in how physicists dealt with the charge of the electron.

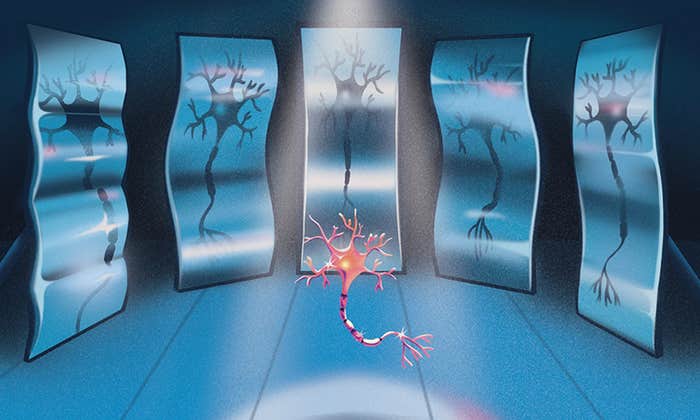

In the scheme above, the electric charge comes from the coefficient—the value that swallows the infinity during the mathematical shuffling. To theorists puzzling over the physical meaning of renormalization, QED hinted that the electron had two charges: a theoretical charge, which was infinite, and the measured charge, which was not. Perhaps the core of the electron held infinite charge. But in practice, quantum field effects (which you might visualize as a virtual cloud of positive particles) cloaked the electron so that experimentalists measured only a modest net charge.

Two physicists, Murray Gell-Mann and Francis Low, fleshed out this idea in 1954. They connected the two electron charges with one “effective” charge that varied with distance. The closer you get (and the more you penetrate the electron’s positive cloak), the more charge you see.
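The distance dependence can be made concrete with the standard one-loop, leading-logarithm formula for the QED coupling. The sketch below is not Gell-Mann and Low's own calculation: it keeps only the electron loop, uses energy as a stand-in for inverse distance, and the name `effective_alpha` and its parameters are illustrative choices.

```python
import math

ALPHA_LOW = 1 / 137.035999   # fine-structure constant measured at low energy
M_ELECTRON_MEV = 0.51099895  # electron mass in MeV, used as the reference scale


def effective_alpha(q_mev, alpha_ref=ALPHA_LOW, mu_mev=M_ELECTRON_MEV):
    """One-loop QED coupling at energy q_mev (higher energy = shorter distance).

    Assumes a single charged particle (the electron) in the loop and
    q_mev >= mu_mev; real-world running also includes heavier particles.
    """
    if q_mev < mu_mev:
        raise ValueError("formula is only used here for q_mev >= mu_mev")
    log_term = math.log(q_mev**2 / mu_mev**2)
    denominator = 1 - (alpha_ref / (3 * math.pi)) * log_term
    if denominator <= 0:
        raise ValueError("energy is beyond the range where one-loop running makes sense")
    return alpha_ref / denominator


if __name__ == "__main__":
    for q in (0.51099895, 1e3, 1e5):  # energies in MeV
        print(f"Q = {q:>10.3g} MeV   1/alpha = {1 / effective_alpha(q):.2f}")
```

With only the electron loop included, 1/α drops from about 137 at the electron mass scale to roughly 134.5 near 91 GeV. The measured value at that energy is lower still (around 128) because heavier charged particles also contribute.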

Their work was the first to link renormalization with the idea of scale. It hinted that quantum physicists had hit on the right answer to the wrong question. Rather than fretting about infinities, they should have focused on connecting tiny with huge.

Renormalization is “the mathematical version of a microscope,” said Astrid Eichhorn, a physicist at the University of Southern Denmark who uses renormalization to search for theories of quantum gravity. “And conversely you can start with the microscopic system and zoom out. It’s a combination of a microscope and a telescope.”

Magnets Save the Day

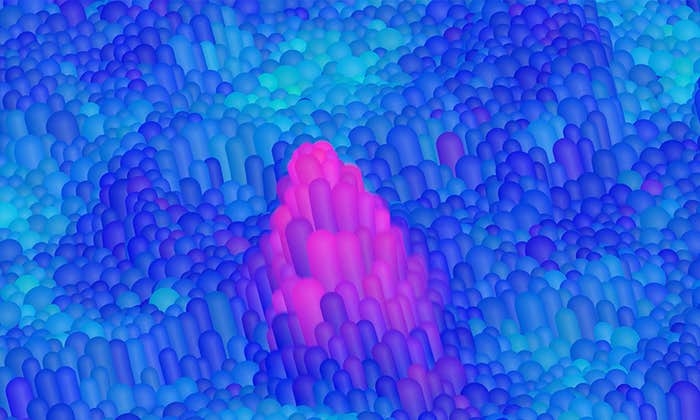

A second clue emerged from the world of condensed matter, where physicists were puzzling over how a rough magnet model managed to nail the fine details of certain transformations. The Ising model consisted of little more than a grid of atomic arrows that could each point only up or down, yet it predicted the behaviors of real-life magnets with improbable perfection.

At low temperatures, most atoms align, magnetizing the material. At high temperatures they grow disordered and the lattice demagnetizes. But at a critical transition point, islands of aligned atoms of all sizes coexist. Crucially, the ways in which certain quantities vary around this “critical point” appeared identical in the Ising model, in real magnets of varying materials, and even in unrelated systems such as a high-pressure transition where water becomes indistinguishable from steam. The discovery of this phenomenon, which theorists called universality, was as bizarre as finding that elephants and egrets move at precisely the same top speed.

Physicists don’t usually deal with objects of different sizes at the same time. But the universal behavior around critical points forced them to reckon with all length scales at once.

Leo Kadanoff, a condensed matter researcher, figured out how to do so in 1966. He developed a “block spin” technique, breaking an Ising grid too complex to tackle head-on into modest blocks with a few arrows per side. He calculated the average orientation of a group of arrows and replaced the whole block with that value. Repeating the process, he smoothed the lattice’s fine details, zooming out to grok the system’s overall behavior.
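One coarse-graining step of the block-spin idea might look like the following minimal sketch. It assumes a grid stored as nested lists of +1 and -1, uses a majority rule as a stand-in for the “average orientation,” and requires an odd block size so that ties cannot occur. The name `block_spin` is chosen here for illustration and is not Kadanoff's.

```python
def block_spin(spins, block=3):
    """Replace each block x block patch of +1/-1 spins with its majority sign."""
    if block % 2 == 0:
        raise ValueError("block must be odd so a patch can never tie")
    rows, cols = len(spins), len(spins[0])
    if rows % block or cols % block:
        raise ValueError("grid dimensions must be multiples of block")

    coarse = []
    for top in range(0, rows, block):
        coarse_row = []
        for left in range(0, cols, block):
            # Sum the spins in this patch; the sign of the sum is the majority.
            total = sum(
                spins[i][j]
                for i in range(top, top + block)
                for j in range(left, left + block)
            )
            coarse_row.append(1 if total > 0 else -1)
        coarse.append(coarse_row)
    return coarse


if __name__ == "__main__":
    import random

    random.seed(0)
    grid = [[random.choice((-1, 1)) for _ in range(27)] for _ in range(27)]
    once = block_spin(grid)    # 27 x 27 -> 9 x 9
    twice = block_spin(once)   # 9 x 9 -> 3 x 3
    print(len(grid), len(once), len(twice))
```

Each call shrinks the grid by a factor of `block` per side, so repeated calls discard fine detail while keeping the large-scale pattern of aligned regions.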

Finally, Ken Wilson—a former graduate student of Gell-Mann with feet in the worlds of both particle physics and condensed matter—united the ideas of Gell-Mann and Low with those of Kadanoff. His “renormalization group,” which he first described in 1971, justified QED’s tortured calculations and supplied a ladder to climb the scales of universal systems. The work earned Wilson a Nobel Prize and changed physics forever.

The best way to conceptualize Wilson’s renormalization group, said Paul Fendley, a condensed matter theorist at the University of Oxford, is as a “theory of theories” connecting the microscopic with the macroscopic.

Consider the magnetic grid. At the microscopic level, it’s easy to write an equation linking two neighboring arrows. But taking that simple formula and extrapolating it to trillions of particles is effectively impossible. You’re thinking at the wrong scale.
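For reference, the standard textbook form of that neighbor-to-neighbor equation is the Ising energy, in which each arrow is a variable s_i equal to +1 or -1 and J is a coupling strength. The notation is the conventional one and is not taken from this article.

```latex
E = -J \sum_{\langle i, j \rangle} s_i\, s_j , \qquad s_i \in \{+1, -1\}
```

The sum runs over neighboring pairs only. For J > 0, aligned neighbors lower the energy, which is why the arrows line up at low temperature. The rule itself is simple, but N arrows have 2^N joint configurations, which is what makes a direct attack hopeless.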

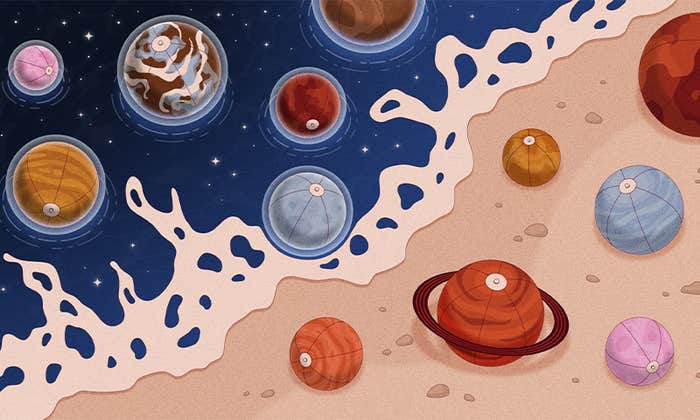

Wilson’s renormalization group describes a transformation from a theory of building blocks into a theory of structures. You start with a theory of small pieces, say the atoms in a billiard ball. Turn Wilson’s mathematical crank, and you get a related theory describing groups of those pieces—perhaps billiard ball molecules. As you keep cranking, you zoom out to increasingly larger groupings—clusters of billiard ball molecules, sectors of billiard balls, and so on. Eventually you’ll be able to calculate something interesting, such as the path of a whole billiard ball.
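A rare case where the crank can be turned exactly is a one-dimensional chain of Ising arrows. The sketch below uses the textbook decimation step (summing over every other arrow), which is a simpler relative of Wilson's construction rather than his method. Here `K` stands for the neighbor coupling divided by temperature, and the function names are illustrative.

```python
import math


def decimate(K):
    """Coupling between surviving neighbors after summing out every other spin.

    Exact for the 1D nearest-neighbor Ising chain with no external field:
    K' = (1/2) * ln(cosh(2K)), equivalently tanh(K') = tanh(K)**2.
    """
    return 0.5 * math.log(math.cosh(2 * K))


def flow(K, steps):
    """Return the sequence of couplings seen while repeatedly zooming out."""
    couplings = [K]
    for _ in range(steps):
        K = decimate(K)
        couplings.append(K)
    return couplings


if __name__ == "__main__":
    for step, K in enumerate(flow(2.0, 6)):
        print(f"after {step} zoom-outs: K = {K:.4f}")
```

Each step weakens the effective coupling, so at large scales the chain looks like a set of nearly independent arrows. This matches the known absence of a magnetized phase at any nonzero temperature in one dimension. The two-dimensional grid has no comparably simple closed-form step and generally requires approximations.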

This is the magic of the renormalization group: It helps identify which big-picture quantities are useful to measure and which convoluted microscopic details can be ignored. A surfer cares about wave heights, not the jostling of water molecules. Similarly, in subatomic physics, renormalization tells physicists when they can deal with a relatively simple proton as opposed to its tangle of interior quarks.

Wilson’s renormalization group also suggested that the woes of Feynman and his contemporaries came from trying to understand the electron from infinitely close up. “We don’t expect [theories] to be valid down to arbitrarily small [distance] scales,” said James Fraser, a philosopher of physics at Durham University in the U.K. Mathematically cutting the sums short and shuffling the infinity around, physicists now understand, is the right way to do a calculation when your theory has a built-in minimum grid size. “The cutoff is absorbing our ignorance of what’s going on” at lower levels, said Fraser.

In other words, QED and the Standard Model simply can’t say what the bare charge of the electron is from zero nanometers away. They are what physicists call “effective” theories. They work best over well-defined distance ranges. Finding out exactly what happens when particles get even cozier is a major goal of high-energy physics.

From Big to Small

Today, Feynman’s “dippy process” has become as ubiquitous in physics as calculus, and its mechanics reveal the reasons for some of the discipline’s greatest successes and its current challenges. During renormalization, complicated submicroscopic capers tend to just disappear. They may be real, but they don’t affect the big picture. “Simplicity is a virtue,” Fendley said. “There is a god in this.”

That mathematical fact captures nature’s tendency to sort itself into essentially independent worlds. When engineers design a skyscraper, they ignore individual molecules in the steel. Chemists analyze molecular bonds but remain blissfully ignorant of quarks and gluons. The separation of phenomena by length, as quantified by the renormalization group, has allowed scientists to move gradually from big to small over the centuries, rather than cracking all scales at once.

Yet at the same time, renormalization’s hostility to microscopic details works against the efforts of modern physicists who are hungry for signs of the next realm down. The separation of scales suggests they’ll need to dig deep to overcome nature’s fondness for concealing its finer points from curious giants like us.

“Renormalization helps us simplify the problem,” said Nathan Seiberg, a theoretical physicist at the Institute for Advanced Study in Princeton, New Jersey. But “it also hides what happens at short distances. You can’t have it both ways.”

Lead image: You don’t have to analyze individual water molecules to understand the behavior of droplets, or droplets to study a wave. This ability to shift focus across various scales is the essence of renormalization. Credit: Samuel Velasco/Quanta Magazine