I was listening to a recent Radiolab episode on blame and guilt, in which the guest, Robert Sapolsky, mentioned a famous study showing that judges hand out harsher sentences before lunch than after lunch. The idea is that their mental resources deplete over time, so they stop thinking carefully about their decisions until a bite to eat replenishes those resources. The study is well known and is often (as in the Radiolab episode) used to argue that free will is limited and that much of our behavior is caused by influences outside our own control. I had never read the original paper, so I decided to take a look.

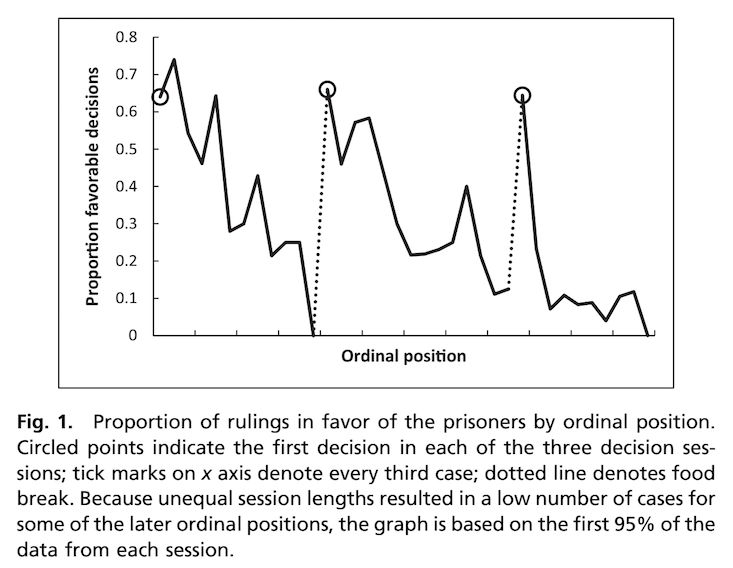

During the podcast, it was mentioned that the percentage of favorable decisions drops from 65 percent to 0 percent as judges work through successive cases. This sounded unlikely. I looked at Figure 1 from the paper (above), and I couldn't believe my eyes. Not only is the drop as large as mentioned, it occurs three times in a row over the course of the day, and after each break, it returns to exactly 65 percent!


I'm not the first person to be surprised by this data (thanks to Nick Brown for pointing me to these papers on Twitter). A criticism of the study was published in PNAS (which no one reads or cites), and more recently, Andreas Glöckner wrote an article showing how the data could arise through a more plausible mechanism (for a nice write-up, see this blog post by Tom Stafford). I appreciate that people have tried to work out which mechanism could cause this effect, and if you are interested, I highly recommend reading the commentaries (and perhaps even the authors' response).

But I want to take a different approach in this blog. I think we should dismiss this finding, simply because it is impossible. When we interpret how impossibly large the effect size is, anyone with even a modest understanding of psychology should be able to conclude that it is impossible that this data pattern is caused by a psychological mechanism. As psychologists, we shouldn’t teach or cite this finding, nor use it in policy decisions as an example of psychological bias in decision making.

As Glöckner notes, one surprising aspect of this study is the magnitude of the effect: "A drop of favorable decisions from 65% in the first trial to 5% in the last trial as observed in DLA [Danziger, Levav, and Avnaim-Pesso] is equivalent to an odds ratio of 35 or a standardized mean difference of d = 1.96 (Chinn, 2000)."
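Glöckner's numbers can be checked in a few lines. This is a minimal sketch assuming the logistic conversion from Chinn (2000), d = ln(OR) · √3 / π, which turns an odds ratio into a standardized mean difference; the function names are illustrative, and the only inputs are the 65% and 5% quoted above.

```python
import math


def odds(p: float) -> float:
    """Convert a proportion (e.g. share of favorable decisions) to odds."""
    return p / (1.0 - p)


def odds_ratio_to_d(odds_ratio: float) -> float:
    """Chinn (2000) logistic conversion: d = ln(OR) * sqrt(3) / pi."""
    return math.log(odds_ratio) * math.sqrt(3) / math.pi


# Proportions of favorable decisions quoted by Glöckner
first_case = 0.65
last_case = 0.05

odds_ratio = odds(first_case) / odds(last_case)
d = odds_ratio_to_d(odds_ratio)
print(f"odds ratio = {odds_ratio:.1f}, d = {d:.2f}")
```

This prints an odds ratio of about 35.3 and d = 1.96, matching the quoted figures.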

Some people dislike statistics. They are only interested in effects so large that you can see them just by plotting the data. This study might seem to be a convincing illustration of such an effect. My goal in this blog is to argue against this idea. You need statistics, maybe especially when effects are so large they jump out at you.

When reporting findings, authors should report and interpret effect sizes. An important reason for this is that effects can be impossibly large. An example I give in my MOOC is the Ig Nobel prize-winning finding that suicide rates among white people increased with the amount of airtime dedicated to country music. The reported (but not interpreted) correlation was a whopping r = 0.54. I once went to a Dolly Parton concert with my wife. It was a great two-hour show. If the true correlation between listening to country music and white suicide rates were 0.54, this would not have been a great concert, but a mass suicide.

To get a sense of how large that is, consider height: based on Dutch height data, the difference between the average height of 21-year-old men and women in The Netherlands is approximately 13 centimeters. That is a Cohen's d of 2, roughly the effect size in the hungry judges study.
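The height comparison can be made concrete with a short sketch. Cohen's d is the raw mean difference divided by the pooled standard deviation, so a 13 cm difference and d = 2 together imply a standard deviation of 6.5 cm; that SD is back-calculated here and is an assumption, not a reported figure. The second function is an added interpretation, assuming two normal distributions with equal SDs: the probability that a randomly chosen man is taller than a randomly chosen woman is Φ(d / √2).

```python
import math


def cohens_d(mean_difference: float, pooled_sd: float) -> float:
    """Standardized mean difference: raw difference divided by the pooled SD."""
    return mean_difference / pooled_sd


def prob_superiority(d: float) -> float:
    """P(random draw from the higher group > random draw from the lower group),
    assuming two normal distributions with equal SDs: Phi(d / sqrt(2))."""
    z = d / math.sqrt(2)
    # Standard normal CDF expressed through the error function
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2)))


height_difference_cm = 13.0
assumed_sd_cm = 6.5  # hypothetical: back-calculated from d = 2 and the 13 cm gap

d = cohens_d(height_difference_cm, assumed_sd_cm)
p = prob_superiority(d)
print(f"d = {d:.1f}; P(random man taller than random woman) = {p:.2f}")
```

Under these assumptions, a randomly chosen man is taller than a randomly chosen woman about 92% of the time, a difference anyone can see without a statistics course.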

If hunger had an effect on our mental resources of this magnitude, our society would fall into minor chaos every day at 11:45 a.m. Or at the very least, our society would have organized itself around this incredibly strong effect of mental depletion. Just as manufacturers take size differences between men and women into account when producing items such as golf clubs or watches, we would stop teaching in the time before lunch, doctors would not schedule surgery then, and driving before lunch would be illegal. If a psychological effect were this big, we wouldn't need to discover it and publish it in a scientific journal: we would already know it exists. Compare the "after-lunch dip," a strong and replicable finding that you can feel yourself (and one that, as it happens, directly conflicts with the finding that judges perform better immediately after lunch; surprisingly, the authors don't discuss the after-lunch dip).

We can look at the review paper by Richard, Bond, & Stokes-Zoota (2003) to see which effect sizes in law psychology are close to a Cohen's d of 2, and we find two that are slightly smaller. The first is the effect that a jury's final verdict is likely to be the verdict a majority initially favored, which 13 studies show has an effect size of r = .63, or d = 1.62. The second is that when a jury is initially split on a verdict, its final verdict is likely to be lenient, which 13 studies show also has an effect size of r = .63. Across their entire database, effect sizes that come close to d = 2 include the findings that personality traits are stable over time (r = .66, d = 1.76), that people who deviate from a group are rejected by that group (r = .60, d = 1.50), and that leaders have charisma (r = .62, d = 1.58). You might notice the almost tautological nature of these effects. The biggest effect in their database is for "psychological ratings are reliable" (r = .75, d = 2.26): if we try to develop a reliable rating, it is pretty reliable. That is the type of effect that has a Cohen's d of around 2: tautologies. And that is, supposedly, the effect size that the passing of time (and subsequently eating lunch) has on rulings in parole hearings.
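The r-to-d conversions in the paragraph above can be reproduced with the standard formula d = 2r / √(1 − r²). This is a minimal sketch; the labels are shorthand for the findings listed above, and the computed values agree with the text to within 0.01 (the reliability effect comes out at about 2.27).

```python
import math


def r_to_d(r: float) -> float:
    """Convert a correlation coefficient to Cohen's d: d = 2r / sqrt(1 - r^2)."""
    return 2.0 * r / math.sqrt(1.0 - r * r)


# Correlations quoted from Richard, Bond, & Stokes-Zoota (2003)
effects = {
    "majority's initial verdict becomes the final verdict": 0.63,
    "personality traits are stable over time": 0.66,
    "deviates are rejected by the group": 0.60,
    "leaders have charisma": 0.62,
    "psychological ratings are reliable": 0.75,
}

converted = {label: r_to_d(r) for label, r in effects.items()}
for label, d in converted.items():
    print(f"r = {effects[label]:.2f} -> d = {d:.2f}  ({label})")
```

Even the largest of these "tautological" effects only just exceeds d = 2.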

I think it is telling that most psychologists don't seem to be able to recognize data patterns that are too large to be caused by psychological mechanisms. There are simply no plausible psychological effects strong enough to cause the data pattern in the hungry judges study. Implausibility is not a reason to completely dismiss empirical findings, but impossibility is. It is up to authors to interpret the effect size in their study and to show the mechanism through which an impossibly large effect becomes plausible. Without such an explanation, the finding should simply be dismissed.

Daniël Lakens is an experimental psychologist at the Human-Technology Interaction group at Eindhoven University of Technology, The Netherlands. Follow him on Twitter @lakens.


This post was reprinted with permission from The 20% Statistician.