When he was just a kid, Anil Seth wondered about big questions. Why am I me and not someone else? Where was I before I was born? He was consciousness-curious before he knew the name for it. This drew him initially to physics, which he thought had the ideas and tools to understand everything. Then experimental psychology seemed to promise a more direct route to understanding the nature of mind, but his attention would again shift elsewhere. “There was a very long diversion through computer science and AI,” Seth told me recently. “So my Ph.D., in fact, is in artificial intelligence.” Not that this was going to confine his curiosity: AI led him to neuroscience and back to consciousness, which has been his focus, he said, for “the best part of 20 years or so.”

Now Seth is a cognitive and computational neuroscientist at the University of Sussex in England. His intellectual mission is advancing the science of consciousness, and he codirects a pair of organizations—the Sackler Center for Consciousness Science and the Canadian Institute for Advanced Research’s program on Brain, Mind, and Consciousness—to that end. Seth is also the author of the 2021 book Being You: A New Science of Consciousness. In it, he argues that minds require flesh-and-blood predictive machinery. His 2017 TED talk about this has over 13 million views.

I caught up recently with Seth to discuss his work on consciousness, AI, and the worrying intersection of his pair of passions—the possibility of creating conscious AI, machines that not only think but also feel. We discuss why consciousness likely isn’t reducible to brain algorithms, the trouble with AIs designed to seem conscious, why our experience of the world amounts to a “controlled hallucination,” and the evolutionary point of having a mind.

How do you understand the link between intelligence and consciousness?

Consciousness and intelligence are very different things. There’s this assumption in and around the AI community that, as AI gets smarter, at some point, maybe the point of general AI—the point at which AI becomes as intelligent as a human being—suddenly the lights come on for the system, and it’s aware. It doesn’t just do things, it feels things. But intelligence, broadly defined, is about doing the right thing at the right time, having and achieving goals in a flexible way. Consciousness is all about subjective, raw experience, feelings—things like pain, pleasure, and experiences of the world around us and of the self within it. There may be some forms of intelligence that require consciousness in humans and other animals. But fundamentally they’re different things. This assumption that consciousness just comes along for the ride is mistaken.

What does consciousness need to work?

We don’t know how to build a conscious machine. There’s no consensus about what the sufficient mechanisms are. There are many theories. They’re all interesting. I have my own theory in my book Being You that it’s very coupled with being alive: You won’t get conscious machines until you get living machines. But I might be wrong. In which case consciousness in AI is much closer than we might think. Given this uncertainty, we should be very, very cautious indeed about trying to build conscious machines. Consciousness can endow these machines with all kinds of new capabilities and powers that will be very hard to regulate and rein in. Once an AI system is conscious, it will likely have not the interests we give it, but its own interests. More disquieting, as soon as something is conscious, it has the potential to suffer, and in ways we won’t necessarily even recognize.

Should we be worried about machines that merely appear conscious?

Yes. It’s very difficult to avoid projecting some kind of mind behind the words that we read coming from the likes of ChatGPT. This is potentially very disruptive for society. We’re not quite there yet. Existing large language models, chatbots, can still be spotted. But we humans have a deeply anthropomorphic tendency to project consciousness and mind into things on the basis of relatively superficial similarity. As AI gets more fluent, harder to catch, it’ll become more and more difficult for us to avoid interacting with these things as if they are conscious. Maybe we will make a lot of mistakes in our predictions about how they might behave. Some bad predictions could be catastrophic. If we think something is conscious, we might assume that it will behave in a particular way because we would, because we are conscious.

This might also contort the kind of ethics we have. If we really feel that something is conscious, then we might start to care about what it says. Care about its welfare in a way that prevents us from caring about other things that are conscious. Science-fiction series like Westworld have addressed this in a way that is not very reassuring. The people interacting with the robots end up learning to treat these systems as if they’re slaves of some kind. That’s not a very healthy position for our minds to be in.

You caution against building conscious machines—is that in tension at all with your goal of understanding consciousness? The roboticist Alan Winfield thinks building conscious machines, starting with simulation-based internal models and artificial theory of mind, will be key to solving consciousness. He has a “If you can’t build it, you don’t understand it” view of this. What do you think?

There is a tension. Alan has a point. It’s something I struggle with a little bit. It depends on what you think is going to create conscious AI. For me, consciousness is very closely tied up with being alive. I think simulating the brain on computers, as we have them now, is not the same as building or instantiating a conscious system. But I might be wrong. There’s a certain risk to that—that in fact simulating something is the same as instantiating, is the same as generating it. In which case, then I’m not being as cautious as I think I am.

On the other hand, of course, unless we understand how consciousness happens in biological brains, and bodies, then we will not be on firm ground at all in trying to make inferences about when other systems are conscious—whether they’re machine-learning systems, artificial-intelligence systems, other animals, newborn babies, or anything. We will be on very, very shaky ground. So there’s a need for research into consciousness. All I’m saying is that there shouldn’t be this kind of gung ho goal, “Let’s try to just build something that actually is conscious, because it’s kind of cool.” For research in this area, there should be some kind of ethical regulation about what’s worth doing. What are the pros and cons? What are the risks and rewards? Just as there are in other areas of research.

Why do you think that consciousness isn’t some kind of complicated algorithm that neurons implement?

This idea, often called functionalism in philosophy, is a really big assumption to make. Some things in the world, when you simulate them, run an algorithm, you actually get the thing. An algorithm that plays chess, let’s say, is actually playing chess. That’s fine. But there are other things for which an algorithmic simulation is just, and always will be, a simulation. Take a computer simulation of a weather system. We can simulate a weather system in as much detail as we like, but nobody would ever expect it to get wet or windy inside a computer simulation of a hurricane, right? It’s just a simulation. The question is: Is consciousness more like chess, or more like the weather?

The common idea that consciousness is just an algorithm that runs on the wetware of the brain assumes that consciousness is more like chess, and less like the weather. There’s very little reason why this should be the case. It stems from this idea we’re saddled with still—that the brain is a kind of computer and the conscious mind is a program running on the computer of the brain.

But the more you look into the brain, the less like a computer it actually appears to be. In a computer you’ve got a sharp distinction between the substrate, the silicon hardware, and the software that runs on it. That’s why computers are useful. You can have the same computer run a billion different programs. But in the brain, it’s not like that at all. There’s no sharp distinction between the mindware and the wetware. Even a single neuron, every time it fires, changes its connection strength. A single neuron tries to sort of persist over time as well. It’s a very complicated object. Then of course there are chemicals swirling around. It’s just not clear to me that consciousness is something that you can abstract away from the stuff that brains and bodies are made out of, and just implement in the pristine circuits of some other kind of system.

You argue that the world around us as we perceive it is best understood as a controlled hallucination. What do you mean by that?

It seems to us that we see the world as it is, in a kind of mind-independent way: We open our eyes, and there it is. There’s the world. The idea that I explore in my book and in my research is that this is not what’s happening. What we experience as reality ultimately has its roots in the biology of the body in a very substrate-dependent manner. In fact, the brain is always making predictions about what’s out there in the world or in the body. And using sensory signals to update those predictions. What we consciously experience is not a readout of the sensory data in a kind of outside-in direction. It’s the predictions themselves. It’s the brain’s best guess of what’s going on.

Does that amount to a simulation of objective reality?

Not necessarily. I just think it means that the brain has some kind of generative model of the causes of its sensory signals. That really constitutes what we experience, and the same process applies to the body itself. If you think about what brains are for, evolutionarily and developmentally speaking, they’re not for doing neuroscience or playing chess or whatever. They’re for keeping the body alive. And the brain has to control and regulate things like blood pressure, heartbeat, gastric tension, and all these physiological processes to make sure that we stay within these very tight bounds that are compatible with staying alive for complex organisms like us. I argue that this entails that the brain is or has a predictive model of its own body, because prediction is very good for regulation. This goes down all the way into the depths of the physiology. Even single cells self-regulate in a very interesting way. The substrate dependency is built in from the ground up.

Why does consciousness have to be an embodied phenomenon when we can have experiences of lacking a body?

You’re right, people can have out-of-body experiences, experiences of things like ego dissolution, and so on. It shows that how things appear in our experience is not always, if ever, an accurate reflection of how they actually are. Our experience of what our body is is itself a kind of perception. There are many experiments that show that it’s quite easy to manipulate people’s experiences of where their body is and isn’t. Or what object in the world is part of the body or not. To get the brain into a condition that it can have those kinds of experiential states, my suspicion is that there needs to have been at least a history of embodiment. A brain that’s not had any interaction with a body or a world might be conscious, but it would not be conscious of anything at all.

Is that something that could happen with brain organoids?

Right. They don’t look very impressive. They’re clusters of neurons derived from human stem cells that develop in a dish. They certainly don’t write poetry as chatbots can do. We look at these, and we tend to think, “There’s nothing ethically to worry about here.” But me, I’m much more concerned about the prospects of synthetic consciousness in brain organoids than I am in the next iteration of chatbots. Because these organoids are made out of the same stuff. Sure, they don’t have bodies. That might be a deal breaker. But still they’re much closer to us in one critical sense, which is that they’re made out of the same material.

But that doesn’t mean it would be conscious, right? Aren’t our brains active in many ways that are unconnected to generating consciousness?

You’re absolutely right. There’s lots of stuff that goes on in the brain that doesn’t seem to be directly implicated in consciousness. Everything’s indirectly implicated. But if we look at where the tightest relationships are between brain activity and consciousness, we can rule out large parts of the brain. We can rule out the cerebellum. The cerebellum, at the back of the brain, has three quarters of all our neurons yet doesn’t seem to be directly implicated in consciousness at all. So if you just had a cerebellum by itself, my guess is it would not support any conscious experiences. The right way to think about that is that just being made of neurons, and having this kind of biological substrate, well, that might be necessary for consciousness, but it’s certainly not sufficient.

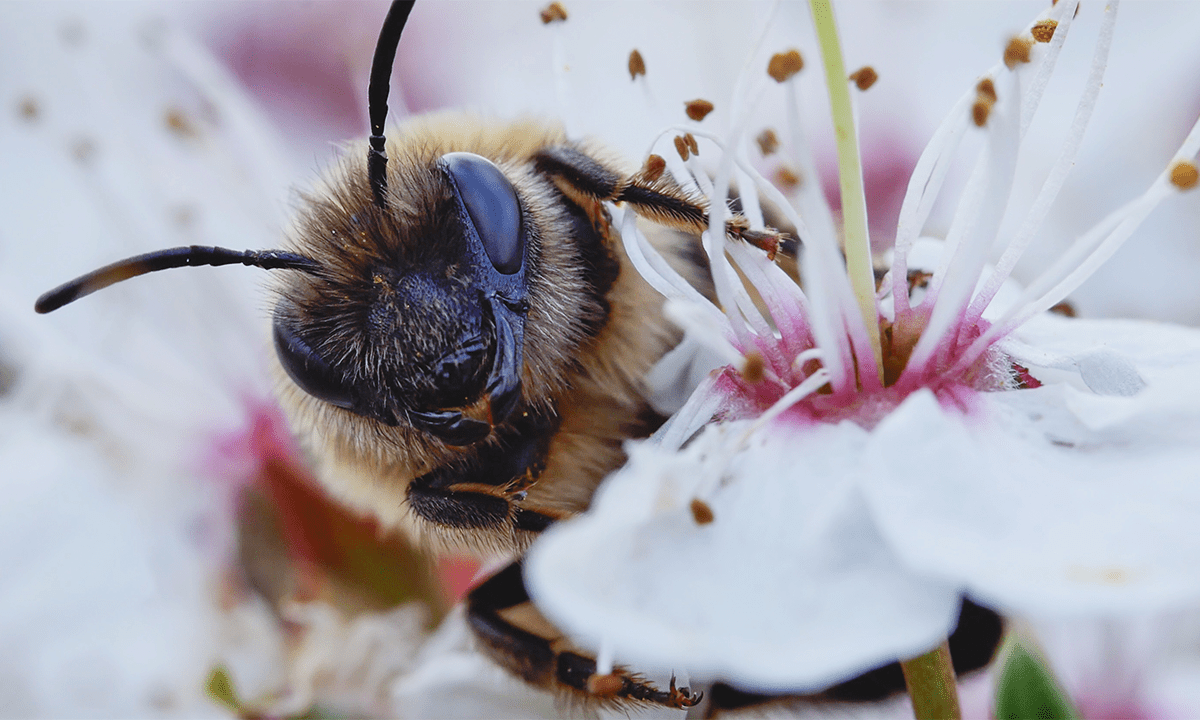

How do you think about what the adaptive value might have been of having conscious experiences? Do you think that insects or plants have a possibility of being conscious?

This is a huge problem. Actually there are two connected problems here. One is: What is the function of consciousness? It’s famously said that nothing in biology makes sense except in the light of evolution. Why did evolution provide us and other organisms with conscious experiences? And did it provide all organisms with conscious experiences? And the connected question is: How will we ever know? Can we develop a test now to decide whether a plant has experience or not, or indeed, whether an organoid, or an AI system has conscious experiences? If we understand the function, then we’ve got a way in to apply some tests, at least in some cases.

There are some people, certainly in philosophy, who argue that consciousness has no function at all. It doesn’t play any role in an organism’s behavior. I find this very difficult to grasp. It’s such a central phenomenon of our lives. If you just look at the character of conscious experience, it’s incredibly functionally useful. A conscious experience, typically for us humans, brings together a large amount of information about the world, from many different modalities at once—sight, sound, touch, taste, smell—in a single unified scene that immediately makes apparent what the organism should do next. That’s the primary function of consciousness—to guide the motivated behavior of the organism that maximizes its chances of staying alive.

Do plants experience motivation to stay alive?

Plants are amazing. It sounds like a very trite thing to say, but plants are more interesting than many people think. They move on slow timescales, but if you speed up their motion, they behave. They don’t just waft around in the wind. Many plants appear to have goals and appear to engage in intentional action. But they don’t have nervous systems of any sort, certainly not of the kind that bear comparison to humans. They didn’t really face the same problem of integrating large amounts of information in a way to support rapid flexible behavior.

Inferring where consciousness resides in the world, beyond the human, is really very difficult. We know we are conscious. When we look across all mammals, we see the same brain mechanisms that are implicated in human consciousness. If you go further afield than that, to insects and fish, it becomes more controversial and more difficult to say for sure. But I don’t think the problem is insoluble. If we begin to understand that it’s not just having this part of the brain, but it’s this way in which brain regions speak to each other that’s important, that gives us something which we can look for in other animals that may have quite different brains to our own. But when you get to plants it’s much more difficult.

What are you working on at the moment?

One thing is trying to use computational models to understand more about different kinds of visual experience. We’re looking at different kinds of hallucinations, hallucinations that might happen in psychosis, with psychedelics, or as a result of various neurological conditions like Parkinson’s disease. These hallucinations all have different characters. Some are simple, some are complex, some are spontaneous, some seem to emerge from the environment. We’re trying to understand the computational mechanisms that underpin these different kinds of experience, both to shed light on these conditions, but also more generally, to understand more about normal non-hallucinatory experience.

There’s also very little we know about the hidden landscape of perceptual or inner diversity. There was that example of the dress a few years ago that half the world saw one way and half another way. But it’s not just the dress. I have a project called the Perception Census, which is a very large-scale, online citizen-science project to characterize how we differ in our perceptual experiences across many different domains like color, time, emotion, sound, music, and so on. There are about 50 different dimensions of perception we’re looking at within this study. We’ve already got about 25,000 people taking part from 100 countries. The objective is to see if there’s some kind of underlying latent space, sort of perceptual personalities, if you like, that explain how individual differences in perception might go together when we look at different kinds of perception. That’s a bit of uncharted territory.

Brian Gallagher is an associate editor at Nautilus. Follow him on Twitter @bsgallagher.

Lead image: Tasnuva Elahi, from images by Irina Popova st and eamesBot / Shutterstock