Princeton’s Palmer Field, 1951. An autumn classic matching the unbeaten Tigers, with star tailback Dick Kazmaier—a gifted passer, runner, and punter who would capture a record number of votes to win the Heisman Trophy—against rival Dartmouth. Princeton prevailed over the Big Green in the penalty-plagued game, but not without cost: Nearly a dozen players were injured, and Kazmaier himself sustained a broken nose and a concussion (yet still played a “token part”). It was a “rough game,” The New York Times reported, somewhat mildly, “that led to some recrimination from both camps.” Each said the other played dirty.

The game not only made the sports pages, it made the Journal of Abnormal and Social Psychology. Shortly after the game, the psychologists Albert Hastorf and Hadley Cantril interviewed students and showed them film of the game. They wanted to know things like: “Which team do you feel started the rough play?” Responses were so biased in favor of each team that the researchers came to a rather startling conclusion: “The data here indicate there is no such ‘thing’ as a ‘game’ existing ‘out there’ in its own right which people merely ‘observe.’ ” Everyone was seeing the game they wanted to see. But how were they doing this? They were, perhaps, an example of what Leon Festinger, the father of “cognitive dissonance,” meant when he observed “that people cognize and interpret information to fit what they already believe.”

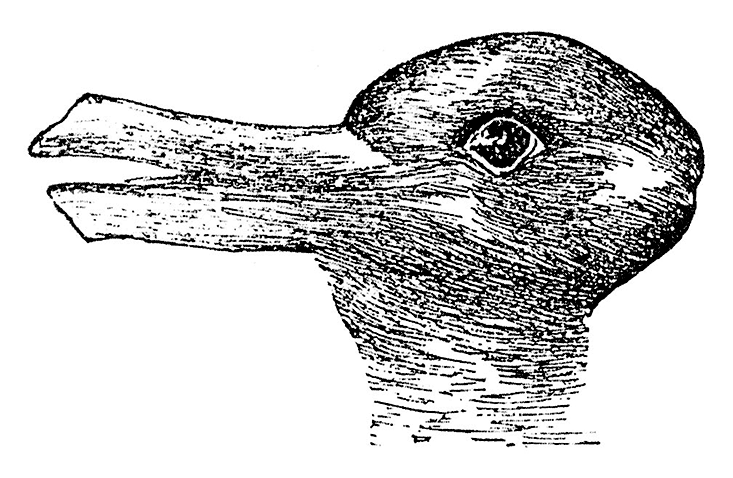

In watching and interpreting the game footage, the students were behaving similarly to children shown the famous duck-rabbit illusion, pictured above. When shown the illusion on Easter Sunday, more children see the rabbit, whereas on other Sundays they are more likely to see the duck.1 The image itself allows both interpretations, and switching from seeing one to the other takes some effort. When I showed duck-rabbit to my 5-year-old daughter, and asked her what she saw, she replied: “A duck.” When I asked her if she saw “anything else,” she edged closer, forehead wrinkled. “Maybe there’s another animal there?” I proffered, trying not to sound as if magnet school admission was on the line. Suddenly, a shimmer of awareness, and a smile. “A rabbit!”

I ought not to have felt bad. As an experiment by Alison Gopnik and colleagues showed, no child in a group of 3- to 5-year-old test subjects made the reversal (of a “vases-faces” illustration) on their own.2 When a group of older—but still “naïve”—children were tested, one-third made the reversal. Most of the rest were able to see both when the ambiguity was mentioned. Interestingly, the ones who saw both on their own were those who had done better on an exercise testing “theory of mind”—in essence the ability to monitor our own mental state in relation to the world (for example, showing children a box of Crayons that turns out to contain candles, and then asking them to predict what another child would think is in the box).

And if you do not discern duck-rabbit at first, or any other figure reversal, there is no immediate cause for concern: Any number of studies show adults, who as the authors note “presumably have complex representational abilities,” failing to make the switch. Nor is there any correct reading: While there is a slight rabbit tendency, there are plenty of duck people. Studies probing handedness as a cause have come up empty. My wife sees rabbit, I see duck. We are both lefties.

But while everyone, at some point, can be made to see duck-rabbit, there is one thing that no one can see: You cannot, no matter how hard you try, see both duck and rabbit at once.

When I put the question of whether we were living in a kind of metaphorical duck-rabbit world to Lisa Feldman Barrett, who heads the Interdisciplinary Affective Science Laboratory at Northeastern University, her answer was quick: “I don’t even think it’s necessarily metaphorical.” The structure of the brain, she notes, is such that there are many more intrinsic connections between neurons than there are connections that bring sensory information from the world. From that incomplete picture, she says, the brain is “filling in the details, making sense out of ambiguous sensory input.” The brain, she says, is an “inference generating organ.” She describes an increasingly well-supported working hypothesis called predictive coding, according to which perceptions are driven by your own brain and corrected by input from the world. There would otherwise simply be too much sensory input to take in. “It’s not efficient,” she says. “The brain has to find other ways to work.” So it constantly predicts. When “the sensory information that comes in does not match your prediction,” she says, “you either change your prediction—or you change the sensory information that you receive.”

This connection between sensory input on the one hand, and prediction and belief formation on the other hand, has been observed in the laboratory. In a study published in Neuropsychologia, when people were asked to think about whether a statement linking an object and a color—the banana is yellow is one example—was true, the brain regions activated were similar to those activated when they were simply asked to perceive colors. It was as if thinking about the banana as yellow was the same as actually seeing yellow—a kind of re-perception, as is known to happen in memory recall (though the researchers also cautioned that “perception and knowledge representation are not the same phenomena”).

We form our beliefs based on what comes to us from the world through the window of perception, but then those beliefs act like a lens, focusing on what they want to see. In a New York University psychology laboratory earlier this year, a group of subjects watched a 45-second video clip of a violent struggle between a police officer and an unarmed civilian.3 It was ambiguous as to whether the officer, in trying to handcuff the person resisting arrest, behaved improperly. Before seeing the video, the subjects were asked to express how much identification they felt with police officers as a group. The subjects, whose eye movements were being discreetly monitored, were then asked to assign culpability. Not surprisingly, people who identified less strongly with police were more likely to call for stronger punishment. But that was only for people who often looked at the police officer during the video. For those who did not look as much at the officer, their punishment decision was the same whether they identified with police or not.

As Emily Balcetis, who directs NYU’s Social Perception Action and Motivation Lab, and was a coauthor on the study, told me, we often think of decision making as the key locus of bias. But, she asks, “what aspects of cognition are preceding that big judgment?” Attention, she suggests, can “be thought of as what you allow your eyes to look at.” In the police video, “your eye movements determine a completely different understanding of the facts of the case.” People who made the stronger judgment against the police spent more time watching the officer (and, per duck-rabbit, they were presumably not able to watch the officer and civilian at the same time). “If you feel he’s not your guy,” says Balcetis, “you watch him more. You look at the guy who might seem to you to pose a threat.”

But what matters in making such assessments? This, too, is fluid. Numerous studies have suggested a biased neural signature in subjects when they see images of people from their own racial in-group. But now tell subjects the people in those images have been assigned to a fictitious “team,” to which they also belong. “In that first 100 milliseconds or so, we’re presented with a rabbit-duck problem,” says Jay Van Bavel, a professor of psychology at NYU. Are you looking at someone from your own team, or someone from a different race? In Van Bavel’s study, suddenly it is the team members that are getting more positive neuronal activity, virtually rendering race invisible (almost as if, per duck-rabbit, we can only favor one interpretation at a time).4

We live in a world where “in some sense, almost everything we see can be construed in multiple ways,” says Van Bavel. As a result, we are constantly choosing between duck and rabbit.

We are stubborn about our decisions, too. In a study paying explicit homage to duck-rabbit, Balcetis and colleagues showed subjects a series of images depicting either “sea creatures” or “farm animals.” Subjects were asked to encode each image; they would get positive or negative “points” for each correct identification. If they ended the game with a positive score, they would get jelly beans. Negative? “Partially liquefied canned beans.” But the fix was in: The last image was an ambiguous horse-seal figure (with the seal a somewhat tougher see). To avoid consuming the nasty beans, subjects would have to see whichever image put them over the top. And, largely, they did. But what if subjects actually saw both images and simply reported only seeing the one that favored their own ends? They ran the experiment again, with a group of new subjects, this time with eye tracking. Those who had more motivation to see farm animals tended to look first at the box marked “farm animal” (where a click would encode their answer and send them to the next animal), and vice versa. The glance at the “correct” box (in their minds, anyway) was like a poker “tell,” revealing their intention without conscious calculation. Their vision was primed to pick favorably.

But when the experimenters faked a computer error and said, no, sorry, actually, it is the sea creature that will keep you from drinking liquid beans, most subjects, Balcetis says, stuck to their original, motivated perception—even in the light of the new motivation. “They cannot reinterpret this picture they have formed in their mind,” she says, “because in the process of trying to make meaning from this ambiguous thing in the first place removes the ambiguity from that.”

A recent study by Kara Federmeier and colleagues hints that something similar goes on in our formation of memories.5 They considered the example of someone with a mistaken belief about a political candidate’s policy stance, like when most people incorrectly thought Michael Dukakis, not George Bush, had declared he would be the “education president.” Studying subjects’ brain activity via EEG, they found that people’s “memory signals” were much the same toward the incorrect information as they were toward the things they correctly remembered. Their interpretation of the event had hardened into truth.

This hardening can happen without our awareness. In a study published in Pediatrics, more than 1,700 parents in the United States were sent material from one of four sample campaigns designed to reduce “misperceptions” of the dangers of the MMR vaccine.6 None of the campaigns, the researchers reported, seemed to push the needle on parents’ intentions to vaccinate. For parents who were least likely to vaccinate to begin with, the material actually lowered their belief that MMR causes autism. But it also made them less likely to vaccinate. Showing people images of children with measles and mumps—the dangers of not vaccinating—only made people more likely to believe vaccines had dangerous side effects.

How exactly this hardening happens, and what might prompt someone to change their mind and reverse their duck-rabbit interpretation, is unclear. There is a long-lasting and ongoing debate over what exactly drives the figure reversal process. One argument is that it is “bottom up.” It could be that the neurons giving you the duck representation get tired, or “satiated,” and suddenly the novel rabbit swims into view. Or there is something about the way the figure is drawn (the bill “pops out”) or how it is presented that prompts the switch.

The opposing theory is “top down,” suggesting something going on higher in the brain that predisposes us to make the switch: We have already learned about it, we are expecting it, we are actively looking for it. People instructed to not make reversals are less likely to, while asking people to do it faster increases the reversal rate.7 Others argue that it is a hybrid model, challenging the distinction between top-down and bottom-up.8

Jürgen Kornmeier, of the Institute for Frontier Areas of Psychology and Mental Health in Freiburg, Germany, along with colleagues, has suggested one hybrid model, which questions the distinction between top-down and bottom-up. As Kornmeier described to me, even the earliest activity in the eyes and early visual systems betrays top-down influence—and the information flow can by no means be assumed to be one-way. They suggest that even if we do not notice duck and rabbit, our brain may have actually registered the unreliability of the image subconsciously, and decided, in effect, not to spread the news. In this view, your brain itself is in on the trick. The only dupe left in the room is you.

None of which bodes well for the idea that policy or other debates can be solved by simply giving people accurate information. As research by Yale University law and psychology professor Dan Kahan has suggested, polarization does not happen with debates like climate change because one side is thinking more analytically, while the other wallows in unreasoned ignorance or heuristic biases.9 Rather, those subjects who tested highest on measures like “cognitive reflection” and scientific literacy were also most likely to display what he calls “ideologically motivated cognition.” They were paying the most attention, seeing the duck they knew was there.

Tom Vanderbilt writes on design, technology, science, and culture, among other subjects.

References

1. Brugger, P. & Brugger, S. The Easter Bunny in October: Is it disguised as a duck? Perceptual and Motor Skills 76, 577-578 (1993).

2. Mitroff, S.R., Sobel, D.M., & Gopnik, A. Reversing how to think about ambiguous figure reversals: Spontaneous alternating by uninformed observers. Perception 35, 709-715 (2006).

3. Granot, Y., Balcetis, E., Schneider, K.E., & Tyler, T.R. Justice is not blind: Visual attention exaggerates effects of group identification on legal punishment. Journal of Experimental Psychology: General (2014).

4. Van Bavel, J.J., Packer, D.J., & Cunningham, W.A. The neural substrates of in-group bias. Psychological Science 19, 1131-1139 (2008).

5. Coronel, J.C., Federmeier, K.D., & Gonsalves, B.D. Event-related potential evidence suggesting voters remember political events that never happened. Social Cognitive and Affective Neuroscience 9, 358-366 (2014).

6. Nyhan, B., Reifler, J., Richey, S. & Freed, G.L. Effective messages in vaccine promotion: A randomized trial. Pediatrics (2014). Retrieved from doi: 10.1542/peds.2013-2365

7. Kornmeier, J. & Bach, M. Ambiguous figures—what happens in the brain when perception changes but not the stimulus. Frontiers in Human Neuroscience 6 (2012). Retrieved from doi: 10.3389/fnhum.2012.00051

8. Kornmeier, J. & Bach, M. Object perception: When our brain is impressed but we do not notice it. Journal of Vision 9, 1-10 (2009).

9. Kahan, D.M. Ideology, motivated reasoning, and cognitive reflection: An experimental study. Judgment and Decision Making 8, 407-424 (2013).