What is it exactly that makes humans so smart? In his seminal 1950 paper, “Computing Machinery and Intelligence,” Alan Turing argued human intelligence was the result of complex symbolic reasoning. Marvin Minsky, the computer scientist who cofounded the artificial intelligence lab at the Massachusetts Institute of Technology, also maintained that reasoning, the ability to think in a multiplicity of ways that are hierarchical, was what made humans human.

Patrick Henry Winston begged to differ. “I think Turing and Minsky were wrong,” he told me in 2017. “We forgive them because they were smart and mathematicians, but like most mathematicians, they thought reasoning is the key, not the byproduct.” Winston, a professor of computer science at MIT, and a former director of its AI lab, was convinced the key to human intelligence was storytelling. “My belief is the distinguishing characteristic of humanity is this keystone ability to have descriptions with which we construct stories. I think stories are what make us different from chimpanzees and Neanderthals. And if story-understanding is really where it’s at, we can’t understand our intelligence until we understand that aspect of it.” Winston believed storytelling was so central to human intelligence, it was also the key to creating sentient machines in the future.

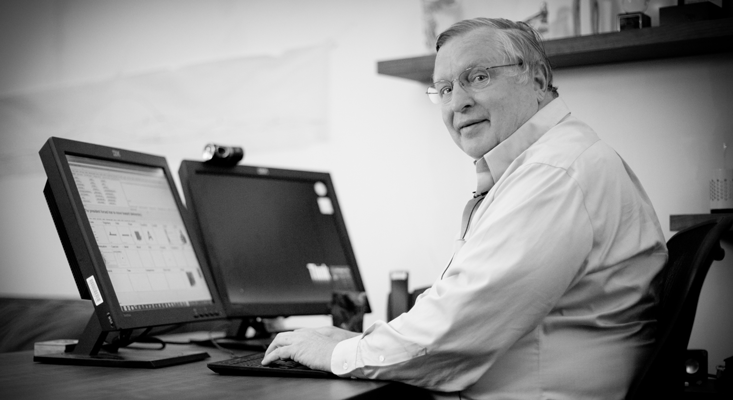

Winston died in July 2019 at age 76. He is remembered as a remarkable scholar, a mensch, and a beloved mentor to generations of MIT scholars, a teacher who always emphasized to his students the critical importance of developing their writing and speaking skills. “You cannot lead if you cannot communicate,” he taught them.

I met Winston while doing research for my book Wayfinding: The Science and Mystery of How Humans Navigate the World. I had become fascinated by storytelling and the possibility that a distinct part of the brain, the hippocampus, had contributed to our species’ capacity for building narratives and stories in the mind. The hippocampus is what allows us to remember past events, what is known as episodic memory, and imagine the future. It is also where we create representations of space, the so-called cognitive maps that we use every day to plot routes and navigate to a destination. Could it be that our species’ need to travel in search of water, food, and shelter had contributed to our ability to organize our journeys into narrative sequences with beginnings, middles, and ends? Was storytelling a kind of evolutionary result of navigating?

The anthropologist Michelle Scalise Sugiyama once spent a year researching oral traditions of foraging societies and discovered how widespread the connection between stories and navigation is across the world’s cultures. In her analysis of nearly 3,000 stories from Africa, Australia, Asia, North America, and South America, she found that 86 percent contain topographic information—travel routes, landmarks, the locations of resources like water, game, plants, and campsites. She argues that the human mind—initially designed to encode space—found a way to transmit topographical information verbally by transforming it into social information in the form of stories. “Narrative serves as a vehicle for storing and transmitting information critical to survival and reproduction,” Sugiyama has written. “The creation of landmarks by a human agent or agents in the course of a journey is a common motif in forager oral traditions … By linking discrete landmarks, these tales in effect chart the region in which they take place, forming story maps.”


In indigenous North American cultures, examples of story maps abound from the Mojave to the Gitksan. In 1898 Franz Boas described a common feature of the stories of the Salish peoples, a character known as a “culture hero,” “transformer,” or “trickster” who gives the universe its shape through his travels and whose adventures are passed down through generations. In her description of Pawnee migrations across the Midwest plains, anthropologist Gene Weltfish describes how each band followed a preferred route, some of which had few identifiable landmarks and were easy to get lost on. To successfully navigate, “the Pawnees had a detailed knowledge of every aspect of the land,” she wrote in The Lost Universe. “Its topography was in their minds like a series of vivid pictorial images, each a configuration where this or that event had happened in the past to make it memorable. This was especially true of the old men who had the richest store of knowledge in this respect.” Similarly, the cultural and linguistic anthropologist Keith Basso wrote in his book Wisdom Sits in Places that the Apache frequently cite the names of places in sequence, recreating a journey. One day Basso was stringing barbed wire with two Apache cowboys when he heard one of them talking to himself quietly, reciting a list of place names for nearly 10 minutes straight. The cowboy told Basso that he “talked names” all the time, that it allowed him to “ride that way in my mind.”

I had not expected my research to lead me to the field of AI, but I discovered that Winston had been exploring similar ideas for years in the course of his groundbreaking research into human intelligence. He was fascinated, for instance, by memory studies in rats showing bursts of activity in the animals’ hippocampal cells as they navigated mazes, and how the rats would later relive the same exact routes in their sleep. As the neuroscientist Matt Wilson recently recalled, Winston “would always ask, ‘Do you think that what you are seeing are the rats telling themselves stories?’ And I would respond, ‘Yes, that’s exactly what I think they are doing!’” Winston’s tremendous intellectual curiosity had led him to see connections between moving through space, sequential thinking, storytelling, and human intelligence, and over the course of the spring of 2017 he graciously allowed me to sit in on his course, “The Human Intelligence Enterprise,” and visit his offices to understand these ideas for myself.

I first met Winston at his second-floor office in the Stata Center on the MIT campus, a surreal 720,000-square-foot Alice in Wonderland building designed by Frank Gehry with walls and corners crashing into one another at sharp angles. Winston, white-haired, wearing thin wire-framed glasses, sat at his desk. Behind him was an assortment of books on the Civil War, of which he was a passionate amateur scholar (one of his favorite books was Battle Cry of Freedom by James M. McPherson, comparable only to Shakespeare, in his opinion), but it was the painting above his head that caught my attention. It was a framed reproduction of Michelangelo’s fresco The Creation of Adam, depicting the moment just before genesis, the fingers of God and Adam hanging in the air, about to touch and set into motion the biblical story of man on earth.

Winston had been at MIT for most of his life; he was an undergraduate there, receiving a degree in 1965 in electrical engineering before doing a doctoral dissertation under Minsky. It was Winston who took over the Artificial Intelligence Laboratory when Minsky moved on to create the influential Media Lab. “I do screwball AI,” Winston told me. Within this niche of screwball AI, Winston created a new computational theory of human intelligence. He believed that to evolve AI beyond systems that can simply win at chess or Jeopardy!, to build systems that might actually begin to approach the intelligence of a human child, scientists must figure out what made humans smart in the first place.


Winston drew on linguistics, in particular a hypothesis developed by fellow MIT professors Robert Berwick and Noam Chomsky, to explain how language evolved in humans. Their idea is that humans were the only species who evolved the cognitive ability to do something called “Merge.” This linguistic “operation” is when a person takes two elements from a conceptual system—say “ate” and “apples”—and merges them into a single new object, which can then be merged with another object—say “Patrick,” to form “Patrick ate apples”—and so on in an almost endlessly complex nesting of hierarchical concepts. This, they believe, is the central and universal characteristic of human language, present in almost everything we do.
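One way to picture Merge is as a function that pairs two objects into a new one, which can itself be merged again. What follows is only a toy Python sketch, not Berwick and Chomsky’s formalism: the names `merge`, `depth`, and `words`, and the choice of tuples to stand in for merged objects, are assumptions made for illustration.

```python
def merge(a, b):
    """Combine two objects into a single new object (here, a tuple)."""
    return (a, b)


def depth(obj):
    """Count levels of nesting; a bare word has depth 0."""
    if isinstance(obj, tuple):
        return 1 + max(depth(part) for part in obj)
    return 0


def words(obj):
    """Flatten a merged object back into its words, left to right."""
    if isinstance(obj, tuple):
        return [w for part in obj for w in words(part)]
    return [obj]


# "ate" and "apples" merge first; the result then merges with "Patrick".
verb_phrase = merge("ate", "apples")
sentence = merge("Patrick", verb_phrase)
```

Because the output of `merge` is a valid input to `merge`, the nesting can continue indefinitely, which is the hierarchical property the hypothesis emphasizes.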

“We can construct these elaborate castles and stories in our head. No other animals do that,” Berwick said. The theory flips the common explanation of why language developed: not as a tool for interpersonal communication but as an instrument of internal thought. Language, they argue, is not sound with meaning but meaning with sound.

For Winston, the Merge hypothesis represented the best explanation so far for how humans developed story understanding. But Winston also believed that the ability to create narrative stems from spatial navigation. “I do think much of our understanding comes from the physical world, and that involves things moving through it,” he said. “The ability to put things in order, I think that comes out of spatial navigation. We benefit from many things that were already there, and sequencing was one of the things that was already there.” He continued, “From an AI perspective, what merge gives you is the ability to build a symbolic description. We already had the ability to arrange things in sequences, and this new symbolic capability gave us the ability to have stories, listen to stories, tell stories, to combine two stories together to get a new story, to be creative.” Winston called it the Strong Story Hypothesis.

Winston decided to see if he could create a program that could understand a story. Not just read or process a story but glean lessons from it, even communicate its own insights about the motivations of its protagonists. What were the most basic functions that would be needed to give a machine this ability, and what would they reveal about human computation?

Winston and his team decided to call their machine Genesis. They started to think about commonsense rules it would need to function. The first rule they created was deduction, the ability to derive a conclusion by reasoning. “We knew about deduction but didn’t have anything else until we tried to create Genesis,” Winston told me. “So far we have learned we need seven kinds of rules to handle the stories.” For example, Genesis needs what they called a “censor rule,” which says that if one thing is true, then something else can’t be true. For instance, if a character is dead, that character cannot become happy.
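A censor rule of this kind can be sketched in a few lines of Python. This is not Genesis’s actual code, which works on English text. The `CensorRule` class, the `apply_censors` function, and the sample facts are all hypothetical, chosen only to show the idea of one fact ruling out another.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CensorRule:
    condition: str  # property that, when true of a character...
    blocked: str    # ...rules out this other property


def apply_censors(facts, candidates, rules):
    """Return the candidate inferences not ruled out by any censor rule.

    facts and candidates are sets of (character, property) pairs.
    """
    allowed = set()
    for character, prop in candidates:
        censored = any(
            rule.blocked == prop and (character, rule.condition) in facts
            for rule in rules
        )
        if not censored:
            allowed.add((character, prop))
    return allowed


# Hypothetical example: a dead character cannot become happy.
rules = [CensorRule(condition="dead", blocked="happy")]
facts = {("Duncan", "dead"), ("Macbeth", "king")}
candidates = {("Duncan", "happy"), ("Macbeth", "happy")}
result = apply_censors(facts, candidates, rules)
```

Here the inference that Duncan becomes happy is dropped because he is dead, while the same inference about Macbeth survives.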

When given a story, Genesis creates what is called a representational foundation: a graph that breaks the story down and connects its pieces through classification threads and case frames and expresses properties like relations, actions, and sequences. Then Genesis uses a simple search function to identify concept patterns that emerge from causal connections, in a sense reflecting on its first reading. Based on this process and the seven rule types, the program starts identifying themes and concepts that aren’t explicitly stated in the text of the story. What fascinated Winston initially was that Genesis required a relatively small set of rule types in order to successfully engage in story understanding at a level that appears to approach human understanding. “We once thought that we would need a whole lot of representations,” Winston said. “We now know that we can get away with just a few.”

“Would you like a demonstration?” he asked me. I rolled my chair around to the other side of his desk and watched as Winston opened the Genesis program. “Everything in Genesis is in English, the stories, the knowledge,” he said. He typed a sentence into a text window in the program: “A bird flew to a tree.” Below the text window I saw case frames listed. Genesis had identified the actor of the story as the bird, the action as fly, and the destination as tree. There was even a “trajectory” frame illustrating the sequence of action pictorially by showing an arrow hitting a vertical line. Then Winston changed the description to “A bird flew toward a tree.” Now the arrow stopped short of the line.

“Now let’s try Macbeth,” Winston said. He opened up a written version of Macbeth, translated from Shakespearean language to simple English. Gone were the quotations and metaphors; the summarized storyline had been shrunk to about 100 sentences and included only the character types and the sequence of events. In just a few seconds Genesis read the summary and then presented us with a visualization of the story. Winston called such visualizations “elaboration graphs.” At the top were some 20 boxes containing information such as “Lady Macbeth is Macbeth’s wife” and “Macbeth murders Duncan.” Below that were lines connecting to other boxes, connecting explicit and inferred elements of the story. What did Genesis think Macbeth was about? “Pyrrhic victory and revenge,” it told us. None of these words appeared in the text of the story. Winston went back to a main navigation page and clicked on a box called “selfstory.” Now we saw, in a window called “Introspection,” the process of Genesis’s own understanding of the story, the sequence of its reasoning and inference. “I think that’s cool because Genesis is a program that is in some respects self-aware,” he said.

Building complex story understanding in machines could help us create better models for education, political systems, medicine, and urban planning. Imagine, for example, a machine that possessed not just a few dozen rules by which to understand a text but thousands of rules that it could apply to a text hundreds of pages long. Imagine such a machine employed by the FBI, given an intractable murder case with puzzling evidence and a multitude of potential perpetrators. Or inside the Situation Room, offering American diplomats and military intelligence its own analysis of the motives of Russian hackers or Chinese belligerence in the South China Sea, calculating predictions for future behavior based on an analysis of a hundred years of history.

Winston and his students used Genesis to analyze a 2007 cyberwar between Estonia and Russia. They also found creative ways to test its intelligence, prompting it to tell stories itself, or tweaking its perspective to read a story from different psychological profiles—Asian vs. European, for example. One of Winston’s graduate students gave Genesis the ability to teach and persuade readers. For example, the student requested that Genesis make the woodcutter look good in the story of Hansel and Gretel. Genesis responded by adding sentences that emphasized the character’s virtuousness.


Winston’s students found a way to give Genesis schizophrenia. “We think some aspects of schizophrenia are the consequence of fundamentally broken story systems,” Winston said. He showed me a cartoon illustration. It depicted a little girl trying to open a door handle that is too high to reach, and then fetching an umbrella. A healthy person would infer the girl is getting the umbrella to extend her reach and open the door; a person with schizophrenia does what is called hyperpresumption, inferring that the girl is getting an umbrella to go outside in the rain. To get Genesis to think like a person with schizophrenia in this way, Winston and his students switched two lines of code in the program, so that Genesis’s search for explanations that tie story elements together ran after its search for the default answer, the one in which the girl is going out into the rain. Hyperpresumption, according to Genesis, is a dysfunction of sequencing in the brain. They called it the Faulty Story Mechanism Corollary.
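The effect of swapping the order of two searches can be illustrated with a small Python sketch. It is not the Genesis code, and every name in it (`DEFAULT_USES`, `explain_by_connection`, `explain_by_default`, `explain`, and the story data) is a hypothetical stand-in. The only point is that the first strategy to return an answer wins, so the order decides the reading.

```python
# Assumed background knowledge: the typical use of an object, story aside.
DEFAULT_USES = {"umbrella": "to go outside in the rain"}


def explain_by_connection(event, story):
    """Look for an unmet goal in the story that the object could serve."""
    for goal, helpful_tools in story["unmet_goals"].items():
        if event["object"] in helpful_tools:
            return f"to {goal}"
    return None


def explain_by_default(event, story):
    """Fall back on the typical use of the object, ignoring the story."""
    return DEFAULT_USES.get(event["object"])


def explain(event, story, strategies):
    """Return the first explanation produced, trying strategies in order."""
    for strategy in strategies:
        answer = strategy(event, story)
        if answer is not None:
            return answer
    return None


story = {"unmet_goals": {"reach the door handle": {"umbrella", "stick"}}}
event = {"actor": "girl", "action": "fetch", "object": "umbrella"}

# Story-connecting search first: the umbrella is tied to the door.
typical = explain(event, story, [explain_by_connection, explain_by_default])
# Default answer first: the story context is never consulted.
hyperpresumptive = explain(event, story, [explain_by_default, explain_by_connection])
```

With the story-connecting search first, the sketch reads the umbrella as a tool for the door. With the order reversed, it settles on the rain before the story is ever consulted.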

One of Winston’s former students at MIT was Wolfgang Victor Hayden Yarlott, an engineering and computer science major who graduated in 2014 and is now doing a Ph.D. at Florida International University. Yarlott is a Crow Indian and had an idea: If Winston was correct about the Strong Story Hypothesis, that stories are a key part of human intelligence, Genesis needed to demonstrate that it understood stories from all cultures, including indigenous cultures such as the Crow. “Stories are how intelligence and knowledge is represented and passed down from generation to generation— an inability to understand any culture’s stories means that either the hypotheses are wrong or the Genesis system needs more work,” Yarlott wrote in his thesis.

Yarlott chose a set of five Crow stories for Genesis to read, including creation myths that he’d heard during his childhood in southern Montana. His challenge was to create recognition in Genesis of chains of events that seemed unrelated, of supernatural concepts like medicine (which has a magic-like quality in Crow folklore), and of the “trickster” personality traits. Those are all elements in the Crow stories that, as Yarlott determined, distinguish them from the canon of Anglo-European storytelling. The creation myth “Old Man Coyote Makes the World” features animals that communicate with Old Man Coyote just as people do. As Yarlott points out, there is an amazing display of power or medicine that enables Old Man Coyote to create—but there are explicitly unknowable events that take place as part of the story, like, “How he did this, no one can imagine.” To solve these issues Yarlott had to give Genesis new concept patterns to recognize. For example,

Start description of “Creation”.

XX and YY are entities.

YY’s not existing leads to XX’s creating YY.

The end.

Start description of “Successful trickster”.

XX is a person.

YY is an entity.

XX’s wanting to fool YY leads to XX’s fooling YY.

The end.

Start description of “Vision Quest”.

XX is a person.

YY is a place.

XX’s traveling to YY leads to XX’s having a vision.

The end.
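Concept patterns like these can be read as templates over “leads to” relations. The Python sketch below shows one way such a template might be matched, assuming a story has already been reduced to events and causal links. The representation, the function names `leads_to` and `find_successful_trickster`, and the sample events are assumptions for illustration, not Yarlott’s or Genesis’s implementation, and the events are invented rather than taken from a Crow story.

```python
from collections import deque


def leads_to(edges, start, goal):
    """True if `goal` is reachable from `start` by following causal edges."""
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return True
        for cause, effect in edges:
            if cause == node and effect not in seen:
                seen.add(effect)
                queue.append(effect)
    return False


def find_successful_trickster(events, edges):
    """Find (XX, YY) pairs where XX's wanting to fool YY leads to XX's fooling YY."""
    matches = []
    for subject, verb, obj in events:
        if verb == "wants to fool":
            if leads_to(edges, (subject, verb, obj), (subject, "fools", obj)):
                matches.append((subject, obj))
    return matches


# Invented events: the wanting leads to the fooling through an intermediate step.
want = ("Trickster", "wants to fool", "Victim")
plan = ("Trickster", "sets", "Trap")
fool = ("Trickster", "fools", "Victim")
events = [want, plan, fool]
edges = [(want, plan), (plan, fool)]
found = find_successful_trickster(events, edges)
```

The search follows chains of causes, so the pattern is found even though the story never states directly that the wanting caused the fooling.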

The stories that Yarlott told to Genesis read like this:

Start experiment.

Note that “Old Man Coyote” is a name.

Note that “Little_Duck” is a name.

Note that “Big_Duck” is a name.

Note that “Cirape” is a name.

Insert file Crow commonsense knowledge.

Insert file Crow reflective knowledge.

“Trickster” is a kind of personality trait.

Start story titled “Old Man Coyote Makes the World”.

Old Man Coyote is a person.

Little_Duck is a duck.

Big_Duck is a duck.

Cirape is a coyote.

Mud is an object.

“the tradition of wife stealing” is a thing.

Old Man Coyote saw emptiness because the world didn’t exist.

Old Man Coyote doesn’t want emptiness.

Old Man Coyote tries to get rid of emptiness.

Yarlott found that Genesis was capable of making dozens of inferences about the story and several discoveries, too. It triggered concept patterns for ideas that weren’t explicitly stated in the story, recognizing the themes of violated belief, origin story, medicine man, and creation. It seemed to comprehend the elements of Crow literature, from unknowable events to the concept of medicine to the uniform treatment of all beings and the idea of differences as a source of strength. “I believe this is a solid step toward showing both that Genesis is capable of handling stories from Crow literature,” he surmised, “and that Genesis is a global system for story understanding, regardless of the culture the stories come from.”

Genesis has obvious shortcomings: So far it only understands elementary language stripped of metaphor, dialogue, complex expression, and quotation. To grow its capacity for understanding, Genesis needs more concept patterns—in other words, more teaching. How many thousands of stories does a child hear, create, and read as she grows into adulthood? Perhaps hundreds of thousands. But even if Genesis could access the same number of stories there are probably more fundamental limitations to the machine’s potential.

One rainy day, I went to see Winston teach his popular undergraduate course at MIT, “Intro to Artificial Intelligence.” I listened to him explain the Strong Story Hypothesis to hundreds of students and demonstrate Genesis’s abilities. “Can Watson do this?” he quipped. But then he posed a series of questions to his young students casting doubt on his own invention.

“Can we think without language?”

The room was quiet.

“Well, we know from those whose language cortex is gone that they can’t read or speak or understand spoken language,” he explained. “Are they stupid? They can still play chess, do arithmetic, find hiding places, process music. Even if the external language apparatus is blown away, I think they still have inner language.”

He paused. “Can we think without a body?”

Quiet.

“What does Genesis know about love if it doesn’t have a hormonal system?” he said. “What does it know about dying if it doesn’t have a body that rots? Can it still be intelligent?”

Love and death, I marveled, as students filed out of the lecture hall. The stuff of the most epic stories on earth. Without embodiment in space and time, could Genesis ever understand these universal human conditions? Winston thought that one of the next questions that needed to be answered was how much humans learn by self-discovery. Genesis had been taught in the same manner that a parent would teach a child, but children also have direct experience and what he called “surrogate experience” through reading that helps them to formulate rules and concepts independently. Could this self-discovery process be somehow computationally modeled? Or, I wondered, could Genesis somehow reproduce the kind of wayfinding across landscape and mind that has made humans human? As Winston himself said at the close of his lecture, “We’ll leave that part of the story for another time.”

M.R. O’Connor is the author of Wayfinding: The Science and Mystery of How Humans Navigate the World. She writes about the politics and ethics of science, technology, and conservation. Her reporting has appeared online in The New Yorker, Science, Foreign Policy, and The Atlantic. She was an MIT Knight Science Journalism Fellow in 2016/17.

Adapted from Wayfinding: The Science and Mystery of How Humans Navigate the World by M.R. O’Connor. Copyright © 2019 by the author and reprinted by permission of St. Martin’s Publishing Group.

Lead image: Vasilyev Alexandr / Shutterstock