When Google’s AlphaGo computer program triumphed over a Go expert earlier this year, a human member of the Google team had to physically move the pieces. Manuela Veloso, the head of Carnegie Mellon’s machine learning department, would have done it differently. “I’d require the machine to move the pieces like I do,” she says. “That’s the world in which I live, which is a physical world.”

It sounds simple enough. If Google can make cars that drive themselves, surely it could add robotic arms to a Go match. Even in 1997, I.B.M. could have given Deep Blue robotic arms in its match against Garry Kasparov. To Veloso, though, the challenge is not building a robot to play on a given board in given conditions, but building one able to play on any board. “Imagine all the different types of chess pieces that humans handle perfectly fine. How would we get a robot to detect these pieces and move them on any type of board, with any number of lighting conditions, and never let the piece fall except on the right square? Oh God,” she says.

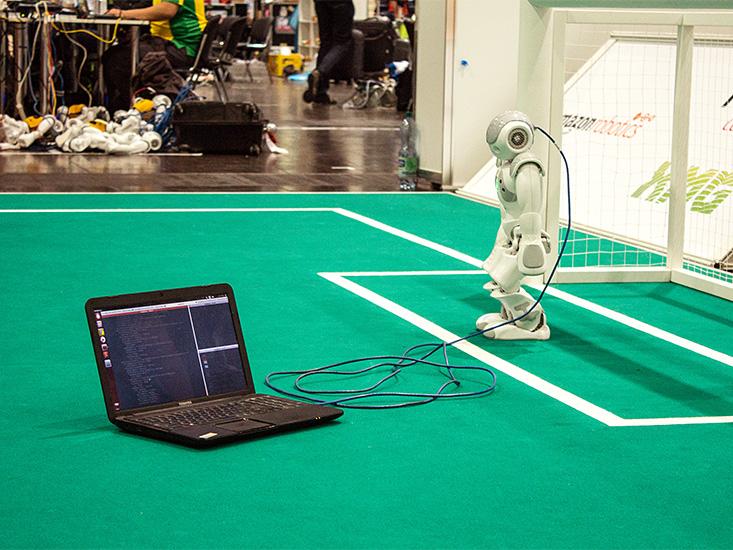

And if the idiosyncrasies of static chess pieces are hard for modern robotics, imagine how hard it would be to deal with a chaotic, rolling soccer ball. Then add a whole team of other robots chasing that same ball. That’s the setup for RoboCup, the annual robotic soccer competition Veloso co-founded in 1997.

The cost of bringing robots into her, and our, world is great. A game like Go is a one-versus-one game of perfect information—both sides can see the entire board and can make their moves with perfect accuracy. Two Go games could, theoretically, be identical. But the contingencies of the physical world make each soccer game different and entirely unpredictable. “As soon as you inject the ball in there—the physics of the ball, the gravity, the friction on the carpet—it’s not reproducible. How do you go about writing a piece of code that plays a game without knowing what’s going to happen?” says Veloso.

RoboCup robots range in size all the way from small (think oversized coffee mugs) to kid-sized (think 2-year-old) to adult-sized. Robot size tends to be inversely correlated with apparent soccer ability. The coffee-mug robots zip around like springtime squirrels and make what look like intentional, soccer-worthy passes and goals. In the adult-sized league, though, the robots move cautiously and inelegantly. They stumble, often. They fall, often. Videos of adult-sized robots shooting on goal need to be sped up to even be watchable (like, say, videos of plants growing).

Stuck as they are in their shiny bodies, the RoboCup robot players face some of the same problems that human players (also stuck) do. Sensors are faulty, communication is noisy, joints break, vision is narrow, and perfect plans are undone by physical constraints or the other team. The stumbling, flailing, and curiously slow robots do something remarkable while struggling through their games: They seem to create the space for complex behaviors, some of which can look vaguely familiar, and even human. Watching the robots try to play team soccer we can see intimations of cooperation, aggression, tribalism, and even, at times, a kind of individuality.

David Rand, a researcher on the psychology of cooperation and director of the Human Cooperation Lab at Yale, told us, “It certainly seems like if you want robots that play team sports, you have to build in some sense of us versus them.” The robots’ first task in the physical world of soccer is something very, well, human: They must, as on soccer playgrounds around the world, choose sides. Veloso says that the abstract idea of us versus them is not programmed into the robots, but something like a specific instance of it is. “I think it’s more like ‘These guys are our team and those are not’,” she says.

In one type of RoboCup league, the robots are controlled from the sidelines and choosing sides is easy—a centralized computer “coach” simply tells each robot where to go and what to do. Having one computer control everything is more akin to the A.I.-mastered games like chess and Go. “To play a game of chess it’s just one mind. It’s not many minds,” says Veloso.

But in another type of RoboCup league, things get interesting. Each of the robots in the “many mind,” or decentralized, league is entirely autonomous and has all its sensors and computers, and therefore its decision making, on board. It is a bit as if each of the 32 chess pieces were suddenly on its own, without a human grandmaster or Deep Blue telling it what to do. First, each piece would need to determine whether it is white or black. Next, it would try to figure out, from its own vantage point, how to cooperate strategically with its teammates. Soccer is similarly an independent, multi-robot problem and thus a test bed for research on coordination, cooperation, and the emergence of strategies among individual robots, each of which knows some of the problem, but none of which knows all of it. “You cannot win a game of soccer with a single robot on the field,” says Veloso.

The exact method each soccer-playing robot uses to determine its team depends on the team but, in general, the robots use a combination of visual cues like jersey color, positional information about other robots, between-robot communication (the robot equivalent of “Hey, I’m on your side!”), and which side of the field they remember starting on. “Some teams use vision, some teams use the position. Some teams just see another robot in front of them and they don’t know if they’re teammates or not,” says Veloso.
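One way to picture this combination of cues is as a weighted vote. The sketch below is purely illustrative and is not drawn from any actual RoboCup team's software: the `Observation` fields, the weights, and the threshold are all assumptions made up for the example. Each cue that is available votes "teammate" or "opponent," missing cues are skipped, and a robot with too little evidence stays undecided, much like the robots Veloso describes that cannot tell who is in front of them.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Observation:
    """What one robot perceives about another (all fields are hypothetical)."""
    jersey_matches: Optional[bool] = None   # vision: jersey color equals ours
    on_our_half: Optional[bool] = None      # position: on the side we started on
    claims_teammate: Optional[bool] = None  # communication: "I'm on your side!"


# Illustrative weights only; a real team would tune or learn these.
WEIGHTS = {"jersey_matches": 0.5, "on_our_half": 0.2, "claims_teammate": 0.8}


def teammate_score(obs: Observation) -> float:
    """Return a score in [-1, 1]; positive leans teammate, 0 means no evidence."""
    total = 0.0
    weight_sum = 0.0
    for cue, weight in WEIGHTS.items():
        value = getattr(obs, cue)
        if value is None:  # cue unavailable (e.g. robot not in view)
            continue
        total += weight if value else -weight
        weight_sum += weight
    return total / weight_sum if weight_sum else 0.0


def classify(obs: Observation, threshold: float = 0.25) -> str:
    """Label another robot as 'teammate', 'opponent', or 'unknown'."""
    score = teammate_score(obs)
    if score > threshold:
        return "teammate"
    if score < -threshold:
        return "opponent"
    return "unknown"


if __name__ == "__main__":
    print(classify(Observation(jersey_matches=True, claims_teammate=True)))
    print(classify(Observation()))  # no cues at all
```

In this sketch, conflicting cues pull the score toward zero, so a robot that sees the right jersey but hears the wrong team ID ends up unsure rather than confidently wrong.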

From something as simple as sides, behaviors begin to emerge in RoboCup that would be familiar to anyone who has played real soccer. For one, the robots, sure of their team but insecure in their new bodies, appear to push members of the other team. (Yes, RoboCup has yellow and red cards.) One year, a robot team got caught pushing so often that the only robot left at the end of the game was the goalie; all the rest had been ejected. Apparently, the goalie fought valiantly, but the team ultimately lost.

Veloso explains that this apparently aggressive behavior is actually anything but. It emerges mostly from poor motor balance as opposing robots collide on the field. “When there are two of them close by the ball, they are inevitably balancing on one leg to try to kick. And the other one is trying to get to the ball, too.” Very often, she says, this makes them fall. There is some programmed intentionality to it, too: If it is a teammate nearby, one robot will step back and avoid fighting for the ball. The robots, in other words, have different rule sets for in-groups and out-groups, a simple enough precursor to teamwork, and a kind of tribalism.
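The in-group and out-group rule can be written down in a few lines. This is a minimal sketch under stated assumptions, not any team's real behavior code: the function name, the string labels, and the tie-breaking idea that the robot farther from the ball is the one to yield are all hypothetical choices made for illustration.

```python
def ball_action(nearby_robot: str, i_am_closer: bool) -> str:
    """Pick an action when another robot is near the ball.

    nearby_robot: 'teammate' or 'opponent' (hypothetical labels).
    i_am_closer:  assumed tie-breaker; the source only says that
                  one of two teammates steps back.
    """
    if nearby_robot == "teammate":
        # In-group rule: do not fight a teammate for the ball.
        return "kick" if i_am_closer else "step_back"
    # Out-group rule: go for the ball, collisions and all.
    return "contest"


if __name__ == "__main__":
    print(ball_action("teammate", i_am_closer=False))
    print(ball_action("opponent", i_am_closer=False))
```

Nothing in the rule mentions pushing. In this picture, the apparent aggression comes only from two one-legged robots contesting the same ball.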

Robots will even occasionally switch tribal allegiance, but, as with their pushing, the reason is more bug than feature. Confused robots will, on occasion, accidentally switch sides after, say, visually mistaking which direction they are going. (A not uncommon occurrence among human athletes, even at the professional level.)

As prosaic as switching sides might seem, it is proof of the capacity to be fluid with one’s allegiances. David Rand explains that a robot that was tribal in the human sense would need the ability to abstractly and fluidly change tribes. Human fealties are, after all, often heavily nested. Two warring parties might align in the face of a common enemy. This was shown rather convincingly in a psychology study from the 1950s, where 24 young boys, all unacquainted, were split evenly into two groups. At first, the groups knew nothing of each other, but when the two groups were later made to compete, between-group hostility became so strong that they had to be separated. It was only after the introduction of a made-up third group of “vandals” and a shared goal that the two groups worked together and, finally, started to cooperate. “This is a clear feature of human psychology, that the us and them divisioning is super fluid,” Rand explains.

Watching RoboCup robots choose sides based on jersey color and then change them in a haze on the field, and knowing what we know all too well about humans and tribes, one cannot help but see early echoes of tribal behavior: Altruism with each well-intentioned pass, parochialism in each accidental push.

It might have been this kind of behavior that prompted the late Nobel Prize-winning social scientist Herb Simon, widely credited with launching the field of artificial intelligence, to say that he had been “amazed, amused, gratified, and instructed” by what he saw in early robot soccer matches. “We have seen in the soccer games, an entire social drama, played out with far less skill (so far) than professional human soccer, but with all the important components of the latter clearly visible,” he wrote.

The starring role in this drama, besides the robots, is the messy, unpredictable real world. RoboCup reinforces arguments for the so-called embodied cognition approach to cognitive science and artificial intelligence. Roughly, this approach claims that the physical layer of the world has to be taken into account in order to understand any kind of intelligence in embodied creatures—humans, animals, and now, soccer-playing robots. We can’t ever hope to understand minds, or programs, out of the context of the physical bodies they control or the physical environments they are tasked with surviving in.

RoboCup researchers hope one day to do for soccer what I.B.M. did for chess: By 2050, they hope to field a team able to beat the reigning human World Cup champions. To do so, according to their roadmap, they must confront both the challenges of the physical world and the difficulties of teamwork. By 2025, they hope to introduce jumping and headers into the robot repertoire as well as have a team of six cooperating robots. By 2030, teams of eight robots are expected to be able to score against a human goalie, defend against a human kick, and be competitive against an amateur human team. By 2035, the robots are expected to tolerate rain and dirt, allowing outdoor play. And by 2040, the goal is to have 11 robots playing a full-field, FIFA-compliant game while being able to both score and defend against star human soccer players.

Whatever path the development of RoboCup takes, it seems inevitable that its robots will not just play against humans, but alongside them. Veloso sees this as a natural progression: “Why would humans develop the robots not to play with them?”

Even weirder, Veloso says that some robots may, one day, watch from the stands. RoboCup researchers have already tried to make robotic sports fans. During the 2013 RoboCup, hundreds of small robots were programmed to move and cheer when their sensors picked up noise above a certain level, the sonic equivalent of tiny robots participating in a stadium wave. “It was hilarious to see them all moving in response to what was happening in the game,” says Veloso. She explains that creating a true robotic sports fan, though, would be even harder than creating a player. To do so would be to solve a so-called “A.I.-complete” problem—the hardest in A.I.—because the definition of fandom is so inextricably tied to human desire, reward, emotion, and identity.

Giving robots complex bodies, though, may have already moved them one step closer to this A.I.-complete solution by giving them a key ingredient: individuality. In Werner Herzog’s new documentary, Lo and Behold, Reveries of the Connected World, a member of Veloso’s robotics team at Carnegie Mellon singles out one of their robots, “Number 8,” as their favorite. Number 8 has been playing for over a decade in the small-sized league. Veloso is partial, too. “We all love robot Number 8,” she said. The reason, simply, is that the robot is really good. Veloso explains that it is better at scoring than the other robots on the team, even though they all have the same code. “All these robots are manufactured manually, so maybe with Number 8, the parts are better polished,” she says, half-jokingly. “Who knows? I don’t know. I’m just telling you, Number 8 is better.”

The journalist Eric Simons argued in his book The Secret Lives of Sports Fans that being a sports fan is not just tribalistic but also a matter of self-identification and identity. It is easy to imagine that Robot Number 8 may live out its retirement not in a Carnegie Mellon parts basement, but in the 2050 RoboCup arena’s box seats. Rooting, if all goes well, for its old team.

Seth Frey is a cognitive scientist and Neukom Postdoctoral Fellow at Dartmouth College.

Patrick House is a neuroscientist and writer based in Palo Alto, California.