Most materials derive their macroscopic properties from their microscopic structure. A steel rod is hard, for instance, because its atoms form a repeating crystalline pattern that remains static over time. Water parts around your foot when you dip it into a lake because fluids don’t have that structure; their molecules move around randomly.

Then there’s glass, a strange in-between substance that has puzzled physicists for decades. Take a snapshot of the molecules in glass, and they’ll appear disordered just like a liquid’s. But most of the molecules barely move, making the material rigid like a solid.

Glass is formed by cooling certain liquids. As the temperature drops toward a certain point, the liquid's molecules slow down dramatically, yet their structural arrangement shows no obvious corresponding change. Explaining this slowdown, known as the glass transition, is a major open question.

Now, researchers at DeepMind, a Google-owned artificial intelligence company, have used AI to study what’s happening to the molecules in glass as it hardens. DeepMind’s artificial neural network was able to predict how the molecules move over extremely long timescales, using only a “snapshot” of their physical arrangement at one moment in time. According to DeepMind’s Victor Bapst, even though the microscopic structure of a glass appears featureless, “the structure is maybe more predictive of the dynamics than people thought.”

Peter Harrowell, who studies the glass transition at the University of Sydney, agrees. He said the new work “makes a stronger case” than ever before that in glass, “structure somehow encodes for the dynamics,” and so glass isn’t as disordered as a liquid after all.

Predicting Propensity

To understand what microscopic changes cause the glass transition, physicists need to relate two kinds of data: how the molecules in a glass are arranged in space, and how they (slowly) move over time. One way to link these is with a quantity called dynamic propensity: how far each molecule is likely to have moved by some specific time in the future, given the molecules' current positions. Propensity is found by calculating the molecules' trajectories using Newton's laws, starting from the same positions with many different random initial velocities, and then averaging the outcomes together.
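The averaging recipe can be sketched in a few lines. This is a minimal illustration under loudly stated assumptions, not the researchers' code: it uses a hypothetical toy system in which each particle sits in its own one-dimensional harmonic well with stiffness `k` (a stand-in for how tightly its neighbors cage it), integrates Newton's laws with the velocity Verlet method, and defines propensity as the squared displacement averaged over runs that share the same starting positions but draw fresh random velocities.

```python
import random


def simulate(start, stiffness, velocities, dt=0.01, n_steps=100):
    """Velocity-Verlet integration of Newton's laws (unit mass) for a
    hypothetical toy system: particle i feels the harmonic force
    -k_i * (x_i - start_i). Returns the final positions."""
    x = list(start)
    v = list(velocities)
    force = [-k * (xi - s) for k, xi, s in zip(stiffness, x, start)]
    for _ in range(n_steps):
        # Half-step velocity update, then a full-step position update.
        v = [vi + 0.5 * dt * fi for vi, fi in zip(v, force)]
        x = [xi + dt * vi for xi, vi in zip(x, v)]
        # Recompute forces at the new positions and finish the velocity update.
        force = [-k * (xi - s) for k, xi, s in zip(stiffness, x, start)]
        v = [vi + 0.5 * dt * fi for vi, fi in zip(v, force)]
    return x


def dynamic_propensity(start, stiffness, temperature, n_runs=200, seed=0, **kwargs):
    """Squared displacement of each particle, averaged over an ensemble of
    runs that all begin from the same positions but draw new random
    velocities (unit mass, Boltzmann constant = 1, so each velocity is
    drawn from Normal(0, sqrt(T)))."""
    rng = random.Random(seed)
    totals = [0.0] * len(start)
    for _ in range(n_runs):
        velocities = [rng.gauss(0.0, temperature ** 0.5) for _ in start]
        final = simulate(start, stiffness, velocities, **kwargs)
        for i, (xf, x0) in enumerate(zip(final, start)):
            totals[i] += (xf - x0) ** 2
    return [t / n_runs for t in totals]


# One structural snapshot: a loosely caged particle and a tightly caged one.
start = [0.0, 5.0]
stiffness = [1.0, 9.0]  # hypothetical local "cage" stiffness
print(dynamic_propensity(start, stiffness, temperature=1.0))
```

Every run starts from the identical snapshot, so differences between the two averages come only from structure (here, the assumed stiffness); the loosely caged particle should show a far larger propensity than the tightly caged one.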

By simulating these molecular dynamics, computers can generate “propensity maps” for thousands of glass molecules—but only on timescales of trillionths of a second. And molecules in glass, by definition, move extremely slowly. Computing their propensity to a horizon of seconds or more is “just impossible [for] normal computers because it takes too much time,” said Giulio Biroli, a condensed matter physicist at the École Normale Supérieure in France.
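A rough step count shows why. The sketch below assumes a simulation timestep of about one femtosecond (10^-15 seconds), a typical order of magnitude for atomistic molecular dynamics; the throughput of one million steps per wall-clock second is a purely hypothetical figure chosen for illustration.

```python
# Back-of-the-envelope cost of reaching long horizons with molecular dynamics.
# Assumption: a timestep of ~1 femtosecond. Times are kept as integer
# femtoseconds so the step counts are exact.
FS_PER_SECOND = 10**15


def steps_needed(horizon_fs, timestep_fs=1):
    """Number of integration steps needed to reach horizon_fs femtoseconds."""
    return horizon_fs // timestep_fs


picosecond = 10**3          # one trillionth of a second, in femtoseconds
one_second = FS_PER_SECOND  # one second, in femtoseconds

print(steps_needed(picosecond))  # 1000
print(steps_needed(one_second))  # 1000000000000000

# Hypothetical throughput: one million steps per wall-clock second.
steps_per_wall_second = 10**6
years = steps_needed(one_second) / steps_per_wall_second / (365.25 * 24 * 3600)
print(f"{years:.1f} years for a single trajectory")  # 31.7 years for a single trajectory
```

The gap between the two horizons is twelve orders of magnitude, and a propensity estimate needs many such trajectories from each starting configuration, not just one.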

What’s more, Biroli said, merely turning the crank on these simulations doesn’t produce much insight for physicists about what structural features, if any, could be causing the molecular propensities in glass.

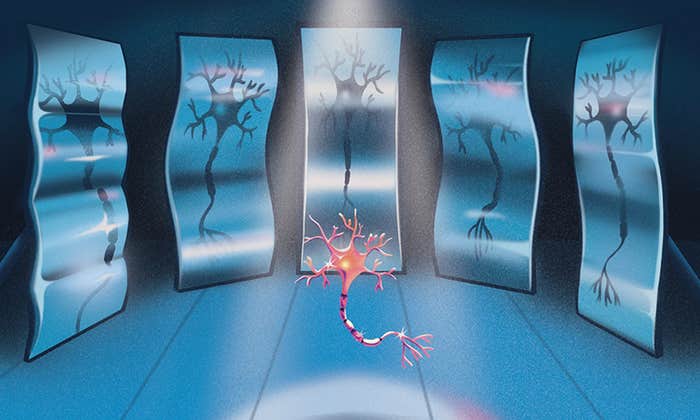

DeepMind’s researchers set out to train an AI system to predict propensities in glass without actually running the simulations, and to try to understand where these propensities come from. They used a special kind of artificial neural network that takes graphs (collections of nodes connected by lines) as input. Each node in the graph represents a molecule’s three-dimensional position in the glass; lines between nodes represent how far apart molecules are from each other. Because a graph neural network passes information along the connections between nodes, much as neighboring particles influence one another, “the graph neural network is very well suited to represent particles’ interaction,” Bapst said.
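To make the graph idea concrete, here is a minimal sketch, not DeepMind's architecture. It assumes a hypothetical cutoff radius: particles closer than the cutoff are joined by an edge labeled with their distance. One round of "message passing" then lets each node combine its own feature with distance-weighted features from its neighbors. The weighting rule `1 / (1 + distance)` is hand-picked for illustration; a trained network learns its update functions from data.

```python
import math
from itertools import combinations


def build_graph(positions, cutoff):
    """Connect every pair of particles closer than `cutoff`.

    Returns an adjacency dict: node -> list of (neighbor, distance)."""
    graph = {i: [] for i in range(len(positions))}
    for i, j in combinations(range(len(positions)), 2):
        d = math.dist(positions[i], positions[j])
        if d < cutoff:
            graph[i].append((j, d))
            graph[j].append((i, d))
    return graph


def message_passing_step(graph, features):
    """One hand-written update: each node adds its neighbors' features,
    weighted by 1 / (1 + distance) so closer neighbors contribute more."""
    updated = []
    for node, neighbors in graph.items():
        message = sum(features[j] / (1.0 + d) for j, d in neighbors)
        updated.append(features[node] + message)
    return updated


# Four particles in 3D; the last one is too far away to be connected.
positions = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (5.0, 5.0, 5.0)]
graph = build_graph(positions, cutoff=1.5)
features = [1.0, 0.0, 0.0, 0.0]
print(message_passing_step(graph, features))
```

After one step, only the direct neighbors of node 0 pick up part of its feature; the isolated particle is unaffected. Repeating the step spreads information one more connection per round.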

Bapst and his colleagues first used the results of simulations to train their AI system: They created a virtual cube of glass comprising 4,096 molecules, simulated the evolution of the molecules based on 400 unique starting positions at various temperatures, and computed the particles’ propensities in each case. After training the neural network to accurately predict these propensities, the researchers next fed 400 previously unseen particle configurations—“snapshots” of the glass molecules’ configurations—into the trained network.

Using only these structural snapshots, the neural network predicted the molecules’ propensities at different temperatures with unprecedented accuracy, reaching 463 times further into the future than the previous state-of-the-art machine learning prediction method.
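The article does not say how accuracy was scored. One common choice for this kind of task, assumed here, is the Pearson correlation between predicted and simulated propensities across the particles of each held-out configuration; a score of 1 means the predictions rise and fall exactly in step with the true values. In this minimal sketch, `model`, `snapshots`, and `truth` are hypothetical stand-ins used only to show the call pattern.

```python
import math


def pearson(predicted, actual):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(predicted) != len(actual) or len(predicted) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(predicted)
    mean_p = sum(predicted) / n
    mean_a = sum(actual) / n
    cov = sum((p - mean_p) * (a - mean_a) for p, a in zip(predicted, actual))
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    var_a = sum((a - mean_a) ** 2 for a in actual)
    return cov / math.sqrt(var_p * var_a)


def score_held_out(model, snapshots, true_propensities):
    """Average per-snapshot correlation over configurations the model never
    saw in training. `model` maps a snapshot to per-particle predictions."""
    scores = [pearson(model(s), t) for s, t in zip(snapshots, true_propensities)]
    return sum(scores) / len(scores)


# Hypothetical stand-in data and model, purely to show the call pattern.
snapshots = [[0.1, 0.4, 0.2], [0.3, 0.1, 0.5]]
truth = [[1.0, 4.0, 2.0], [3.0, 1.0, 5.0]]
print(score_held_out(lambda s: [10 * x for x in s], snapshots, truth))
```

Correlation rewards getting the ranking and relative size of propensities right, which is what matters for spotting which regions of a glass are about to move, even if the predicted values are off by a constant scale.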

Correlated Clues

According to Biroli, the DeepMind neural network’s ability to predict molecules’ future movements based on a mere snapshot of their current structure provides a powerful new way to explore the dynamics of glasses, and potentially other materials as well.

But what pattern did the network detect in those snapshots in order to make its predictions? The system can’t easily be reverse-engineered to reveal what it learned to pay attention to during training, a common problem for researchers trying to use AI to do science. But in this case, the researchers found some clues.

According to Agnieszka Grabska-Barwinska, a member of the team, the graph neural network learned to encode what physicists call a correlation length. As the network was trained on the simulation data, it came to exhibit the following tendency: When predicting propensities at higher temperatures (where molecular movement looks more liquid-like than solid), the network’s prediction for each node depended on information from nodes two or three connections away in the graph. But at lower temperatures, closer to the glass transition, that number, the correlation length, increased to five.
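"Connections away in the graph" has a simple computational meaning. In a graph neural network built from repeated message passing, where each node gathers information from its direct neighbors once per round, a node's output after k rounds can depend only on nodes at most k edges away. The sketch below computes that k-hop neighborhood with breadth-first search on a hypothetical chain of particles. It illustrates the reach that bounds how far such a network can look, not the analysis DeepMind used to measure which neighbors actually mattered.

```python
from collections import deque


def k_hop_neighborhood(adjacency, source, k):
    """Return the set of nodes within k edges of `source` (including it).

    After k message-passing rounds, these are the only nodes whose
    features can influence the output at `source`."""
    seen = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if seen[node] == k:
            continue  # don't expand past the k-th hop
        for neighbor in adjacency[node]:
            if neighbor not in seen:
                seen[neighbor] = seen[node] + 1
                queue.append(neighbor)
    return set(seen)


# Hypothetical chain of 11 particles: 0 - 1 - 2 - ... - 10.
chain = {i: [j for j in (i - 1, i + 1) if 0 <= j <= 10] for i in range(11)}
for hops in (2, 3, 5):
    print(hops, sorted(k_hop_neighborhood(chain, source=5, k=hops)))
# 2 [3, 4, 5, 6, 7]
# 3 [2, 3, 4, 5, 6, 7, 8]
# 5 [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
```

In a three-dimensional glass each extra hop reaches a whole new shell of particles, so going from two or three connections to five enlarges the relevant neighborhood far more than it does on this one-dimensional chain.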

“We see that the network extracts, as we lower the temperature, information from larger and larger neighborhoods” of particles, said Thomas Keck, a physicist on the DeepMind team. “At these different temperatures, the glass looks, to the naked eye, just identical. But the network sees something different as we go down.”

Increased correlation length is a hallmark of phase transitions, in which particles transition from a disordered to an ordered arrangement or vice versa. It happens, for instance, when the magnetic moments of the atoms in a block of iron collectively align so that the block becomes magnetized. As the block approaches this transition, each atom influences atoms farther and farther away in the block.
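Correlation length has a precise meaning in simple models of magnetism. In the one-dimensional Ising chain, a standard textbook model of interacting magnetic moments used here only as an illustration, the correlation between spins r sites apart in zero field decays as tanh(J/T)^r, so the correlation length is ξ = -1/ln tanh(J/T), measured in lattice spacings with Boltzmann's constant set to 1. The sketch shows ξ growing as the temperature drops. Unlike iron, the one-dimensional chain never actually orders at any temperature above zero, so it illustrates the growth of correlations rather than a true phase transition.

```python
import math


def ising_1d_correlation_length(temperature, coupling=1.0):
    """Correlation length of the zero-field 1D Ising chain, in lattice spacings.

    Spin-spin correlations decay as tanh(J/T)**r = exp(-r / xi),
    so xi = -1 / ln(tanh(J/T)). Units with Boltzmann's constant = 1."""
    return -1.0 / math.log(math.tanh(coupling / temperature))


for t in (2.0, 1.0, 0.5):
    print(f"T = {t}: correlation length = {ising_1d_correlation_length(t):.2f}")
```

Halving the temperature from 1.0 to 0.5 (with J = 1) stretches the correlation length from under four lattice spacings to more than twenty-five: each spin "feels" partners much farther away, the same qualitative trend the network picked up in the cooling glass.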

To physicists like Biroli, the neural network’s ability to learn about correlation length and factor it into its predictions suggests that some hidden order must be developing in the structure of glass during the glass transition. Peter Wolynes, a glass expert at Rice University, said that the correlation length learned by the machine provides evidence that materials “approach a thermodynamic phase transition” as they become glassy.

Still, the knowledge gained by the neural network doesn’t easily translate into new equations. “We cannot say, ‘Oh, actually our network is looking at this correlation that I can give you a formula for,’” said Pushmeet Kohli, who heads DeepMind’s science team. To some glass physicists, this caveat limits how useful the graph neural network might be. “Can this be explained in human terms?” said Wolynes. “That they did not do. That doesn’t mean they cannot do it in the future.”

Lead image: Why some liquids harden into glass is a major open question.