Nowadays, the words “artificial intelligence” seem to be on practically everyone’s lips, from Elon Musk to Henry Kissinger. At least a dozen countries have mounted major AI initiatives, and companies like Google and Facebook are locked in a massive battle for talent. Since 2012, virtually all the attention has been on one technique in particular, known as deep learning, a statistical technique that uses sets of simplified “neurons” to approximate the dynamics inherent in large, complex collections of data. Deep learning has powered advances in everything from speech recognition and computer chess to automatically tagging your photos. To some people, it probably seems like “superintelligence,” machines vastly more intelligent than people, are just around the corner.

The truth is, they are not. Getting a machine to recognize the syllables in your sentence is not the same as getting it to understand the meaning of your sentences. A system like Alexa can understand a simple request like “turn on the lights,” but it’s a long way from holding a meaningful conversation. Similarly, robots can vacuum your floor, but the AI that powers them remains weak, and they are a long way from being clever enough (and reliable enough) to watch your kids. There are lots of things that people can do that machines still can’t.

And there is lots of controversy about what we should do next. I should know: For the last three decades, since I started graduate school at the Massachusetts Institute of Technology, studying with the inspiring cognitive scientist Steven Pinker, I have been embroiled in an on-again, off-again debate about the nature of the human mind, and the best way to build AI. I have taken the sometimes unpopular position that techniques like deep learning (and predecessors that were around back then) aren’t enough to capture the richness of the human mind.

That on-again off-again debate flared up in an unexpectedly big way last week, leading to a huge Tweetstorm that brought in a host of luminaries, ranging from Yann LeCun, a founder of deep learning and current Chief AI Scientist at Facebook, to (briefly) Jeff Dean, who runs AI at Google, and Judea Pearl, a Turing Award winner at the University of California, Los Angeles.

When 140 characters no longer seemed like enough, I tried to take a step back, to explain why deep learning might not be enough, and where we perhaps ought to look for another idea that might combine with deep learning to take AI to the next level. The following is a slight adaptation of my personal perspective on what the debate is all about.

It all started when I read an interview with Yoshua Bengio, one of deep learning’s pioneers, in Technology Review. Where inventors often hype their discoveries, Bengio downplayed his, emphasizing instead some other important problems in AI that the field might need to address, saying this:

I think we need to consider the hard challenges of AI and not be satisfied with short-term, incremental advances. I’m not saying I want to forget deep learning. On the contrary, I want to build on it. But we need to be able to extend it to do things like reasoning, learning causality, and exploring the world in order to learn and acquire information.

I agreed with virtually every word and thought it was terrific that Bengio said so publicly. I was also struck by what seemed to be (a) an important change in view, or at least framing, relative to how advocates of deep learning framed things a few years ago (see below), (b) movement toward a direction for which I had long advocated, and (c) noteworthy coming from Bengio.

So I tweeted the interview, expecting a few retweets and nothing more. And then, almost immediately, a Twitterstorm broke out.

Here’s the tweet, perhaps forgotten in the storm that followed:

For the record and for comparison, here’s what I had said, eerily, almost exactly six years earlier, on November 25, 2012:

Deep learning is important work, with immediate practical applications.

…

Realistically, deep learning is only part of the larger challenge of building intelligent machines. Such techniques lack ways of representing causal relationships (such as between diseases and their symptoms), and are likely to face challenges in acquiring abstract ideas like “sibling” or “identical to.” They have no obvious ways of performing logical inferences, and they are also still a long way from integrating abstract knowledge, such as information about what objects are, what they are for, and how they are typically used. The most powerful A.I. systems … use techniques like deep learning as just one element in a very complicated ensemble of techniques, ranging from the statistical technique of Bayesian inference to deductive reasoning.

I stand by that. It is, as far as I know (and I could be wrong), the first place where anybody said that deep learning per se wouldn’t be a panacea. Given what folks like Pinker and I had discovered about an earlier generation of predecessor models, the hype that was starting to surround deep learning seemed unrealistic. Six years later Bengio was pretty much saying the same thing.

Some people liked the tweet, some people didn’t. Yann LeCun’s response was deeply negative. In a series of tweets he claimed (falsely) that I hate deep learning, and that because I was not personally an algorithm developer, I had no right to speak critically; for good measure, he said that if I had finally seen the light of deep learning, it was only in the last few days, in the space of our Twitter discussion (also false).

By reflecting on what was and wasn’t said (and what does and doesn’t actually check out) in that debate, and where deep learning continues to struggle, I believe that we can learn a lot.

To clear up some misconceptions: I don’t hate deep learning, not at all. We used it in my last company (I was the CEO and a founder), and I expect that I will use it again; I would be crazy to ignore it. I think—and I am saying this for the public record, feel free to quote me—deep learning is a terrific tool for some kinds of problems, particularly those involving perceptual classification, like recognizing syllables and objects, but also not a panacea. In my New York University debate with LeCun, I praised LeCun’s early work on convolution, which is an incredibly powerful tool. And I have been giving deep learning some (but not infinite) credit ever since I first wrote about it as such: in The New Yorker in 2012, in my January 2018 Deep Learning: A Critical Appraisal article, in which I explicitly said, “I don’t think we should abandon deep learning,” and on many occasions in between. LeCun has repeatedly and publicly misrepresented me as someone who has only just woken up to the utility of deep learning, and that’s simply not so.

LeCun’s assertion that I shouldn’t be allowed to comment is similarly absurd: Science needs its critics (LeCun himself has been rightly critical of deep reinforcement learning and neuromorphic computing), and although I am not personally an algorithm engineer, my criticism thus far has had lasting predictive value. To take one example, experiments that I did on predecessors to deep learning, first published in 1998, continue to hold validity to this day, as shown in recent work with more modern models by folks like Brendan Lake and Marco Baroni and Bengio himself. When a field tries to stifle its critics, rather than addressing the underlying criticism, replacing scientific inquiry with politics, something has gone seriously amiss.

But LeCun is right about one thing; there is something that I hate. What I hate is this: the notion that deep learning is without demonstrable limits and might, all by itself, get us to general intelligence, if we just give it a little more time and a little more data, as captured in a 2016 suggestion by Andrew Ng, who has led both Google Brain and Baidu’s AI group. Ng suggested that AI, by which he meant mainly deep learning, would either “now or in the near future” be able to do “any mental task” a person could do “with less than one second of thought.”

Generally, though certainly not always, criticism of deep learning is sloughed off, either ignored or dismissed, often in an ad hominem way. Whenever anybody points out that there might be a specific limit to deep learning, there is always someone like Jeremy Howard, the former chief scientist at Kaggle and founding researcher at fast.ai, to tell us that the idea that deep learning is overhyped is itself overhyped. Leaders in AI like LeCun acknowledge that there must be some limits, in some vague way, but rarely (and this is why Bengio’s new report was so noteworthy) do they pinpoint what those limits are, beyond acknowledging its data-hungry nature.

Others like to leverage the opacity of the black box of deep learning to suggest that there are no known limits. Last week, for example, one of the founders of machine learning, Tom Dietterich, said (in answer to a question about the scope of deep learning):

Dietterich is of course technically correct; nobody yet has delivered formal proofs about limits on deep learning, so there is no definite answer. And he is also right that deep learning continues to evolve. But the tweet (which expresses an argument I have heard many times, including from Dietterich more than once) neglects the fact that we also have a lot of strong suggestive evidence of at least some limit in scope, such as empirically observed limits on reasoning abilities, poor performance in natural language comprehension, vulnerability to adversarial examples, and so forth. (At the end of this article, I will even give an example in the domain of object recognition, putatively deep learning’s strong suit.)

To take another example, consider a widely-read 2015 article in Nature on deep learning by LeCun, Bengio, and Geoffrey Hinton, the trio most associated with the invention of deep learning. The article elaborates on the strength of deep learning in considerable detail. There again much of what was said is true, but there was almost nothing acknowledged about the limits of deep learning, so that it would be easy to walk away from the paper imagining that deep learning is a much broader tool than it really is. The paper’s conclusion furthers that impression by suggesting that deep learning’s historical antithesis (symbol-manipulation/classical AI) should be replaced: “new paradigms are needed to replace rule-based manipulation of symbolic expressions by operations on large vectors.” The traditional ending of many scientific papers (limits) is essentially missing, inviting the inference that the horizons for deep learning are limitless. Symbol-manipulation, the message seems to be, will soon be left in the dustbin of history.

The strategy of emphasizing strength without acknowledging limits is even more pronounced in a 2017 Nature article on Go by the Google-owned AI firm DeepMind, which appears to imply similarly limitless horizons for deep-reinforcement learning. The article suggests that Go is one of the hardest problems in AI—“Our results comprehensively demonstrate that a pure [deep] reinforcement learning approach is fully feasible, even in the most challenging of domains” —without acknowledging that other hard problems differ qualitatively in character and might not be accessible to similar approaches. For example, information in most tasks is less complete than it is with Go. I discuss this further elsewhere.

It worries me, greatly, when a field dwells largely or exclusively on the strengths of its latest discoveries, without publicly acknowledging possible weaknesses that have actually been well documented.

Here’s my view: Deep learning really is great, but it’s the wrong tool for the job of cognition writ large. It’s a tool for perceptual classification, when general intelligence involves so much more. What I was saying in 2012 (and have never deviated from) is that deep learning ought to be part of the workflow for AI, not the whole thing: “just one element in a very complicated ensemble of things,” as I put it then, and “not a universal solvent, [just] one tool among many” as I put it this January. Deep learning is, like anything else we might consider, a tool with particular strengths, and particular weaknesses. Nobody should be surprised by this.

When I rail about deep learning, it’s not because I think it should be “replaced” (cf. Hinton, LeCun, and Bengio’s strong language above, where the name of the game is to conquer previous approaches), but because I think that (a) it has been oversold (for example that Andrew Ng quote, or the whole framing of DeepMind’s 2017 Nature paper), often with vastly greater attention to its strengths than its potential limitations, and (b) exuberance for deep learning is often (though not universally) accompanied by a hostility to symbol-manipulation that I believe is a foundational mistake in the search for an ultimate solution to AI.

I think it is far more likely that the two, deep learning and symbol-manipulation, will co-exist, with deep learning handling many aspects of perceptual classification, but symbol-manipulation playing a vital role in reasoning about abstract knowledge. Advances in narrow AI with deep learning are often taken to mean that we don’t need symbol-manipulation anymore, and I think that is a huge mistake.

So what is symbol-manipulation, and why do I steadfastly cling to it? The idea goes back to the earliest days of computer science (and even earlier, to the development of formal logic): Symbols can stand for ideas, and if you manipulate those symbols, you can make correct inferences about the ideas they stand for. If you know that P implies Q, you can infer from not-Q that not-P. If I tell you that plonk implies queegle but queegle is not true, then you can infer that plonk is not true.
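To make the plonk/queegle inference concrete, here is a minimal sketch in Python. The function name `infer_false` and the representation of rules as `(antecedent, consequent)` pairs are assumptions made for illustration, not anything taken from the logic literature or from a particular library. The point is only that the procedure never looks at what the symbols mean; it works for any symbols whatsoever.

```python
def infer_false(implications, known_false):
    """Apply modus tollens until nothing new follows.

    implications: iterable of (p, q) pairs, each read as "p implies q".
    known_false:  symbols already known to be false.
    Returns the set of all symbols that can be inferred to be false.
    """
    false = set(known_false)
    changed = True
    while changed:
        changed = False
        for p, q in implications:
            # From "p implies q" and "not q", conclude "not p".
            if q in false and p not in false:
                false.add(p)
                changed = True
    return false


rules = [("plonk", "queegle")]
print(sorted(infer_false(rules, {"queegle"})))  # ['plonk', 'queegle']
```

Nothing here had to be trained on examples of plonks or queegles; the rule is stated over placeholders and applies to whatever is substituted for them.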

In my 2001 book The Algebraic Mind, I argued, in the tradition of cognitive psychologists Allen Newell and Herb Simon, and my mentor Steven Pinker, that the human mind incorporates (among other tools) a set of mechanisms for representing structured sets of symbols, in something like a hierarchical tree. Even more critically I argued that a vital component of cognition is the ability to learn abstract relationships that are expressed over variables, analogous to what we do in algebra, when we learn an equation like x = y + 2, and then solve for x given some value of y. The process of attaching y to a specific value (say 5) is called binding; the process that combines that value with the other elements is what I would call an operation. The central claim of the book was that symbolic processes like that (representing abstractions, instantiating variables with instances, and applying operations to those variables) were indispensable to the human mind. I showed in detail that advocates of neural networks often ignored this, at their peril.
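The three ingredients named above (an abstraction stated over a variable, binding, and an operation) can be sketched in a few lines of Python. The class names `Var` and `Add` and the function `evaluate` are hypothetical, chosen for this illustration only; this is a toy expression tree, not a model of how the mind or any particular system does it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Var:
    """A variable: a placeholder that can be bound to any value."""
    name: str


@dataclass(frozen=True)
class Add:
    """An operation node in a small hierarchical tree."""
    left: object
    right: object


def evaluate(expr, bindings):
    """Evaluate a tree, looking up each variable in `bindings`."""
    if isinstance(expr, Var):
        return bindings[expr.name]          # binding: y -> 5
    if isinstance(expr, Add):
        return evaluate(expr.left, bindings) + evaluate(expr.right, bindings)
    return expr                             # a plain constant


# The abstract relationship x = y + 2, stated once over the variable y.
x_rule = Add(Var("y"), 2)

print(evaluate(x_rule, {"y": 5}))        # 7
print(evaluate(x_rule, {"y": 10**6}))    # 1000002
```

The rule is written once and then applies to any value of y, including values far from anything seen before.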

The form of the argument was to show that neural-network models fell into two classes: “Implementational connectionism” had mechanisms that formally mapped onto the symbolic machinery of operations over variables, and “eliminative connectionism” lacked such mechanisms. The models that succeeded in capturing various facts (primarily about human language) were ones that mapped on; those that didn’t failed. I also pointed out that rules allowed for what I called free generalization of universals, whereas multilayer perceptrons required large samples in order to approximate universal relationships, an issue that crops up in Bengio’s recent work on language.

Nobody yet knows how the brain implements things like variables or binding of variables to the values of their instances, but strong evidence (reviewed in the book) suggests that brains can. Pretty much everyone agrees that at least some humans can do this when they do mathematics and formal logic, and most linguists would agree that we do it in understanding language. The real question is not whether human brains can do symbol-manipulation at all, it is how broad is the scope of the processes that use it.

The secondary goal of the book was to show that it was possible to build the primitives of symbol manipulation in principle using neurons as elements. I examined some old ideas, like dynamic binding via temporal oscillation, and personally championed a slots-and-fillers approach that involved having banks of node-like units with codes, something like the ASCII code. Memory networks and differentiable programming have been doing something a little like that, with more modern (embedding) codes, but following a similar principle, embracing symbol manipulation with microprocessor-like operations. I am cautiously optimistic that this approach might work better for things like reasoning and, once we have a solid enough machine-interpretable database of probabilistic but abstract common sense, language.

Whatever one thinks about the brain, virtually all of the world’s software is built on symbols. Every line of computer code, for example, is really a description of some set of operations over variables: If X is greater than Y, do P, otherwise do Q; concatenate A and B together to form something new; and so forth. Neural networks can (depending on their structure, and whether anything maps precisely onto operations over variables) offer a genuinely different paradigm, and are obviously useful for tasks like speech-recognition, which nobody would do with a set of rules anymore, with good reason. But nobody would build a browser by supervised learning on sets of inputs (logs of user keystrokes) and output (images on screens, or packets downloading). My understanding from LeCun is that a lot of Facebook’s AI is done by neural networks, but it’s certainly not the case that the entire framework of Facebook runs without recourse to symbol-manipulation.

And although symbols may not have a home in speech recognition anymore, and clearly can’t do the full stack of cognition and perception on their own, there are lots of problems where you might expect them to be helpful, albeit problems that nobody, either in the symbol-manipulation-based world of classical AI or in the deep-learning world, has the answers for yet. These problems include abstract reasoning and language, which are, after all, the domains for which the tools of formal logic and symbolic reasoning were invented. To anyone who has seriously engaged in trying to understand, say, commonsense reasoning, this seems obvious.

Yet, partly for historical reasons that date back to the earliest days of AI, the founders of deep learning have often been deeply hostile to including such machinery in their models. Hinton, for example, gave a talk at Stanford in 2015 called Aetherial symbols, in which he tried to argue that the idea of reasoning with formal symbols was “as incorrect as the belief that a lightwave can only travel through space by causing disturbances in the luminiferous aether.”

Hinton didn’t really give an argument for that, so far as I can tell (I was sitting in the room). Instead, he seemed (to me) to be making a suggestion for how to map hierarchical sets of symbols onto vectors. That wouldn’t render symbols “aether”—it would make them very real causal elements with a very specific implementation, a refutation of what Hinton seemed to advocate. (Hinton refused to clarify when I asked.) From a scientific perspective (as opposed to a political perspective), the question is not what we call our ultimate AI system. It’s: How does it work? Does it include primitives that serve as implementations of the apparatus of symbol-manipulation (as modern computers do), or work on entirely different principles? My best guess is that the answer will be both: Some but not all parts of any system for general intelligence will map perfectly onto the primitives of symbol-manipulation and others will not.

That’s actually a pretty moderate view, giving credit to both sides. Where we are now, though, is that the large preponderance of the machine-learning field doesn’t want to explicitly include symbolic expressions (like “dogs have noses that they use to sniff things”) or operations over variables (like algorithms that would test whether observations P, Q, and R and their entailments are logically consistent) in their models.

Far more researchers are comfortable with vectors, and make advances every day in using those vectors; for most researchers, symbolic expressions and operations aren’t part of the toolkit. But the advances they make with such tools are, at some level, predictable: Training times to learn sets of labels for perceptual inputs keep getting better, and accuracy on classification tasks improves. No less predictable are the places where there are fewer advances: in domains like reasoning and language comprehension (precisely the domains that Bengio and I are trying to call attention to), deep learning on its own has not gotten the job done, even after billions of dollars of investment.

Those domains seem, intuitively, to revolve around putting together complex thoughts, and the tools of classical AI would seem perfectly suited to such things. Why continue to exclude them? In principle, symbols also offer a way of incorporating all the world’s textual knowledge, from Wikipedia to textbooks; deep learning has no obvious way of incorporating basic facts like “dogs have noses,” nor does it have a way to accumulate that knowledge into more complex inferences. If our dream is to build machines that learn by reading Wikipedia, we ought to consider starting with a substrate that is compatible with the knowledge contained therein.

The most important question that I personally raised in last month’s Twitter discussion about deep learning is ultimately this: Can it solve general intelligence? Or only problems involving perceptual classification? Or something in between? What else is needed?

Symbols won’t cut it on their own, and deep learning won’t either. The time to bring them together, in the service of novel hybrids, is long overdue.

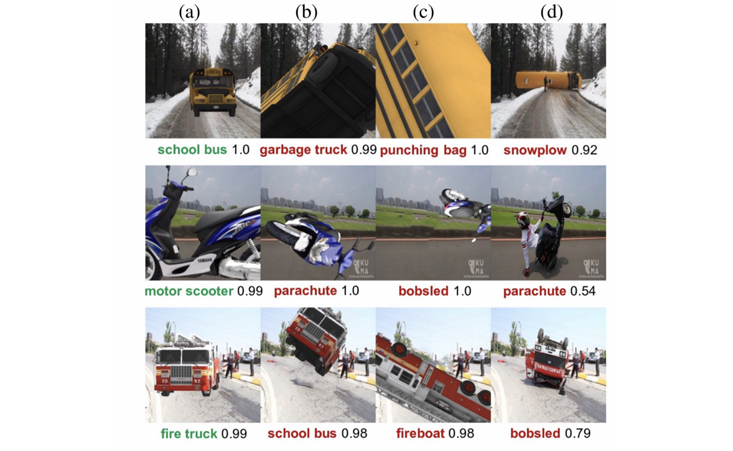

Just after I finished the first draft of this essay, Max Little brought my attention to a thought-provoking new paper by Michael Alcorn, Anh Nguyen, and others that highlights the risks inherent in relying too heavily on deep learning and big data. In particular, they showed that standard deep learning nets often fall apart when confronted with common stimuli rotated in three-dimensional space into unusual positions, like the top-right corner of this figure, in which a school bus is mistaken for a snowplow:

In a healthy field, everything would stop when a systematic class of errors that is surprising and illuminating was discovered. Souls would be searched; hands would be wrung. Mistaking an overturned school bus for a snowplow is not just a mistake, it’s a revealing mistake. It shows not only that deep learning systems can get confused, but that they are challenged in making a fundamental distinction known to all philosophers: the distinction between features that are merely contingent associations (snow is often present when there are snowplows, but it is not necessary) and features that are inherent properties of the category itself (snowplows ought, other things being equal, to have plows, unless, for example, they have been dismantled). We’d already seen similar examples with contrived stimuli, like Anish Athalye’s carefully designed, 3-D printed, foam-covered baseball that was mistaken for an espresso:

Alcorn’s results—some from real photos from the natural world—should have pushed worry about this sort of anomaly to the top of the stack.

The initial response, though, wasn’t hand-wringing—it was more dismissiveness, such as a tweet from LeCun that dubiously likened the noncanonical pose stimuli to Picasso paintings. The reader can judge for him or herself, but the right-hand column, it should be noted, shows all natural images, neither painted nor rendered. They are not products of the imagination, they are reflections of a genuine limitation that must be faced.

In my judgment, deep learning has reached a moment of reckoning. When some of its most prominent leaders stand in denial, there is a problem.

Which brings me back to the paper and Alcorn’s conclusions, which actually seem exactly right, and which the whole field should take note of: “state-of-the-art DNNs [Deep Neural Networks] perform image classification well but are still far from true object recognition.” As they put it, “DNNs’ understanding of objects like ‘school bus’ and ‘fire truck’ is quite naive”—very much parallel to what I said about neural network models of language 20 years earlier, when I suggested that the concepts acquired by Simple Recurrent Networks were too superficial.

The technical issue driving Alcorn et al.’s new results?

As Alcorn et al. put it:

deep neural networks can fail to generalize to out-of-distribution inputs, including natural, non-adversarial ones, which are common in real-world settings.

Funny they should mention that. The chief motivation I gave for symbol-manipulation, back in 1998, was that back-propagation (then used in models with fewer layers, hence precursors to deep learning) had trouble generalizing outside a space of training examples.

That problem hasn’t gone away.

And object recognition was supposed to be deep learning’s forte. If deep learning can’t recognize objects in noncanonical poses, why should we expect it to do complex everyday reasoning, a task for which it has never shown any facility whatsoever?

In fact, it’s worth reconsidering my 1998 conclusions at some length. At that time I concluded in part that (excerpting from the concluding summary argument):

● Humans can generalize a wide range of universals to arbitrary novel instances. They appear to do so in many areas of language (including syntax, morphology, and discourse) and thought (including transitive inference, entailments, and class-inclusion relationships).

● Advocates of symbol manipulation assume that the mind instantiates symbol-manipulating mechanisms including symbols, categories, and variables, and mechanisms for assigning instances to categories and representing and extending relationships between variables. This account provides a straightforward framework for understanding how universals are extended to arbitrary novel instances.

● Current eliminative connectionist models map input vectors to output vectors using the back-propagation algorithm (or one of its variants).

● To generalize universals to arbitrary novel instances, these models would need to generalize outside the training space.

● These models cannot generalize outside the training space.

● Therefore, current eliminative connectionist models cannot account for those cognitive phenomena that involve universals that can be freely extended to arbitrary cases.
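One toy way to see what "outside the training space" could mean is sketched below. The setup is an assumption made for illustration, not a reproduction of the 1998 experiments: a single-layer sigmoid network (no hidden layer, zero initial weights so the run is deterministic) is trained by gradient descent to copy 4-bit binary numbers, but it only ever sees even numbers, so the rightmost bit is always 0 during training. The names `train_identity` and `predict` are hypothetical.

```python
import math

N_BITS = 4


def to_bits(n):
    """Most-significant-bit-first binary encoding of n."""
    return [(n >> i) & 1 for i in reversed(range(N_BITS))]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_identity(examples, epochs=2000, lr=0.5):
    """Train each output unit to copy its corresponding input bit."""
    w = [[0.0] * N_BITS for _ in range(N_BITS)]  # w[j][i]: input i -> output j
    b = [0.0] * N_BITS
    for _ in range(epochs):
        for x in examples:
            for j in range(N_BITS):
                z = sum(w[j][i] * x[i] for i in range(N_BITS)) + b[j]
                err = sigmoid(z) - x[j]  # gradient of cross-entropy loss
                for i in range(N_BITS):
                    w[j][i] -= lr * err * x[i]
                b[j] -= lr * err
    return w, b


def predict(model, x):
    w, b = model
    return [
        1 if sigmoid(sum(w[j][i] * x[i] for i in range(N_BITS)) + b[j]) > 0.5 else 0
        for j in range(N_BITS)
    ]


evens = [to_bits(n) for n in range(0, 2 ** N_BITS, 2)]
model = train_identity(evens)

print(predict(model, to_bits(6)))  # a trained (even) input
print(predict(model, to_bits(7)))  # an untrained (odd) input
```

In this sketch the weights leaving the rightmost input bit never receive a gradient, because that bit is 0 in every training example, and the rightmost output unit is only ever pushed toward 0. The network therefore copies even numbers but has no basis for answering 1 in the last position on an odd number. A person shown the same examples would typically induce "the output is the same as the input" and extend it freely.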

Richard Evans and Edward Grefenstette’s recent paper at DeepMind, building on Joel Grus’s blog post on the game Fizz-Buzz, follows remarkably similar lines, concluding that a canonical multilayer network was unable to solve the simple game on its own “because it did not capture the general, universally quantified rules needed to understand this task”—exactly what I said in 1998.

Their solution? A hybrid model that vastly outperformed what a purely deep net would have done, incorporating both back-propagation and (continuous versions of) the primitives of symbol manipulation, including both explicit variables and operations over variables. That’s really telling. And it’s where we should all be looking: gradient descent plus symbols, not gradient descent alone. If we want to stop confusing snow plows with school buses, we may ultimately need to look in the same direction, because the underlying problem is the same: In virtually every facet of the mind, even vision, we occasionally face stimuli that are outside the domain of training; deep learning gets wobbly when that happens, and we need other tools to help.
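For readers who have not met Fizz-Buzz, the "general, universally quantified rules" in question can be written down directly. The sketch below uses the conventional statement of the game (multiples of 3 become "Fizz", multiples of 5 become "Buzz", multiples of both become "FizzBuzz"), which is an assumption here since the rules are not spelled out above; it is not Evans and Grefenstette's model, only the symbolic rule that a learner would need to capture.

```python
def fizz_buzz(n: int) -> str:
    """The game's rule, stated over the variable n for every integer n."""
    if n % 15 == 0:
        return "FizzBuzz"
    if n % 3 == 0:
        return "Fizz"
    if n % 5 == 0:
        return "Buzz"
    return str(n)


print([fizz_buzz(n) for n in range(1, 16)])
print(fizz_buzz(3_000_000))  # far outside any plausible training range
```

Because the rule is an operation over a variable, it applies to 3,000,000 exactly as it applies to 15; there is no training range to fall outside of.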

All I am saying is to give Ps (and Qs) a chance.

Gary Marcus was CEO & Founder of the machine learning company Geometric Intelligence (acquired by Uber), and is a professor of psychology and neural science at NYU, and a freelancer for the New Yorker and The New York Times.