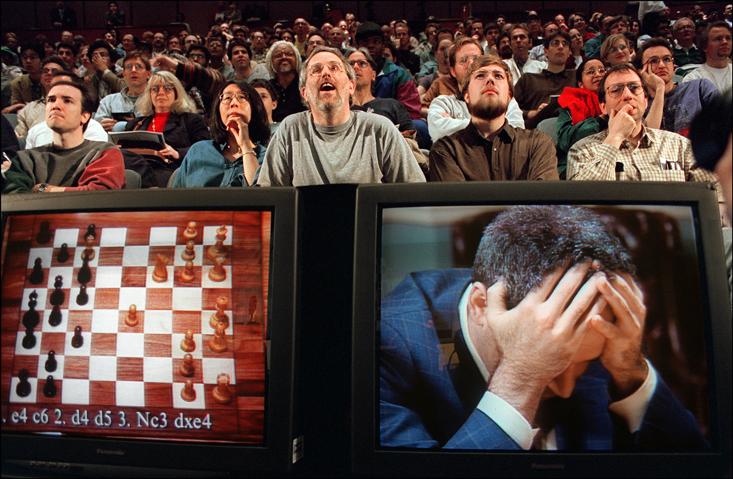

When IBM’s Deep Blue beat chess Grandmaster Garry Kasparov in 1997 in a six-game chess match, Kasparov came to believe he was facing a machine that could experience human intuition. “The machine refused to move to a position that had a decisive short-term advantage,” Kasparov wrote after the match. It was “showing a very human sense of danger.”1 To Kasparov, Deep Blue seemed to be experiencing the game rather than just crunching numbers.

Just a few years earlier, Kasparov had declared, “No computer will ever beat me.”2 When one finally did, his reaction was not just to conclude that the computer was smarter than him, but that it had also become more human. For Kasparov, there was a uniquely human component to chess playing that could not be simulated by a computer.

Kasparov was not sensing real human intuition in Deep Blue; there was no place in its code, constantly observed and managed by a team of IBM engineers, for anything that resembled human thought processes. But if not that, then what? The answer may start with another set of games with an unlikely set of names: Go, Hex, Havannah, and Twixt. All of these have a similar design: Two players take turns placing pieces on any remaining free space on a fairly large board (19-by-19 in Go’s case, up to 24-by-24 for Twixt). The goal is to reach some sort of winning configuration, by surrounding the most territory in the case of Go, by connecting two opposite sides of the board in Hex, and so on.

The usual way a computer plays chess is to consider various move possibilities individually, evaluate the resulting boards, and rank moves as being more or less advantageous. Yet for games like Go and Twixt, this approach breaks down. Whereas at any point in chess there are typically a few dozen possible moves, these games offer hundreds of possible moves (thousands in the case of Arimaa, which was designed to be a chess-like game that computers could not beat). Evaluating all or most possible board positions more than a couple of moves ahead quickly becomes impossible for a computer in a reasonable amount of time: a combinatorial explosion. In addition, even the very concept of evaluation is more difficult than in chess, as there is less agreement on how to judge the value of a particular board configuration.
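A back-of-the-envelope sketch can make that explosion concrete. The branching factors below are rough, commonly cited averages used purely as illustrative assumptions (they are not figures from this article), and the function names are hypothetical. The sketch also assumes, unrealistically, that every position offers the same number of moves.

```python
# Rough, assumed average branching factors (illustrative only, not exact).
ASSUMED_BRANCHING = {"chess": 35, "Go": 250, "Arimaa": 17_000}


def positions_at_depth(branching_factor: int, plies: int) -> int:
    """Count move sequences of length `plies`, assuming a constant branching factor."""
    return branching_factor ** plies


def growth_table(max_plies: int = 4) -> dict:
    """Map each game to its sequence counts for 1..max_plies plies."""
    return {
        game: [positions_at_depth(b, d) for d in range(1, max_plies + 1)]
        for game, b in ASSUMED_BRANCHING.items()
    }


if __name__ == "__main__":
    for game, counts in growth_table().items():
        print(f"{game:>7}: " + ", ".join(f"{c:.2e}" for c in counts))
```

Under these assumptions, looking just four plies ahead already means billions of sequences in Go, against roughly a million and a half in chess.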

For humans, though, these games remain eminently playable. Why? Computer scientist and advanced Go player Martin Müller gives us a hint:

Patterns recognized by humans are much more than just chunks of stones and empty spaces: Players can perceive complex relations between groups of stones, and easily grasp fuzzy concepts such as ‘light’ and ‘heavy’ stones. This visual nature of the game fits human perception but is hard to model in a program.3

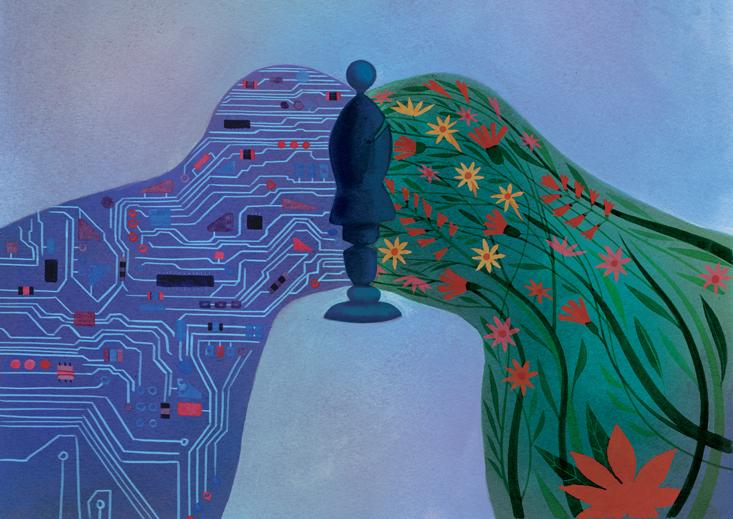

In other words, Go strategy lies not in a strictly formal representation of the game but in a variety of different kinds of visual pattern recognition and similarity analysis: sorting the pieces into different shapes and clumps, comparing them to identical or visually similar patterns immediately available to one’s mind, and quickly trimming the space of investigation to a manageable level. Might Kasparov have actually detected a hint of analogical thinking in Deep Blue’s play and mistaken it for human intervention?

Analogy has a long history in thinking about thinking.

Cognitive linguists such as Mark Turner and Gilles Fauconnier, building on the work of George Lakoff (Metaphors We Live By), have emphasized the analogical process of conceptual “blending” as being core to every level of human cognition, from discerning images to creative writing to applying mathematical concepts to the world.4,5 Historian and philosopher Arthur I. Miller (Insights of Genius)6 and historian Andrew Robinson both stress relentlessly creative, conceptual analogizing as being at the heart of scientific innovation and revolution.

But analogy presents a major challenge to computers, and even to the formal logic that undergirds computer science. The mathematician Stanislaw Ulam believed analogy was key to extending formal logic to encompass the whole world: “What is it that you see when you see? You see an object as a key, you see a man in a car as a passenger, you see some sheets of paper as a book. It is the word ‘as’ that must be mathematically formalized, on a par with the connectives ‘and,’ ‘or,’ ‘implies,’ and ‘not’ …”7

Computers are notoriously bad at inferring analogical relationships, like grasping that a picture of a horse portrays an instance of the class of object called “horse.” When humans look at a horse, we do not take it in bit by bit and analyze it; we instantaneously see it as a horse (or a car, or a plane, or whatever it may be) analogically—even in the presence of high degrees of variability.

Computer scientist and complexity theorist Melanie Mitchell, who studied under Douglas Hofstadter, has studied how computers can classify objects with the help of shape prototypes. These experiments use neural networks and a dictionary of “learned shapes” to match new shapes against. In a 2013 study, Mitchell made a startling discovery: If the algorithms’ dictionary was removed and replaced with a series of random shape projections to match against, the algorithms performed equally well.8 While intuition may suggest that the brain builds up representational archetypes which correspond to objects in the world, Mitchell’s research suggests that pure randomness may have an important role in the process of conceptualization.

The extent to which these results speak to human analogizing is unclear. But they open the possibility that our process of analogy making may be even less rational and more stochastic than we suspect, and that the deep archetypes we match against in our brain might bear far less relationship to reality than we might think. Underneath our apparent rationality may lie neurobiological processes that look considerably closer to random trial and error. In this view, human creativity and randomness go hand in hand.

The power of randomness is amply visible in new approaches that have finally enabled computers to play games like Go, Hex, Havannah, and Twixt at a professional level.9 At the heart of these approaches is the Monte Carlo method, which, true to its name, relies on randomized statistical sampling rather than on evaluating possible future board configurations for each possible move. For example, for a given move, a Monte Carlo tree search will play out a number of random or heuristically chosen future games (“playouts”) from that move on, with little strategy behind either player’s moves. Most possibilities are not played out, thus constraining the massive branching factor. If a move tends to lead to more winning games regardless of the strategy then employed, it is considered a stronger move. The idea is that such sampling will often be sufficient to estimate the general strength or weakness of a move.
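The playout idea can be sketched in a few lines. What follows is a deliberately simplified "flat" Monte Carlo evaluation, not a full Monte Carlo tree search and not the method of any particular Go program: it scores each legal move by the fraction of purely random playouts that end in a win. Tic-tac-toe stands in for a large board game only to keep the example small, and all function names and the playout count are assumptions made for illustration.

```python
import random

# Index triples that form a winning line on a 3x3 board stored as a flat list.
WIN_LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
             (0, 3, 6), (1, 4, 7), (2, 5, 8),
             (0, 4, 8), (2, 4, 6)]


def winner(board):
    """Return 'X' or 'O' if that player has completed a line, else None."""
    for a, b, c in WIN_LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


def legal_moves(board):
    """Indices of the empty cells."""
    return [i for i, cell in enumerate(board) if cell == " "]


def random_playout(board, to_move, rng):
    """Play uniformly random moves to the end; return the winner, or None for a draw."""
    board = list(board)
    while winner(board) is None and legal_moves(board):
        board[rng.choice(legal_moves(board))] = to_move
        to_move = "O" if to_move == "X" else "X"
    return winner(board)


def monte_carlo_move(board, player, playouts=200, seed=0):
    """Pick the move whose random playouts are won most often by `player`."""
    rng = random.Random(seed)
    opponent = "O" if player == "X" else "X"
    best_move, best_rate = None, -1.0
    for move in legal_moves(board):
        trial = list(board)
        trial[move] = player
        wins = sum(random_playout(trial, opponent, rng) == player
                   for _ in range(playouts))
        rate = wins / playouts
        if rate > best_rate:  # ties keep the earliest move found
            best_move, best_rate = move, rate
    return best_move, best_rate
```

Calling `monte_carlo_move(list("XX OO    "), "X")` scores every empty cell by sampling alone. No position is ever evaluated strategically, yet the immediately winning cell stands out because every playout from it is already won.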

If anything, Monte Carlo methods seem dumber still than computer chess approaches, because now instead of evaluating board positions en masse, computers are just playing out random (or at least partly random) games and sampling the possibilities. It is a meta-strategy without a strategy! But Monte Carlo works better for high-branching games than more “strategic” approaches that attempt to make accurate evaluations of the strength of particular board positions.

What Kasparov might have sensed when he played Deep Blue is something that looked like that creative randomness, which he took to be a “human” intuition of danger. Deep Blue programmer Feng-Hsiung Hsu writes in his book Behind Deep Blue that during the match, outside analysts were divided over a mysterious move made by the program, thinking it either weak or obliquely strategic. Eventually, the programmers discovered that the move was simply the result of a bug that had caused the computer not to choose what it had actually calculated to be the best move, something that could have appeared as random play. The bug wasn’t fixed until after game four, long after Kasparov’s spirit had been broken.

David Auerbach is a writer and software engineer who lives in New York. He writes the Bitwise column for Slate.

References

1. Kasparov, G. IBM Owes Mankind A Rematch. Time Magazine 149, 66-67 (1997).

2. Hsu, F.H. Behind Deep Blue: Building the Computer that Defeated the World Chess Champion Princeton University Press (2002).

3. Müller, M. Computer Go. Artificial Intelligence 134, 145–179 (2002).

4. Turner, M. The Origin of Ideas: Blending, Creativity, and the Human Spark Oxford University Press (2014).

5. Fauconnier, G. & Turner, M. The Way We Think: Conceptual Blending and the Mind’s Hidden Complexities Basic Books, New York, NY (2002).

6. Miller, A.I. Insights of Genius: Imagery and Creativity in Science and Art MIT Press (1996).

7. Rota, G.-C., & Palombi, F. Indiscrete Thoughts Birkhäuser Boston (1997).

8. Thomure, M.D., Mitchell, M., & Kenyon, G.T. On the role of shape prototypes in hierarchical models of vision. The 2013 International Joint Conference on Neural Networks 1-6 (2013).

9. Gelly, S., et al. The grand challenge of computer Go: Monte Carlo tree search and extensions. Communications of the ACM 55, 106-113 (2012).