I remember well the first time my certainty of a bright future evaporated, when my confidence in the panacea of technological progress was shaken. It was in 2007, on a warm September evening in San Francisco, where I was relaxing in a cheap motel room after two days covering The Singularity Summit, an annual gathering of scientists, technologists, and entrepreneurs discussing the future obsolescence of human beings.

In math, a “singularity” is a point at which a function takes on an infinite value, usually to the detriment of an equation’s sense and sensibility. In physics, the term usually refers to a region of infinite density and infinitely curved space, something thought to exist inside black holes and at the very beginning of the Big Bang. In the rather different parlance of Silicon Valley, “The Singularity” is an inexorably approaching event in which humans ride an accelerating wave of technological progress to somehow create superior artificial intellects, which with predictable unpredictability then explosively make further disruptive innovations so powerful and profound that our civilization, our species, and perhaps even our entire planet are rapidly transformed into some scarcely imaginable state. Not long after The Singularity’s arrival, argue its proponents, humanity’s dominion over the Earth will come to an end.

I had encountered a wide spectrum of thought in and around the conference. Some attendees overflowed with exuberance, awaiting the arrival of machines of loving grace to watch over them in a paradisiacal post-scarcity utopia, while others, more mindful of history, dreaded the possible demons new technologies could unleash. Even the self-professed skeptics in attendance sensed the world was poised on the cusp of some massive technology-driven transition. A typical conversation at the conference would refer at least once to some exotic concept like whole-brain emulation, cognitive enhancement, artificial life, virtual reality, or molecular nanotechnology, and many carried a cynical sheen of eschatological hucksterism: Climb aboard, don’t delay, invest right now, and you, too, may be among the chosen who rise to power from the ashes of the former world!

Over vegetarian hors d’oeuvres and red wine at a Bay Area villa, I had chatted with the billionaire venture capitalist Peter Thiel, who planned to adopt an “aggressive” strategy for investing in a “positive” Singularity, which would be “the biggest boom ever,” if it doesn’t first “blow up the whole world.” I had talked with the autodidactic artificial-intelligence researcher Eliezer Yudkowsky about his fears that artificial minds might, once created, rapidly destroy the planet. At one point, the inventor-turned-proselytizer Ray Kurzweil teleconferenced in to discuss, among other things, his plans for becoming transhuman, transcending his own biology to achieve some sort of eternal life. Kurzweil believes this is possible, even probable, provided he can just live to see The Singularity’s dawn, which he has pegged at sometime in the middle of the 21st century. To this end, he reportedly consumes some 150 vitamin supplements a day.

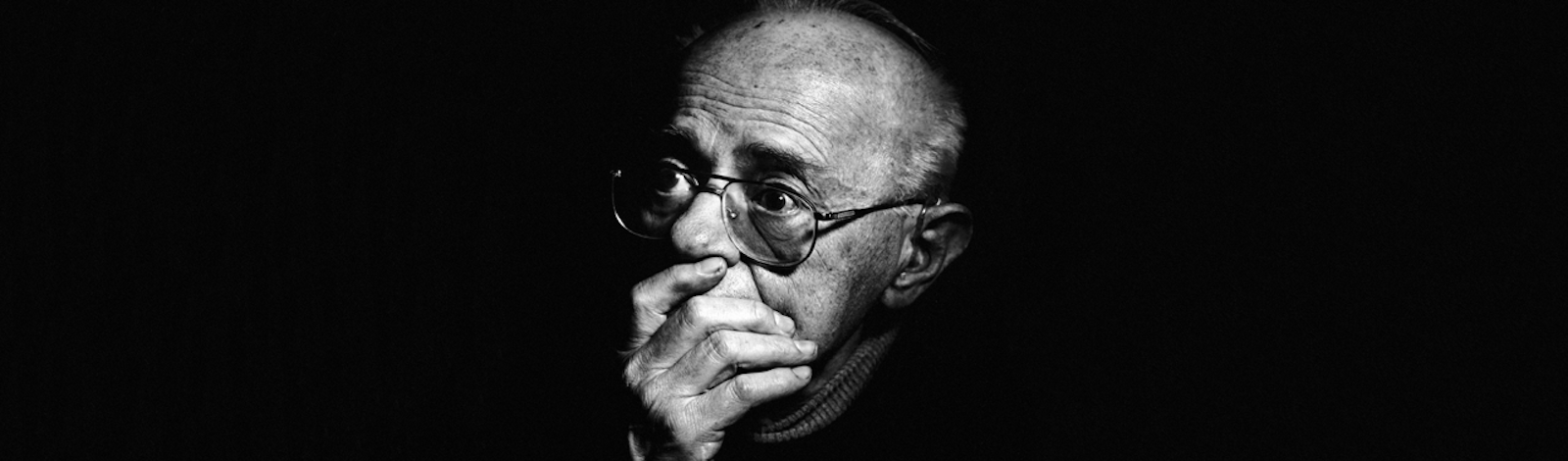

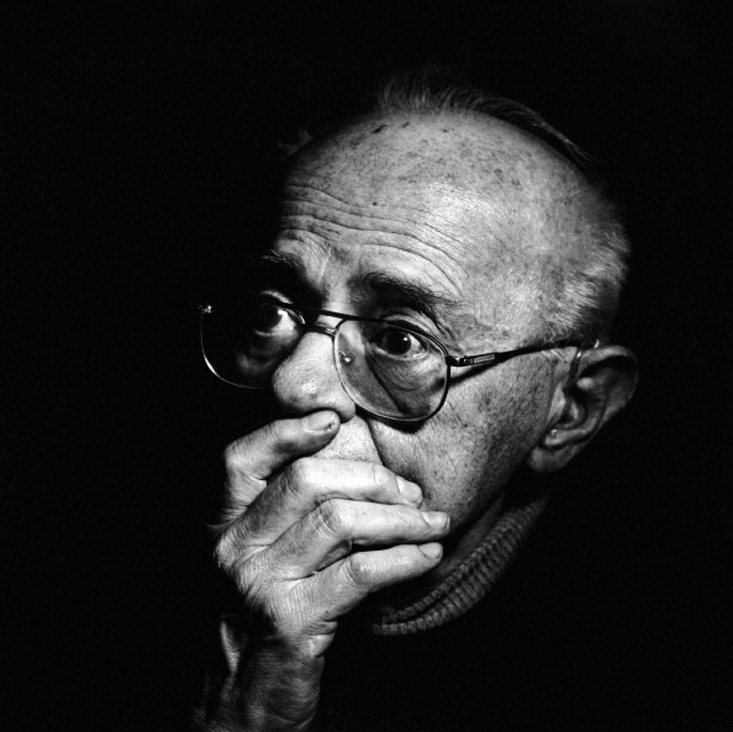

Returning to my motel room exhausted each night, I unwound by reading excerpts from an old book, Summa Technologiae. The late Polish author Stanislaw Lem had written it in the early 1960s, setting himself the lofty goal of forging a secular counterpart to the 13th-century Summa Theologica, Thomas Aquinas’s landmark compendium exploring the foundations and limits of Christian theology. Where Aquinas argued for the certainty of a Creator, an immortal soul, and eternal salvation as based on scripture, Lem concerned himself with the uncertain future of intelligence and technology throughout the universe, guided by the tenets of modern science.

To paraphrase Lem himself, the book was an investigation of the thorns of technological roses that had yet to bloom. And yet, despite Lem’s later observation that “nothing ages as fast as the future,” to my surprise most of the book’s nearly half-century-old prognostications concerned the very same topics I had encountered during my days at the conference, and felt just as fresh. Most surprising of all, in subsequent conversations I confirmed my suspicions that among the masters of our technological universe gathered there in San Francisco to forge a transhuman future, very few were familiar with the book or, for that matter, with Lem. I felt like a passenger in a car who discovers a blind spot in the central focus of the driver’s view.

Such blindness was, perhaps, understandable. In 2007, only fragments of Summa Technologiae had appeared in English, via partial translations undertaken independently by the literary scholar Peter Swirski and a German software developer named Frank Prengel. These fragments were what I read in the motel. The first complete English translation, by the media researcher Joanna Zylinska, appeared only in 2013. By Lem’s own admission, from the start the book was a commercial and a critical failure that “sank without a trace” upon its first appearance in print. Lem’s terminology and dense, baroque style are partially to blame: many of his finest points were made in digressive parables, allegories, and footnotes, and he coined his own neologisms for what were, at the time, distinctly over-the-horizon fields. In Lem’s lexicon, virtual reality was “phantomatics,” molecular nanotechnology was “molectronics,” cognitive enhancement was “cerebromatics,” and biomimicry and the creation of artificial life were “imitology.” He had even coined a term for search-engine optimization, à la Google: “ariadnology.” The path to advanced artificial intelligence he called the “technoevolution” of “intellectronics.”

Even now, if Lem is known at all to the vast majority of the English-speaking world, it is chiefly for his authorship of Solaris, a popular 1961 science-fiction novel that spawned two critically acclaimed film adaptations, one by Andrei Tarkovsky and another by Steven Soderbergh. Yet to say the prolific author wrote only science fiction would be foolishly dismissive. If so much of his output can be classified as such, it is because so many of his intellectual wanderings took him to the outer frontiers of knowledge.

Lem was a polymath, a voracious reader who devoured not only the classic literary canon, but also a plethora of research journals, scientific periodicals, and popular books by leading researchers. His genius was in standing on the shoulders of scientific giants to distill the essence of their work, flavored with bittersweet insights and thought experiments that linked their mathematical abstractions to deep existential mysteries and the nature of the human condition. For this reason alone, reading Lem is an education, wherein one may learn the deep ramifications of breakthroughs such as Claude Shannon’s development of information theory, Alan Turing’s work on computation, and John von Neumann’s exploration of game theory. Much of his best work entailed constructing analyses based on logic with which anyone would agree, then showing how these eminently reasonable premises lead to astonishing conclusions. And the fundamental urtext for all of it, the wellspring from which the remainder of his output flowed, is Summa Technologiae.

The core of the book is a heady mix of evolutionary biology, thermodynamics (the study of energy flowing through a system), and cybernetics, a diffuse field pioneered in the 1940s by Norbert Wiener that studies how feedback loops can automatically regulate the behavior of machines and organisms. Considering a planetary civilization this way, Lem posits a set of feedbacks between the stability of a society and its degree of technological development. In its early stages, Lem writes, the development of technology is a self-reinforcing process that promotes homeostasis, the ability to maintain stability in the face of continual change and increasing disorder. That is, incremental advances in technology tend to progressively increase a society’s resilience against disruptive environmental forces such as pandemics, famines, earthquakes, and asteroid strikes. More advances lead to more protection, which promotes more advances still.

And yet, Lem argues, that same technology-driven positive feedback loop is also an Achilles heel for planetary civilizations, at least for ours here on Earth. As advances in science and technology accrue and the pace of discovery continues its acceleration, our society will approach an “information barrier” beyond which our brains—organs blindly, stochastically shaped by evolution for vastly different purposes—can no longer efficiently interpret and act on the deluge of information.

Past this point, our civilization should reach the end of what has been a period of exponential growth in science and technology. Homeostasis will break down, and without some major intervention, we will collapse into a “developmental crisis” from which we may never fully recover. Attempts to simply muddle through, Lem writes, would only lead to a vicious circle of boom-and-bust economic bubbles as society meanders blindly down a random, path-dependent route of scientific discovery and technological development. “Victories, that is, suddenly appearing domains of some new wonderful activity,” he writes, “will engulf us in their sheer size, thus preventing us from noticing some other opportunities—which may turn out to be even more valuable in the long run.”

Lem thus concludes that if our technological civilization is to avoid falling into decay, human obsolescence in one form or another is unavoidable. The sole remaining option for continued progress would then be the “automatization of cognitive processes” through development of algorithmic “information farms” and superhuman artificial intelligences. This would occur via a sophisticated plagiarism, the virtual simulation of the mindless, brute-force natural selection we see acting in biological evolution, which, Lem dryly notes, is the only technique known in the universe to construct philosophers, rather than mere philosophies.

The result is a disconcerting paradox, which Lem expresses early in the book: To maintain control of our own fate, we must yield our agency to minds exponentially more powerful than our own, created through processes we cannot entirely understand, and hence potentially unknowable to us. This is the basis for Lem’s explorations of The Singularity, and in describing its consequences he reaches many conclusions that most of its present-day acolytes would share. But there is a difference between the typical modern approach and Lem’s, not in degree, but in kind.

Unlike the commodified futurism now so common in the bubble-worlds of Silicon Valley billionaires, Lem’s forecasts weren’t really about seeking personal enrichment from market fluctuations, shiny new gadgets, or simplistic ideologies of “disruptive innovation.” In Summa Technologiae and much of his subsequent work, Lem instead sought to map out the plausible answers to questions that today are too often passed over in silence, perhaps because they fail to neatly fit into any TED Talk or startup business plan: Does technology control humanity, or does humanity control technology? Where are the absolute limits for our knowledge and our achievement, and will these boundaries be formed by the fundamental laws of nature or by the inherent limitations of our psyche? If given the ability to satisfy nearly any material desire, what is it that we actually would want?

Lem’s explorations of these questions are dominated by his obsession with chance, the probabilistic tension between chaos and order as an arbiter of human destiny. He had a deep appreciation for entropy, the capacity for disorder to naturally, spontaneously arise and spread, cursing some while sparing others. It was an appreciation born from his experience as a young man in Poland before, during, and after World War II, where he saw chance’s role in the destruction of countless dreams, and where, perhaps by pure chance alone, his Jewish heritage did not result in his death. “We were like ants bustling in an anthill over which the heel of a boot is raised,” he wrote in Highcastle, an autobiographical memoir. “Some saw its shadow, or thought they did, but everyone, the uneasy included, ran about their usual business until the very last minute, ran with enthusiasm, devotion—to secure, to appease, to tame the future.” From the accumulated weight of those experiences, Lem wrote in the New Yorker in 1986, he had “come to understand the fragility that all systems have in common,” and “how human beings behave under extreme conditions—how their behavior when they are under enormous pressure is almost impossible to predict.”

To Lem (and, to their credit, a sizeable number of modern thinkers), the Singularity is less an opportunity than a question mark, a multidimensional crucible in which humanity’s future will be forged.

I couldn’t help thinking of Lem’s question mark that summer in 2007. Within and around the gardens surrounding the neoclassical Palace of Fine Arts Theater where the Singularity Summit was taking place, dark and disruptive shadows seemed to loom over the plans and aspirations of the gathered well-to-do. But they had precious little to do with malevolent superintelligences or runaway nanotechnology. Between my motel and the venue, panhandlers rested along the sidewalk, or stood with empty cups at busy intersections, almost invisible to everyone. Walking outside during one break between sessions, I stumbled across a homeless man defecating between two well-manicured bushes. Even within the context of the conference, hints of desperation sometimes tinged the not-infrequent conversations about raising capital; the subprime mortgage crisis that would, a year later, spark the near-collapse of the world’s financial system was already unfolding. While our society’s titans of technology were angling for advantages to create what they hoped would be the best of all possible futures, the world outside reminded those who would listen that we are barely in control even today.

I attended two more Singularity Summits, in 2008 and 2009, and during that three-year period, all the much-vaunted performance gains in various technologies seemed paltry against a more obvious yet less-discussed pattern of accelerating change: the rapid, incessant growth in global ecological degradation, economic inequality, and societal instability. Here, forecasts tend to be far less rosy than those for our future capabilities in information technology. They suggest, with some confidence, that when and if we ever breathe souls into our machines, most of humanity will not be dreaming of transcending their biology, but of fresh water, a full belly, and a warm, safe bed. How useful would a superintelligent computer be if it were submerged by storm surges from rising seas or disconnected from a steady supply of electricity? Would biotech-boosted personal longevity be worthwhile in a world ravaged by armed, angry mobs of starving, displaced people? More than once I have wondered why so many high technologists are more concerned by as-yet-nonexistent threats than the much more mundane and all-too-real ones literally right before their eyes.

Lem was able to speak to my experience of the world outside the windows of the Singularity conference. A thread of humanistic humility runs through his work, a hard-gained certainty that technological development too often takes place only in service of our most primal urges, rewarding individual greed over the common good. He saw our world as exceedingly fragile, contingent upon a truly astronomical number of coincidences, where the vagaries of the human spirit had become the most volatile variables of all.

It is here that we find Lem’s key strength as a futurist. He refused to discount human nature’s influence on transhuman possibilities, and believed that the still-incomplete task of understanding our strengths and weaknesses as human beings was a crucial prerequisite for all speculative pathways to any post-Singularity future. Yet this strength also leads to what may be Lem’s great weakness, one which he shares with today’s hopeful transhumanists: an all-too-human optimism that shines through an otherwise-dispassionate darkness, a fervent faith that, when faced with the challenge of a transhuman future, we will heroically plunge headlong into its depths. In Lem’s view, humans, as imperfect as we are, shall always strive to progress and improve, seeking out all that is beautiful and possible rather than what may be merely convenient and profitable, and through this we may find salvation. That we might instead succumb to complacency, stagnation, regression, and extinction is something he acknowledges but can scarcely countenance. In the end, Lem, too, was seduced—though not by quasi-religious notions of personal immortality, endless growth, or cosmic teleology, but instead by the notion of an indomitable human spirit.

Like many other ideas from Summa Technologiae, this one finds its best expression in one of Lem’s works of fiction, his 1981 novella Golem XIV, in which a self-programming military supercomputer that has bootstrapped itself into sentience delivers a series of lectures critiquing evolution and humanity. Some would say it is foolish to seek truth in fiction, or to draw equivalence between an imaginary character’s thoughts and an author’s genuine beliefs, but for me the conclusion is inescapable. When the novella’s artificial philosopher makes its pronouncements through a connected vocoder, it is the human voice of Lem that emerges, uttering a prophecy of transcendence that is at once his most hopeful—and perhaps, in light of trends today, his most erroneous:

“I feel that you are entering an age of metamorphosis; that you will decide to cast aside your entire history, your entire heritage and all that remains of natural humanity—whose image, magnified into beautiful tragedy, is the focus of the mirrors of your beliefs; that you will advance (for there is no other way), and in this, which for you is now only a leap into the abyss, you will find a challenge, if not a beauty; and that you will proceed in your own way after all, since in casting off man, man will save himself.”

Freelance writer Lee Billings is the author of Five Billion Years of Solitude: The Search for Life Among the Stars.

Photograph by Forum/UIG/Getty Images

This article originally appeared in the Fall 2014 Nautilus Quarterly.