Let me tell you a short, fictitious story about a very real Spanish conquistador, Francisco de Orellana.

In 1546 he was captured and imprisoned in a small, dank cell. Before long he was summoned, and a judge sentenced him to death. To add insult to injury, the judge decided a bit of mental torture was in order. The sentence was passed on a Sunday, and the judge ordered that Orellana be hanged by the end of the week—but also that he be told of the event only on the morning of his execution. Each night, Orellana would fall asleep not knowing whether it would be his last.

But hang on a minute, thought Orellana (presumably maintaining his rationality in the face of death). The execution could not take place on Friday because, if Orellana hadn’t been informed by Thursday morning that he would be killed that very day, then he would need to be executed the following day, giving him a full day’s foresight. The torturous surprise would be missing. Therefore he would be killed on Thursday at the latest.

But Thursday, too, was impossible, since if he hadn’t been told by Wednesday morning that he would die that day, he would have the same full day’s notice. Following this line of thought, Orellana concluded that Wednesday, Tuesday, and all the other days leading back to the Sunday when his sentence was passed were equally unworkable appointments with the executioner.

Orellana concluded that he had deflected his torture by sheer dint of thought. The execution could never come as a surprise!

This well-known puzzle, called the “hangman paradox,” has existed in many different variants for years. To this day philosophers argue about where the catch lies. How could it be that so simple an instruction as the judge’s fails? Is there a hidden assumption? A subtle break in logic? Is the definition of the word surprise consistent throughout?

Indeterminism

At the heart of the hangman paradox is a tension between determinism and chance. Determinism tells us simply that the very first moment of a history decides every other moment up to the last. In the words of Persian poet and astronomer Omar Khayyam, “And the first Morning of Creation wrote / What the Last Dawn of Reckoning shall read.” Chance strips from us control and foresight, like Orellana in the judge’s imagination.

Orellana feels that he has led determinism to a triumph over the uncertainty of the judge’s sentence. But as we look more carefully at his victory, it starts to feel Pyrrhic. Objections arise. We may believe intuitively that the judge’s orders do in fact amplify the horror of the death sentence. The hangman paradox reflects the ambivalence of any deterministic claim.

And, indeed, most of us are probably already ambivalent about determinism. Psychologically we are repelled by the possibility that all our actions are fully determined by our history and the environment. In addition, our subjective experience of the world is full of randomness.

On the other hand, determinism is intellectually pleasing and has attracted the fealty of some of our greatest thinkers. German philosopher and mathematician Gottfried Leibniz developed it into “the principle of sufficient reason,” the idea that everything happens for a reason. Dutch philosopher Baruch Spinoza, a rationalist par excellence, used the principle of sufficient reason to argue for a completely deterministic universe in which God is basically the sum total of all causes. To Spinoza, the universe could be constructed only one way, allowing even God no choice.

For the determinist, randomness is a kind of illusion, the result of our ignorance as humans. Underneath that illusion lies a clockwork world. For a deep anti-determinist, the present and the past of nature itself have an indeterminate relationship. Both determinist and anti-determinist, then, would agree that randomness exists. But for the one, it is a human failing, and for the other, a physical property.

And this is where the physicist enters the debate. As we will see, quantum physics offers an interesting resolution to the determinism-randomness duality that is capable of embracing both sides. It portrays determinism at the level of the whole universe, while allowing that any part of the universe may be fundamentally random.

A brief uncertain history

Perhaps the scientific result most clearly in the determinist camp was Newton’s discovery of the laws of motion. They allowed scientists to predict precisely the position of planets far into the future. And given that every other object in the universe also followed those laws, all of existence appeared to be eminently deterministic. The French physicist Pierre-Simon de Laplace reasoned that a sufficiently powerful intelligence that could “comprehend all the forces by which nature is animated” and knew the initial positions of every object could know everything from start to finish: “… nothing would be uncertain and the future, as the past, would be present to its eyes.”

The clockwork worldview of Newton and Laplace reigned for roughly 200 years until German physicist Max Planck arrived on the scene. Planck realized that the classical physics of Newton and his later peers was incomplete and proposed that energy comes in tiny but discrete chunks called quanta. Fellow German physicist Werner Heisenberg pointed out that the quantum picture brought with it a new kind of uncertainty.

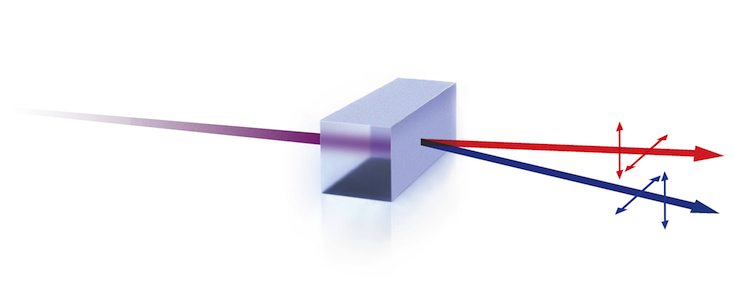

Imagine, he said, that we want to know the position and velocity of a single electron. To see it we must bounce light off the electron and then detect the reflected light with a microscope. But there is a problem: Light interacting with the electron will give it a push, so the position we see in the microscope will no longer be accurate. To make matters worse, the push will impart a random velocity to the electron, making both the position and velocity uncertain. Heisenberg argued we can never know the position and velocity at the same time, no matter how sensitive our tools.
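In modern notation, Heisenberg’s limit is usually stated for position and momentum (mass times velocity) rather than for velocity itself. As a sketch, writing σ_x and σ_p for the statistical spreads in position and momentum (symbols introduced here for illustration, not taken from the text above) and ħ for the reduced Planck constant:

```latex
\sigma_x \, \sigma_p \;\ge\; \frac{\hbar}{2}, \qquad p = m v
```

Shrinking one spread forces the other to grow, however good the instrument.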

Perhaps this is not surprising. As objects interact with each other in complex ways, you might say, certain of their properties become uncertain. But even in the absence of direct interaction uncertainty can be generated.

Suppose you take a number of electrons in exactly the same physical state. Now choose half of them at random and measure their position, while measuring the velocity of the other half. Physicists have found that the more precisely you measure the position of the first half, the less precisely you are able to measure the velocity of the second half. Heisenberg’s uncertainty again. But this time, there is no interaction between the two halves during the measurement. And what’s more, this uncertainty correlation persists regardless of the distance between the two halves, even at enormous expanses. The reactions in one half to measurements in the other are instantaneous.

This picture of the world really bugged Einstein, both because the communication of information across large distances seemed to violate his theory of relativity and because he objected to the idea that reality was deeply indeterminate. He argued that quantum mechanics was incomplete and proposed a thought experiment to prove it. In his experiment, co-designed with his colleagues Boris Podolsky and Nathan Rosen, two particles interact with each other in such a way that their position and velocity become perfectly correlated. Measuring the position of one would let us know the position of the other, and the same for their velocity.

But if we could determine the exact position and velocity of both particles, it would contradict Heisenberg’s uncertainty principle (we’d measure one particle to determine its position and the other to determine its velocity). And since quantum physics is based on Heisenberg, it must be incomplete. This became known as the EPR paradox. (Subsequent experiments by Alain Aspect and others have reconciled these troubling aspects of quantum mechanics, proving Einstein and his colleagues wrong.)

Quantum correlations between objects and events like the ones we’ve described are called entanglement. Quantum physics stipulates that all the objects in the universe that have interacted have become entangled. Interaction simply means that objects, say atoms, share information by exchanging other objects, say photons. More generally, anytime the properties of one object are affected by another object, we say that they interact.

And pretty much all matter is entangled in some way. That’s because matter is constantly interacting via light and other fields. What’s more, in its distant past, all matter interacted even more intimately, given that the entire universe had (arguably) a volume smaller than 10⁻⁴³ meters cubed (at about 10⁻³⁴ seconds after the Big Bang).

Physics, then, has taken us from the precisely determined worldview of Newton and Laplace to the locally uncertain world of Heisenberg to the globally uncertain world of Aspect. If all matter is entangled and interdependent, then our ability to know the properties of any part of our universe depends on inscrutable connections to distant objects. This simple observation may be the basis for a reconciliation between determinism and chance.

The origins of probabilities

To see how this works, we must first assume that the quantum physics we have studied in small systems in the laboratory applies to the universe as a whole.

If so, the universe is in a gigantic entangled state. It becomes possible for the universe as a whole to evolve completely deterministically, while individual parts of the universe maintain a non-deterministic uncertainty. From our limited perspective, in our corner of the universe, looking at the little bits of matter we have access to and without knowledge of the relevant entanglements, things would indeed appear uncertain. At the same time, from a perspective outside the universe—from which everything is seen as a whole—they would not.

In this view, we live in a deterministic universe but cannot escape uncertainty. And entanglement becomes an important source of uncertainty for our macroscopic lives. A coin toss, for example, would be random for two sets of reasons. The first set is our own ignorance of the initial conditions: Our hand is in an unexpected position, the velocity we give the coin is random, and so on. But even if we corrected for each of these factors and made a toss under conditions that appear identical, the outcome would still, in principle, be random because of a second, independent source of uncertainty: The coin, our hand, and everything else is linked to other matter through entanglement. And the properties of that other matter are out of our control.

A more important consequence than random coin tosses is the uncertainty that is the basis of the random DNA mutations necessary for evolution. A truly indeterminate local physics guarantees that this process has some “noise,” and that mutation is always possible in principle. The chemistry of DNA replication involves, after all, exchanges of electrons and atoms, which are quantum objects.

The deterministic universe fits into a broader picture that is emerging from modern physics as being exquisitely balanced. The degree of a system’s disorder is measured as entropy, a quantity that reflects how many different ways the parts of a system can be arranged to make the whole. A perfectly deterministic universe has zero entropy, since there is only a single arrangement possible (with future rearrangements determined entirely by fixed laws). An increasing entanglement with the rest of the universe balances our observation of increasing entropy (or disorder) in our part of the universe, in line with the second law of thermodynamics. Because increasing entanglement correlates states with each other, it drives entropy down. On the whole, entropy always stays at zero.
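The bookkeeping behind this claim can be checked on a toy model. The sketch below is only an illustration under stated assumptions: the “universe” is just two entangled quantum bits, and the function name `von_neumann_entropy` and the variable names are made up for this example. It computes the standard quantum (von Neumann) entropy of the whole pair and of one bit taken alone.

```python
import numpy as np


def von_neumann_entropy(rho):
    """Return S = -Tr(rho ln rho) in nats, computed from the eigenvalues of rho."""
    eigenvalues = np.linalg.eigvalsh(rho)
    # Discard numerically zero eigenvalues: 0 * ln(0) is taken to be 0.
    nonzero = eigenvalues[eigenvalues > 1e-12]
    return float(-np.sum(nonzero * np.log(nonzero)))


# Toy "universe" (an assumption for illustration): two qubits in the
# entangled state (|00> + |11>) / sqrt(2).
psi = np.array([1.0, 0.0, 0.0, 1.0]) / np.sqrt(2.0)
rho_whole = np.outer(psi, psi.conj())

# State of the first qubit alone: trace out the second qubit.
# After reshaping, the four axes are (a, b, a', b'); we sum over b = b'.
rho_part = np.trace(rho_whole.reshape(2, 2, 2, 2), axis1=1, axis2=3)

entropy_whole = von_neumann_entropy(rho_whole)
entropy_part = von_neumann_entropy(rho_part)

print("entropy of the whole pair:", round(entropy_whole, 6))
print("entropy of one qubit alone:", round(entropy_part, 6))
```

In this toy case the pair as a whole has zero entropy (up to rounding), while either qubit on its own has entropy ln 2, about 0.693: full certainty globally, maximal uncertainty locally. Whether the real universe behaves like this two-qubit sketch is exactly the assumption the argument rests on.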

Recent measurements also suggest that the universe as a whole has zero energy, zero charge, and zero angular momentum. How is this possible? All energy due to matter (which is positive) is canceled by an equal amount of gravitational energy (which is negative). There are equal amounts of positive and negative charge, and we cannot create one without creating the other. Zero angular momentum means that the universe has no net spin. The universe, then, is a whole lot of nothing: yin and yang that cancel each other out. Locally, in our own neighborhood, we seem to have lots of stuff: matter, charges, motion, entropy, and uncertainty. But globally, none of these exist, never have and never will.

This picture would please the ancient Greek philosopher Epicurus. He claimed that nothing begets nothing, meaning that something cannot be created out of nothing. Otherwise, he said, anything could be created out of anything. This view is contrary to the official Vatican position regarding cosmogony, but it is fully consistent with modern physics.

And determinism, with its zero entropy, fits nicely into this paradigm.

Gilbert Keith Chesterton believed that paradoxes have value because they are “truth standing on its head to gain attention.” In our case, we have, like Orellana, reasoned our way to a place of profound determinism. As in the hangman paradox, it coexists, albeit uncomfortably, with a profound uncertainty.

Vlatko Vedral is the author of Decoding Reality: The Universe as Quantum Information.