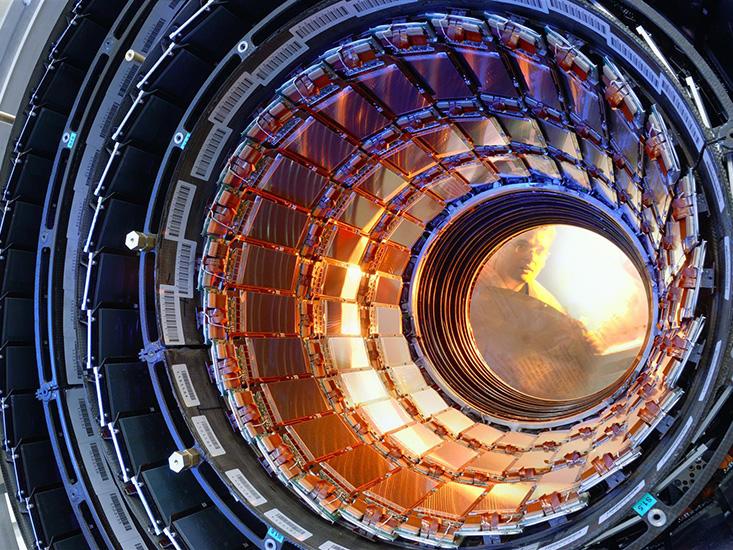

Particle physicists had two nightmares before the Higgs particle was discovered in 2012. The first was that the Large Hadron Collider (LHC) particle accelerator would see precisely nothing. For if it did, it would likely be the last large accelerator ever built to probe the fundamental makeup of the cosmos. The second was that the LHC would discover the Higgs particle predicted by theoretical physicist Peter Higgs in 1964 … and nothing else.

Each time we peel back one layer of reality, other layers beckon. So each important new development in science generally leaves us with more questions than answers. But it also usually leaves us with at least the outline of a road map to help us begin to seek answers to those questions. The successful discovery of the Higgs particle, and with it the validation of the existence of an invisible background Higgs field throughout space (in the quantum world, every particle like the Higgs is associated with a field), was a profound validation of the bold scientific developments of the 20th century.

However, the words of Sheldon Glashow continue to ring true: “The Higgs is like a toilet. It hides all the messy details we would rather not speak of.” The Higgs field interacts with most elementary particles as they travel through space, producing a resistive force that slows their motion and makes them appear massive. Thus, the masses of elementary particles that we measure, and that make the world of our experience possible, are something of an illusion, an accident of our particular experience.

As elegant as this idea might be, it is essentially an ad hoc addition to the Standard Model of physics, which explains three of the four known forces of nature and how these forces interact with matter. It is added to the theory to do what is required to accurately model the world of our experience. But it is not required by the theory. The universe could have happily existed with massless particles and a long-range weak force (which, along with the strong force, gravity, and electromagnetism, makes up the four known forces). We would just not be here to ask about them. Moreover, the detailed physics of the Higgs is undetermined within the Standard Model alone. The Higgs could have been 20 times heavier, or 100 times lighter.

Why, then, does the Higgs exist at all? And why does it have the mass it does? (Recognizing that whenever scientists ask “Why?” we really mean “How?”) If the Higgs did not exist, the world we see would not exist, but surely that is not an explanation. Or is it? Ultimately to understand the underlying physics behind the Higgs is to understand how we came to exist. When we ask, “Why are we here?,” at a fundamental level we may as well be asking, “Why is the Higgs here?” And the Standard Model gives no answer to this question.

Some hints do exist, however, coming from a combination of theory and experiment. Shortly after the fundamental structure of the Standard Model became firmly established, in 1974, and well before the details were experimentally verified over the next decade, two different groups of physicists at Harvard, where both Sheldon Glashow and Steven Weinberg were working, noticed something interesting. Glashow, along with Howard Georgi, did what Glashow did best: They looked for patterns among the existing particles and forces and sought out new possibilities using the mathematics of group theory.

In the Standard Model the weak and electromagnetic forces of nature are unified at a high-energy scale, into a single force that physicists call the “electroweak force.” This means that the mathematics governing the weak and electromagnetic forces are the same, both constrained by the same mathematical symmetry, and the two forces are different reflections of a single underlying theory. But the symmetry is “spontaneously broken” by the Higgs field, which interacts with the particles that convey the weak force, but not the particles that convey the electromagnetic force. This accident of nature causes these two forces to appear as two separate and distinct forces at scales we can measure—with the weak force being short-range and electromagnetism remaining long-range.

Georgi and Glashow tried to extend this idea to include the strong force, and discovered that all of the known particles and the three non-gravitational forces could naturally fit within a single fundamental symmetry structure. They then speculated that this symmetry could spontaneously break at some ultrahigh energy scale (and short distance scale) far beyond the range of current experiments, leaving two separate and distinct unbroken symmetries left over—resulting in separate strong and electroweak forces. Subsequently, at a lower energy and larger distance scale, the electroweak symmetry would break, separating the electroweak force into the short-range weak and the long-range electromagnetic force.

They called such a theory, modestly, a Grand Unified Theory (GUT).

At around the same time, Weinberg and Georgi along with Helen Quinn noticed something interesting—following the work of Frank Wilczek, David Gross, and David Politzer. While the strong interaction got weaker at smaller distance scales, the electromagnetic and weak interactions got stronger.

It didn’t take a rocket scientist to wonder whether the strength of the three different interactions might become identical at some small-distance scale. When they did the calculations, they found (with the accuracy with which the interactions were then measured) that such a unification looked possible, but only if the scale of unification was about 15 orders of magnitude smaller than the size of the proton.

This was good news if the unified theory was the one proposed by Howard Georgi and Glashow—because if all the particles we observe in nature got unified this way, then new particles (called gauge bosons) would exist that produce transitions between quarks (which make up protons and neutrons), and electrons and neutrinos. That would mean protons could decay into other lighter particles, which we could potentially observe. As Glashow put it, “Diamonds aren’t forever.”

Even then it was known that protons must have an incredibly long lifetime. Not just because we still exist almost 14 billion years after the big bang, but because we don’t all die of cancer as children. If protons decayed with an average lifetime shorter than about a billion billion years, then enough protons would decay in our bodies during our childhood to produce enough radiation to kill us. Remember that in quantum mechanics, processes are probabilistic. If an average proton lives a billion billion years, and if one has a billion billion protons, then on average one will decay each year. There are a lot more than a billion billion protons in our bodies.
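The arithmetic behind this argument can be sketched in a few lines of Python. This is only an illustration, assuming simple exponential decay with a fixed mean lifetime; the function name `expected_decays` is hypothetical and chosen here for clarity.

```python
import math

def expected_decays(n_protons: float, mean_lifetime_years: float,
                    years: float = 1.0) -> float:
    """Expected number of decays among n_protons over `years`,
    assuming exponential decay with the given mean lifetime."""
    # Probability that one proton decays within the window is
    # 1 - exp(-t / tau); expm1 keeps precision when t / tau is tiny.
    p_decay = -math.expm1(-years / mean_lifetime_years)
    return n_protons * p_decay

# A billion billion (1e18) protons, each with a mean lifetime of 1e18 years:
# the result is very close to one decay per year.
print(expected_decays(1e18, 1e18))
```

When the lifetime is vastly longer than the observation window, this reduces to the rule of thumb used above: the number of protons divided by the lifetime in years.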

However, with the incredibly small proposed distance scale and therefore the incredibly large mass scale associated with spontaneous symmetry breaking in Grand Unification, the new gauge bosons would get large masses. That would make the interactions they mediate so short-range that they would be unbelievably weak on the scale of protons and neutrons today. As a result, while protons could decay, they might live, in this scenario, perhaps a million billion billion billion years before decaying. Still time to hold onto your growth stocks.

With the results of Glashow and Georgi, and Georgi, Quinn, and Weinberg, the smell of grand synthesis was in the air. After the success of the electroweak theory, particle physicists were feeling ambitious and ready for further unification.

How would one know if these ideas were correct, however? There was no way to build an accelerator to probe an energy scale a million billion times greater than the rest mass energy of protons. Such a machine would need a circumference comparable to that of the moon’s orbit. Even if it were possible, considering the earlier debacle over the Superconducting Super Collider, no government would ever foot the bill.

Happily, there was another way, using the kind of probability arguments I just presented that give limits to the proton lifetime. If the new Grand Unified Theory predicted a proton lifetime of, say, a thousand billion billion billion years, then if one could put a thousand billion billion billion protons in a single detector, on average one of them would decay each year.

Where could one find so many protons? Simple: in about 3,000 tons of water.
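A rough count of the protons in such a tank can be sketched as follows. The sketch assumes metric tons, pure H2O, and counts every proton in each molecule (the two hydrogen nuclei plus the eight protons bound in the oxygen nucleus); the function name `protons_in_water` is hypothetical.

```python
AVOGADRO = 6.02214076e23       # molecules per mole (exact SI value)
WATER_MOLAR_MASS_G = 18.015    # grams per mole of H2O
PROTONS_PER_WATER = 10         # 8 in the oxygen nucleus + 1 in each hydrogen

def protons_in_water(mass_tonnes: float) -> float:
    """Approximate number of protons in a mass of water given in metric tons."""
    grams = mass_tonnes * 1e6
    molecules = grams / WATER_MOLAR_MASS_G * AVOGADRO
    return molecules * PROTONS_PER_WATER

n = protons_in_water(3000)
print(f"{n:.1e} protons in 3,000 tons of water")
```

Under these assumptions the count comes out on the order of 10^33, comfortably more than the thousand billion billion billion (10^30) protons the argument calls for.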

So all that was required was to get a tank of water, put it in the dark, make sure there were no radioactivity backgrounds, surround it with sensitive phototubes that can detect flashes of light in the detector, and then wait for a year to see a burst of light when a proton decayed. As daunting as this may seem, at least two large experiments were commissioned and built to do just this, one deep underground next to Lake Erie in a salt mine, and one in a mine near Kamioka, Japan. The mines were necessary to screen out incoming cosmic rays that would otherwise produce a background that would swamp any proton decay signal.

Both experiments began taking data around 1982–83. Grand Unification seemed so compelling that the physics community was confident a signal would soon appear and Grand Unification would mean the culmination of a decade of amazing change and discovery in particle physics—not to mention another Nobel Prize for Glashow and maybe some others.

Unfortunately, nature was not so kind in this instance. No signals were seen in the first year, the second, or the third. The simplest elegant model proposed by Glashow and Georgi was soon ruled out. But once the Grand Unification bug had caught on, it was not easy to let it go. Other proposals were made for unified theories that might cause proton decay to be suppressed beyond the limits of the ongoing experiments.

On Feb. 23, 1987, however, another event occurred that demonstrates a maxim I have found is almost universal: Every time we open a new window on the universe, we are surprised. On that day a group of astronomers observed, in photographic plates obtained during the night, the closest exploding star (a supernova) seen in almost 400 years. The star, about 160,000 light-years away, was in the Large Magellanic Cloud—a small satellite galaxy of the Milky Way observable in the southern hemisphere.

If our ideas about exploding stars are correct, most of the energy released should be in the form of neutrinos, despite the fact that the visible light released is so great that supernovas are the brightest cosmic fireworks in the sky when they explode (at a rate of about one explosion per 100 years per galaxy). Rough estimates then suggested that the huge IMB (Irvine-Michigan-Brookhaven) and Kamiokande water detectors should see about 20 neutrino events. When the IMB and Kamiokande experimentalists went back and reviewed their data for that day, lo and behold IMB displayed eight candidate events in a 10-second interval, and Kamiokande displayed 11 such events. In the world of neutrino physics, this was a flood of data. The field of neutrino astrophysics had suddenly reached maturity. These 19 events produced perhaps 1,900 papers by physicists, such as me, who realized that they provided an unprecedented window into the core of an exploding star, and a laboratory not just for astrophysics but also for the physics of neutrinos themselves.

Spurred on by the realization that large proton-decay detectors might serve a dual purpose as new astrophysical neutrino detectors, several groups began to build a new generation of such dual-purpose detectors. The largest one in the world was again built in the Kamioka mine and was called Super-Kamiokande, and with good reason. This mammoth 50,000-ton tank of water, surrounded by 11,800 phototubes, was operated in a working mine, yet the experiment was maintained with the purity of a laboratory clean room. This was absolutely necessary because in a detector of this size one had to worry not only about external cosmic rays, but also about internal radioactive contaminants in the water that could swamp any signals being searched for.

Meanwhile, interest in a related astrophysical neutrino signature also reached a new high during this period. The sun produces neutrinos due to the nuclear reactions in its core that power it, and over 20 years, using a huge underground detector, physicist Ray Davis had detected solar neutrinos, but had consistently found an event rate about a factor of three below what was predicted using the best models of the sun. A new type of solar neutrino detector was built inside a deep mine in Sudbury, Canada, which became known as the Sudbury Neutrino Observatory (SNO).

Super-Kamiokande has now been operating almost continuously, through various upgrades, for more than 20 years. No proton-decay signals have been seen, and no new supernovas observed. However, the precision observations of neutrinos at this huge detector, combined with complementary observations at SNO, definitely established that the solar neutrino deficit observed by Ray Davis is real, and moreover that it is not due to astrophysical effects in the sun but rather due to the properties of neutrinos. The implication was that at least one of the three known types of neutrinos is not massless. Since the Standard Model does not accommodate neutrinos’ masses, this was the first definitive observation that some new physics, beyond the Standard Model and beyond the Higgs, must be operating in nature.

Soon after this, observations of higher-energy neutrinos that regularly bombard Earth as high-energy cosmic-ray protons hit the atmosphere and produce a downward shower of particles, including neutrinos, demonstrated that yet a second neutrino has mass. This mass is somewhat larger, but still far smaller than the mass of the electron. For these results team leaders at SNO and Super-Kamiokande were awarded the 2015 Nobel Prize in Physics, a week before I wrote the first draft of these words. To date these tantalizing hints of new physics are not explained by current theories.

The absence of proton decay, while disappointing, turned out to be not totally unexpected. Since Grand Unification was first proposed, the physics landscape had shifted slightly. More precise measurements of the actual strengths of the three non-gravitational interactions—combined with more sophisticated calculations of the change in the strength of these interactions with distance—demonstrated that if the particles of the Standard Model are the only ones existing in nature, the strength of the three forces will not unify at a single scale. In order for Grand Unification to take place, some new physics at energy scales beyond those that have been observed thus far must exist. The presence of new particles would not only change the energy scale at which the three known interactions might unify, it would also tend to drive up the Grand Unification scale and thus suppress the rate of proton decay—leading to predicted lifetimes in excess of a million billion billion billion years.

As these developments were taking place, theorists were driven by new mathematical tools to explore a possible new type of symmetry in nature, which became known as supersymmetry. This fundamental symmetry is different from any previous known symmetry, in that it connects the two different types of particles in nature, fermions (particles with half-integer spins) and bosons (particles with integer spins). The upshot of this is that if this symmetry exists in nature, then for every known particle in the Standard Model at least one corresponding new elementary particle must exist. For every known boson there must exist a new fermion. For every known fermion there must exist a new boson.

Since we haven’t seen these particles, this symmetry cannot be manifest in the world at the level we experience it, and it must be broken, meaning the new particles will all get masses that could be heavy enough so that they haven’t been seen in any accelerator constructed thus far.

What could be so attractive about a symmetry that suddenly doubles all the particles in nature without any evidence of any of the new particles? In large part the seduction lay in the very fact of Grand Unification. Because if a Grand Unified theory exists at a mass scale of 15 to 16 orders of magnitude higher energy than the rest mass of the proton, this is also about 13 orders of magnitude higher than the scale of electroweak symmetry breaking. The big question is why and how such a huge difference in scales can exist for the fundamental laws of nature. In particular, if the Standard Model Higgs is the true last remnant of the Standard Model, then the question arises, Why is the energy scale of Higgs symmetry breaking 13 orders of magnitude smaller than the scale of symmetry breaking associated with whatever new field must be introduced to break the GUT symmetry into its separate component forces?
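The sizes of these gaps can be checked with a short calculation. The sketch below uses the proton rest-mass energy and, as an assumed stand-in for the electroweak symmetry-breaking scale, the Higgs vacuum expectation value of about 246 GeV; the two GUT scales are illustrative values consistent with the range quoted above, not measured quantities.

```python
import math

PROTON_REST_ENERGY_GEV = 0.938   # proton rest-mass energy
ELECTROWEAK_SCALE_GEV = 246.0    # Higgs vacuum expectation value (assumed proxy)

def orders_of_magnitude(high_gev: float, low_gev: float) -> float:
    """Base-10 logarithm of the ratio between two energy scales."""
    return math.log10(high_gev / low_gev)

for gut_scale_gev in (1e15, 1e16):  # illustrative GUT scales (assumed)
    above_proton = orders_of_magnitude(gut_scale_gev, PROTON_REST_ENERGY_GEV)
    above_ew = orders_of_magnitude(gut_scale_gev, ELECTROWEAK_SCALE_GEV)
    print(f"GUT scale {gut_scale_gev:.0e} GeV: "
          f"{above_proton:.1f} orders above the proton, "
          f"{above_ew:.1f} above the electroweak scale")
```

With these inputs the gap to the electroweak scale comes out between roughly 12.6 and 13.6 orders of magnitude, in line with the "about 13" quoted in the text.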

The problem is a little more severe than it appears. When one considers the effects of virtual particles (which appear and disappear on timescales so short that their existence can only be probed indirectly), including particles of arbitrarily large mass, such as the gauge particles of a presumed Grand Unified Theory, these tend to drive up the mass and symmetry-breaking scale of the Higgs so that it essentially becomes close to, or identical to, the heavy GUT scale. This generates a problem that has become known as the naturalness problem. It is technically unnatural to have a huge hierarchy between the scale at which the electroweak symmetry is broken by the Higgs particle and the scale at which the GUT symmetry is broken by whatever new heavy scalar field breaks that symmetry.

The mathematical physicist Edward Witten argued in an influential paper in 1981 that supersymmetry had a special property. It could tame the effect that virtual particles of arbitrarily high mass and energy have on the properties of the world at the scales we can currently probe. Because virtual fermions and virtual bosons of the same mass produce quantum corrections that are identical except for a sign, if every boson is accompanied by a fermion of equal mass, then the quantum effects of the virtual particles will cancel out. This means that the effects of virtual particles of arbitrarily high mass and energy on the physical properties of the universe on scales we can measure would now be completely removed.

If, however, supersymmetry is itself broken (as it must be or all the supersymmetric partners of ordinary matter would have the same mass as the observed particles and we would have observed them), then the quantum corrections will not quite cancel out. Instead they would yield contributions to masses that are of the same order as the supersymmetry-breaking scale. If that scale were comparable to the scale of electroweak symmetry breaking, then it would explain why the Higgs mass scale is what it is.

And it also means we should expect to begin to observe a lot of new particles—the supersymmetric partners of ordinary matter—at the scale currently being probed at the LHC.

This would solve the naturalness problem because it would protect the Higgs boson masses from possible quantum corrections that could drive them up to be as large as the energy scale associated with Grand Unification. Supersymmetry could allow a “natural” large hierarchy in energy (and mass) separating the electroweak scale from the Grand Unified scale.

That supersymmetry could in principle solve the hierarchy problem, as it has become known, greatly increased its stock with physicists. It caused theorists to begin to explore realistic models that incorporated supersymmetry breaking and to explore the other physical consequences of this idea. When they did so, the stock price of supersymmetry went through the roof. For if one included the possibility of spontaneously broken supersymmetry into calculations of how the three non-gravitational forces change with distance, then suddenly the strength of the three forces would naturally converge at a single, very small-distance scale. Grand Unification became viable again!
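The convergence described above can be illustrated with the standard textbook one-loop formula for how the three inverse coupling strengths change with energy. This is a rough sketch, not the calculation the physicists themselves carried out: it assumes rounded input values at the Z boson mass, places all superpartners right at that scale, and ignores threshold and two-loop effects. The function names are hypothetical.

```python
import math

M_Z = 91.19  # GeV, Z boson mass used as the starting scale

# Approximate inverse couplings at M_Z for U(1), SU(2), SU(3),
# with the hypercharge coupling in the usual GUT normalization.
ALPHA_INV_MZ = (59.0, 29.6, 8.5)

# One-loop beta coefficients b_i for the three gauge groups
B_SM = (41 / 10, -19 / 6, -7.0)   # Standard Model particle content
B_MSSM = (33 / 5, 1.0, -3.0)      # minimal supersymmetric extension

def alpha_inv(mu_gev, b):
    """One-loop inverse couplings at energy scale mu_gev."""
    t = math.log(mu_gev / M_Z)
    return tuple(a - bi * t / (2 * math.pi) for a, bi in zip(ALPHA_INV_MZ, b))

def unification_mismatch(b):
    """Scale where the U(1) and SU(2) couplings meet, and the gap
    between that common value and the SU(3) coupling at the same scale."""
    a1, a2, _ = ALPHA_INV_MZ
    b1, b2, _ = b
    t = 2 * math.pi * (a1 - a2) / (b1 - b2)
    mu = M_Z * math.exp(t)
    c1, _, c3 = alpha_inv(mu, b)
    return mu, abs(c3 - c1)

for name, b in (("Standard Model", B_SM), ("Supersymmetric", B_MSSM)):
    mu, gap = unification_mismatch(b)
    print(f"{name}: couplings 1 and 2 meet near {mu:.1e} GeV, "
          f"coupling 3 misses by {gap:.2f}")
```

With these inputs the Standard Model couplings miss one another by several units of inverse coupling, while the supersymmetric version brings all three to within a fraction of a unit at a scale of roughly 10^16 GeV.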

Models in which supersymmetry is broken have another attractive feature. It was pointed out, well before the top quark was discovered, that if the top quark was heavy, then through its interactions with other supersymmetric partners, it could produce quantum corrections to the Higgs particle properties that would cause the Higgs field to form a coherent background field throughout space at its currently measured energy scale if Grand Unification occurred at a much higher, superheavy scale. In short, the energy scale of electroweak symmetry breaking could be generated naturally within a theory in which Grand Unification occurs at a much higher energy scale. When the top quark was discovered and indeed was heavy, this added to the attractiveness of the possibility that supersymmetry breaking might be responsible for the observed energy scale of the weak interaction.

All of this comes at a cost, however. For the theory to work, there must be two Higgs bosons, not just one. Moreover, one would expect to begin to see the new supersymmetric particles if one built an accelerator such as the LHC, which could probe for new physics near the electroweak scale. Finally, in what looked for a while like a rather damning constraint, the lightest Higgs in the theory could not be too heavy or the mechanism wouldn’t work.

As searches for the Higgs continued without yielding any results, accelerators began to push closer and closer to the theoretical upper limit on the mass of the lightest Higgs boson in supersymmetric theories. The value was something like 135 times the mass of the proton, with details to some extent depending on the model. If the Higgs could have been ruled out up to that scale, it would have suggested all the hype about supersymmetry was just that.

Well, things turned out differently. The Higgs that was observed at the LHC has a mass about 125 times the mass of the proton. Perhaps a grand synthesis was within reach.

The answer at present is … not so clear. The signatures of new supersymmetric partners of ordinary particles should be so striking at the LHC, if they exist, that many of us thought that the LHC had a much greater chance of discovering supersymmetry than it did of discovering the Higgs. It didn’t turn out that way. Following three years of LHC runs, there are no signs of supersymmetry whatsoever. The situation is already beginning to look uncomfortable. The lower limits that can now be placed on the masses of supersymmetric partners of ordinary matter are getting higher. If they get too high, then the supersymmetry-breaking scale would no longer be close to the electroweak scale, and many of the attractive features of supersymmetry breaking for resolving the hierarchy problem would go away.

But the situation is not yet hopeless, and the LHC has been turned on again, this time at higher energy. It could be that supersymmetric particles will soon be discovered.

If they are, this will have another important consequence. One of the bigger mysteries in cosmology is the nature of the dark matter that appears to dominate the mass of all galaxies we can see. There is so much of it that it cannot be made of the same particles as normal matter. If it were, for example, the predictions of the abundance of light elements such as helium produced in the big bang would no longer agree with observation. Thus physicists are reasonably certain that the dark matter is made of a new type of elementary particle. But what type?

Well, the lightest supersymmetric partner of ordinary matter is, in most models, absolutely stable and has many of the properties of neutrinos. It would be weakly interacting and electrically neutral, so that it wouldn’t absorb or emit light. Moreover, calculations that I and others performed more than 30 years ago showed that the remnant abundance today of the lightest supersymmetric particle left over after the big bang would naturally fall in the range needed for it to be the dark matter dominating the mass of galaxies.

In that case our galaxy would have a halo of dark matter particles whizzing throughout it, including through the room in which you are reading this. As a number of us also realized some time ago, this means that if one designs sensitive detectors and puts them underground, not unlike, at least in spirit, the neutrino detectors that already exist underground, one might directly detect these dark matter particles. Around the world a half dozen beautiful experiments are now going on to do just that. So far nothing has been seen, however.

So, we are in potentially the best of times or the worst of times. A race is going on between the detectors at the LHC and the underground direct dark matter detectors to see who might discover the nature of dark matter first. If either group reports a detection, it will herald the opening up of a whole new world of discovery, leading potentially to an understanding of Grand Unification itself. And if no discovery is made in the coming years, we might rule out the notion of a simple supersymmetric origin of dark matter—and in turn rule out the whole notion of supersymmetry as a solution of the hierarchy problem. In that case we would have to go back to the drawing board, except if we don’t see any new signals at the LHC, we will have little guidance about which direction to head in order to derive a model of nature that might actually be correct.

Things got more interesting when the LHC reported a tantalizing possible signal due to a new particle about six times heavier than the Higgs particle. This particle did not have the characteristics one would expect for any supersymmetric partner of ordinary matter. In general the most exciting spurious hints of signals go away when more data are amassed, and about six months after this signal first appeared, after more data were amassed, it disappeared. If it had not, it could have changed everything about the way we think about Grand Unified Theories and electroweak symmetry, suggesting instead a new fundamental force and a new set of particles that feel this force. But while it generated many hopeful theoretical papers, nature seems to have chosen otherwise.

The absence of clear experimental direction or confirmation of supersymmetry has thus far not bothered one group of theoretical physicists. The beautiful mathematical aspects of supersymmetry encouraged, in 1984, the resurrection of an idea that had been dormant since the 1960s when Yoichiro Nambu and others tried to understand the strong force as if it were a theory of quarks connected by string-like excitations. When supersymmetry was incorporated in a quantum theory of strings, to create what became known as superstring theory, some amazingly beautiful mathematical results began to emerge, including the possibility of unifying not just the three non-gravitational forces, but all four known forces in nature into a single consistent quantum field theory.

However, the theory requires a host of new spacetime dimensions to exist, none of which has been, as yet, observed. Also, the theory makes no other predictions that are yet testable with currently conceived experiments. And the theory has recently gotten a lot more complicated so that it now seems that strings themselves are probably not even the central dynamical variables in the theory.

None of this dampened the enthusiasm of a hard core of dedicated and highly talented physicists who have continued to work on superstring theory, now called M-theory, over the 30 years since its heyday in the mid-1980s. Great successes are periodically claimed, but so far M-theory lacks the key element that makes the Standard Model such a triumph of the scientific enterprise: the ability to make contact with the world we can measure, resolve otherwise inexplicable puzzles, and provide fundamental explanations of how our world has arisen as it has. This doesn’t mean M-theory isn’t right, but at this point it is mostly speculation, although well-meaning and well-motivated speculation.

It is worth remembering that if the lessons of history are any guide, most forefront physical ideas are wrong. If they weren’t, anyone could do theoretical physics. It took several centuries or, if one counts back to the science of the Greeks, several millennia of hits and misses to come up with the Standard Model.

So this is where we are. Are great new experimental insights just around the corner that may validate, or invalidate, some of the grander speculations of theoretical physicists? Or are we on the verge of a desert where nature will give us no hint of what direction to search in to probe deeper into the underlying nature of the cosmos? We’ll find out, and we will have to live with the new reality either way.

Lawrence M. Krauss is a theoretical physicist and cosmologist, the director of the Origins Project and the foundation professor in the School of Earth and Space Exploration at Arizona State University. He is also the author of bestselling books including A Universe from Nothing and The Physics of Star Trek.

Copyright © 2017 by Lawrence M. Krauss. From the forthcoming book The Greatest Story Ever Told—So Far: Why Are We Here? By Lawrence M. Krauss to be published by Atria Books, a Division of Simon & Schuster, Inc. Printed by permission.