Ada Lovelace was an English mathematician who lived in the first half of the 19th century. (She was also the daughter of the poet Lord Byron, who invited Mary Shelley to his house in Geneva for a weekend of merriment and a challenge to write a ghost story, which would become Frankenstein.) In 1842, Lovelace was tasked with translating an article from French into English for Charles Babbage, the “Grandfather of the Computer.” Babbage’s piece was about his Analytical Engine, a revolutionary new automatic calculating machine. Although originally retained solely to translate the article, Lovelace also scribbled extensive ideas about the machine into the margins, adding her unique insight, seeing that the Analytical Engine could be used to decode symbols and to make music, art, and graphics. Her notes, which included a method for calculating the Bernoulli numbers sequence and for what would become known as the “Lovelace objection,” were the first computer programs on record, even though the machine could not actually be built at the time.1

Her contributions were astonishing. Though never formally trained as a mathematician, Lovelace was able to see beyond the limitations of Babbage’s invention and imagine the power and potential of programmable computers. She was also a woman, and women in the first half of the 19th century were typically not seen as suited for this type of career. Lovelace had to sign her work with just her initials because women weren’t thought of as proper authors at the time.2 Still, she persevered,3 and her work, which would eventually be considered the world’s first computer algorithm, later earned her the title of the first computer programmer.

Lovelace was an imaginative and poetic mathematician, who said that the Analytical Engine “weaves algebraic patterns just as the Jacquard loom weaves flowers and leaves,” and called mathematics “poetical science.” She arrived in the field educated but also unshackled by conventional training, and so was able to envision that this new type of computing machine could be used for far more than just numbers and quantities.

Ada Lovelace took us “from calculation to computation,” and nearly two centuries later, her visionary insights have proved true. She received little recognition for her contributions at the time and didn’t receive an official New York Times obituary until 2018, when the Times decided to go back and eulogize the many women and people of color the newspaper had overlooked since 1851. She was able to see the vast potential of the computer in the mid-19th century, and her creative and unconventional approach to mathematical exploration has much to teach us about the power of diversity, inclusion, and multidisciplinary, cross-pollinating intelligence.

We all have a role to play in building AI and ensuring that this revolutionary technology is used for the benefit of all. What kind of skills and intelligence will be required to build our best technological future? How will we avoid the pitfalls of homogeneity? Almost all of the major advances in AI development are currently being made in silos, disparate laboratories, secret government facilities, elite academic institutions, and the offices of very large companies working independently throughout the world. Few private companies (as of this writing) are actively sharing their work with competitors, despite the efforts of such organizations as OpenAI, the MIT-IBM Watson AI Lab, and the Future of Life Institute to bring awareness to the importance of transparency in building AI. Keeping intellectual property secret is deeply ingrained in the culture of private enterprise, but with the acceleration of AI technology development and proliferation, our public duty to one another is such that we have to prioritize transparency, accountability, fairness, and ethical decision-making.

The people working in the various fields of AI are presently doing so with little or no oversight outside of a few self-imposed ethical guidelines. They have no consistent set of laws or regulations to guide them, in general or within industries. The intelligent machine gold rush is still in its Wild West phase, and there are huge financial rewards on the line. Many believe that the first trillionaire will be an AI entrepreneur.

While the rewards of inventing the next generation of smart tech are undoubtedly attracting the best and the brightest from around the world (and there is currently a substantial demand for AI experts), for the most part they constitute a homogeneous group of people. Many of AI’s foundational concepts were created by an even less diverse set of people. The building blocks of AI are incredibly eclectic, in that they draw from such distinct fields as psychology, neuroscience, biomimicry, and computer science, yet the demographic of AI’s developers does not reflect this diversity. Researcher Timnit Gebru attended the Neural Information Processing Systems conference in 2016, which drew approximately 8,500 people. She counted six black people among them, and she was the only black woman. If the players are all very similar, the game is already stacked.

The teams designing smart technology represent some of the most astute computer scientists working today, and they have made and will continue to make extraordinary contributions to science. However, these brilliant people, for the most part—except for some of those writing on the subject, and some of the coalitions calling for more transparency in AI research—are working in isolation. The result is a silo effect. To avoid the most harmful repercussions of the silo effect, we need to be having a broader discussion about the homogeneity of the people involved in artificial intelligence development.

Although many of the current leaders in the AI field have been trained at the most prestigious schools and have earned advanced degrees, most have received virtually no training in the ethical ramifications of creating intelligent machines, largely because such training has not historically been a standard expectation for specialists in the field. While some pilot programs are underway, including a new AI college at MIT, courses on ethics, values, and human rights are not yet integral parts of the computer and engineering sciences curriculum. They must be.

The current educational focus on specialized skills and training in a field such as computer science can also discourage people from looking beyond the labs and organizations in which they already exist. In the next generation of AI education, we will need to guard against such overspecialization. It is crucial that we institute these pedagogical changes at every level, including for the very youngest future scientists and policy-makers. According to Area9 cofounder Ulrik Juul Christensen, “discussion is rapidly moving to the K-12 education system, where the next generation must prepare for a world in which advanced technology such as artificial intelligence and robotics will be the norm and not the novelty.”

Some of the biggest players presently in the AI game are the giant technology companies, such as Google, Facebook, Microsoft, Baidu, Alibaba, Apple, Amazon, Tesla, IBM (which built Watson), and DeepMind (which made AlphaGo and was acquired by Google). These companies swallow up the smaller AI companies at a rapid rate. This consolidation of technological knowledge within a few elite for-profit companies is ascendant and will continue to rise due to conventional power dynamics. We will need, among many other societal changes, incentives to encourage entrepreneurship that can spawn smaller, more agile, and more diverse companies in this space. Given the economic trends toward tech monopolies and against government intervention in corporate power consolidation, we have to counter not only by investing in creative AI start-ups, but also by educating the public on how important it is to infuse transparency, teamwork, and inclusive thinking into the development of AI.

The demand for the most accomplished people working in AI and related fields is fierce, and the relatively small number of corporations that control enormous resources can offer significant compensation. Even such elite universities as Oxford and Cambridge are complaining that tech giants are stealing all of their talent. On the federal level, the U.S. Department of Defense’s Defense Advanced Research Projects Agency is readying AI for the government’s military use. Governments and tech giants from Russia to China are hard at work in a competition to build the most robust intelligent technology. While each nation is covert about its process, sources indicate that China and Russia are outpacing the United States in AI development in what is being called the next space race.

Concentrating AI talent in a very small and secretive group of organizations sets a dangerous precedent that can inhibit democratization of the technology. It also means that less rigorous academic research is being conducted and published than could be achieved if ideas were shared more freely. With a primarily capitalistic focus on growth, expansion, and profit, the pendulum of public discourse swings away from a deeper understanding of the philosophical and human repercussions of building these tools—topics that researchers and those outside these siloed environments are freer to debate in academic institutions.

To better manage the looming menaces posed by developing smart technologies, let’s invite the largest possible spectrum of thought into the room. This commitment must go beyond having diverse voices, though that is a critical starting point. To collaborate effectively (and for good), we must move toward collective intelligence that harnesses various skills, backgrounds, and resources to gather not only smart individuals but also smart teams. Thomas W. Malone, in his book Superminds, reminds us that our collective intelligence—not the genius of isolated individuals—is responsible for almost all human achievement in business, government, science, and beyond. And with intelligent tech, we are all about to get a lot smarter.

Harnessing our collective ingenuity can help us move past complacency and realize our best future. The company Unanimous AI uses swarm intelligence technology (sort of like a hive mind) inspired by swarms in nature in order to amplify human wisdom, knowledge, and intuition, optimizing group dynamics to enhance decision-making. Another idea will be to consider more open-source algorithms to better support algorithmic transparency and information sharing. Open-source allows developers to access the public work of others and build upon it. More aspirationally, we must endeavor to design a moral compass with a broad group of contributors and apply it throughout the entire AI ecosystem.

The STEM fields are relatively homogeneous, with few women, people of color, people of different abilities, and people of different socioeconomic backgrounds. To take one example, 70 percent of computer science majors at Stanford are male. When attending a Recode conference on the impact of digital technology, Microsoft researcher Margaret Mitchell, looking out at the attendees, observed “a sea of dudes.” This lack of representation poses many problems, and one is that the biases of this homogeneous group will become ingrained in the technology it creates.

Generally speaking, in the U.S., 83.3 percent of “high tech” executives are white and 80 percent are male. The U.S. Equal Employment Opportunity Commission says that amid major economic growth in the high-tech sector, “diversity and inclusion in the tech industry have in many ways gotten worse.” Women currently earn a smaller percentage of computer science degrees than they did almost thirty years ago: “In 2013, only 26 percent of computing professionals were female—down considerably from 35 percent in 1990 and virtually the same as in 1960.”

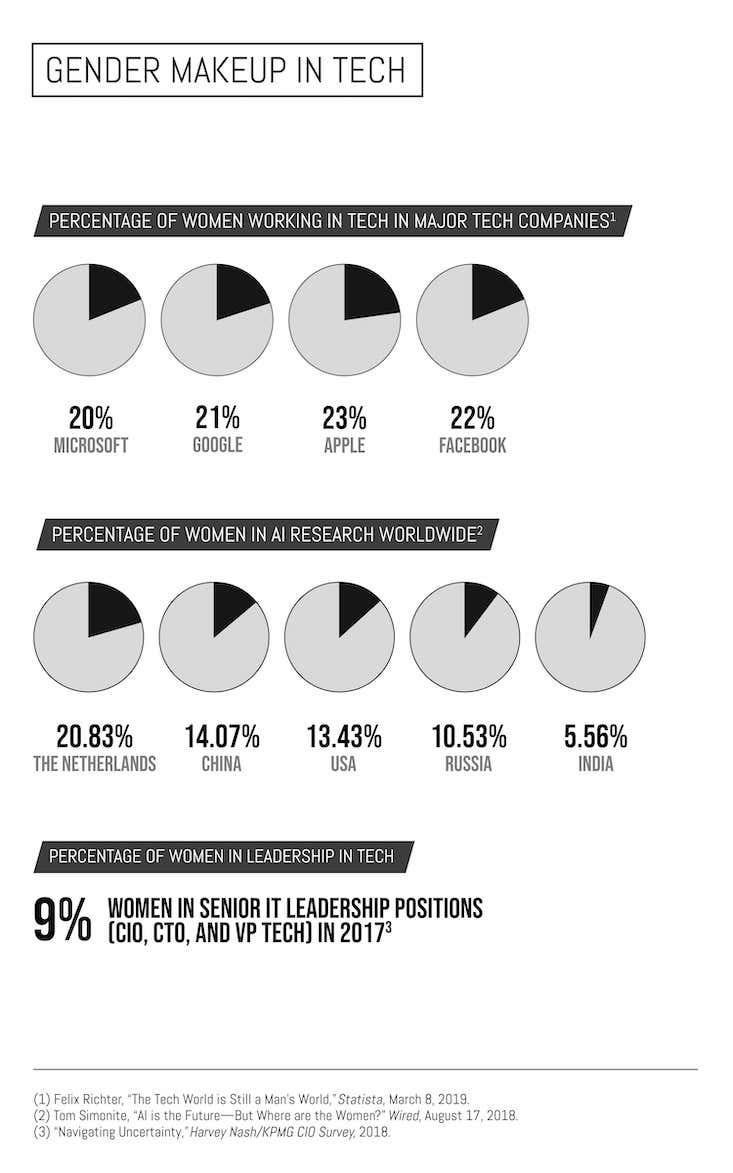

Jeff Dean, the head of AI at Google, said in August 2016 he was more worried about a lack of diversity in AI than he was about an AI dystopia. Yet, a year later, the Google Brain team was 94 percent male and over 70 percent white. Many AI organizations do not share any diversity data, but based on the information that is publicly available, the teams often appear homogeneous, including those that have been profiled as the future of AI. The 2018 World Economic Forum Global Gender Gap report found that only 22 percent of AI professionals around the world are women. The cycle perpetuates itself when training programs are open only to those already in the circle, as opposed to the thousands from underrepresented groups who graduate with degrees in computer science and related disciplines.

As of 2018, Google reported a 69 percent male workforce, with 2.5 percent black and 3.6 percent Latinx employees. At Facebook, 4 percent of employees are black and 5 percent are Hispanic. Overall, men hold 74.5 percent of Google’s leadership positions. Only 10 percent of those working on “machine intelligence” at Google are women, and only 12 percent of the leading machine-learning researchers worldwide are women.

Women were the first computer programmers. However, women who are trained in the tech fields today tend to eventually flee in large numbers, due to an office culture riddled with biases that are often unconscious and permeate every aspect of the field. In general, funding for more diverse tech founders and for companies that are not led by white, cisgender men4 is so low that Melinda Gates has said she no longer wants to invest in “white guys in hoodies,” preferring to focus on women- and minority-led initiatives.

The lack of diversity infiltrates every level of technology, including the people providing its financial backing. Venture capital firms are 70 percent white, 3 percent black, and 18 percent women (and 0 percent black women as recently as 2016). A shocking 40 percent of all major venture capital executives in the U.S. went to just two schools: Harvard University and Stanford University.

This means that those financing our most vital new tech businesses are homogeneous along racial, gender, and cognitive lines and thus highly prone to funding people who look and think like them. Other intersecting factors, such as age, ability, and cultural/socioeconomic background, also contribute to this cycle of homogeneity. This inherent bias is evident throughout the technology ecosystem, where the limited amount of money available to underrepresented groups matches their underrepresentation in the industry.

There are, fortunately, individuals and organizations who are encouraging multifarious groups of people to pursue careers in technology, including Women in Machine Learning, Black in AI, Lesbians Who Tech, Trans Code, Girls Who Code, Black Girls Code, and Diversity AI, all of whom are trying very hard to help poorly represented groups break into the industry. In the words of Timnit Gebru, the cofounder of Black in AI: “We’re in a diversity crisis.” It’s a myth that there is a pipeline problem. The reality is that there is an unconscious bias problem.

The absence of heterogeneity is more pronounced in technology than in other industry sectors.5 And the discouraging numbers include only publicly available data. For example, even getting access to statistics for tech employees with disabilities is a challenge. More research, data, and action are sorely needed around confronting bias in the field of AI and the complex, structural, and deeply rooted issues surrounding the intersections of gender, ability, race, sexuality, socioeconomic status, discrimination, and power.

The perpetuation of stereotypes and cloistered educational pipelines creates a vicious cycle of homogeneity and in-group bias. Consciously or not, many of us prefer people who are like ourselves and who already exist within our own social circles, which can induce discrimination. The fact that holding these biases is unintentional is no excuse. If the homogeneity in the field of AI development continues, we risk infusing and programming a predictable set of prejudices into our intelligent digital doppelgängers.

The 2018 U.S. Congressional Facebook hearings demonstrated how little our technology scions and government officials understand each other. Some legislators spent their limited time asking for explanations of basic elements of how Facebook works, revealing digital illiteracy while Facebook’s representatives remained as tight-lipped as possible. Large gaps in understanding still exist between the tech community, associated industries, and the elected representatives entrusted to regulate them. Without a far better public understanding of the science, we are not capable as a society of monitoring the companies and platforms, let alone the technology.

Our political leaders don’t know tech and our tech leaders haven’t decoded how to program values or to detect intersecting layers of societal bias. We have to find ways to bridge this chasm, because our major technological advances will only truly progress through collective intelligence, which requires both human capabilities and evolving machine capabilities working together.

The machines we are training to teach themselves will become determinant in more and more outcomes, so our chance to include diverse voices has a limited horizon. Trying to build global consensus on climate issues and solutions has, frustratingly, taken many decades to even get to an assemblage like the Paris Accord (which is still very much debated and in peril). The introduction of intelligent machines into almost all facets of human life offers no such runway. If we respond with the same apathy as we have to the confirmed threats to our environment, we may have little say in it at all.

Part of our myopia and inability to acknowledge and accept big-picture views is precipitated by education systems that push us into narrow disciplines of study, especially in the sciences. We used to generalize more often and thereby gain a broader array of knowledge; now we often specialize in the sciences and math very early. I believe that bridging the curriculum divide between the sciences and the humanities and offering opportunities for collaborations across specialties will be essential. Very few liberal arts graduates end up as scientists, and not many engineers pursue careers in the humanities, as we can get corralled into fenced-off fields of study early on in our lives. The siloed thinking that can occur in certain educational settings can abet reductionist thinking, impeding conversations across disciplines and blocking cross-pollination of thought and deliberation—the building blocks of new ideas.

Expanding science and technology curricula to include courses on ethical decision-making is one way to start, along with encouraging more diverse individuals to consider STEM education. For AI to flourish, we will also need linguists and biologists consulting with child psychologists, zoologists, and mathematicians. Rethinking our definitions of “expert” and “genius,” as well as what and who is “exceptional,” is also key. A future world that is fair and enriching for us all is going to require skilled empaths as well as designers who can reimagine work environments for human-AI partnerships. It will also urgently require those endowed with real-life experience in creative problem-solving, critical thinking, ethical leadership and decision-making, human rights protections, and social justice.

The paradox of achieving heterogeneity is complicated by the fact that in the not-too-distant future much of our lives will be spent in virtual worlds that we create for ourselves. We may lose the ability to contemplate and investigate our tangible world as we more readily did in the past. As Monica Kim writes in The Atlantic:

If virtual reality becomes a part of people’s day-to-day lives, more and more people may prefer to spend a majority of their time in virtual spaces. Futurist Ray Kurzweil predicted, somewhat hyperbolically, in 2003, “By the 2030s, virtual reality will be totally realistic and compelling and we will spend most of our time in virtual environments . . . We will all become virtual humans.” In theory, such escapism is nothing new—as critics of increased TV, Internet, and smartphone usage will tell you—but as VR technology continues to blossom, the worlds that they generate will become increasingly realistic, as Kurzweil explained, creating a greater potential for overuse.

As we are more able to shape the virtual worlds we inhabit in our own image, we will also need to be more intentional about breaking out of silos and filter bubbles to explore ways of living, thinking, and being that are unlike us, and not of our own creation.

To evolve into a society that can coexist with our superintelligent creations, we need to reconsider our education systems; revise our concepts of intelligence and genius; and imagine a life where unique human characteristics are embraced while our synthetically intelligent machines assume many of our more mundane tasks.

Flynn Coleman is a writer, international human rights attorney, public speaker, professor, and social innovator. She has worked with the U.N., the U.S. federal government, international corporations, and human rights organizations, and has written about global citizenship, the future of work and purpose, political reconciliation, war crimes, genocide, human and civil rights, humanitarian issues, innovation and design for social impact, and improving access to justice and education. She lives in New York City. A Human Algorithm is her first book.

Excerpted from A Human Algorithm: How Artificial Intelligence Is Redefining Who We Are by Flynn Coleman. Published with permission from Counterpoint Press. Copyright © 2019 by Flynn Coleman.

Footnotes

1. Some say that it was Lovelace’s work that inspired Alan Turing’s. Dominic Selwood, “Ada Lovelace Paved the Way for Alan Turing’s More Celebrated Codebreaking a Century Before He was Born,” Telegraph UK, December 10, 2014, www.telegraph.co.uk/technology/11285007/Ada-Lovelace-paved-the-way-for-Alan-Turings-more-celebrated-codebreaking-a-century-before-he-was-born.html.

2. “As people realized how important computer programming was, there was a greater backlash and an attempt to reclaim it as a male activity,” says Valerie Aurora, the executive director of the Ada Initiative, a nonprofit organization that arranges conferences and training programs to elevate women working in math and science. “In order to keep that wealth and power in a man’s hands, there’s a backlash to try to redefine it as something a woman didn’t do, and shouldn’t do, and couldn’t do.”

The numbers are still low today. “The Census Bureau [found] that the share of women working in STEM (science, technology, engineering, and math) has decreased over the past couple of decades; this is due largely to the fact that women account for a smaller proportion of those employed in computing. In 1990, women held thirty-four per cent of STEM jobs; in 2011, it was twenty-seven per cent.”

Betsy Morais, “Ada Lovelace, the First Tech Visionary,” New Yorker, October 15, 2013, www.newyorker.com/tech/elements/ada-lovelace-the-first-tech-visionary.

3. Lovelace had access to great tutors, including Mary Somerville, the first scientist (rather than “man of science”), a creative and artistic scientific mind. Christopher Hollings, Ursula Martin, and Adrian Rice, “The Early Mathematical Education of Ada Lovelace,” Journal of the British Society for the History of Mathematics, 32:3 (2017): 221–234, DOI: 10.1080/17498430.2017.1325297.

4. Cisgender is a trans-inclusionary term meaning anyone who identifies with their gender assigned at birth.

5. “Compared to overall private industry, the high tech sector employed a larger share of whites (63.5 percent to 68.5 percent), Asian Americans (5.8 percent to 14 percent) and men (52 percent to 64 percent), and a smaller share of African Americans (14.4 percent to 7.4 percent), Hispanics (13.9 percent to 8 percent), and women (48 percent to 36 percent).” “Diversity in High Tech,” US Equal Employment Opportunity Commission, accessed September 3, 2018, www.eeoc.gov/eeoc/statistics/reports/hightech.

References

George Anders, You Can Do Anything: The Surprising Power of a “Useless” Liberal Arts Education (New York: Little, Brown, and Company, 2017)

Amy Rees Anderson, “No Man Is Above Unconscious Gender Bias in The Workplace—It’s ‘Unconscious,’” Forbes, December 14, 2016, www.forbes.com/sites/amyanderson/2016/12/14/no-man-is-above-unconscious-gender-bias-in-the-workplace-its-unconscious/#d055ac512b42

Johana Bhuiyan, “The Head of Google’s Brain Team is More Worried About the Lack of Diversity in Artificial Intelligence than an AI Apocalypse,” Recode, August 13, 2016, www.recode.net/2016/8/13/12467506/google-brain-jeff-dean-ama-reddit-artificial-intelligence-robot-takeover

Danielle Brown, “Google Diversity Annual Report 2018,” accessed September 27, 2018, diversity.google/annual-report/#!#_our-workforce

Caroline Bullock, “Attractive, Slavish and at Your Command: Is AI Sexist?” BBC News, December 5, 2016, www.bbc.com/news/business-38207334

Ulrik Juul Christensen, “Robotics, AI Put Pressure on K-12 Education to Adapt and Evolve,” The Hill, September 1, 2018, thehill.com/opinion/education/404544-robotics-ai-put-pressure-on-k-12-to-adapt-and-evolve

Zachary Cohen, “US Risks Losing Artificial Intelligence Arms Race to China and Russia,” CNN Politics, November 29, 2017, www.cnn.com/2017/11/29/politics/us-military-artificial-intelligence-russia-china/index.html

Mark Cuban, “The World’s First Trillionaire Will Be an Artificial Intelligence Entrepreneur,” CNBC, March 13, 2017, www.cnbc.com/2017/03/13/mark-cuban-the-worlds-first-trillionaire-will-be-an-ai-entrepreneur.html

Jorge Cueto, “Race and Gender Among Computer Science Majors at Stanford,” Medium, July 13, 2015, medium.com/@jcueto/race-and-gender-among-computer-science-majors-at-stanford-3824c4062e3a

Ciarán Daly, “‘We’re in a Diversity Crisis’—This Week in AI,” AI Business, February 15, 2018, aibusiness.com/interview-infographic-must-read-ai-news

Katherine Dempsey, “Democracy Needs a Reboot for the Age of Artificial Intelligence,” Nation, November 8, 2017, www.thenation.com/article/democracy-needs-a-reboot-for-the-age-of-artificial-intelligence

James Essinger, Ada’s Algorithm: How Lord Byron’s Daughter Ada Lovelace Launched the Digital Age (Brooklyn: Melville House, 2014).

John Fuegi and Jo Francis, “Lovelace & Babbage and the Creation,” IEEE Annals of the History of Computing, Vol. 25, Issue 4 (October–December 2003): 16–26.

Aleta George, “Booting Up a Computer Pioneer’s 200-Year-Old Design,” Smithsonian Magazine, April 1, 2009, www.smithsonianmag.com/science-nature/booting-up-a-computer-pioneers-200-year-old-design-122424896

Nico Grant, “The Myth of the ‘Pipeline Problem,’” Bloomberg, June 13, 2018, www.bloomberg.com/news/articles/2018-06-13/the-myth-of-the-pipeline-problem-jid07tth

Jessi Hempel, “Melinda Gates and Fei-Fei Li Want to Liberate AI from ‘Guys with Hoodies,’” Wired, May 4, 2017, www.wired.com/2017/05/melinda-gates-and-fei-fei-li-want-to-liberate-ai-from-guys-with-hoodies

Jane C. Hu, “Group Smarts,” Aeon, October 3, 2016, aeon.co/essays/how-collective-intelligence-overcomes-the-problem-of-groupthink

Annette Jacobson, “Why We Shouldn’t Push Students to Specialize in STEM Too Early,” PBS NewsHour, September 5, 2017, www.pbs.org/newshour/education/column-shouldnt-push-students-specialize-stem-early

Rebecca M. Jordan-Young, Brain Storm: The Flaws in the Science of Sex Differences (Cambridge, MA: Harvard University Press, 2011).

Yasmin Kafai, “Celebrating Ada Lovelace,” MIT Press, October 13, 2015, mitpress.mit.edu/blog/changing-face-computing—one-stitch-time

Richard Kerby, “Where Did You Go to School?” Noteworthy, July 30, 2018, blog.usejournal.com/where-did-you-go-to-school-bde54d846188

Monica Kim, “The Good and the Bad of Escaping to Virtual Reality,” The Atlantic, February 18, 2015, www.theatlantic.com/health/archive/2015/02/the-good-and-the-bad-of-escaping-to-virtual-reality/385134

Pierre Lévy, Collective Intelligence: Mankind’s Emerging World in Cyberspace (New York: Basic Books, 1994), 13.

Steve Lohr, “M.I.T. Plans College for Artificial Intelligence, Backed by $1 Billion,” New York Times, October 15, 2018, www.nytimes.com/2018/10/15/technology/mit-college-artificial-intelligence.html

John Markoff, “It Started Digital Wheels Turning,” New York Times, November 7, 2011, www.nytimes.com/2011/11/08/science/computer-experts-building-1830s-babbage-analytical-engine.html

Claire Cain Miller, “Overlooked: Ada Lovelace,” New York Times, March 8, 2018, www.nytimes.com/interactive/2018/obituaries/overlooked-ada-lovelace.html

Oliver Milman, “Paris Deal: A Year After Trump Announced US Exit, a Coalition Fights to Fill the Gap,” Guardian, June 1, 2018, www.theguardian.com/us-news/2018/may/31/paris-climate-deal-trump-exit-resistance

Talia Milgrom-Elcott, “STEM Starts Earlier Than You Think,” Forbes, July 24, 2018, www.forbes.com/sites/taliamilgromelcott/2018/07/24/stem-starts-earlier-than-you-think/#150b641a348b

Cade Metz, “Google Just Open Sourced TensorFlow, its Artificial Intelligence Engine,” Wired, November 9, 2015, www.wired.com/2015/11/google-open-sources-its-artificial-intelligence-engine

Steve O’Hear, “Tech Companies Don’t Want to Talk about the Lack of Disability Reporting,” Techcrunch, November 7, 2016, techcrunch.com/2016/11/07/parallel-pr-universe

Erik Sherman, “Report: Disturbing Drop in Women in Computing Field,” Fortune, March 26, 2015, fortune.com/2015/03/26/report-the-number-of-women-entering-computing-took-a-nosedive

Sam Shead, “Oxford and Cambridge Are Losing AI Researchers to DeepMind,” Business Insider, November 9, 2016, www.businessinsider.com/oxbridge-ai-researchers-to-deepmind-2016-11

Jackie Snow, “We’re in a Diversity Crisis: Cofounder of Black in AI on What’s Poisoning Algorithms in Our Lives,” MIT Technology Review, February 14, 2018, www.technologyreview.com/s/610192/were-in-a-diversity-crisis-black-in-ais-founder-on-whats-poisoning-the-algorithms-in-our

Christopher Summerfield, Matt Botvinick, and Demis Hassabis, “AI and Neuroscience,” DeepMind, accessed September 27, 2018, deepmind.com/blog/ai-and-neuroscience-virtuous-circle

Nicola Perrin and Danil Mikhailov, “Why We Can’t Leave AI in the Hands of Big Tech,” Guardian, November 3, 2017, www.theguardian.com/science/2017/nov/03/why-we-cant-leave-ai-in-the-hands-of-big-tech

Maria Popova, “The Art of Chance-Opportunism in Creativity and Scientific Discovery,” Medium, accessed September 3, 2018, www.brainpickings.org/2012/05/25/the-art-of-scientific-investigation-1; William I. B. Beveridge, The Art of Scientific Investigation (New Jersey: Blackburn Press, 2004).

Tom Simonite, “AI is the Future—But Where are the Women?” Wired, August 17, 2018, www.wired.com/story/artificial-intelligence-researchers-gender-imbalance

Dorothy Stein, Ada: A Life and A Legacy (Cambridge, MA: MIT Press, October 1985).

Victor Tangermann, “Hearings Show Congress Doesn’t Understand Facebook Well Enough to Regulate It,” Futurism, April 11, 2018, futurism.com/hearings-congress-doesnt-understand-facebook-regulation

Gillian Tett, The Silo Effect: The Peril of Expertise and the Promise of Breaking Down Barriers (New York: Simon and Schuster, 2015).

Rachel Thomas, “Diversity Crisis in AI, 2017 Edition,” fast.ai, August 16, 2017, www.fast.ai/2017/08/16/diversity-crisis

Rachel Thomas, “If You Think Women in Tech is Just a Pipeline Problem, You Haven’t Been Paying Attention,” Medium, July 27, 2015, medium.com/tech-diversity-files/if-you-think-women-in-tech-is-just-a-pipeline-problem-you-haven-t-been-paying-attention-cb7a2073b996

Clive Thompson, “The Secret History of Women in Coding,” New York Times, February 13, 2019, www.nytimes.com/2019/02/13/magazine/women-coding-computer-programming.html

Derek Thompson, “America’s Monopoly Problem,” The Atlantic, October 2016, www.theatlantic.com/magazine/archive/2016/10/americas-monopoly-problem/497549

Betty Alexandra Toole, Ada, The Enchantress of Numbers: The Prophet of the Computer Age (Sausalito: Strawberry Press, 1998), 175–178, www.cs.yale.edu/homes/tap/Files/ada-bio.html

Betty Alexandra Toole, Ada, The Enchantress of Numbers: Poetical Science.

Maxine Williams, “Facebook 2018 Diversity Report: Reflecting on Our Journey,” July 12, 2018, newsroom.fb.com/news/2018/07/diversity-report.

Fareed Zakaria, In Defense of a Liberal Education (New York: W. W. Norton & Company, 2016).

“Ada Lovelace,” Computer History, accessed September 3, 2018, www.computerhistory.org/babbage/adalovelace

“Advancing Opportunity for All in the Tech Industry,” US Equal Employment Opportunity Commission, May 18, 2016, www.eeoc.gov/eeoc/newsroom/release/5-18-16.cfm

“Diversity in High Tech,” US Equal Employment Opportunity Commission, accessed September 3, 2018, www.eeoc.gov/eeoc/statistics/reports/hightech

“How Facebook Has Handled Recent Scandals,” Letters to the Editor, New York Times, November 16, 2018, www.nytimes.com/2018/11/16/opinion/letters/facebook-scandals.html

“Ingroup Favoritism and Prejudice,” Principles of Social Psychology, accessed September 27, 2018, opentextbc.ca/socialpsychology/chapter/ingroup-favoritism-and-prejudice.

IEEE Spectrum, accessed September 3, 2018, spectrum.ieee.org/tech-talk/at-work/tech-careers/computer-vision-leader-feifei-li-on-why-ai-needs-diversity

Partnership on AI, accessed September 3, 2018, www.partnershiponai.org

“Science and Engineering Degrees, By Race/Ethnicity of Recipients: 2002–12,” National Science Foundation, May 21, 2015, www.nsf.gov/statistics/2015/nsf15321/#chp2

“The Global Gender Gap Report 2018,” World Economic Forum, accessed December 22, 2018, www3.weforum.org/docs/WEF_GGGR_2018.pdf

Lead image by Supamotion / Shutterstock