Reprinted with permission from Quanta Magazine‘s Abstractions blog.

The 61st International Mathematical Olympiad, or IMO, began yesterday. It may go down in history for at least two reasons: Due to the COVID-19 pandemic it’s the first time the event has been held remotely, and it may also be the last time that artificial intelligence doesn’t compete.

Indeed, researchers view the IMO as the ideal proving ground for machines designed to think like humans. If an AI system can excel here, it will have matched an important dimension of human cognition.

“The IMO, to me, represents the hardest class of problems that smart people can be taught to solve somewhat reliably,” said Daniel Selsam of Microsoft Research. Selsam is a founder of the IMO Grand Challenge, whose goal is to train an AI system to win a gold medal at the world’s premier math competition.

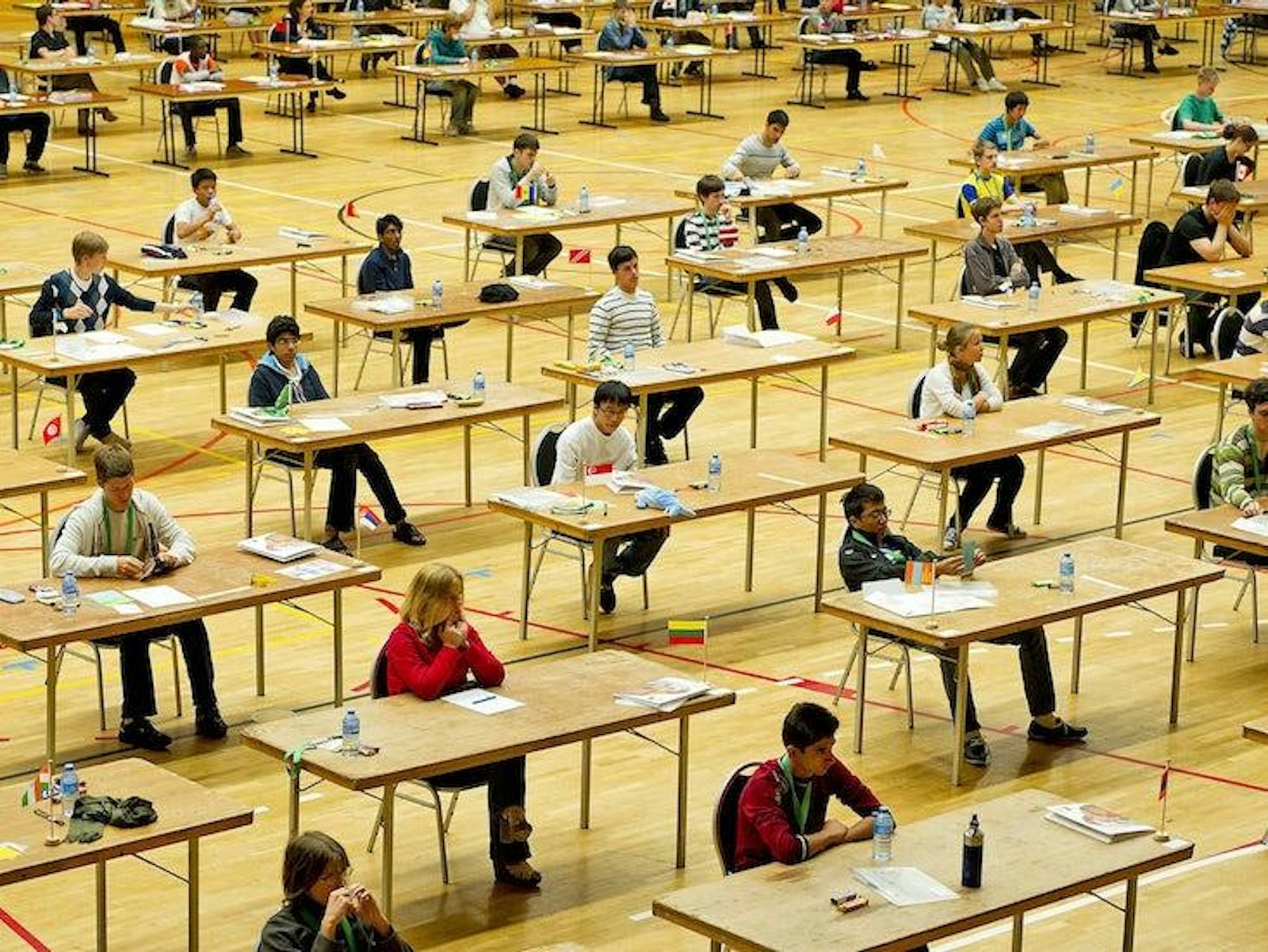

Since 1959, the IMO has brought together the best pre-college math students in the world. On each of the competition’s two days, participants have four and a half hours to answer three problems of increasing difficulty. They earn up to seven points per problem, and top scorers take home medals, just like at the Olympic Games. The most decorated IMO participants become legends in the mathematics community. Some have gone on to become superlative research mathematicians.

A lot of what mathematicians do when they approach a new problem is ineffable.

IMO problems are simple, but only in the sense that they don’t require any advanced math—even calculus is considered beyond the scope of the competition. They’re also fiendishly difficult. For example, here’s the fifth problem from the 1987 competition in Cuba:

Let n be an integer greater than or equal to 3. Prove that there is a set of n points in the plane such that the distance between any two points is irrational and each set of three points determines a non-degenerate triangle with rational area.

Like many IMO problems, this one might appear impossible at first.

“You read the questions and think, ‘I can’t do that,’” said Kevin Buzzard of Imperial College London, a member of the IMO Grand Challenge team and gold medalist at the 1987 IMO. “They’re extremely hard questions that are accessible to schoolchildren if they put together all the ideas they know in a brilliant way.”

Solving IMO problems often requires a flash of insight, a transcendent first step that today’s AI finds hard—if not impossible.

For example, one of the oldest results in math is Euclid’s proof from 300 BCE that there are infinitely many prime numbers. It begins with the recognition that you can always find a new prime by multiplying all known primes and adding 1. The proof that follows is simple, but coming up with the opening idea was an act of art.

“You cannot get computers to get that idea,” said Buzzard. At least, not yet.

The IMO Grand Challenge team is using a software program called Lean, first launched in 2013 by a Microsoft researcher named Leonardo de Moura. Lean is a “proof assistant” that checks mathematicians’ work and automates some of the tedious parts of writing a proof.

De Moura and his colleagues want to use Lean as a “solver,” capable of devising its own proofs of IMO problems. But at the moment, it cannot even understand the concepts involved in some of those problems. If it’s going to do better, two things need to change.

First, Lean needs to learn more math. The program draws on a library of mathematics called mathlib, which is growing all the time. Today it contains almost everything a math major might know by the end of their second year of college, but with some elementary gaps that matter for the IMO.

The second, bigger challenge is teaching Lean what to do with the knowledge it has. The IMO Grand Challenge team wants to train Lean to approach a mathematical proof the way other AI systems already successfully approach complicated games like chess and Go—by following a decision tree until it finds the best move.

“If we can get a computer to have that brilliant idea by simply having thousands and thousands of ideas and rejecting all of them until it stumbles on the right one, maybe we can do the IMO Grand Challenge,” said Buzzard.

But what are mathematical ideas? That’s surprisingly hard to say. At a high level, a lot of what mathematicians do when they approach a new problem is ineffable.

“A key step in many IMO problems is to basically play around with it and look for patterns,” said Selsam. Of course, it’s not obvious how you tell a computer to “play around” with a problem.

At a low level, math proofs are just a series of very concrete, logical steps. The IMO researchers could try to train Lean by showing it the full details of previous IMO proofs. But at that granular level, individual proofs become too specialized to a given problem.

“There’s nothing that works for the next problem,” said Selsam.

To help with this, the IMO Grand Challenge team needs mathematicians to write detailed formal proofs of previous IMO problems. The team will then take these proofs and try to distill the techniques, or strategies, that make them work. Then they’ll train an AI system to search among those strategies for a “winning” combination that solves IMO problems it’s never seen before. The trick, Selsam observes, is that winning in math is much harder than winning even the most complicated board games. In those games, at least you know the rules going in.

“Maybe in Go the goal is to find the best move, whereas in math the goal is to find the best game and then to find the best move in that game,” he said.

The IMO Grand Challenge is currently a moonshot. If Lean were participating in this year’s competition, “we’d probably get a zero,” said de Moura.

But the researchers have several benchmarks they’re trying to hit before next year’s event. They plan to fill in the holes in mathlib so that Lean can understand all of the questions. They also hope to have the detailed formal proofs of dozens of previous IMO problems, which will begin the process of providing Lean with a basic playbook to draw from.

At that point a gold medal may still be far out of reach, but at least Lean could line up for the race.

“Right now lots of things are happening, but there’s nothing particularly concrete to point to,” said Selsam. “[Next] year it becomes a real endeavor.”

Kevin Hartnett is a senior writer at Quanta Magazine covering mathematics and computer science. His work has been collected in the “Best Writing on Mathematics” series in 2013, 2016 and 2017.