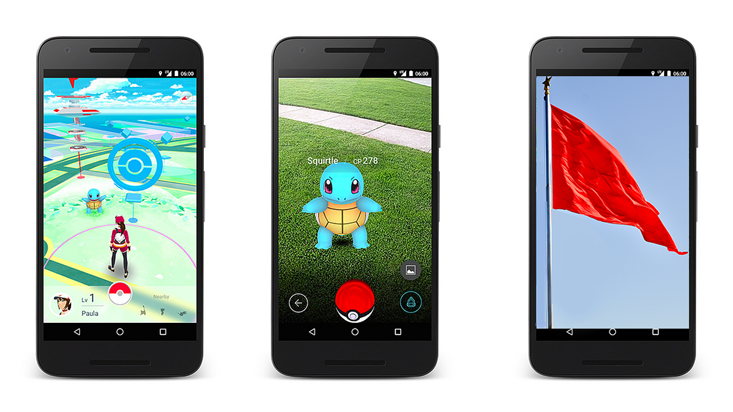

Released in July 2016, Pokémon Go is a location-based, augmented-reality game for mobile devices, typically played on mobile phones; players use the device’s GPS and camera to capture, battle, and train virtual creatures (“Pokémon”) who appear on the screen as if they were in the same real-world location as the player: As players travel the real world, their avatar moves along the game’s map. Different Pokémon species reside in different areas—for example, water-type Pokémon are generally found near water. When a player encounters a Pokémon, AR (Augmented Reality) mode uses the camera and gyroscope on the player’s mobile device to display an image of a Pokémon as though it were in the real world.1 This AR mode is what makes Pokémon Go different from other PC games: Instead of taking us out of the real world and drawing us into the artificial virtual space, it combines the two; we look at reality and interact with it through the fantasy frame of the digital screen, and this intermediary frame supplements reality with virtual elements which sustain our desire to participate in the game, push us to look for them in a reality which, without this frame, would leave us indifferent. Sound familiar? Of course it does. What the technology of Pokémon Go externalizes is simply the basic mechanism of ideology—at its most basic, ideology is the primordial version of “augmented reality.”

The first step in this direction of technology imitating ideology was taken a couple of years ago by Pranav Mistry, a member of the Fluid Interfaces Group at the Massachusetts Institute of Technology Media Lab, who developed a wearable “gestural interface” called “SixthSense.”2 The hardware—a small webcam that dangles from one’s neck, a pocket projector, and a mirror, all connected wirelessly to a smartphone in one’s pocket—forms a wearable mobile device. The user begins by handling objects and making gestures; the camera recognizes and tracks the user’s hand gestures and the physical objects using computer vision-based techniques. The software processes the video stream data, reading it as a series of instructions, and retrieves the appropriate information (texts, images, etc.) from the Internet; the device then projects this information onto any physical surface available—all surfaces, walls, and physical objects around the wearer can serve as interfaces. Here are some examples of how it works: In a bookstore, I pick up a book and hold it in front of me; immediately, I see projected onto the book’s cover its reviews and ratings. I can navigate a map displayed on a nearby surface, zoom in, zoom out, or pan across, using intuitive hand movements. I make a sign of @ with my fingers and a virtual PC screen with my email account is projected onto any surface in front of me; I can then write messages by typing on a virtual keyboard. And one could go much further here—just think how such a device could transform sexual interaction. (It suffices to concoct, along these lines, a sexist male dream: Just look at a woman, make the appropriate gesture, and the device will project a description of her relevant characteristics—divorced, easy to seduce, likes jazz and Dostoyevsky, good at fellatio, etc., etc.) In this way, the entire world becomes a “multi-touch surface,” while the whole Internet is constantly mobilized to supply additional data allowing me to orient myself.

Mistry emphasized the physical aspect of this interaction: Until now, the Internet and computers have isolated the user from the surrounding environment; the archetypal Internet user is a geek sitting alone in front of a screen, oblivious to the reality around him. With SixthSense, I remain engaged in physical interaction with objects: The alternative “either physical reality or the virtual screen world” is replaced by a direct interpenetration of the two. The projection of information directly onto the real objects with which I interact creates an almost magical and mystifying effect: Things appear to continuously reveal—or, rather, emanate—their own interpretation. This quasi-animist effect is a crucial component of the IoT: “Internet of things? These are nonliving things that talk to us, although they really shouldn’t talk. A rose, for example, which tells us that it needs water.”1 (Note the irony of this statement. It misses the obvious fact: a rose is alive.) But, of course, this unfortunate rose does not do what it “shouldn’t” do: It is merely connected with measuring apparatuses that let us know that it needs water (or they just pass this message directly to a watering machine). The rose itself knows nothing about it; everything happens in the digital big Other, so the appearance of animism (we communicate with a rose) is a mechanically generated illusion.

However, this magic effect of SixthSense does not simply represent a radical break with our everyday experience; rather, it openly stages what was always the case. That is to say: In our everyday experience of reality, the “big Other”—the dense symbolic texture of knowledge, expectations, prejudices, and so on—continuously fills in the gaps in our perception. For example, when a Western racist stumbles upon a poor Arab on the street, does he not “project” a complex of such prejudices and expectations onto the Arab, and thus “perceive” him in a certain way? This is why SixthSense presents us with another case of ideology at work in technology: The device imitates and materializes the ideological mechanism of (mis)recognition which overdetermines our everyday perceptions and interactions.

And does not something similar happen in Pokémon Go? To simplify things to the utmost, did Hitler not offer the Germans the fantasy frame of Nazi ideology that made them see a specific Pokémon—“the Jew”—popping up all around, and providing the clue to what one has to fight against? And does the same not hold for all other ideological pseudo-entities that have to be added to reality in order to make it complete and meaningful? One can easily imagine a contemporary anti-immigrant version of Pokémon Go where the player wanders about a German city and is threatened by Muslim immigrant rapists or thieves lurking everywhere. Here we encounter the crucial question: Is the form the same in all these cases, or is the anti-Semitic conspiracy theory which makes us see the Jewish plot as the source of our troubles formally different from the Marxist approach which observes social life as a battleground of economic and power struggles? There is a clear difference between these two cases: In the second case, the “secret” beneath all the confusion of social life is social antagonisms, not individual agents which can be personalized (in the guise of Pokémon figures), while Pokémon Go does inherently tend toward the ideologically personalized perception of social antagonisms. In the case of bankers threatening us from all around, it is not hard to see how such a figure can easily be appropriated by a Fascist populist ideology of plutocracy (as opposed to “honest” productive capitalists). … The point of the parallel between Nazi anti-Semitism and Pokémon Go is thus a very simple and elementary one: Although Pokémon Go presents itself as something new, grounded in the latest technology, it relies on old ideological mechanisms. Ideology is the practice of augmenting reality.

The general lesson from Pokémon Go is that, when we deal with the new developments in Virtual Reality (VR) technology, we usually focus on the prospect of full immersion, thereby neglecting the much more interesting possibilities of Augmented Reality (AR) and Mixed Reality (MR):

• In VR, you wear something on your head (currently, a head-mounted display that can look like a boxy set of goggles or a space helmet) that holds a screen in front of your eyes, which in turn is powered by a computer. Thanks to specialized software and sensors, the experience becomes your reality, filling your vision; at the high end, this is often accompanied by 3-D audio that feels like a personal surround-sound system on your head, or controllers that let you reach out and interact with this artificial world in an intuitive way. The forthcoming development of VR will heighten the level of immersion so that it will feel as if we are fully present in it: When VR users look (and walk) around, their view of that world will adjust in the same way as it would if they were looking or moving in real reality.

• AR takes our view of the real world and adds digital information, from simple numbers or text notifications to a complex simulated screen, making it possible to augment our view of reality with digital information about it without checking another device, leaving both our hands free for other tasks. We thus immediately see reality plus selected data about it that provide the interpretive frame of how to deal with it—for example, when we look at a car, we see the basic data about it on screen.

• But the true miracle is MR: It lets us see the real world and, as part of the same reality, “believable” virtual objects which are “anchored” to points in real space, and thus enable us to treat them as “real.” Say, for example, that I am looking at an ordinary table, but see interactive virtual objects (a person, a machine, a model of a building) sitting on top of it; as I walk around, the virtual landscape holds its position, and when I lean in close, it gets closer in the way a real object would. To some degree, I can then interact with these virtual objects in such a “realistic” way that what I do to them has effects in non-virtual reality (for example, I press a button on the virtual machine and the air-conditioning starts to work in reality).2

We thus have four levels of reality: RR (“real” reality which we perceive and interact with), VR, AR, MR; but is RR really simply reality, or is even our most immediate experience of reality always mediated and sustained by some kind of virtual mechanism? Today’s cognitive science definitely supports the second view—for example, the basic premise of Daniel Dennett’s “heterophenomenology”3 is that subjective experience is the theorist’s (interpreter’s) symbolic fiction, his supposition, not the domain of phenomena directly accessible to the subject. The universe of subjective experience is reconstructed in exactly the same way as we reconstruct the universe of a novel from reading its text. In a first approach, this seems innocent enough, self-evident even: Of course we do not have direct access to another person’s mind, of course we have to reconstruct an individual’s self-experience from his external gestures, expressions and, above all, words. However, Dennett’s point is much more radical; he pushes the parallel to the extreme. In a novel, the universe we reconstruct is full of “holes,” not fully constituted; for example, when Conan Doyle describes Sherlock Holmes’s apartment, it is in a way meaningless to ask exactly how many books there were on the shelves—the writer simply did not have an exact idea of it in his mind. And, for Dennett, it is the same with another person’s experience in “reality”: what one should not do is to suppose that, deep in another’s psyche, there is a full self-experience of which we get only fragments. Even the appearances cannot be saved.

This central point of Dennett can be nicely explained if one contrasts it with two standard positions which are usually opposed as incompatible, but are in effect solidary: first-person phenomenalism and third-person behavioral operationalism. On the one hand, the idea that, even if our mind is merely software in our brains, nobody can take from us the full first-person experience of reality; on the other hand, the idea that, in order to understand the mind, we should limit ourselves to third-person observations which can be objectively verified, and not accept any first-person accounts. Dennett undermines this opposition with what he calls “first-person operationalism”: the gap is to be introduced into my very first-person experience—the gap between content and its registration, between represented time and the time of representation. A nice proto-Lacanian point of Dennett (and the key to his heterophenomenology) is this insistence on the distinction, in homology with space, between the time of representation and the representation of time: They are not the same, i.e., the loop of flashback is discernible even in our most immediate temporal experience—the succession of events ABCDEF … is represented in our consciousness so that it begins with E, then goes back to ABCD, and, finally, returns to F, which in reality directly follows E. So even in our most direct temporal self-experience, a gap akin to that between signifier and signified is already at work: Even here, one cannot “save the phenomena,” since what we (mis)perceive as a directly experienced representation of time (the phenomenal succession ABCDEF …) is already a “mediated” construct from a different time of representation (E/ABCD/F …).

“First-person operationalism” thus emphasizes how, even in our “direct (self-)experience,” there is a gap between content (the narrative inscribed into our memory) and the “operational” level of how the subject constructed this content, where we always have a series of rewritings and tinkerings: “introspection provides us—the subject as well as the ‘outside’ experimenter—only with the content of representation, not with the features of the representational medium itself.”3 In this precise sense, the subject is his own fiction: The content of his own self-experience is a narrativization in which memory traces already intervene. So when Dennett makes “ ‘writing it down’ in memory criterial for consciousness; that is what it is for the ‘given’ to be ‘taken’—to be taken one way rather than another,” and claims that “there is no reality of conscious experience independent of the effects of various vehicles of content on subsequent action (and, hence, on memory),”3 we should be careful not to miss the point: What counts for the concerned subject himself is the way an event is “written down,” memorized—memory is constitutive of my “direct experience” itself, i.e., “direct experience” is what I memorize as my direct experience. Or, to put it in Hegelian terms (which would undoubtedly appall Dennett): Immediacy itself is mediated, it is a product of the mediation of traces. One can also put this in terms of the relationship between direct experience and judgment on it: Dennett’s point is that there is no “direct experience” prior to judgment, i.e., what I (re)construct (write down) as my experience is already supported by judgmental decisions.

For this reason, the whole problem of “filling in the gaps” is a false problem, since there are no gaps to be filled in. Let us take the classic example of our reading a text which contains a lot of printing errors: Most of these errors pass unnoticed, i.e., since, in our reading, we are guided by an active attitude of recognizing patterns, we, for the most part, simply read the text as if there were no mistakes. The usual phenomenological account of this would be that, owing to my active attitude of recognizing ideal patterns, I “fill in the gaps” and automatically, even prior to my conscious perception, reconstitute the correct spelling, so that it appears to me that I am reading the correct text, without mistakes. But what if the actual procedure is different? Driven by the attitude of actively searching for known patterns, I quickly scan a text (our actual perception is much more discontinuous and fragmentary than it may appear), and this combination of an active attitude of searching and fragmented perception leads my mind directly to the conlcusion that, for example, the word I just read is “conclusion,” not “conlcusion,” as it was actually written? There are no gaps to be filled in here, since there is no moment of perceptual experience prior to the conclusion (i.e., judgment) that the word I have just read is “conclusion”: Again, my active attitude drives me directly to the conclusion.

Back to VR, AR, and MR: Is not the conclusion that imposes itself that our “direct” experience of “real” reality is already structured like a mixture of RR, AR, and MR? It is thus crucial to bear in mind that AR and MR “work” because they do not introduce a radical break into our engagement in reality, but mobilize a structure that is already at work in it. There are arguments (drawn from the brain sciences) that something like ideological confabulation is proper to the most elementary functioning of our brain; recall the famous split-brain experiment:

The patient was shown two pictures: of a house in the winter time and of a chicken’s claw. The pictures were positioned so they would exclusively be seen in only one visual field of the brain (the winter house was positioned so it would only be seen in the patient’s left visual field [LVF], which corresponds to the brain’s right hemisphere, and the chicken’s claw was placed so it would only be seen in the patient’s right visual field [RVF], which corresponds to the brain’s left hemisphere).

A series of pictures was placed in front of the patient who was then asked to choose a picture with his right hand and a picture with his left hand. The paradigm was set up so the choices would be obvious for the patients. A snow shovel is used for shoveling the snowy driveway of the winter house and a chicken’s head correlates to the chicken’s claw. The other pictures do not in any way correlate with the two original pictures. The patient chose the snow shovel with his left hand (corresponding to his brain’s right hemisphere) and his right hand chose the chicken’s head (corresponding to the brain’s left hemisphere). When the patient was asked why he had chosen the pictures he had chosen, the answer he gave was astonishing: “The chicken claw goes with the chicken head, and you need a snow shovel to clean out the chicken shed.” Why would he say this? Wouldn’t it be obvious that the shovel goes with the winter house? For people with an intact corpus callosum, yes it is obvious, but not for a split-brain patient. Both the winter house and the shovel are being projected to the patient from his LVF, so his right hemisphere is receiving and processing the information and this input is completely independent from what is going on in the RVF, which involves the chicken’s claw and head (the information being processed in the left hemisphere). The human brain’s left hemisphere is primarily responsible for interpreting the meaning of the sensory input it receives from both fields, however the left hemisphere has no knowledge of the winter house. Because it has no knowledge of the winter house, it must invent a logical reason for why the shovel was chosen. Since the only objects it has to work with are the chicken’s claw and head, the left hemisphere interprets the meaning of choosing the shovel as “it is an object necessary to help the chicken, which lives in a shed, therefore, the shovel is used to clean the chicken’s shed.” Gazzaniga famously coined the term “left brain interpreter” to explain this phenomenon.4

It is crucial to note that the patient “wasn’t ‘consciously’ confabulating”: “The connection between the chicken claw and the shovel was an honest expression of what ‘he’ thought.”5 And is not ideology, at its most elementary, such an interpreter confabulating rationalizations in the conditions of repression? A somewhat simplified example: Let’s imagine the same experiment with two pictures shown to a subject fully immersed in ideology, a beautiful villa and a group of starving miserable workers; from the accompanying cards, he selects a fat rich man (inhabiting the villa) and a group of aggressive policemen (whose task is to squash the workers’ eventual desperate protest). His “left brain interpreter” doesn’t see the striking workers, so how does it account for the aggressive policemen? By confabulating a link such as: “Policemen are needed to protect the villa with the rich man from robbers who break the law.” Were not the (in)famous nonexistent weapons of mass destruction that justified the United States’ attack on Iraq precisely the result of such a confabulation, which had to fill in the void of the true reasons for the attack?

Slavoj Žižek is a Slovenian philosopher and cultural critic. He is a professor at the European Graduate School, International Director of the Birkbeck Institute for the Humanities, Birkbeck College, University of London, and a senior researcher at the Institute of Sociology, University of Ljubljana, Slovenia. His books include First as Tragedy, Then as Farce; Iraq: The Borrowed Kettle; In Defense of Lost Causes; Living in the End Times; and many more.

Excerpted from Incontinence of the Void by Slavoj Žižek published by The MIT Press. © 2017 Massachusetts Institute of Technology. All rights reserved.

References

1. Quoted from Dnevnik newspaper, Ljubljana, Slovenia, Aug. 24, 2016.

2. Johnson, E. What are the differences between virtual, augmented and mixed reality? www.recode.net (2016).

3. Dennett, D.C. Consciousness Explained. Little, Brown, Boston, MA (1991).

4. Gazzaniga, M.S. The split brain revisited. Scientific American 279, 50-55 (1998).

5. Foster, C. Wired to God? Hodder, London (2011).

Photocollage credits: Everett Collection; tangxn / Shutterstock