On March 11, 2021, the auction house Christie’s sold a work by the American graphic designer Michael Winkelmann, a.k.a. Beeple, for a colossal $69 million, making it the third most expensive work ever sold by a living artist. The work, Everydays: The First 5000 Days, is a nonfungible token, or NFT: a unique digital token that cannot be swapped for an equivalent, duplicated, or destroyed, which gives the purchaser proof of authenticity. It lives online in a virtual, immaterial space, on a blockchain, a secure digital public ledger. The file is a mint copy, an original, like the Mona Lisa that hangs in the Louvre. It’s also a work of art.

Everydays exemplifies a new generation of digital art that uses computers in adventurous ways and is pushing boundaries, just as artists from Da Vinci to Picasso to Rothko have always done. Even more exciting is AI art, generated by machines with just a little prompting by humans. It’s exciting because AI art may one day show us how machines see the world.


For 5,000 days straight (nearly 14 years), Beeple created images, sometimes by hand, sometimes digitally, and posted them online. He then assembled them into a vast collage saved as a single JPEG. The individual tiles are colorful and highly imaginative. Some are dystopian sci-fi images, some abstract, some crude sketches, others political or satirical. What I find endlessly fascinating is the sheer variety and detail of the images, which reward zooming in on individual tiles. There are scatological images, people rollerblading among giant juice bottles, disturbing otherworldly images of astronauts, some nightmarish, some surreal, all colorful and inventive. The whole contents of a weird imagination are there to be seen.

Like all original works of art, Everydays has a subject, a theme, and a point of view. Beeple’s images express his take on current events. He resents the accumulation of wealth by the few, is critical of America’s political turbulence, and delves into what’s new on Twitter.

Another element of art is the aesthetic of a work, which sets it apart from other works and is what makes it original. Cubist art had its own aesthetic—the reduction of forms to geometry—and so does Everydays, a sort of sci-fi, comic book, surreal aesthetic. Everydays can also be compared to Hieronymus Bosch’s otherworldly paintings, which must have seemed utterly shocking in their day.

Artists before Beeple had turned their works into NFTs, but Beeple was the first to transform a checkerboard of 5,000 images into a single NFT, which tickled the fancy of Christie’s, no less. But for all the talent and creativity of digital artists like Beeple, it’s AI art, creating images never imagined before, that brings into focus the differences (or, more to the point, the lack of differences) between us and machines.

Beeple’s art is made with digital software packages such as Adobe Photoshop, which let artists manipulate images much as they would wield a paintbrush. AI art, on the other hand, is created by algorithms, the sets of instructions programmed into the machine to tell it what to do. With AI art, it’s the machine that wields the paintbrush.

Chief among artists who employ artificial intelligence in their creations is Mario Klingemann. Klingemann uses artificial neural networks, machines whose architecture loosely resembles the way the human brain is wired. Specifically, he uses a GAN (generative adversarial network), made up of two such networks: a generator and a discriminator. The discriminator is trained on real data, often digital images. The generator creates images from, essentially, nothing (random noise) and passes them to the discriminator, which assesses whether each image is real or fake.

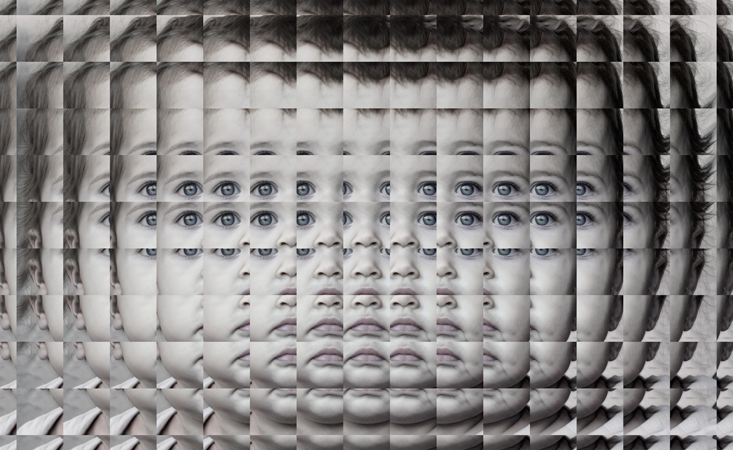

Klingemann’s discriminator network is trained on thousands of faces harvested from the Web. At first the generator creates formless blobs that the discriminator rejects. But each rejection tells the generator how to adjust itself, and what it learns accumulates in the network as a kind of memory. Soon it is producing not blobs but faces, faces that belong to no one in our world. We could say that the generator network is imagining, beginning to construct an inner world of its own. In this way Klingemann created Mitosis, named after the cell division by which an embryo begins to form.
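The adversarial loop described above can be sketched in a few dozen lines of plain Python. This is a deliberately tiny, hypothetical toy, not Klingemann’s system: the “images” are single numbers drawn from a bell curve centered on 4, the generator is a straight line `g(z) = a*z + b` fed with random noise, and the discriminator is a single logistic neuron. The names `ToyGAN`, `d_step`, and `g_step` are inventions for illustration. Note that the generator never sees the real data and stores no rejected samples; the discriminator’s verdicts only nudge its two parameters.

```python
import math
import random


def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


class ToyGAN:
    """A deliberately tiny 1-D GAN (hypothetical, for illustration only).

    Generator:     g(z) = a*z + b, turning random noise z into a "fake" sample.
    Discriminator: D(x) = sigmoid(w*x + c), its estimate that x is real.
    """

    def __init__(self, seed=0):
        self.rng = random.Random(seed)
        self.a, self.b = 1.0, 0.0  # generator parameters
        self.w, self.c = 0.0, 0.0  # discriminator parameters

    def generate(self, zs):
        return [self.a * z + self.b for z in zs]

    def discriminate(self, x):
        return sigmoid(self.w * x + self.c)

    def d_step(self, real, fake, lr=0.05):
        # Discriminator: gradient ascent on log D(real) + log(1 - D(fake)).
        gw = gc = 0.0
        for x in real:
            p = self.discriminate(x)
            gw += (1.0 - p) * x
            gc += 1.0 - p
        for x in fake:
            p = self.discriminate(x)
            gw -= p * x
            gc -= p
        n = len(real) + len(fake)
        self.w += lr * gw / n
        self.c += lr * gc / n

    def g_step(self, zs, lr=0.05):
        # Generator: gradient ascent on log D(g(z)), i.e. learn to fool D.
        # Its only training signal is the discriminator's verdict.
        ga = gb = 0.0
        for z in zs:
            p = self.discriminate(self.a * z + self.b)
            ga += (1.0 - p) * self.w * z
            gb += (1.0 - p) * self.w
        self.a += lr * ga / len(zs)
        self.b += lr * gb / len(zs)

    def train(self, steps=500, batch=32, real_mean=4.0, real_std=0.5):
        for _ in range(steps):
            real = [self.rng.gauss(real_mean, real_std) for _ in range(batch)]
            zs = [self.rng.gauss(0.0, 1.0) for _ in range(batch)]
            self.d_step(real, self.generate(zs))  # D learns to tell real from fake
            self.g_step(zs)                       # G adjusts to fool the updated D
```

Calling `ToyGAN().train()` and inspecting `b` afterwards shows where the generator’s output has drifted under the discriminator’s pressure. Real GANs have millions of parameters and are notoriously hard to train stably; this toy makes no promise of convergence.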

Mitosis is a two-minute video loop made up of over 750,000 faces continually being generated by a GAN. The ever-changing face in Mitosis symbolizes how AI is becoming an integral part of our daily life, changing us in subtle ways that are noticeable only in hindsight. The effect is akin to Cubist art, where the viewer sees a scene from many different perspectives, except that here they arrive one at a time. Beyond its eye-catching checkerboard array, the work reflects the Cubist aesthetic. While Beeple’s work is static, a checkerboard of tiny squares, Klingemann’s is endlessly moving and changing before our eyes. Far from cartoon-like, it has the gravity of a black-and-white photograph. These are images created not by the human artist but by the machine.

AI art like Klingemann’s shows that machines can imagine what we cannot imagine and see what we cannot see. This is especially evident in artificial neural networks that run the DeepDream algorithm, which Alexander Mordvintsev invented initially to probe a network’s “hidden layers” of neurons and gain a better understanding of how they work. Basically, DeepDream overinterprets an image: it repeatedly modifies the image to strengthen whatever patterns a chosen layer of neurons responds to, which gives a sense of how individual neurons contribute to what the network sees.
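DeepDream’s “overinterpretation” amounts to gradient ascent on the input image: the network’s weights stay fixed while the pixels are nudged so that a chosen layer fires more strongly, amplifying whatever faint patterns that layer detects. The sketch below is a minimal, hypothetical illustration in plain Python, assuming a “layer” of two made-up filters over a three-pixel image rather than a real convolutional network trained on ImageNet. The squared-activation objective and the mean-absolute-gradient step normalization follow the approach of Google’s original DeepDream notebook, which also adds tricks omitted here, such as random jitter and processing at several scales (“octaves”).

```python
def layer_activations(image, filters):
    # Each hypothetical "neuron" fires in proportion to how well the image
    # matches its filter (a ReLU of a dot product), as in a conv-net layer.
    return [max(0.0, sum(w * p for w, p in zip(f, image))) for f in filters]


def dream_objective(image, filters):
    # DeepDream-style L2 objective: half the sum of squared activations.
    return 0.5 * sum(a * a for a in layer_activations(image, filters))


def dream_step(image, filters, step_size=0.01):
    # Gradient of the objective w.r.t. each pixel: every neuron pushes the
    # image toward its own filter, weighted by how strongly it already fires
    # (inactive neurons have activation 0 and contribute nothing).
    grad = [0.0] * len(image)
    for a, f in zip(layer_activations(image, filters), filters):
        for i, w in enumerate(f):
            grad[i] += a * w
    # Normalize by the mean absolute gradient so the step size is scale-free.
    scale = sum(abs(g) for g in grad) / len(grad) or 1.0
    # Gradient *ascent* on the image itself; the filters never change.
    return [p + step_size * g / scale for p, g in zip(image, grad)]


if __name__ == "__main__":
    filters = [[1.0, -1.0, 0.0], [0.0, 1.0, 1.0]]  # two made-up 3-pixel "features"
    image = [0.5, 0.2, 0.3]
    for step in range(5):
        print(step, round(dream_objective(image, filters), 4))
        image = dream_step(image, filters)
```

Each step makes the layer respond more strongly than before, which is why repeated passes turn faint hints of a pattern into the vivid, hallucinatory shapes DeepDream is known for.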

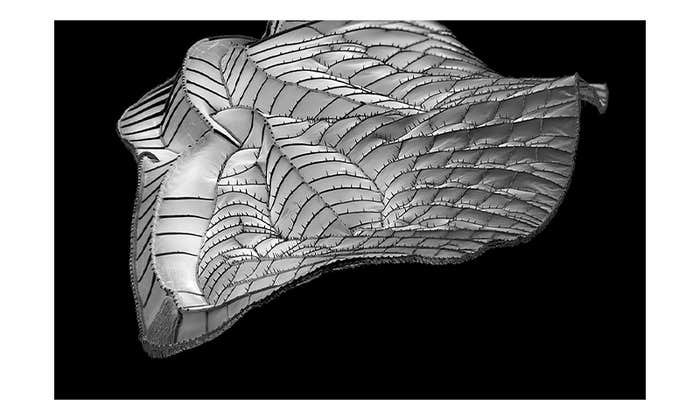

Suppose we take an artificial neural network running DeepDream and trained on ImageNet, a huge database of 14 million images. We feed in a jpg of Van Gogh’s Starry Night, then stop analysis partway through. Will the machine see a fuzzy image of Van Gogh’s painting? No. What it produces changes every time, but it might come out with something like the one below—the world as seen through the eyes of a machine. We could not have imagined such images before DeepDream created them.

DeepDream’s works have been included in many exhibitions, including the Gray Area show of 2016, which was the first to reveal the esoteric world of artificial intelligence to the public. They are totally new, have no link with art of the past, and have a style all their own, a unique aesthetic. We can recognize a DeepDream work just as we recognize a Rembrandt or a Monet. They also push the boundaries of conventional art in that they have no specific subject matter. Like the images created by machines running GANs, they are the world as seen by an alien being, a machine. This is what I find so exciting about DeepDream: you never know what to expect, and whatever emerges is nothing you could have imagined.

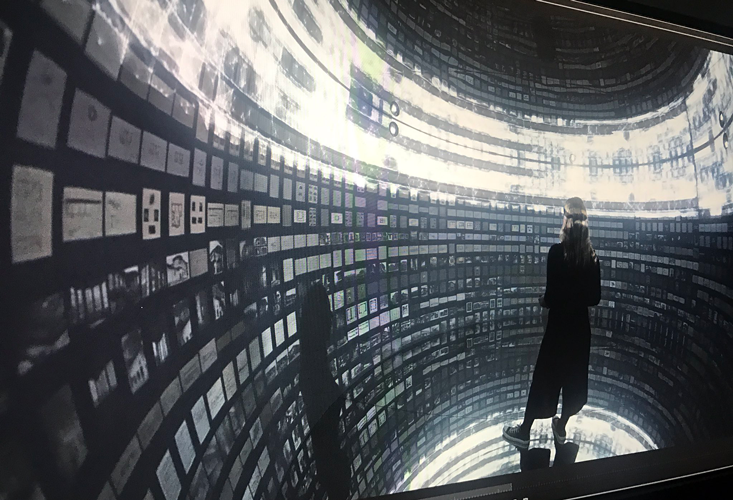

Refik Anadol’s work is another fascinating example of computer-generated art, and a stunning demonstration of the potential of machines to dream, to create a new world of extraordinary and beautiful images. Anadol, a media artist, took 1.7 million documents relating to Turkey, from the late 19th century to the present, from SALT Galata, a Turkish art institution and online library, and encoded them all as numbers. The result, Archive Dreaming, is an immersive media installation: viewers stand in a cylindrical room while the images are projected onto the walls and onto the viewers themselves.

When the installation is dormant, a GAN comes into play, using a discriminator network trained on these 1.7 million documents. The generator network begins generating images that soon look much like the real ones but are documents that have never existed. Viewers are surrounded by a whirl of images in which it’s impossible to distinguish real from unreal. These images are new representations of the original subjects and texts, which are themselves designated as art, so the images too are art. To me the interactive aspect is especially intriguing. The audience becomes part of the work: others see views of it as it moves over the contours of your body. As in Borges’s “The Library of Babel,” a library of every possible book composed from 22 letters of the alphabet, the space, the comma, and the period, the supply of images is practically inexhaustible.
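Borges’s library is, strictly speaking, finite, though unimaginably large. Taking the story’s own specifications (every book has 410 pages, each page 40 lines, each line some 80 characters, each character one of 25 symbols), a rough count of the possible books is:

$$N = 25^{410 \times 40 \times 80} = 25^{1\,312\,000} \approx 2 \times 10^{1\,834\,097}$$

That is a number roughly 1.8 million digits long: finite, but inexhaustible for any practical purpose.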

The images generated by DeepDream and by GANs demonstrate how art and technology are fusing in the 21st century. For the moment, human artists program the machines, but it is the machines themselves that do the creating, going beyond the parameters set up by their human programmers.

Can AI art really be called art? Few question whether AI-created music is music. Some AI researchers, for instance, have trained artificial neural networks on hundreds of scores by Bach. Having learned the rules of baroque music, the machines come up with more of the same. The results are a long way from Bach, because at present machines are rather limited: they have no emotions, nor do they carry, as Bach did, the memory of his predecessors’ scores. Yet when listeners are asked which of two pieces is by Bach and which by a machine, only about 50 percent make the right choice, no better than guessing.


But it’s a pointless exercise. The point is not whether we can distinguish AI-created music from human music but whether machines will be able to create music of their own, music that at present we cannot imagine. There are already machines, such as NSynth, capable of producing musical sounds we’ve never heard before, which musicians can use to create music.

But how can a machine made up of wires and transistors, created and programmed by human beings, be considered creative? While Mozart’s father taught him the rules of composition, we don’t attribute the son’s music to the father. Similarly, there’s no need to attribute the music or art a machine creates to its programmer. As for human creativity, we ourselves are an amalgam of bones, muscles, arteries, and veins. And we are clearly creative.

What’s so different about the components of a machine? Like us, machines have a memory, can respond to the world around them, and are built to adapt as they learn and think. When you feed pixels, text, or musical notes into an artificial neural network, they are all encoded and stored in the machine’s memory as numbers. We can imagine sculpting with words or musical notes, or transforming Picasso’s Les Demoiselles d’Avignon into a symphony.

This goes for us too. We too are machines. At one level we are biological machines made up of muscles, bones, arteries, and veins; at a deeper level, chemical machines; and at the deepest level, assemblies of elementary particles such as quarks, gluons, and electrons. In theory, the dynamics of these particles can be calculated using the equations of quantum physics, which, when encoded for computers, become numbers. It’s numbers all the way down.
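The point that pixels, text, and musical notes all end up in a network’s memory as numbers can be made concrete. The sketch below is a hypothetical illustration, not any particular system’s input pipeline: it assumes 8-bit grayscale pixels rescaled to the range 0 to 1, text encoded as Unicode code points, and notes written as names like “C4” mapped to standard MIDI note numbers (middle C is 60). The function names are invented for this example; real systems usually add further steps, such as splitting text into tokens, but the raw material is the same.

```python
# Semitone offsets of the natural notes within an octave, starting from C.
NOTE_OFFSETS = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}


def encode_pixels(pixels):
    # 8-bit grayscale values (0 = black, 255 = white) rescaled to 0.0-1.0.
    return [p / 255.0 for p in pixels]


def encode_text(text):
    # Each character becomes its Unicode code point.
    return [ord(ch) for ch in text]


def encode_notes(notes):
    # Note names -> MIDI numbers: 12 semitones per octave, C4 (middle C) = 60.
    # Accepts a letter, an optional accidental ("#" sharp, "b" flat), an octave.
    numbers = []
    for name in notes:
        letter, rest = name[0], name[1:]
        shift = 0
        if rest and rest[0] in "#b":
            shift = 1 if rest[0] == "#" else -1
            rest = rest[1:]
        octave = int(rest)
        numbers.append(12 * (octave + 1) + NOTE_OFFSETS[letter] + shift)
    return numbers


if __name__ == "__main__":
    print(encode_pixels([0, 128, 255]))
    print(encode_text("Bach"))               # [66, 97, 99, 104]
    print(encode_notes(["C4", "E4", "G4"]))  # [60, 64, 67], a C major chord
```

Once everything is a list of numbers, nothing in principle stops a network from mapping one kind of list onto another, which is what makes ideas like turning a painting into a symphony conceivable.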

Next to make a splash will be art created entirely by machines, with humans nowhere in the picture. AI art is already developing much as human art has developed over the millennia. In doing so, AI art is opening our own minds, causing us to reflect on ourselves, on what we’re made of and who we are. The next step will be when we merge with machines.

Arthur I. Miller is a scientist, historian, and author, and Emeritus Professor of the History and Philosophy of Science at University College London. His most recent book is The Artist in the Machine: The World of AI-Powered Creativity.

Lead image: mundissima / Shutterstock