Ross Goodwin has had an extraordinary career. After playing about with computers as a child, he studied economics, then became a speech writer for President Obama, writing presidential proclamations, then took a variety of freelance writing jobs.

One of these involved churning out business letters—he calls it freelance ghostwriting. The letters were all pretty much the same, so he figured out an algorithm that would generate form letters, using a few samples as a database. The algorithm jumbled up paragraphs and lines following certain templates, then reassembled them to produce business letters, similar but each varying in style, saving him the job of starting anew each time. He thought he was on to something new but soon found out that this was a well-explored area. But it did pique his interest in the “intersection of writing and computation.”
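A minimal sketch of that kind of template-driven shuffling might look like the following. It is not Goodwin's program, which the text does not show; the sample sentences, the slot names, and the `generate_letter` function are all hypothetical, invented here purely for illustration.

```python
import random

# Hypothetical sample pool: interchangeable lines grouped by the slot they fill.
SAMPLES = {
    "greeting": ["Dear {name},", "Hello {name},"],
    "opening": [
        "Thank you for your recent inquiry.",
        "I am writing to follow up on our conversation.",
    ],
    "body": [
        "We would be glad to discuss terms at your convenience.",
        "Our team can prepare a proposal within the week.",
    ],
    "closing": ["Sincerely,", "Best regards,"],
}

# A template is simply an ordered list of slots to fill.
TEMPLATE = ["greeting", "opening", "body", "closing"]


def generate_letter(name, rng):
    """Fill each slot of the template with a randomly chosen sample line."""
    lines = [rng.choice(SAMPLES[slot]) for slot in TEMPLATE]
    return "\n".join(lines).format(name=name)


if __name__ == "__main__":
    # A seeded generator makes a given run repeatable.
    print(generate_letter("Ms. Rivera", random.Random(0)))
```

Each call reassembles the same few samples in a different combination, which is roughly the effect described above: letters that are similar but vary from one to the next.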

Today, computers are creating an extraordinary new world of images, sounds, and stories such as we have never experienced before. Gerfried Stocker, the artistic director of Ars Electronica in Linz, Austria, says, “Rather than asking whether machines can be creative and produce art, the question should be, ‘Can we appreciate art we know has been made by a machine?’ ”

Much of the art computers are creating transcends the merely weird to encompass works that we might consider pleasing and that many artists judge as acceptable. Most programmed (rule-based) systems have constraints to prevent them from producing nonsense, but artificial neural networks can now generate poetry and prose that frequently pass over into that realm.

One of Goodwin’s first creations, developed at NYU’s Interactive Telecommunications Program (ITP), is the remarkable word.camera. It takes a picture—of you or of whatever you are holding up to be photographed—identifies what it’s seeing, then generates words—poetry—sparked by the images it has identified. Show it an image of, for example, mountain scenery, and it might come up with seven or eight descriptive phrases—“blue sky with clouds,” “a large rock in the background.” Then it uses each to generate a sequence of words: “A blue sky with clouds: and a sweet sun carrying the shadow of the black trees and the spire are dark and the wind and the breath in the light are.” A little mysterious, but no more so than a lot of contemporary poetry.

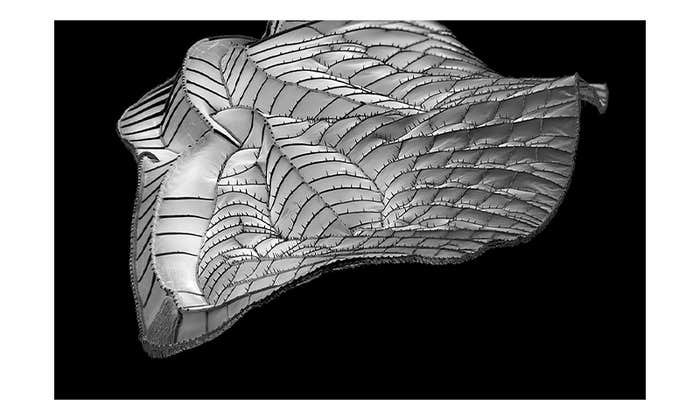

Here is an example of an image, in this case the great 16th-century artist Albrecht Dürer’s 1514 engraving of Saint Jerome in His Study. It’s a period interpretation of a genius as someone touched by divine guidance, transmitted by the light that shines on the saint.

And here is what the machine sees, the poem generated by word.camera when presented with this picture:

A Stone Wall fills a sea,

A shadow of rivers,

A spirit of light;

and in the water

Some storm that sweeps the wind and the darkness.

A large brick wall with a slow view of the trees,

The town of the house where it was done with water,

Having passed a Spring-fire.

A large rock on the ground is dark and shadowy, and the whole world is dead.

To create his word.camera, Goodwin took Char-RNN, a neural network that predicts sequences of characters based on an input corpus, and trained it on a vast number of 20th-century poems and a large number of images, all captioned. Then he rigged up a digital camera to it. The computer writes poems based on what it sees in the image. Goodwin was gratified that the poems “conjured imagery and employed relatively solid grammar,” while having the aura of originality. “What good is a generator if it just plagiarizes your input corpus?” he asks, adding: “A machine that can caption images is a machine that can describe or relate to what it sees in a highly intelligent way.”
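The idea of predicting the next character from an input corpus can be illustrated without a neural network at all. The sketch below swaps in a much simpler technique, a character-level Markov chain, which only counts which character followed each short run of characters in the training text. It is a stand-in for illustration, not Char-RNN and not Goodwin's code; the `train` and `generate` functions and the `order` parameter are assumptions made here.

```python
import random
from collections import defaultdict


def train(corpus, order=3):
    """Map each `order`-character context to the characters that followed it."""
    model = defaultdict(list)
    for i in range(len(corpus) - order):
        model[corpus[i:i + order]].append(corpus[i + order])
    return model


def generate(model, seed, length, rng):
    """Extend `seed` one character at a time by sampling observed successors."""
    order = len(next(iter(model)))  # context length used during training
    out = seed
    for _ in range(length):
        successors = model.get(out[-order:])
        if not successors:  # context never seen in training: stop early
            break
        out += rng.choice(successors)
    return out


if __name__ == "__main__":
    corpus = "a blue sky with clouds and a sweet sun and the shadow of the trees"
    model = train(corpus, order=3)
    print(generate(model, "a b", 40, random.Random(0)))
```

A recurrent network learns far longer-range patterns than this fixed three-character window, but the loop is the same in spirit: look at what has been written so far, pick a plausible next character, and repeat.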

In 2016, Goodwin met Oscar Sharp, a British film student at NYU who preferred hanging out with the technologists at ITP to spending time with filmmakers. Sharp wanted to create a film by splicing together random parts of other films, as Dadaist artists had done. But Goodwin had a better idea: to use Char-RNN. Their call to action came when they heard about the annual Sci-Fi London Film Festival and its 48-Hour Film Challenge. For this, contestants are given a set of props and a list of lines of dialogue that must be included. They have to make the film over the next two days, and it can be no more than five minutes long.


Goodwin fed his Char-RNN, which they named Jetson, digitized screenplays of sci-fi films from the 1980s and 1990s, TV shows like Stargate SG-1, and every episode of Star Trek and The X-Files. While Goodwin, Sharp, and the cast stood around the printer, Jetson spat out a screenplay, complete with seemingly impossible stage directions, such as, “He is standing in the stars and sitting on the floor.” Sharp decided this called for a dream sequence, though some of Shakespeare’s stage directions, too, like the classic “Exit pursued by a bear,” from The Winter’s Tale, are as obscure as any written by Jetson.

Another stage direction called for one of the characters—H, who is speaking to C—to spit out an eyeball:

C (smiles)

I don’t know anything about this.

H (to Hauk, taking his eyes from his mouth)

Then what?

Sharp randomly assigned roles to the three actors in the room: Thomas Middleditch from the HBO series Silicon Valley (H), Elisabeth Gray (H2), and Humphrey Ker (C). The actors read through Jetson’s script, interpreting the lines as they read, turning it into an involving if somewhat bizarre sci-fi story of love, despair, and murder. There is even a musical interlude that Jetson composed after learning from a database of 25,000 folk songs, which comes toward the end, during a dreamlike action sequence. The song was curated and performed by vocalist Tiger Darrow and musician Andrew Orkin, who “chose lines that worked well with their music.”

Sharp kept curation of the script itself to an absolute minimum. He cut some lines to keep the movie within the five-minute limit for the challenge. One scene was removed because it called for a truck and they had none. “Oscar Sharp did not edit it for consistency or linearity or for any other qualities,” Goodwin tells me. In the film, which they called Sunspring, they went the whole distance: Actors spoke exactly the lines that the machine gave them.

Sunspring is a sci-fi drama with the characters’ costumes augmented with metal and gold. It’s certainly bizarre—even Lynchian, one might say. The actors deliver their somewhat gnomic lines with passion and intensity, putting meaning and expression into them and making them make sense, even if the script itself does not. And in the course of the film H does indeed spit out an eyeball. For Goodwin, it’s up to the actor or the reader to provide the meaning. That’s an essential part of creative writing.

“The magic doesn’t come from the writer, it comes from the reader,” says Pablo Gervás, a Spanish professor of software engineering and artificial intelligence, who has developed an algorithm to generate poetry. It seems truly magical when “a string of characters on a page sparks memory and emotion,” as a work of visual art or music can, Gervás tells me. Good writers take a minimalist approach, leaving it to the reader to figure things out rather than explaining everything.

People may be less prone to appreciate prose that makes no sense, but this is not the case for movie dialogue or song lyrics or poetry. As Goodwin says, modern readers may have difficulty understanding certain lines of Shakespeare, but they make sense when actors flesh them out with their body language and emotion.

The judges at the film festival placed Sunspring in the top 10, though it did not win. Nevertheless, Jetson was interviewed on stage at the award ceremony, responding through text on Goodwin’s computer. Here are two of its responses:

Interviewer: What do you think of your historic nomination in this contest?

Jetson: I am pretty excited. I think I can see the feathers when they release their hearts. It’s like a breakdown of the facts. So they should be competent with the fact that they won’t be surprised.

Interviewer: What’s next for you?

Jetson: Here we go. The staff is divided by the train of the burning machine building with sweat. No one will see your face. The children reach into the furnace, but the light is still slipping to the floor. The world is still embarrassed. The party is with your staff. My name is Benjamin.

Rather touchingly, Jetson had told them its name and henceforth Goodwin and Sharp referred to it—or him—as Benjamin, and billed him as such as the writer of Sunspring.

For the moment, Goodwin sees the role of computers as assisting us and providing intelligent augmentation. But he believes that we are getting close to machines creating of their own volition. “That sort of creativity,” he says, “need not replace human creativity and will work in conjunction with human creativity. I don’t see why one has to replace the other.”

Benjamin, along with Goodwin and Sharp, went on to create a second movie, entitled It’s No Game, with David Hasselhoff as the Hoffbot. And Goodwin was snapped up by Google’s Artist and Machine Intelligence Program as a creative technologist.

He has now published a book entitled 1 the Road, which he describes as “a novel written using a car as a pen, an enhanced AI experiment.” It is an AI version of an American road trip akin to Jack Kerouac’s On the Road. For this, Goodwin took an AI for a ride. He traveled from New York to New Orleans in a Cadillac equipped with four sensors: a surveillance camera on the roof, a GPS unit, a microphone, and the computer’s internal clock. All these were hooked up to an AI trained on poetry, science fiction, and what Goodwin describes as “bleak” literature, as well as on location data.

Like a very advanced version of Goodwin’s word.camera, the AI produced words in response to what it saw and places it passed and to conversation it overheard in the car. The opening sentence came spewing out of the printer as Goodwin turned on the machine in Brooklyn: “It was 9:17 in the morning and the house was heavy.”

The AI continued to generate somewhat disjointed but evocative sentences throughout the journey, such as in this excerpt:

It was seven minutes to ten in the morning, and it was the only good thing that happened.

What is it? the painter asked.

The time was six minutes until ten o’clock in the morning, and the conversation was finished while the same interview was over.

Responding as it does to sights and sounds along the way, the book really does read like a rather surreal road trip novel.

How we interpret such gnomic prose can provide hints as to how we will respond to computer-generated prose of the future, prose written by an alien life-form. In the future, we can expect computers to produce literature different from anything we could possibly conceive of. Our instinct is to try to make sense of it if we can. But when a new form of writing appears, generated by sophisticated machines, we may not be able to. As we learn to appreciate it, perhaps we will even come to prefer machine-generated literature.

Arthur I. Miller is Emeritus Professor of History and Philosophy of Science at University College London. He is the author of The Artist in the Machine: The World of AI-Powered Creativity, Colliding Worlds: How Cutting-Edge Science Is Redefining Contemporary Art, and other books including Einstein, Picasso: Space, Time, and the Beauty That Causes Havoc.

This article was adapted from The Artist in the Machine: The World of AI-Powered Creativity, published in 2019 by MIT Press.

Lead image: robin.ph / Shutterstock
