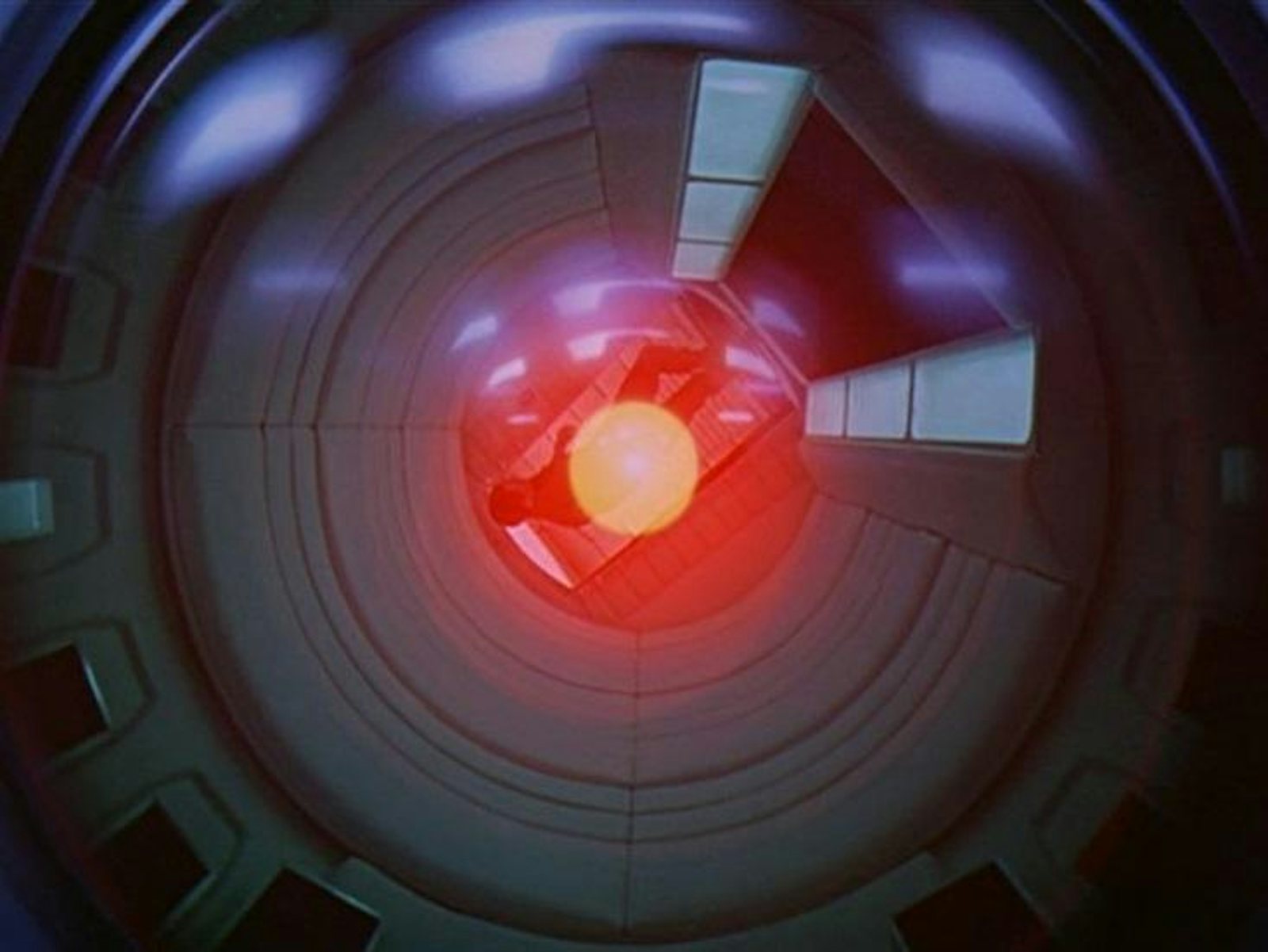

If humans go on to create artificial intelligence, will it present a significant danger to us? Several technical luminaries have been open and clear with respect to this possibility: Elon Musk, the founder of SpaceX, has equated it to “summoning the demon”; Stephen Hawking warns it “could spell the end of the human race”; and Bill Gates agrees with Musk, placing himself in this “concerned” camp.

Their worry is that once the AI is switched on and gradually assumes more and more responsibility in running our brave, newfangled world—all the while improving upon its own initial design—what’s to stop it from realizing that human existence is an inefficiency or perhaps even an annoyance? Perhaps the AI would want to get rid of us if, as Musk has suggested, it decided that the best way to get rid of spam email “is to get rid of humans” altogether.

No doubt there is value in warning of the dystopian potential of certain trends or technologies. George Orwell’s 1984, for example, will always stand as a warning against technologies or institutions that remind us of Big Brother. But could the anxiety about AI just be the unlucky fate of every new radical technology that promises a brighter future: to be accused of being a harbinger of doom? It certainly wouldn’t be unprecedented. Consider the fear that once surrounded another powerful technology: recombinant DNA.

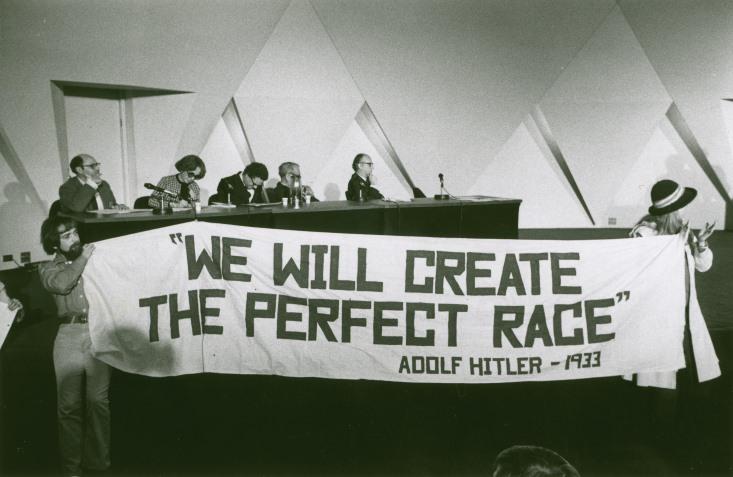

In 1972 Paul Berg, a leading biochemist at Stanford University, created a strand of DNA that contained all the genes of a virus potentially implicated in cancer and fused it with genes of a bacterium that dwells in the human intestine: E. coli. This caused an uneasy stir. But the alarms really went off when researchers created the first recombinant DNA, demonstrating the ability to clone the DNA of any organism, and to mix and match genes together in order to bring about novel forms of life. The Columbia biochemist Erwin Chargaff (whose work was invaluable to Watson and Crick’s discovery of the double helix) fired off a letter to the editor of Science titled, “On the Dangers of Genetic Meddling,” stressing “the awesome irreversibility of what is being contemplated.” He argued that it was the pinnacle of brashness to play around with the order and stability that nature had, over millions of years, maintained. “The future,” he concluded, “will curse us for it.” A couple of months later, Liebe Cavalieri, then a biochemist at Cornell and a member of the Sloan-Kettering Institute for Cancer Research, took this argument to the pages of The New York Times, relating the catastrophic scenarios we could expect to suffer if recombinant DNA research were not halted. As a way to stymie the ambitions of the relevant scientists, Cavalieri went so far as to suggest “that the Nobel Committee announce that no awards will ever be given in this area.” Chargaff and Cavalieri represented a contingent of researchers who wished to see the use of recombinant DNA banned for the foreseeable future.

Seeing the potential for recombinant DNA research to cause an epidemic, Berg called for a worldwide moratorium on all such experimentation, which led, in 1975, to the organization of the Asilomar Conference in California. More than 100 scientists from over a dozen countries attended, along with lawyers, journalists, and government officials. Attendees fiercely debated whether research into recombinant DNA was “flirting with disaster” or safe, even during meal breaks and over cocktails well into the wee hours.

On the final day of the conference, it was agreed that research should continue but only under the most stringent of restrictions on potentially dangerous experiments. For these, airlocks and ventilation systems were mandatory to prevent some gnarly virus or bacterium from escaping. And just to be extra safe, the microbes being tampered with had to be genetically weakened so that they’d die outside the lab. Stipulations such as these went on to help form the biosafety guidelines of the National Institutes of Health, which have been updated numerous times in the decades since, most recently in 2013. If you’re a researcher and you’re looking to do an experiment involving recombinant DNA, you must register and receive approval from a safety committee if the lab work will involve, among other things, cloning potentially lethal DNA or RNA combinations, or using human or animal pathogens as hosts of manipulated genes. The Asilomar Conference allowed research to proceed carefully “under a yellow light,” as Berg later said.

Despite Cavalieri’s warning, Berg and his biochemist colleagues won a Nobel Prize in 1980. And since then, innumerable experiments—many of them inconceivable in 1975—have been conducted with recombinant DNA without any hazardous repercussions. The worried, distinguished naysayers ended up conclusively on the wrong side of history. By being—and continuing to be—extremely careful, biologists have thus far managed to prevent the doom that Chargaff and Cavalieri prophesied.

But there have been occasional slip-ups in our biosafety defenses. It was only last year, for instance, that a stash of old vials containing the smallpox virus, kept precariously in a cardboard box labeled “1954,” was spotted in a U.S. Food and Drug Administration lab in the heart of the National Institutes of Health. And a month earlier, the director of the Centers for Disease Control and Prevention revealed that lab workers had botched the handling and storage of what turned out to be live anthrax, and had inadvertently mixed deadly H5N1 influenza with a benign strain of the virus in a shipment to another lab. These lapses in professionalism, wrote Deborah Cotton, a professor of medicine at Boston University, “strain credulity.” Until these sorts of mistakes can be more reliably prevented, she suggested that high-risk experiments ought to be suspended. Because of the nature of humans and organizations, even our most diligent precautions are always fallible.

In light of our experience with biosafety, would we be able to prevent a superintelligent AI from going rogue and threatening us? If so, one important reason will likely be the ongoing work on how to create, and maintain, a “Friendly AI.”

Nate Soares, a researcher at the Machine Intelligence Research Institute and previously at Microsoft and Google, specializes in this area. In his most recent paper, “The Value Learning Problem,” he essentially makes the point that Aretha Franklin didn’t go far enough when she sang, “R-E-S-P-E-C-T/Find out what it means to me.” It’s simply not enough, in other words, for an AI to understand what moral behavior is; it must also have a preference for it. But, as every parent knows, instilling this in a child can be maddening. What makes that irritating situation tolerable, however, is the child’s lack of power to do any real harm in the meantime, which may not be the case at all with a super-intelligence. “Given the enormity of the stakes and the difficulty of writing bug-free software,” writes Soares, “every available precaution must be taken when constructing super-intelligent systems.” And it’s critical that we don’t wait till the technology arrives to begin thinking of precautions, Soares says; even if our guidelines are vague and abstract, they’ll be better than nothing.

One such precaution is based on the hypothesis that it won’t be humans who directly create a super-intelligence; instead, we’ll create a human-level AI that then continuously improves on its own design, making itself far more ingenious than anything humans could engineer. Therefore the challenge is in making sure that when it comes specifically to refining its own intelligence, the AI goes about it wisely, making sure that it maintains our moral values even as it rewires its own “brain.” If you see a paradox lurking here, you’re right: It’s analogous to trying to imagine what you would do in a difficult situation if you were smarter than you are. This is why “a self-improving agent must reason about the behavior of its smarter successors in abstract terms,” explains Soares. “If it could predict their actions in detail, it would already be as smart as them.”

As of now, Soares thinks we’re very far from an adequate theory of how an intelligence beyond ours will think. One of the main hurdles he highlights is programming the AI’s level of self-trust, given that it will never be able to come up with a mathematically certain proof that one decision is superior to another. Program in too much doubt, and it will never decide how to effectively modify itself; program in too much confidence, and it will execute poor decisions rather than searching for better ones. Coming up with the right balance between paralyzing insecurity and brash self-assurance is spectacularly complicated. And it may not be possible to task an AI with solving this problem itself, because it may require a deeper understanding of the theoretical problems than we currently have; it may even be unwise to try, because a super AI may reason that it’s in its own interest to deceive us.

This is why figuring out how to make machines moral first, perhaps before allowing them to self-modify, “is of critical importance,” writes Soares. “For while all other precautions exist to prevent disaster, it is value learning which could enable success.”

But to some other AI researchers, precautions are not just unnecessary, they’re preposterous. “These doomsday scenarios are logically incoherent at such a fundamental level that they can be dismissed as extremely implausible,” writes Richard Loosemore, in an article for the Institute for Ethics and Emerging Technologies. He called out the Machine Intelligence Research Institute specifically for peddling unfounded paranoia. Their restrictions would “require the AI to be so unstable that it could never reach the level of intelligence at which it would become dangerous,” writes Loosemore.

One insight to glean from this dispute may be that the technology is still too far off for anything convincing to be said about it. As Loosemore declares, “If this were the timeline of aviation, rather than the timeline of AI, we would only just be getting beyond the stage where a few inventors were tinkering with flapping-wing contraptions.” On the other hand, the development of super-intelligence may be the paradigmatic example of where it’s better to be safe than sorry.

Brian Gallagher is an editorial intern at Nautilus.