In wondering what can be done to steer civilization away from the abyss, I confess to being increasingly puzzled by the central enigma of contemporary cognitive psychology: To what degree are we consciously capable of changing our minds? I don’t mean changing our minds as to who is the best NFL quarterback, but changing our convictions about major personal and social issues that should unite but invariably divide us. As a senior neurologist whose career began before CAT and MRI scans, I have come to feel that conscious reasoning, the commonly believed remedy for our social ills, is an illusion, an epiphenomenon supported by age-old mythology rather than convincing scientific evidence.

If so, it’s time for us to consider alternate ways of thinking about thinking that are more consistent with what little we do understand about brain function. I’m no apologist for artificial intelligence, but if we are going to solve the world’s greatest problems, there are several major advantages in abandoning the notion of conscious reason in favor of seeing humans as having an AI-like “black-box” intelligence.

But first, a brief overview as to why I feel so strongly that purely conscious thought isn’t physiologically likely. To begin, manipulating our thoughts within consciousness requires that we have a modicum of personal agency. To this end, rather than admit that no one truly knows what a mind is or how a thought arises, neuroscientists have come up with a number of ingenious approaches designed to unravel the slippery relationship between consciousness and decision-making.

In his classic 1980s experiments, University of California, San Francisco, neurophysiologist Benjamin Libet noted a consistent change in brain wave activity (a so-called “readiness potential”) prior to a subject’s awareness of having decided to move his hand. Libet’s conclusion was that the preceding activity was evidence for the decision being made subconsciously, even though subjects felt that the decision was conscious and deliberate. Since that time his findings, supported by subsequent similar results on fMRI and direct brain recordings, have featured prominently in refuting the notion of humans possessing free will. However, others presented with the same evidence strongly reject this interpretation.

Such disagreements reflect more than competing personal biases. A disappointing truth is that we cannot precisely correlate what happens in our brain with what we consciously experience. Given the same perceptual input (say, the photo of a bright red 1955 Ferrari Spyder), one’s mental states will vary dramatically depending upon mood and circumstance. As a lifelong car fan, I might experience seeing the Ferrari as the car of my dreams, as ridiculously expensive, or as ostentatious. I sometimes recall the thrill of being a little kid standing alongside my father in Golden Gate Park watching sports car races, a time when the roadster stood for adventure and a world larger than grade school and homework. Now a photo of the same car stirs unpleasant feelings about rich collectors and a particularly precious 1994 Museum of Modern Art exhibit rather than the exhilaration of leather-helmeted daredevils. The difference isn’t in the elemental perception of the car; in both cases I fully recognize that it is the same Ferrari model. And yes, it is possible that sufficiently granular fMRI scans might one day be able to determine exactly what I am seeing.

But determining what I am feeling about what I see? For the moment, put aside the impreciseness of language and the difficulty in accurately describing personal feelings—a Herculean task that has preoccupied poets and artists for millennia. A more basic problem: There is no neuroscience methodology that can adequately document and label a lifetime of complex moment-by-moment neuronal interactions within the brain, body-wide hormonal and chemical fluctuations, as well as yet ill-understood external influences potentially contributing to the human equivalent of swarm behavior. If the same input (the sight of the Ferrari) can trigger differing mental states in the same observer, a complete knowledge of the physiology of perception isn’t sufficient to predict what the observer will consciously experience. At best, descriptions of the contents of consciousness are the equivalent of a personal memoir—a first-person eyewitness account of idiosyncratic perceptions that make such accounts notoriously unreliable. Descriptions of what we consciously feel are meta-perceptions—perceptions about our perceptions.

To put this gap between conscious experience and subliminal brain activity into a neurobiological perspective, think of a major league player swinging at an incoming pitch. As the time it takes for the ball to leave the pitcher’s hand and reach the plate is approximately the same as the batter’s initial reaction time plus the subsequent swing, the batter must decide whether or not to swing as soon as the ball leaves the pitcher’s hand. (With pitch velocities ranging from 80 to over 100 miles per hour, it takes approximately 380 to 460 milliseconds for the ball to reach the plate. Minimum reaction time between the image of the ball reaching the batter’s retina and the initiation of the swing is approximately 200 milliseconds; the swing takes another 160 to 190 milliseconds.) And yet, from the batter’s perspective, it feels as though he sees the ball approach the plate and then decides to swing. (This discrepancy in the timing of our perceptions, though ill-understood, is referred to as the subjective backward projection of time.) One of the all-time great hitters, Ted Williams, once said that he looked for one pitch in one area about the size of a silver dollar. Not to be outdone, Barry Bonds has said that he reduced the strike zone to a tiny hitting area the size of a quarter.
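For readers who want to check the arithmetic, here is a rough sketch of the timing in Python. It rests on two assumptions that are mine rather than measured facts: that the ball covers about 55 feet between the pitcher’s release point and the plate, and that it holds a constant speed the whole way. The function names are hypothetical, chosen only for this illustration.

```python
# Rough timing of a pitch versus the batter's reaction and swing.
# ASSUMPTIONS (illustrative only): about 55 feet from release point to
# the plate, and constant ball speed over that distance.
RELEASE_TO_PLATE_FT = 55.0
FT_PER_MILE = 5280.0
SECONDS_PER_HOUR = 3600.0


def flight_time_ms(speed_mph: float, distance_ft: float = RELEASE_TO_PLATE_FT) -> float:
    """Milliseconds for a ball at speed_mph to travel distance_ft."""
    speed_ft_per_s = speed_mph * FT_PER_MILE / SECONDS_PER_HOUR
    return distance_ft / speed_ft_per_s * 1000.0


def decision_window_ms(speed_mph: float, reaction_ms: float = 200.0,
                       swing_ms: float = 160.0) -> float:
    """Time left over for 'deciding' once reaction and swing are subtracted."""
    return flight_time_ms(speed_mph) - reaction_ms - swing_ms


if __name__ == "__main__":
    for mph in (80, 90, 100):
        print(mph, "mph:",
              round(flight_time_ms(mph)), "ms in flight,",
              round(decision_window_ms(mph)), "ms left to decide")
```

Under these assumptions a 100 mile-per-hour pitch arrives in about 375 milliseconds and an 80 mile-per-hour pitch in about 469, close to the range quoted above. Subtract 200 milliseconds of reaction time and a 190-millisecond swing from the faster pitch and the window left for deliberation is negative.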

Even though players know that their experience of waiting until they see the pitch approach the plate before making a decision is physiologically impossible, they do not experience their swing as a robotic gesture beyond their control or as purely accidental. Further, their explanations for why they swung/didn’t swing will incorporate perceptions that occurred after they had already initiated the swing.

We spectators are equally affected by the discrepancy between what we see and what we know. Take a group of diehard anti-free-will determinists to the deciding World Series game and have them watch their home team’s batter lose the Series by not swinging at a pitch that, to the onlookers, was clearly in the strike zone. How many do you think would be able to shrug off any sense of blame or disappointment in the batter? Indeed, how many would bother to attend the game if they accepted that the decision whether or not to swing occurred entirely at a subliminal level?

Worse, we think that we see what the batter sees, but we don’t. Not needing to make a split-second decision, we can watch the entire pitch and have a much better idea of its trajectory and whether it is a fastball, curveball, or knuckleball. And we judge accordingly. How could he have been a sucker for a change-up, we collectively moan and boo, unable to viscerally reconcile the difference in our perceptions. (Keep this discrepancy in mind the next time you watch a presidential debate from the comfort of your armchair. What the candidates experience isn’t what we onlookers see and hear when not pressured for a quick response.)

Irrational exuberance is okay at a ball game, but not when deciding the role of diminished capacity in sentencing a murderer or whether or not your teenage daughter is really trying her hardest to learn algebra. To believe that we can accurately determine whether or not consciousness contains causal properties is sheer folly. If you doubt this, try to imagine an experiment in which you could objectively determine that a conscious thought was both necessary and sufficient for any action. Somehow you would have to both recognize the neural signature of the conscious thought and demonstrate that it arose independently of any prior brain activity. (I realize that the absence of evidence is not evidence of absence of an effect of conscious thought on behavior, but the absence of evidence does mean that any belief in the role of consciousness on behavior is speculation rather than a testable hypothesis.)

If arriving at a reasonable consensus on our conscious ability to control our behavior is out of the question, is there an alternative commonsense approach to understanding personal responsibility? What about trying to decide the degree to which an act is intentional? But intention runs into the same dead end. If we agree that the batter intended to swing but that the intention was determined via subconscious brain mechanisms, are we still able to attribute blame or praise? What are we to do with the idea of unconscious intention?

Before answering, consider a few common variations on the theme of intention. A tree leans toward the light to capture the sun’s rays for photosynthesis. As the tree lacks any traditional definition of consciousness, the movement can be seen as involuntary yet intentional (as opposed to random or accidental). The same might be said when a hard-of-hearing person spontaneously leans forward to better hear a conversation, though we might be somewhat uncomfortable with the term involuntary and substitute in a word like reflexive, instinctive, or automatic. Up the ante a bit and consider a typical so-called “Freudian slip” such as calling your husband “dad.” Although the word “dad” is uttered prior to any conscious awareness of having chosen the word, we now suspect some underlying meaning, even perhaps an unconsciously mediated intention.

Now let’s further complicate the picture by introducing the distortions of time and memory. Imagine the following situation. During Pete’s freshman year, he is publicly humiliated by Jim, a dorm mate whom Pete had mistakenly believed to be a close friend. Pete vows to get even; during the following summer vacation he spends much of his idle time conjuring up a number of nasty retribution scenarios. But when he returns to school in the fall, Jim is gone, having transferred to another college. Pete is momentarily annoyed at not getting a chance for revenge, but also deeply relieved at not having to confront his own childish fantasies. Memory to the rescue; Pete soon forgets all about Jim. Thirty years later, out of the blue, Pete sees Jim coming down the street, but doesn’t recall the embarrassing incident. Jim smiles and extends his hand. Without any conscious thought, Pete lowers his shoulder and charges into Jim, knocking him to the ground. Jim breaks his arm and subsequently presses charges. Pete tells the judge that he had no reason to bump into Jim, and that the action was entirely unintentional. However, Jim’s lawyer relates the prior incident of embarrassment and argues that the shove was entirely intentional. What should the judge decide, and how? Should the punishment vary according to whether the judge assigns conscious or unconscious intention to the act?

Let’s imagine Pete entirely forgets the event and 30 years later spontaneously decides to write a novel of retribution and revenge. When asked why he chose the subject, Pete laughs, shrugs, and says with utter sincerity that he merely typed what was whispered to him by his muse. Though the appearance of an unbidden story is a commonly felt experience when writing fiction, few novelists would seriously argue that their writing is nothing more than random bits of typing.

We presume intention because we believe that writing is intentional, just as we presume that we can control our thoughts because we feel that we can control them. As we seem constitutionally compelled to explain and justify our actions, we have developed an extensive behavioral lexicon with its attendant philosophical arguments. But the descriptions of conscious experience do not necessarily reflect their underlying physiology. Consider the optical-illusion afterimages that cause you to see a black and white image in full color. Due to depletion of photopigments in retinal receptor cells with prolonged staring at a single point, your visual cortex generates the appearance of colors that are not present in the original black and white image. What you see is not what the retina sees; your perception exists as a brain state that does not correspond to the “real world.” Similarly, a batter’s account of why he did/didn’t swing may bear little if any relationship to the subliminal brain activity that generated the swing/no swing decision. In short, the language of philosophy and psychology, derived from post hoc yet deeply felt beliefs, has not helped us understand the role of conscious will at the basic science level.

I often ask myself how aliens completely unfamiliar with contemporary culture and beliefs might see us humans. Imagine encountering a group of aliens that operate via AI deep learning neural networks. These aliens can readily solve specific problems such as recognizing faces, winning at chess or poker, or detecting weather patterns. Though they have no conscious experience, hence no moods, emotions, or feelings, they have full access to extensive descriptions of conscious experience, from the great classics of literature to pop psychobabble.

Such machines would have no trouble seeing the similarities in how we and they acquire skills. Consider how we learn language. As infants, we gather sounds from our environment, piecing together language from phonemes, syllables, sentences, and paragraphs. Reward systems tell us when we use language correctly or incorrectly. (I can still remember my grammar school teacher sternly shaking her head when I ended a sentence with a preposition.) The same process allows us to take up the tuba, dance the salsa, learn the rules of logic, or have sex. The aliens would correctly presume that whatever behavior was observed was the expected result of extensive trial-and-error learning no different from how they, over many trials, improve their poker or chess skills via positive and negative feedback.

From the aliens’ perspective, there would be no need to invoke additional mechanisms such as conscious choice and willful deliberation. To them, declarations of conscious reasoning such as “I thought about this,” or “I decided to do that” would offer no added value in explaining human behavior.

Now up the ante and have the universe’s champion poker-savvy alien observe your Friday night poker game. (Keep in mind that the alien’s sole purpose is to win; there are no other rewards to complicate its behavior.) It would be baffled by much of what it sees. Some players play bad hands and refuse to fold when it is obvious they are losing. Some bluff too much; others too little. Some appear to enjoy losing. The alien might presume that these players lack sufficient clarity of purpose (winning), are unable to properly weigh all available information without bias, haven’t run sufficient learning trials to weed out statistical anomalies, or have feedback loops that don’t accurately record, judge, and validate results. Note that for the unemotional alien, all deviations from optimal play would be seen in the neutral mechanistic language of operational defects, not in the charged language of failure of character or will.

Such aliens would be equally befuddled by watching political debates on climate change or universal healthcare. They would observe humans ignoring data that strongly warn of impending catastrophic consequences for their species, apparently preferring and even enjoying conflict, anger, self-righteous indignation, and a wide variety of self-defeating behaviors. They would quickly conclude what most of us also suspect but often fail to acknowledge: Though our genes follow the laws of natural selection to optimize survival of the species, as individuals we are not necessarily similarly inclined.

As Thomas Hobbes pointed out in the 1600s, we migrate toward pleasure and away from pain. But pleasure is idiosyncratic. A poker player who’s lost a bundle at the Friday night game but had a few beers, a primo cigar, and some great laughs pulling off an outstanding bluff may well describe the game as the highlight of the week. Another might win but be so irritated by one of his co-players that he feels the evening was a real downer not worth repeating. For both men, outcome is not closely linked to experience. The same reward systems that provide the high of seeing a sunset, cuddling a baby, taking opioids, or feeling certain can also deliver a perverse frisson of pleasure with negative emotions such as terror and rage. (Check out the often communal enthusiasm of outrage at a political rally or the thrill of being scared at a horror movie or on a roller coaster ride.)

To further complicate our understanding of the relationship between purpose, behavior, and experience, we are the all-too-often proud owners of a variety of biologically generated mental sensations that influence how we feel about our thoughts. Foremost among these involuntary mental states are the seductive but illusory sense of self and agency that, in concert with the equally unbidden feeling of knowing when you are right, trigger the unshakable belief that we willfully and deliberately make conscious choices. (If you have any doubt about the feeling of certainty’s involuntary nature, just consider how the sense of an “a-ha” occurs to you in the same way as you experience love or fright.) However, the aliens observing us would see no need for such beliefs. For them, all changes in behavior occur by getting more and better data and empirically testing it; for them, the notion of conscious thought is irrelevant and unproven.

Something is fundamentally wrong with how we think about ourselves and others. Though pundits bombard us with myriad cultural and psychological explanations, for me, the most insidious is the perpetuation of the unjustified mythology that man is capable of conscious rational deliberation. This is not how our brains work. Yes, we can dream up brilliant ideas, but deep learning machines can also come up with previously unimaginable strategies without a shred of consciousness or understanding of what they are doing. (I am reminded of Richard Feynman’s quip that no one understands quantum mechanics, not even its founders, despite it being one of the great intellectual achievements of modern man.)

I don’t believe that AI can teach us anything about wisdom, compassion, morality and ethics, or how to live the good life. As one who has written several novels, I recoil from using AI jargon to describe human thought. However, entertaining the possibility that our thoughts have similar origins to AI-generated decisions does allow us to see beyond the traditional folk psychology descriptions of behavior. By not parsing out conscious versus unconscious intentions, we aren’t forced to make arbitrary and physiologically unsound judgments as to the degree of responsibility for an action. By dropping conventional notions of blame based upon assignment of agency and intention, we are better able to accept what cognitive science is increasingly demonstrating—that our thoughts arise from a combination of personal biology, experience and shared culture, not just the brain in isolation.

In the same vein, we can reconsider what AI is teaching us about the definition of rational. A baseball player can improve his batting average by inputting a mountain of data, including where and what type of pitch a pitcher is likely to throw in any given circumstance. Though the decision whether or not to swing is made subliminally, it can be seen as rational—if by rational you mean the best choice under the specific circumstances. But subliminal rationality, like subliminal intention, is not the same as a conscious choice; proclaiming that man is a rational animal because we can have good ideas and make good decisions does not distinguish us from other animals, plants, or inanimate objects that also make correct decisions. We are no more or less rational than a thermostat.

Another critical take-away from deep learning is that all information should initially be considered equal. Consider how an AI bot learns to play poker. It is given the rules of the game and instructed to find the plays that have the best win rate. In the beginning, it will try anything, even the most seemingly ludicrous. Without built-in biases and pre-conceptions, it only winnows down the nonsensical if shown to be a losing strategy. The bot isn’t constrained by contemporary opinion, prevailing norms, or what seems reasonable. If the ridiculous (to us) play turns out to be a winner, it is retained. And we all know the bottom line of this story; AI bots are now readily outplaying the best humans.
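The flavor of this kind of learning can be suggested with a toy sketch in Python. To be clear about what is assumed: this is a simple “epsilon-greedy” trial-and-error learner, not the method actual poker bots use, and the three plays and their hidden win rates are invented purely for illustration. The learner starts with no opinion about any play, tries everything, and keeps whatever the results favor, however odd it looks.

```python
import random


def learn_best_play(win_probs, trials=20000, explore=0.1, seed=0):
    """Toy trial-and-error learner (epsilon-greedy); NOT a real poker bot.

    win_probs maps each hypothetical play to a hidden chance of winning.
    The learner never reads those numbers directly; it only sees wins
    and losses from the plays it tries.
    """
    rng = random.Random(seed)
    plays = list(win_probs)
    wins = {p: 0 for p in plays}
    tries = {p: 0 for p in plays}

    def observed_rate(p):
        return wins[p] / tries[p] if tries[p] else 0.0

    for _ in range(trials):
        untried = [p for p in plays if tries[p] == 0]
        if untried:
            play = untried[0]                      # try everything at least once
        elif rng.random() < explore:
            play = rng.choice(plays)               # occasionally try anything at all
        else:
            play = max(plays, key=observed_rate)   # otherwise use the best so far
        tries[play] += 1
        if rng.random() < win_probs[play]:         # the game reports win or loss
            wins[play] += 1

    return max(plays, key=observed_rate), {p: observed_rate(p) for p in plays}


if __name__ == "__main__":
    # Invented win rates for illustration; the "ridiculous" play is secretly best.
    hidden = {"fold": 0.30, "call": 0.45, "odd-looking raise": 0.60}
    best, rates = learn_best_play(hidden)
    print("retained play:", best)
    print({p: round(r, 2) for p, r in rates.items()})
```

Nothing in the loop asks whether a play seems reasonable. A play survives only because its observed win rate is higher, which is the sense in which all information starts out equal.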

Though seldom discussed in this light, humans have gradually adopted a similar problem-solving strategy, the scientific method, for empirically testable questions. However, there remains one crucial difference. Though imagination and creativity thrive on avoiding premature judgments, scientific inquiry, limited by time and funding constraints, usually proceeds from some initial preconception (hunches and intuitions) of the likelihood that a particular line of investigation will be successful. As a consequence, the history of science is filled with great ideas that have been shelved or derided when they ran counter to preconceived notions of how the world works. (I still remember when our UCSF neurology department chairman considered denying future Nobel Laureate Stan Prusiner tenure for wanting to pursue the idea that an aggressive form of dementia, Creutzfeldt-Jakob disease, could be caused by an agent, the prion, that wasn’t even a living organism.)

The benefit of treating incoming information as initially value-neutral is critical to our understanding of all aspects of modern thought. How to do this is unclear. Unlike AI, we cannot improve ourselves through increased computer processing power; we are stuck with a few pounds of flesh endowed with certain innate qualities and abilities that we expand via exposure to parents, teachers, friends, neighbors, community, like-minded individuals, and organizations. Their modes of thinking become our cognitive templates, with widely varying perspectives determining who we trust and consider experts.

There is no compelling evidence to suggest that public debate on virtually any subject can ever be resolved through reason. We migrate toward what we feel is best. Even science has its problems, ranging from replication to questions of statistical validation. However, science has self-correcting methods for slowly moving closer to supportable knowledge. Untestable opinion has no such self-correcting mechanisms.

If this argument sounds harsh or offensive, so is watching the present-day failure of discourse between those with differing points of view while persisting in the unrealistic hope that we could do better if we tried harder, thought more deeply, had better educations, and could overcome innate and acquired biases.

If we are to address gathering existential threats, we need to begin the arduous multigenerational task of acknowledging that we are decision-making organisms rather than uniquely self-conscious and willfully rational. Just as we are slowly stripping away pop psychology to better understand the biological roots of mental illnesses such as schizophrenia, stepping back from assigning blame and pride to conscious reasoning might allow us a self-image that reunites us with the rest of the natural world as opposed to declaring ourselves as unique. Only if we can see that our thoughts are the product of myriad factors beyond our conscious control, can we hope to figure out how to develop the necessary subliminal skills to successfully address the world’s most urgent problems. If AI can improve itself, so can we.

Robert Burton, M.D., former Chief of the Division of Neurology at UCSF Medical Center at Mt. Zion, is the author of On Being Certain: Believing You Are Right Even When You’re Not, A Skeptic’s Guide to the Mind: What Neuroscience Can and Cannot Tell Us About Ourselves, and three critically acclaimed novels.

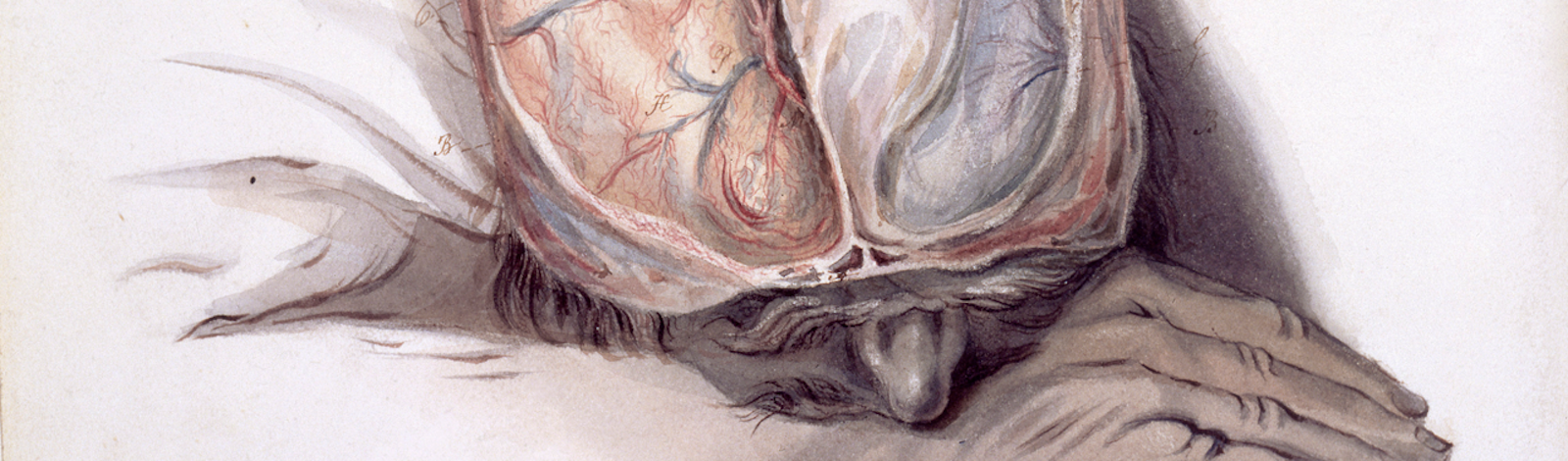

Lead image: Wikimedia