Tony Ro’s research on the brain’s mixing of sound and touch began, appropriately enough, at a mixer. It was the spring of 2000 in Houston, Texas, where he had recently launched his first laboratory at Rice University. The mixer was for new faculty at the school, to help them get to know each other. Ro struck up a conversation with Sherrilyn Roush, a 34-year-old philosopher, who told him all about her work on the reliability and fallibility of science. And Ro told her about his studies on how the human brain merges the torrent of sensory information we see, hear, and feel.

“I said to him, ‘Well, you should study my brain!’ ” Roush recalls, laughing. “And then I immediately thought to myself, oh shit, he probably gets this at every party.” Ro gamely asked her why her brain was so unusual. She explained that she had had a stroke a couple of months earlier, and ever since had felt numb on the left side of her body. “And then he said, ‘Actually, I should study your brain.’ ”

Over the next couple of years Roush went to Ro’s laboratory several times for a variety of behavioral tests and brain scans. In one experiment, she sat with her hands resting on the arms of a chair, wearing a ring of electrodes on each of her middle fingers. Throughout the experiment, the rings would deliver a small electric current to her right hand, left hand, both hands, or neither hand, and Roush would tell the researchers when she felt a faint shock. Ro puzzled over that data for a long time. “She kept reporting she was feeling things even when we weren’t delivering any touch stimuli,” he says. “I couldn’t figure it out.”

Five years went by before Ro thought of a possible, if weird, explanation. During the experiment, a 500-millisecond warning tone had played at the beginning of each trial. Perhaps, he thought, Roush was responding to that sound. He dashed off an email to her. “Did you or do you feel any sensations on your hands in response to sounds?” he wrote. Roush responded right away: Yes, in fact, her skin was sensitive to sound. Her friends would sometimes needle her by making noises that set her off. And she had an awful reaction to the crisp drawl of Colonel St. James, a popular radio personality in Houston. “My entire body rebels at certain pitches, which I’ve noticed with radio announcers,” she told Ro. “I have to change the station.”

Ro realized then that Roush’s stroke had left her with synesthesia, a neurological mix-up of the senses.

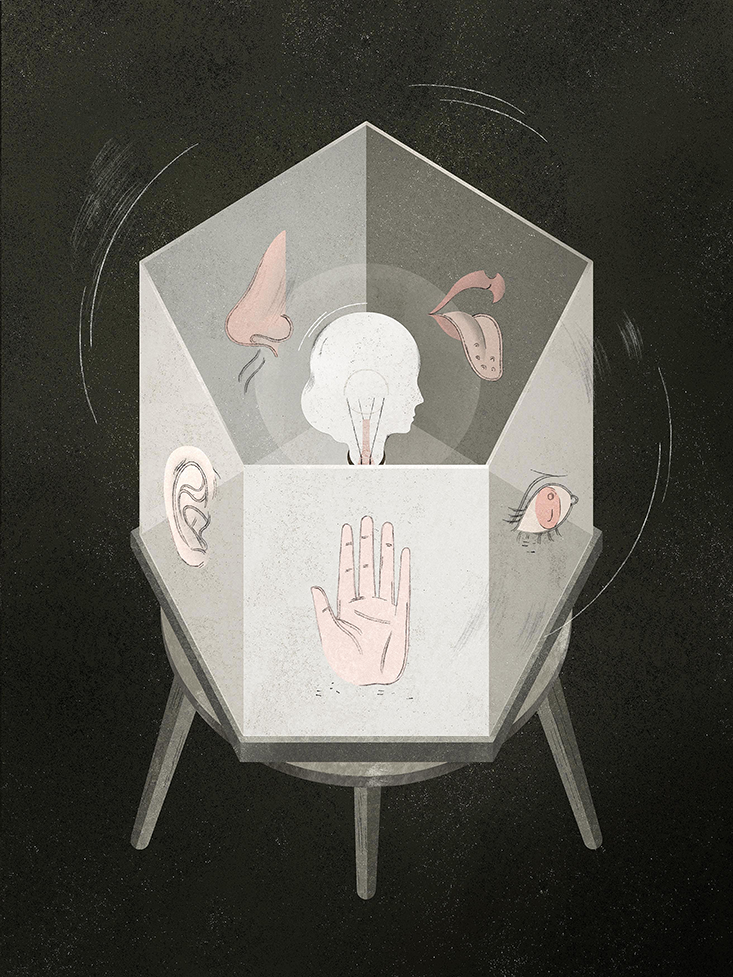

Humans have five distinct senses: sight, sound, touch, taste, and smell. For decades, researchers have thought that each sense is processed separately in the cortex, or outer layers of the brain, and then later integrated by separate brain areas. Cortical tissue near the back of the brain holds cells sensitive to vision, for example, while the area above the ear reacts to sounds. But recently, researchers have begun to question this so-called “uni-modal” model of sensory processing, suggesting instead that cortical regions respond to and integrate information from several senses at once.

In Roush, Ro saw an opportunity to study an extreme case of sensory mixing. Synesthesia is not well understood, but the condition has long been reported by creative types. The abstract painter Wassily Kandinsky, for example, saw “wild, almost crazy lines” sketched in his mind while listening to an opera, and claimed that as a child, he heard a hissing noise when mixing paint colors. Novelist Vladimir Nabokov saw the V in his name as “pale, transparent pink” and the N as a “greyish-yellowish oatmeal color.” Physicist Richard Feynman described the hues of equations. Psychologists dismissed these claims as hallucinations until the 1990s, when brain-scanning experiments on synesthetes confirmed that their sensory brain circuits take inputs from one sense and interpret them as coming from another.

Roush’s particular flavor of synesthesia, in which she feels sounds on her skin, had never been reported in the scientific literature. Ro first published her case in 2007 and has continued to study her, trying to pin down how this sensory mix-up happens in her brain.1 Through this work, Ro has developed an intriguing theory: that Roush’s merging of sound and touch is just an exaggerated version of what happens in all of our brains.

Most of us, after all, have felt the almost painful shiver of hearing fingernails scratching a blackboard, or have gotten the chills when Whitney Houston hits a high note. Given the similarities between the brain cells that process sounds and feelings, it’s even possible, Ro says, that a sense of hearing evolved from a sense of feeling. That shared ancestry might make these senses more cooperative than scientists ever imagined. “Certain areas of the brain can merge with one another, or one area can acquire another,” says Ro, now at The City University of New York. “We may all have some synesthesia to some extent.”

As early as the 1960s, studies of monkey brains suggested that the cortex processes sensory information in discrete chunks, and in a hierarchical order within those chunks.

Take vision, for example, the most-studied sensory system. When a monkey looks at something, light rays hit the back of its eyes, where specialized cells transform the light into electrical signals. Those messages then move from the eyes to the thalamus, a relay center smack in the middle of the brain. From the thalamus, the message goes to an area at the back of the brain called the primary visual cortex, or V1. Cells in this area are sensitive to crude visual features—such as color and the spatial orientation of lines. After hitting V1, information gets further processed and distributed to regions called V2, V3, V4, and V5. For example, cells in V5 integrate visual signals from the lower regions to help the animal perceive the speed and direction of moving objects. Outside of the visual cortex, the processed information mixes with input from other senses.

Researchers have mapped the same sorts of hierarchies for other sensory systems. All of that work suggests that each specialized area of the primary cortex processes only one sense at a time, and that the brain doesn’t mix different types of sensory information until it reaches the higher-order areas.

That dogma began to erode in the late 1990s, when neuroscientist Charles Schroeder made a mistake that turned into scientific serendipity. Working at the Nathan S. Kline Institute for Psychiatric Research in New York, Schroeder and his colleagues were using monkeys to learn about how attention influences the perception of sound. For these experiments, they would place an electrode in a monkey’s primary auditory cortex, and record the electrical firings from cells as the animal listened to clicks and beeps.

The primary auditory cortex lies right below the somatosensory cortex, which typically responds to touch (somato comes from the Greek word for body). One day the researchers placed the electrode in a patch of cells that vigorously fired when the monkeys were touched, so they assumed the electrode was in the somatosensory cortex. They soon realized, however, that it was deeper than they thought, in the auditory cortex, which meant the region was responding to both sound and touch. “We said, oh my god we have to study this,” Schroeder recalls. After the initial discovery, his team looked further into the connections between these two regions, ultimately showing that parts of the somatosensory cortex also respond to sounds. “The systems are really densely interconnected,” he says.

Around the same time, other labs were also beginning to report that sensory cortices process more than one sense. For example, in brain scans, study participants’ auditory cortices responded to speech sounds differently when they simultaneously looked at letters, and their primary olfactory cortices, regions that process smells, activated when they looked at words describing an odor—such as “cinnamon” or “garlic.”

Sensory mergers seemed most dramatic in unusual patients whose brains had reorganized after a loss of sensory inputs. One report, for example, described a patient known as PH who began losing his vision in childhood and was completely blind by age 40.2 Two years later, PH developed a kind of synesthesia in which reading Braille with his hands produced “intrusive visual sensations,” including colored dots that would shift in his mind’s eye. When researchers tapped on the man’s fingers in the laboratory, he experienced a dramatic “jumping” and “swirling” of his visual field.

Thinking about these rare patients and Schroeder’s monkeys, Ro decided to investigate whether Roush’s stroke had rewired her sensory circuits. Ro scanned her brain while she listened to animal calls, power tools, pure tones, and other sounds. In 2008, he reported that sounds not only activated Roush’s primary auditory cortex, but they also tickled her somatosensory cortex.3 She already knew, of course, that sounds sometimes triggered strange sensations on the skin of her arm and hand. But the scans gave neurological proof that her brain was interpreting sound as touch.

Some researchers argue that true synesthetes like Roush are probably exceptions to the rule of uni-modal sensing, rather than amplified examples of the multi-modal sensing that happens in everybody. Ranulfo Romo, a neuroscientist at the National Autonomous University of Mexico in Mexico City, has found evidence to support the traditional model of sensory processing. He and his colleagues trained monkeys to discriminate between two different sound frequencies (low versus high) and between two different touch frequencies (slow versus fast pulses) delivered to the end of their fingertips. Neurons in the monkeys’ auditory cortices produced distinct firing patterns for the two different sounds, but showed no such encoding of the touch frequencies. Likewise, neurons in the somatosensory cortex selectively responded to the different touch stimuli but not to the sound.4

Based on those data, Romo is ready to dismiss the idea that primary sensory cortices are multisensory. Studies showing otherwise might have picked up on slight stimulations occurring in other areas, but they don’t indicate that those areas are actually cross-processing sensory information, he says. “My position is: The visual cortex is very visual, the auditory cortex processes only auditory information, and the somatosensory cortex processes only somatosensory input,” he says.

Schroeder offers a middle ground: The primary cortices are indeed specialized for one sense, but the inputs from other senses have what scientists call a “modulatory effect,” meaning that they subtly adjust the signal from the primary sense. It’s similar to talking to someone at a noisy cocktail party, when reading their lips helps you to make sense of their speech, Schroeder explains. As one of his recent studies showed, lip reading during spoken conversations modulates rhythms of activity in the auditory cortex. “It’s not like the auditory cortex itself is seeing,” he says. “It has a modulatory effect of boosting the gain of your visual perception.”

In normal people, these mergers may be slight. But if one sensory cortex is impaired from a stroke or another brain trauma, Ro suspects the neural connections between it and other regions may increase during the healing process. Perhaps this is what happened to Roush after the stroke wiped out brain cells in the right side of her thalamus, the relay center between our sensory organs and the cortex. For the first year and a half after it happened, she lost a lot of feeling on her left side (because of how our sensory nerves are wired, the right thalamus affects the left side of the body). Her sense of touch wasn’t entirely gone, but if something tapped her, it felt much lighter than it did before her stroke.

The damaged part of her thalamus, Ro reasons, must have been responsible for transmitting touch information to the somatosensory cortex. With that region suddenly quiet, it seems that its next-door neighbor, the primary auditory cortex, took over the neural real estate. After a while, auditory inputs began innervating her somatosensory cortex, causing her odd feelings of touch. Last year, Ro backed up his theory with another imaging study of Roush’s brain. He used diffusion tensor imaging, a type of brain imaging that tracks the bundles of nerves connecting various regions of the brain. He found robust connections between her auditory cortex and somatosensory cortex, supporting the idea that the former had taken over the latter.5

But here’s the rub: Ro’s study also found connections between these regions in 17 normal controls. The links were not as strong as those he saw in Roush’s brain, but they were there all the same. “There seems more and more evidence now that the senses are very interconnected,” he says.

If senses are indeed connected, the next question is whether they impinge on each other equally. A wide variety of research—not only from neuroscience, but also from evolutionary biology and electrical engineering—suggests sound and touch share deep connections. The biological scaffolding of both systems is quite similar, especially compared with the other senses. Vision depends on eye cells that are sensitive to light, while our taste buds and noses detect chemicals. In contrast, hearing and touch rely on mechanical tweaks to cells. In the inner ear, so-called “hair cells” have skinny fingers that move in response to sound waves. Cells in the skin contain similar “mechanoreceptors” that respond to physical pressure.

Some studies on the evolution of fish suggest that hair cells evolved from cells in a sensory organ that detects vibrations in the surrounding water. And because humans and other mammals evolved from fish-like creatures, it’s possible that these early vibrational sensors gave rise to our current sense of hearing. Ro speculates that over the course of millions of years, “our ability to hear distant information may have come about by adapting the same mechanisms that process touch.”

Although speculative, it’s possible that what scientists now interpret as a merger of sound and touch might actually be a reflection of an earlier state in which the two senses were one. How the brain regions that process those senses interact today, however, remains somewhat mysterious. It could be that the region we call the auditory cortex doesn’t interpret sound, per se, but rather frequency information of any kind, whether it comes from sounds or from vibrations.

Bolstering that idea, acoustic engineers Louis Braida and Charlotte Reed of the Massachusetts Institute of Technology in Cambridge conducted a series of experiments in which they delivered simultaneous sounds and finger-pulses to volunteers. When the sound and touch frequencies matched, the volunteers heard sounds that were inaudible on trials without the added pulsing. But how this integration works in the brain is only beginning to be understood.

A benefit of the relationship between the auditory and somatosensory cortices is that the connections that develop between them after injury might help patients regain touch or hearing, albeit in slightly altered ways. Fourteen years have passed since Roush’s stroke, and her synesthetic brain continues to adapt in ways that are both frustrating and enriching. About five years ago she began feeling hypersensitive to sounds. Even in her quiet apartment she’ll sometimes wear earplugs all day in order to concentrate. But on the positive side, Roush, a dancer, has become more sensitive to the rhythm of music. “I sense it much more intensely and appreciate more simultaneous rhythms,” she says.

She attributes all of this to her brain fervently trying to use sound to get as much tactile information from the world as possible, since she still has reduced feeling on her left side. Sometimes this neural compensation feels like “trying to scrub a floor with a toothbrush,” she says. “But actually, even though the sensations aren’t usually all that strong, they are strong enough to tell me where my arm and head and leg are. And I guess that’s the point.”

Virginia Hughes is a science journalist based in Brooklyn.

References

1. Ro T. et al. Ann Neurol 62, 433-441 (2007). PubMed: http://www.ncbi.nlm.nih.gov/pubmed/17893864

2. Armel K.C. et al. Neurocase 5, 293-296 (1999). Abstract: http://www.tandfonline.com/doi/abs/10.1080/13554799908411982#.Uul1tmRdWm0

3. Beauchamp M.S. and Ro T. J. Neurosci 28, 13696-13702 (2008). PubMed: http://www.ncbi.nlm.nih.gov/pubmed/19074042

4. Lemus L. et al. Neuron 67, 335-348 (2010). PubMed: http://www.ncbi.nlm.nih.gov/pubmed/20670839

5. Ro T. et al. Cereb. Cortex 23, 1724-1730 (2013). PubMed: http://www.ncbi.nlm.nih.gov/pubmed/22693344