Do you know what information is? No worries if you don’t. Clarity on the concept is apparently hard to come by. In a May cover story, New Scientist wondered, “What is information?” The answer: “a mystery bound up with thermodynamics” that “seems to play a part in everything from how machines work to how living creatures function.” Plausible enough—genomes, immune systems, and brains all seem to process information.

Yet published the next week was an essay on Aeon by Robert Epstein, a senior research psychologist at the American Institute for Behavioral Research and Technology, explaining, “Your brain does not process information” (emphasis mine). “We are organisms, not computers. Get over it. Let’s get on with the business of trying to understand ourselves, but without being encumbered by unnecessary intellectual baggage. The IP [information-processing] metaphor has had a half-century run, producing few, if any, insights along the way. The time has come to hit the DELETE key.”

Has it, though? I’m not sure I want to “get over” my identity as an information processor just yet. Epstein’s conclusion did provoke some unambivalent comments. Sergio Graziosi, for example, a molecular neurologist turned software engineer, felt, after reading Epstein’s “The Empty Brain,” that he had to post a blog entry titled “Robert Epstein’s Empty Essay,” arguing that “virtually every key passage is mistaken.” David Krakauer, a complex systems theorist at the Santa Fe Institute and an articulate provocateur, agrees. On Tuesday, he joined the neuroscientist Sam Harris on his Waking Up podcast to, among other things, explain why. Krakauer took issue with Epstein’s contention that our understanding of the brain as a computer will inevitably give way to another metaphor. “In the mid-1800s,” Epstein writes, “inspired by recent advances in communications, the German physicist Hermann von Helmholtz compared the brain to a telegraph.”

Sure, Krakauer tells Harris, we do have a tendency to be “epistemologically narcissistic,” projecting our latest and greatest technology as a template onto the brain in hopes of understanding it. But where Epstein goes wrong is in succumbing to what Krakauer likes to call “M3 mayhem.” The mayhem comes from not understanding the difference, he says, between the three Ms: mathematics, mathematical models, and metaphors. This misunderstanding arises, in turn, from confusion about the technical and colloquial meaning of scientific terms—in Epstein’s case, “computation” and “information.” Arguments like Epstein’s “flare up,” Krakauer says, as a result of mixing up the technical with the metaphorical, and the mathematical with the colloquial.

Computation actually has a specific definition (not necessarily related to information processing) formulated by Alan Turing, forefather of the modern computer. In 1928, the mathematician David Hilbert asked whether there could be a mechanical procedure capable of deciding the truth of any mathematical statement. Turing, in answering Hilbert in 1936, “invented a mathematical model that we now know as the Turing machine,” says Krakauer. It showed that some mathematical problems are fundamentally uncomputable: no mechanical procedure can settle, in every case, whether a given statement is true or false. Later, in the 1940s, Turing realized that the Turing machine was not just a model for solving math problems, says Krakauer: “it was actually the model of problem solving itself, and the model of problem solving itself is what we mean by computation.”
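To make Turing’s model a little more concrete, here is a minimal sketch of a one-tape Turing machine simulator. Everything in it is an illustrative assumption rather than anything from Krakauer or from Turing’s paper: the function name `run_turing_machine`, the blank symbol `_`, and the binary-increment rule table are all hypothetical. The point is only that a “machine” in Turing’s sense is nothing more than a finite table of (state, symbol) → (write, move, next state) rules applied to an unbounded tape.

```python
from collections import defaultdict

def run_turing_machine(rules, tape, state="start", halt="halt", max_steps=10_000):
    """Simulate a one-tape Turing machine (illustrative sketch).

    rules maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is -1 (left) or +1 (right). Unvisited cells read as "_".
    """
    cells = defaultdict(lambda: "_", enumerate(tape))  # unbounded tape
    head, steps = 0, 0
    while state != halt:
        # Halting is undecidable in general, so a simulator can only cap steps.
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        new_symbol, move, state = rules[(state, cells[head])]
        cells[head] = new_symbol
        head += move
        steps += 1
    lo, hi = min(cells), max(cells)
    return "".join(cells[i] for i in range(lo, hi + 1)).strip("_")

# Hypothetical rule table: add 1 to a binary number written on the tape.
INCREMENT = {
    ("start", "0"): ("0", +1, "start"),  # scan right to the end of the number
    ("start", "1"): ("1", +1, "start"),
    ("start", "_"): ("_", -1, "carry"),  # past the end: step back and add 1
    ("carry", "1"): ("0", -1, "carry"),  # 1 + carry = 0, carry continues left
    ("carry", "0"): ("1", -1, "halt"),   # 0 + carry = 1, done
    ("carry", "_"): ("1", -1, "halt"),   # ran off the left edge: new leading 1
}

print(run_turing_machine(INCREMENT, "1011"))  # 11 + 1 = 12
```

Running it on `"1011"` (11 in binary) leaves `"1100"` (12) on the tape. The `max_steps` guard is a design concession that echoes Turing’s result: since no general procedure can tell in advance whether an arbitrary rule table will ever halt, a simulator can only give up after some budget.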

But Epstein uses “computation” as if it’s synonymous with any sort of information-processing, in effect setting up a straw man: “The idea,” writes Epstein, “that humans must be information processors just because computers are information processors is just plain silly.” This argument, says Krakauer, “is so utterly confused that it’s almost not worth attending to.”

The brain does process information, Krakauer argues, because information is in fact “the negative of thermodynamic entropy,” as Claude Shannon, the founder of information theory, realized. Entropy is the degree of disorder or randomness in a system, so information, says Krakauer, amounts to “the reduction of uncertainty,” or disorder, in a system. Our brains, he says, are constantly reducing uncertainty about the world, for example by transforming sensory inputs, like light hitting our eyes and atmospheric vibrations bumping our ears, into the perceptual outputs of a visual scene accompanied by sound. In a sense, every waking moment is an effort to stave off and diminish the unpredictability of the world.
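The idea of information as “the reduction of uncertainty” can be shown with a few lines of arithmetic. This is a minimal sketch using Shannon’s entropy formula, H = −Σ p log₂ p, measured in bits; the eight-option scenario is a made-up assumption for illustration, and the sketch does not model the thermodynamic side of Krakauer’s claim.

```python
import math

def entropy_bits(probs):
    """Shannon entropy in bits; zero-probability outcomes contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical scenario: a stimulus is one of 8 equally likely options.
prior = [1 / 8] * 8
# A hypothetical observation then rules out all but 2 of them.
posterior = [1 / 2] * 2

h_before = entropy_bits(prior)       # uncertainty before observing
h_after = entropy_bits(posterior)    # uncertainty that remains afterward
information_gained = h_before - h_after

print(h_before, h_after, information_gained)
```

Before the observation there are 3 bits of uncertainty; afterward 1 bit remains, so the observation supplied 2 bits of information. A fully certain outcome, such as `[1.0]`, has zero entropy: nothing is left to learn.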

“It turns out that you can measure this reduction mathematically, and the extent to which that’s useful is proved by, essentially, neuroprosthetics,” says Krakauer. “The information theory of the brain allows us to build cochlear implants; it allows us to control robotic limbs with our brains. So, it’s not a metaphor! It’s a deep mathematical principle that allows us to understand how the brain operates and re-engineer it.”
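One standard way to “measure this reduction mathematically” is mutual information, which quantifies how much observing one variable (say, a neuron’s response) reduces uncertainty about another (the stimulus). The sketch below is only a toy: the stimulus/response tables are invented for illustration and are not data from cochlear implants or any real prosthetic, and the function name `mutual_information_bits` is hypothetical.

```python
import math

def entropy_bits(probs):
    """Shannon entropy in bits; zero-probability outcomes contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def mutual_information_bits(joint):
    """I(S;R) = H(S) + H(R) - H(S,R), equivalently H(S) - H(S|R).

    joint[s][r] is the probability of stimulus s together with response r.
    """
    p_s = [sum(row) for row in joint]          # marginal over stimuli
    p_r = [sum(col) for col in zip(*joint)]    # marginal over responses
    p_sr = [p for row in joint for p in row]   # flattened joint distribution
    return entropy_bits(p_s) + entropy_bits(p_r) - entropy_bits(p_sr)

# Hypothetical two-stimulus, two-response tables:
reliable = [[0.5, 0.0], [0.0, 0.5]]        # response always identifies the stimulus
noisy = [[0.4, 0.1], [0.1, 0.4]]           # response usually identifies it
unrelated = [[0.25, 0.25], [0.25, 0.25]]   # response carries no information

for name, table in [("reliable", reliable), ("noisy", noisy), ("unrelated", unrelated)]:
    print(name, round(mutual_information_bits(table), 3))
```

A perfectly reliable response removes the full 1 bit of uncertainty about which of two stimuli occurred, an unrelated one removes none, and a noisy one falls in between (about 0.28 bits here).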

For more Krakauer, be sure to check out his entire chat with Nautilus.

Brian Gallagher, an assistant editor at Nautilus, edits the Facts So Romantic blog. Follow him on Twitter @bsgallagher.

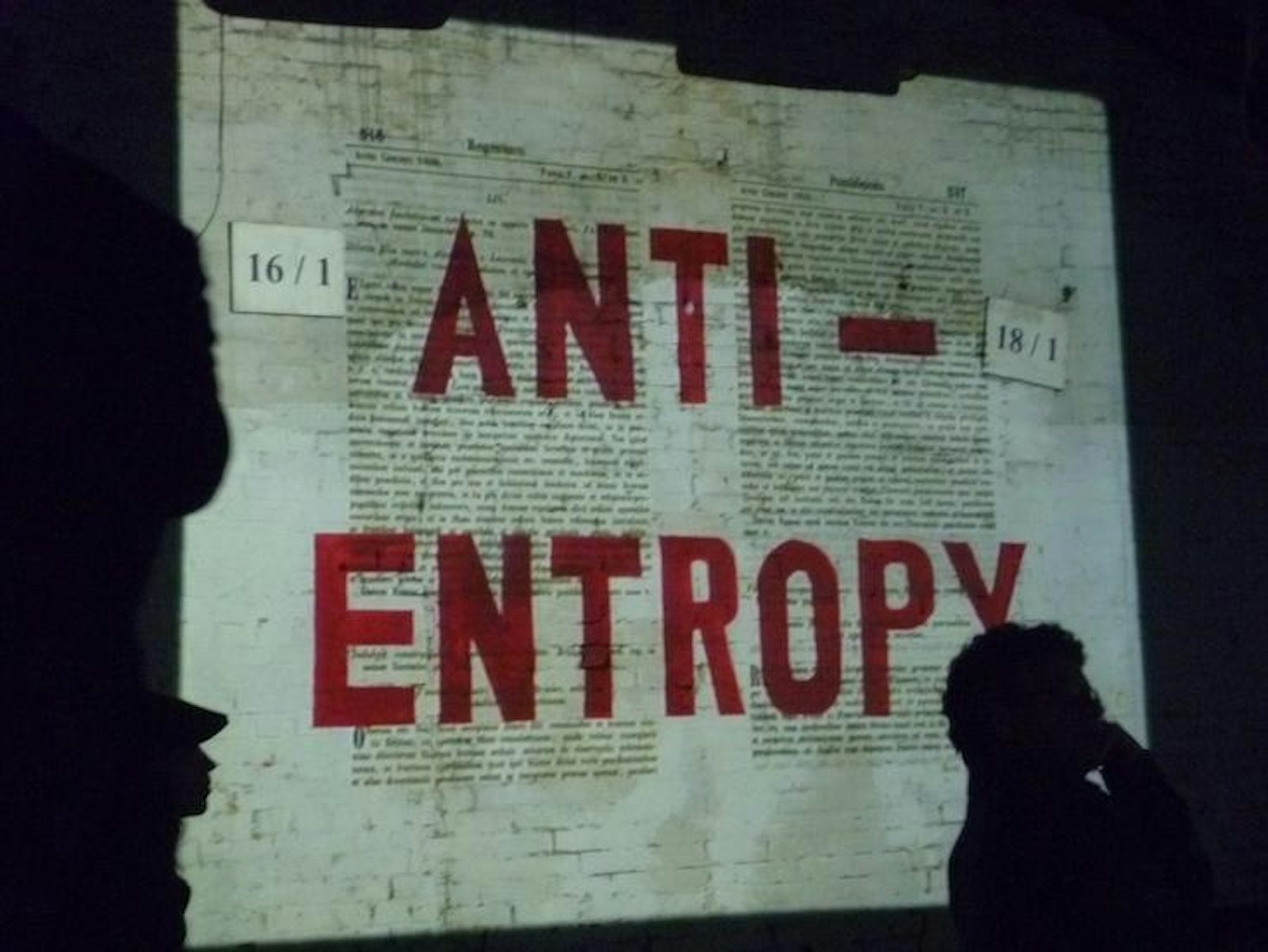

The lead photograph is courtesy of 51% Studios Architecture via Flickr.