Infomercialist and pop psychologist Barbara De Angelis puts it this way: “Love is a force more formidable than any other.” Whether you agree with her or not, De Angelis is doing something we do all the time—she is using the language of physics to describe social phenomena.

“I was irresistibly attracted to him”; “You can’t force me”; “We recognize the force of public opinion”; “I’m repelled by these policies.” We can’t measure any of these “social forces” in the way that we can measure gravity or magnetic force. But not only has physics-based thinking entered our language, it is also at the heart of many of our most important models of social behavior, from economics to psychology. The question is, do we want it there?

It might seem unlikely, even insulting, to suggest that people can be regarded as little magnets or particles dancing to unseen forces. But the danger is not so much that “social physics” is dehumanizing. Rather, it comes if we do not use the right physics in thinking about society.

Physicists have learned that natural systems can’t always be described by classical, equilibrium models in which everything reaches a steady, stable state. Similarly, social modelers must beware of turning society into a deterministic Newtonian machine by applying inappropriate physical models that assume society has only one way of working properly. Society rarely finds equilibrium states, after all. Social physics needs to reflect that very human trait: The capacity to surprise.

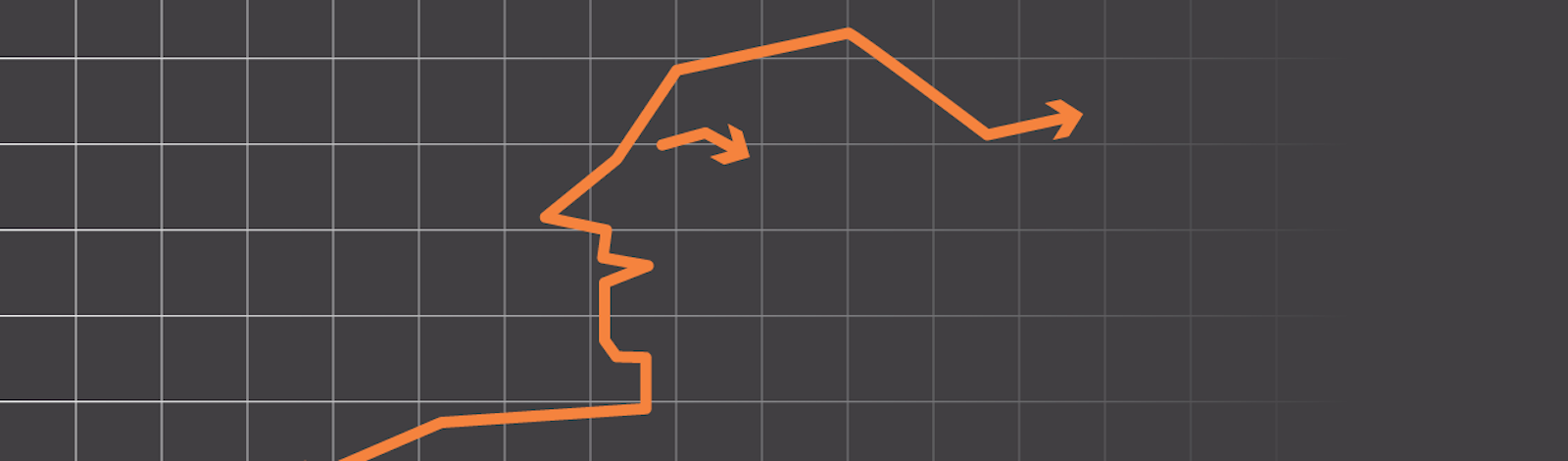

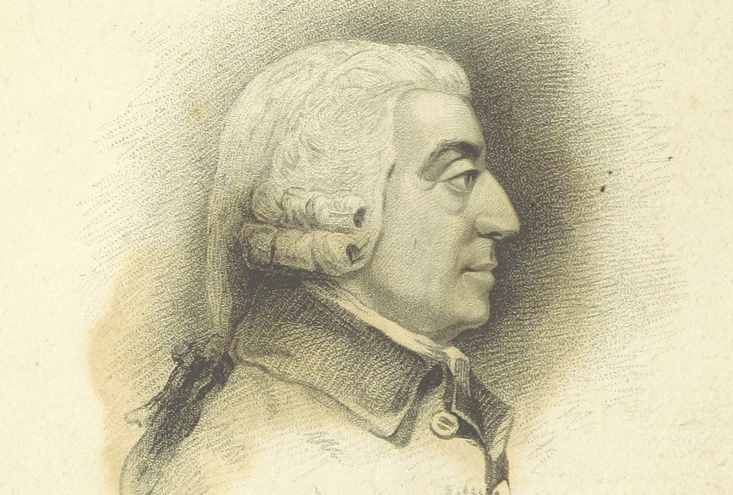

Both the attraction and the pitfalls of a physics of society are illustrated in economics. Adam Smith never actually used the term “market forces,” but the analogy was clearly in his mind. Noting how market prices seem to be drawn to some “natural” value, he compared this to the effect of gravity that Isaac Newton had explained as an invisible force a century earlier. Smith also said in his seminal Wealth of Nations that an “invisible hand” maintains equilibrium in the economy.

Smith was not alone in following Newton. Newtonian clockwork mechanics were, at the time, regarded as the model to which all understanding of nature should aspire, perhaps even including the mechanics of the human body and of society. In a poem extolling the universal power of the Newtonian picture, the French-born natural philosopher Jean Théophile Desaguliers wrote in 1728 that the notion of a gravity-like force of attraction “is now as universal in the political, as the philosophical world.”

By the 19th century it was widely believed that economics was as law-like as astronomy, and that any interference in the operation of these laws (by attempts to regulate markets, say) was not only unwise but contrary to nature and positively immoral. As the American writer Ralph Waldo Emerson put it,

“The laws of nature play through trade, as a toy-battery exhibits the effects of electricity. The level of the sea is not more surely kept, than is the equilibrium of value in society by the demand and supply: and artifice or legislation punishes itself, by reactions, gluts, and bankruptcies. The sublime laws play indifferently through atoms and galaxies.”

An obvious objection to this “physicization” of economics is that while the planets are inanimate spheres following their steady elliptical orbits in space, movements in the economy are dictated by the whims—what John Maynard Keynes later called “animal spirits”—of people. Surely they can’t be governed by the same sort of mathematical rules, the same clockwork predictability?

That capriciousness of human nature is precisely what 18th- and early 19th-century scientists thought they had tamed with statistics. Many were astonished to find that acts of human volition, such as crimes and suicides, or events seemingly dictated by imponderable, random circumstances, such as undelivered letters in the mail, followed reliable statistical rules. Not only did the averages seem to stay fairly constant, but the small deviations from the averages also fell onto a smooth mathematical curve, called the bell curve or the gaussian, after the German mathematician Carl Friedrich Gauss. It’s no coincidence that some of the earliest work on statistics in the social sciences was done by people trained in the physical sciences, such as the Frenchman Pierre-Simon Laplace and the Belgian astronomer Adolphe Quetelet.

Today there’s nothing surprising about the fact that several social phenomena fit gaussian statistics, because we know that these are simply what result from the quantitative outcomes of events that occur independently from one another—they’re a reflection of the fact that randomness really does often dictate what happens in these situations. But to 19th-century scientists this fact seemed to support the idea that there are firm laws of society just like the Newtonian laws of mechanics. That belief bolstered the view of the French philosopher Auguste Comte that the sciences could be ordered in a hierarchical ranking, all of them law-like and predictable (once we understood them well enough) and modeled after Newtonian physics, which stood at the foundation. Comte called for a “social physics” that would complete the project Newton had begun.
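
The point about independent events can be checked with a toy simulation. The sketch below is only an illustration under made-up assumptions: the population size, the 10 percent chance of each person acting, and the function names `yearly_counts` and `fraction_within` are all invented here and are not taken from any historical data set. Each simulated person decides independently, and the yearly totals end up clustered around the mean in roughly the proportions a bell curve predicts.

```python
import random
import statistics

def yearly_counts(n_people=1000, p_event=0.1, n_years=1000, seed=0):
    """Count how many of n_people independently 'act' in each simulated year."""
    rng = random.Random(seed)
    return [sum(rng.random() < p_event for _ in range(n_people))
            for _ in range(n_years)]

def fraction_within(counts, k):
    """Fraction of years whose count lies within k standard deviations of the mean."""
    mean = statistics.mean(counts)
    sd = statistics.pstdev(counts)
    return sum(abs(c - mean) <= k * sd for c in counts) / len(counts)

counts = yearly_counts()
print("average per year:", statistics.mean(counts))
print("within 1 sd:", fraction_within(counts, 1))  # a bell curve gives roughly 0.68
print("within 2 sd:", fraction_within(counts, 2))  # a bell curve gives roughly 0.95
```

Nothing in the code tells the totals to be orderly. The regularity comes purely from adding up many independent choices, which is why it says little about any deeper "law" of society.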

If that was so, where did the laws of economics come from? Evidently they stemmed from the innumerable actions of individual traders, dealers, and investors in the marketplace. But how could we factor in those all-too-human decisions? Once again, physics seemed to have the answer. In the mid-19th century statistical reasoning was everywhere, and the scientists James Clerk Maxwell and Ludwig Boltzmann applied it to the behavior of matter. Their efforts to understand how the bulk properties of gases (like pressure, temperature, and volume) arose from the inscrutable motions of countless molecules in frantic thermal motion gave rise to the discipline of statistical mechanics, which has underpinned the microscopic theory of matter ever since. The point was that you didn’t need to know all the details of what every molecule was doing. The individual quirks averaged out, and out of microscopic chaos came macroscopic smoothness and predictability.

These ideas were rapidly absorbed into economics. In 1900 a young French mathematician named Louis Bachelier derived what amounted to a theory of random walks, five years before Albert Einstein provided a rigorous description of them to explain the phenomenon of Brownian motion: the wiggling dance of tiny grains suspended in water. But Bachelier was not using this idea to understand specks of matter; he applied it to the fluctuations of stock markets.

Much more influential in the long run was the work of the American scientist Josiah Willard Gibbs, who in the early 1900s outlined the framework of statistical mechanics that is still used today. Gibbs’ student and protégé Edwin Bidwell Wilson became the mentor of economist Paul Samuelson, whose doctoral dissertation became the basis for his aptly named 1947 book Foundations of Economic Analysis. Here Samuelson used statistical arguments like those of Gibbs to more or less construct from scratch the discipline of microeconomics, which explained how individual actions of agents lead to gross economic movements.

It sounds like a great result: ideas in physics proving useful elsewhere. The trouble is, it was the wrong physics for describing a system like the economy. The statistical mechanics that Gibbs and others developed was a theory of systems composed of many particles when they are at equilibrium: that is, when they have settled into a steady, stable state, as molecules do, say, in a glass of water at a uniform temperature. The Newtonian paradigm, and Adam Smith’s invisible hand, had encouraged the idea that markets, too, settle into a stable equilibrium in which prices find a natural value where supply balances demand. Markets should then be steady.

You might have noticed that they’re not. Of course, economists have noticed that too. But the traditional view is that fluctuations in prices are largely just random “white noise,” like the tiny place-to-place variations in temperature we can measure in a glass of water or the electrical noise in a circuit. But when a crash occurs, it’s almost like a thermometer in a beaker of water abruptly soaring toward boiling point or plunging toward freezing. How can that happen? The standard explanation in economic theory is that markets are rocked by external events—political decisions, technological changes, natural disasters, and so on—and that these can disturb what would otherwise be a steady market.

But the statistics of fluctuations in the economy never really look like the random white noise of an equilibrium state. They are much more spiky than that—as economists put it, they’re “heavy-tailed.” That’s been long known, but there hasn’t been a clear explanation for it. All the same, some key theories in economics, such as the Black-Scholes formula used to calculate the “right” prices in the risky derivatives market, ignore the heavy tails and pretend the fluctuations are after all like gaussian white noise. The inaccuracies this neglect introduces—basically a denial that the market can undergo big fluctuations fairly often—have been implicated as one element behind the catastrophic 2008 crash.
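
To get a feel for what "heavy-tailed" means in practice, here is a rough sketch comparing gaussian noise with one textbook heavy-tailed distribution, the Student-t with three degrees of freedom. That choice is an assumption made purely for illustration, not a claim about what real market data follow, and the helper names and the "five times the typical move" threshold are likewise invented for this example.

```python
import math
import random
import statistics

def gaussian_draws(n, rng):
    """Ordinary bell-curve noise."""
    return [rng.gauss(0.0, 1.0) for _ in range(n)]

def student_t_draws(n, rng, df=3):
    """Student-t samples: a normal draw divided by sqrt(chi-square / df)."""
    out = []
    for _ in range(n):
        chi2 = sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(df))
        out.append(rng.gauss(0.0, 1.0) / math.sqrt(chi2 / df))
    return out

def big_move_fraction(xs, multiple=5.0):
    """Fraction of draws bigger than `multiple` times the typical (median) size."""
    typical = statistics.median(abs(x) for x in xs)
    return sum(abs(x) > multiple * typical for x in xs) / len(xs)

rng = random.Random(0)
calm = big_move_fraction(gaussian_draws(100_000, rng))
spiky = big_move_fraction(student_t_draws(100_000, rng))
print("gaussian: share of big moves     ", calm)
print("heavy-tailed: share of big moves ", spiky)
```

Both series look similar most of the time, since their typical moves are scaled to be comparable. The difference lies in the extremes: in the heavy-tailed series, moves several times the typical size turn up far more often than the gaussian picture allows.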

“This equilibrium shortcut was a natural way to examine patterns in the economy and render them open to mathematical analysis,” says W. Brian Arthur, an economist at the Santa Fe Institute in New Mexico. “It was an understandable—even proper—way to push economics forward. Its central construct, general equilibrium theory, is not just mathematically elegant; in modeling the economy, it gives us a way to picture it, a way to comprehend the economy in its wholeness.” But the price we pay, Arthur says, is that this model economy “lives in a Platonic world of order, stasis, knowableness, and perfection. Absent from it is the ambiguous, the messy, the real.”

It’s now clear that economic markets show all the telltale signs, familiar to physicists, of being a system that is not in equilibrium. That means Gibbs’ statistical physics just isn’t the right model. What’s more, price fluctuations are best explained not as the aggregate of many random, independent decisions, ruffled by external shocks to the system—the standard model—but as largely the outcome of the internal, ever-active dynamics of the market, in which feedback makes decisions inter-dependent. Big fluctuations such as over-valuations and crashes seem to come from herding effects: Everyone does what they see others doing. This is obvious enough from the real world, and it is a familiar idea to economists too—it is partly what Keynes had in mind with his “animal spirits.” Non-equilibrium economic models that allow for feedback and knock-on propagation of agents’ decisions can explain such things.

Nevertheless, many economists have resisted importing the tools that modern statistical physics (which now happily works with non-equilibrium systems too) offers to describe that situation. Why wouldn’t they do that? The answer is complicated. For Samuelson, non-equilibrium states seemed to defy intuition. “Positions of unstable equilibrium,” he wrote in 1947, “even if they exist, are transient, non-persistent states … How many times has the reader seen an egg standing upon its end?” Yet we now know that such states are all around us: in ecosystems, in the weather, and in society.

Academic inertia may also have a lot to do with it. Having invested so heavily in the tidy, solvable equilibrium models that Samuelson and others created, economists have a lot to lose. I have been told that there exist economic journals (at least, there did a decade ago) that simply refuse to consider papers whose starting position is not an equilibrium model. That is certainly “dismal science.”

And there may also be an ideological aspect at play here. Academic economists tend to react with outrage at the suggestion that there is ideology in their mathematical models. But let’s put it this way: If you were an economic pundit, a politician, or a banker predisposed to believe that “the market knows best” and that regulation is inherently bad, you would easily enough find justification for your point of view in equilibrium theories of economics. “If we assume equilibrium, we place a very strong filter on what we can see in the economy,” says Arthur. “Under equilibrium by definition there is no scope for improvement or further adjustment, no scope for exploration, no scope for creation, no scope for transitory phenomena, so anything in the economy that takes adjustment—adaptation, innovation, structural change, history itself—must be bypassed or dropped from theory.”

Whatever the reason, the consequences are pretty dire. The myth of market equilibrium persuaded some political leaders to proclaim, only months before the 2008 crash that almost bankrupted the global economy, that boom and bust cycles were now a thing of the past. Even if no one tends to make such claims today, there is little sign that the patent inadequacy of current economic models has led to much soul-searching in the traditional heartlands of the discipline. So we’ve little reason to hope that it will offer more reliable guidance in the future.

Physicists have been working for nearly a century to devise alternatives to the equilibrium models that failed us in 2008. The Norwegian-born scientist Lars Onsager, surely one of the least-known geniuses of the 20th century, arguably began the effort in the 1930s. He showed how, for small departures from an equilibrium state, there exist mathematical relationships between the forces driving the system away from equilibrium (a gradient in temperature, say) and the rates of the processes that result. For this work, Onsager received the Nobel Prize in Chemistry in 1968.

Another chemistry Nobel went to Russian-born Ilya Prigogine in 1977 for extending non-equilibrium thermodynamics further. Prigogine argued that, again if you’re not too far from true equilibrium, a system adopts the state in which entropy—crudely speaking, disorder—is generated at the slowest rate. He also demonstrated that, as the driving force away from equilibrium increases, systems may undergo abrupt changes in their overall state and mode of organization, rather like the switch between a solid and a liquid. What’s more, these non-equilibrium states aren’t necessarily disorderly and chaotic, but can have a surprising amount of structure in them.

All this is borne out by experience. It has been known since the 19th century that if you heat a pan of liquid from below, so that the lower, hot liquid becomes less dense and rises by convection, then above a certain heating threshold the convective motions can become organized into cells, circulating the fluid from bottom to top and back down again. And the cells aren’t random; in the right circumstances they can be arranged in a very regular pattern, such as a series of stripe-like rolls or a lattice of hexagons. These states are not in equilibrium; if they were, there wouldn’t be any convective motion in the first place. But they’re pretty orderly. They are an example of what Prigogine called “dissipative structures”: non-equilibrium states that dissipate the energy driving the system out of equilibrium. We can see such organized, persistent flow patterns in the convective circulation of the earth’s oceans and atmosphere.

There’s plenty still to be understood about non-equilibrium physics. One hot topic since the 1990s is the understanding of “critical states” out of equilibrium: states that are constantly undergoing big fluctuations in the way the components are organized, like a growing pile of grains that experiences repeated avalanches. Such states seem to occur in many natural phenomena, including biological ones such as insect swarms and patterns of brain activity, and it has been proposed that the economic system might be perpetually in a critical state too (in this technical sense, although right now that seems colloquially apposite). Researchers such as Christopher Jarzynski at the University of Maryland and Gavin Crooks at the Lawrence Berkeley National Laboratory in California are trying to put non-equilibrium thermodynamics on the same kind of microscopic foundations as those that Maxwell and Gibbs constructed for equilibrium thermodynamics: understanding how the interactions and motions of the component parts give rise to large-scale behavior.
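
The growing pile of grains can be mimicked with a few lines of code. What follows is a minimal sketch of a standard toy "sandpile" from the physics literature, in which any cell holding four or more grains topples and passes one grain to each neighbor. The grid size, the number of grains, and the function names are arbitrary choices made here for illustration, not a reconstruction of any particular study.

```python
import random
import statistics

def drop_grain(grid, row, col, threshold=4):
    """Add one grain, topple until the pile is stable, return the number of topplings."""
    size = len(grid)
    grid[row][col] += 1
    unstable = [(row, col)]
    topplings = 0
    while unstable:
        r, c = unstable.pop()
        if grid[r][c] < threshold:
            continue  # already relaxed by an earlier toppling
        grid[r][c] -= threshold
        topplings += 1
        if grid[r][c] >= threshold:
            unstable.append((r, c))
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < size and 0 <= nc < size:  # grains pushed past the edge are lost
                grid[nr][nc] += 1
                if grid[nr][nc] >= threshold:
                    unstable.append((nr, nc))
    return topplings

def run_sandpile(size=20, grains=20000, seed=0):
    """Drop grains at random sites and record the size of each avalanche."""
    rng = random.Random(seed)
    grid = [[0] * size for _ in range(size)]
    sizes = [drop_grain(grid, rng.randrange(size), rng.randrange(size))
             for _ in range(grains)]
    return sizes, grid

sizes, grid = run_sandpile()
print("typical (median) avalanche:", statistics.median(sizes))
print("average avalanche:", sum(sizes) / len(sizes))
print("largest avalanche:", max(sizes))
```

Every grain is added in exactly the same way, yet most do almost nothing while a few set off avalanches that sweep across much of the pile. No external shock is needed to produce the big events; they come from the pile's own internal dynamics.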

There has long been interest in devising such “bottom-up,” particle-like models to understand social phenomena. That there is a physics of social interaction was proposed in the 1940s, when the social psychologist Kurt Lewin argued that people are rather like charged particles psychologically attracted by “force fields” of belief, habit, and custom. And in 1971 an Australian scientist named L. F. Henderson said that the microscopic gas models of Maxwell and Boltzmann were a pretty good basis for understanding crowd behavior. He showed that people walking along a sidewalk have a statistical distribution of speeds with a bell-like shape just like that which Maxwell used for particles in his theory of gases. He also proposed that, faced with an obstacle like a bottleneck or ticket barrier, a “crowd gas” could condense into a denser state just like water vapor condensing into a liquid.

But the Maxwell-Boltzmann gas is an equilibrium notion. It’s now clear that many, if not most, real social phenomena occur out of equilibrium: They never settle down into some steady, unchanging pattern. Crowds ebb and flow, one moment congested, the next flowing freely. Theoretical models that treat moving people as interacting particles driven (typically by their own internal impulse to reach a goal) away from equilibrium have now been used by physicists to describe all kinds of crowd movements, from the “streaming” that occurs in busy corridors to the abrupt onset of dangerous “panic” motion in dense crowds.

These models are particularly valuable for describing traffic flows, and there seems to be a genuine analogy between the free, densely moving, and jam states of traffic and the phase transitions of freezing, melting, condensation, and evaporation that connect gases, liquids, and solids—except that in traffic the states are usually non-equilibrium, dissipative ones. Physics-based traffic models, in which vehicles are assumed to avoid collisions as if there were some repulsive force acting between them, show much of the complex behavior seen in real traffic, including “stop-and-go” waves of congestion.

Another rather well developed area of this social physics is the analysis of voting and opinion formation. This harks back to Lewin’s idea of “social forces”—except that now the question is often how individuals’ choices affect one another. We are influenced by what our peers do, and to a physicist this looks a little like the way magnetic atoms may become oriented with their poles aligned with their near-neighbors. Such magnetism-related models have been used to study such issues as how consensus emerges, how rumors spread and how extremist views might take root and propagate in a population. Opinion formation that happens within an external “biasing field” such as the influence of the media or advertising can also be studied this way.

Sometimes these magnetism-related models are equilibrium models: What you’re searching for is the stable state that the system settles into, rather as a magnet achieves a state of uniform global orientation when it is cooled down. In other words, the question is what the final consensus state is. But consensus—that’s to say, equilibrium—isn’t always possible. Instead there might be a constant reshuffling of boundaries between different domains where different views predominate, driven by a degree of randomness in the orientation of individuals.

These non-equilibrium models show us that such randomness needn’t produce total disorder: Because of the interactions between opinion-forming agents, islands of temporary consensus can develop, their size and shape constantly changing. In some situations this collective behavior produces the same kind of herding, copy-cat behavior that tips economic markets into big fluctuations. Such outcomes mean that, rather like avalanches set in train by a few tumbling stones (a classic scenario in non-equilibrium physics), small effects can have big consequences. These convulsions may not be predictable individually, but we can at least forecast the likelihood of them happening. Then we can build our social structures and institutions to take them properly into account. It’s rather like planning flood defenses: We need to know if rare big events due to large storms are expected every decade, or every millennium.
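
A bare-bones version of such a magnetism-style opinion model fits in a few lines. The sketch below uses the standard Metropolis update rule for a grid of two-state "magnets", relabeled here as opinions of +1 or -1. It is an assumption-laden toy rather than any specific published model of voting: the parameter names `noise` (standing in for temperature) and `bias` (standing in for an external field such as media influence), the grid size, and the function names are all invented for this example.

```python
import math
import random

def simulate_opinions(size=20, noise=1.0, bias=0.0, sweeps=200, seed=0):
    """Let agents on a wrap-around grid repeatedly reconsider a +1/-1 opinion."""
    rng = random.Random(seed)
    grid = [[rng.choice((-1, 1)) for _ in range(size)] for _ in range(size)]
    for _ in range(sweeps * size * size):
        r, c = rng.randrange(size), rng.randrange(size)
        neighbours = (grid[(r + 1) % size][c] + grid[(r - 1) % size][c]
                      + grid[r][(c + 1) % size] + grid[r][(c - 1) % size])
        # "Cost" of changing one's mind: positive when the switch goes
        # against the neighbours and the outside bias.
        cost = 2 * grid[r][c] * (neighbours + bias)
        # Easy switches always happen; costly ones happen only by chance,
        # and more often when the noise is high.
        if cost <= 0 or rng.random() < math.exp(-cost / noise):
            grid[r][c] = -grid[r][c]
    return grid

def average_opinion(grid):
    """Mean opinion: near +1 or -1 means consensus, near 0 means an even split."""
    cells = [cell for row in grid for cell in row]
    return sum(cells) / len(cells)

consensus = average_opinion(simulate_opinions(noise=1.0, bias=0.5))
split = average_opinion(simulate_opinions(noise=50.0, bias=0.0))
print("low noise, biased media:", consensus)
print("high noise, no bias:    ", split)
```

With little randomness and a nudge from outside, the population locks into near-unanimity. With a lot of randomness, no lasting consensus forms. The regime the paragraph above describes, with shifting islands of agreement, lies between these two extremes.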

In walking, driving, and voting, the range of alternative actions is rather small, and so making physics-based models doesn’t seem too much of a stretch. But some scientists are attempting to extend such models to more ambitious scenarios, such as war and terrorism, the historical evolution and growth of cities and states, or human habits related to climate change. Increasingly these efforts involve the collaboration of social scientists, computer scientists, game theorists, and physicists—they can’t be tackled from within academic siloes. As our ability to model complexity increases, we might hope for ever greater realism: Some researchers are already talking about imbuing their model agents with rudimentary simulations of decision-making neural hardware, so that they don’t just respond to “social forces” as a piece of iron does to a magnet.

A physics of society should be predictive, not prescriptive. It can’t tell us which actions and structures are just and moral. And if it includes subtle ideological biases, even if inadvertent, it risks becoming just another “theory” used to shore up political preferences. Done well, though, social physics might give us foresight, showing us the likely consequences of particular choices and helping us to plan social structures and institutions, laws and cities, to fit with human nature rather than trying to make human nature fit them. It wouldn’t be a crystal ball, but more akin to predicting the weather: a probabilistic, contingent description of perpetual change. After all, perhaps today more than ever we could do with some idea of what lies around the corner.

Philip Ball is the author of Invisible: The Dangerous Allure of the Unseen and many books on science and art.