Thanks to advances in machine learning over the last two decades, it’s no longer in question whether humans can beat computers at games like chess; we’d have about as much chance winning a bench-press contest against a forklift. But ask the current computer champion, Google’s AlphaZero, for advice on chess theory, like whether a bishop or a knight is more valuable in the Ruy Lopez opening, and all you’ll get is a blank stare from a blinking cursor. Theory is a human construct the algorithm has no need for. The computer knows only how to find the best move in any given position because it’s trained extensively—very extensively—by practicing against itself and learning what works.

Even with a lead time of 18 months, the neural network was able to see El Niño events coming.

The computing methods underlying success stories like AlphaZero’s have been termed “deep learning,” so-called because they employ complex structures such as deep neural networks with multiple layers of computational nodes between input and output. Here the input can be richly structured, like the positions of pieces on a chessboard or the color values of pixels in an image, and the output might be an assessment needed to make a decision, like the value of a possible chess move or the probability the image is a photo of a chihuahua instead of a blueberry muffin. Training the network typically involves tuning all of the available dials, the parameters of the model, until it does well against a set of training data and then testing its performance on a separate out-of-sample data set. One of the complaints about such systems is that, once their training is complete, they can be a black box; exactly how the algorithm processes the information it’s given, and why, is often shrouded in mystery. (When all that’s at stake is a chess game, this is no great concern, but when the same techniques are used to determine people’s creditworthiness or likelihood of committing a crime, say, the demand for accountability understandably goes up.) The more layers of nodes, and the more parameters to be adjusted in the learning process, the more opaque the box becomes.

Nevertheless, in addition to their board-game dominance, deep learning algorithms have found success in fields ranging from finance to advertising to medicine. Somewhat surprisingly, the next domino to fall may be weather forecasting. It’s a surprising challenge for machine learning to take on, in part because traditional human learning (augmented by computers, but just for number-crunching) already has a pretty good handle on things. As such, it’s an interesting case study in what happens when deep learning methods trespass on an established discipline, and what this new contest between human and machine could portend for the future of science.

Meteorology, unlike chess, has a lot of accepted theory behind it. Fundamental physical relationships like the equations of fluid dynamics or energy transfer between land and atmosphere set limits on how weather systems can form and evolve. The current state-of-the-art weather forecasts, in fact, amount to simulated solutions to those equations based on current measurements of temperature, wind, humidity, etc., and factoring in uncertainty due to measurement error. Starting from a position of complete naiveté, a neural network, no matter how deep, would have a hard time catching up with all the theoretical knowledge. So, researchers on the forefront of machine learning and climate science have recently started using a different approach: combining what we already know about meteorology with the ability of deep learning to uncover patterns we don’t know about.

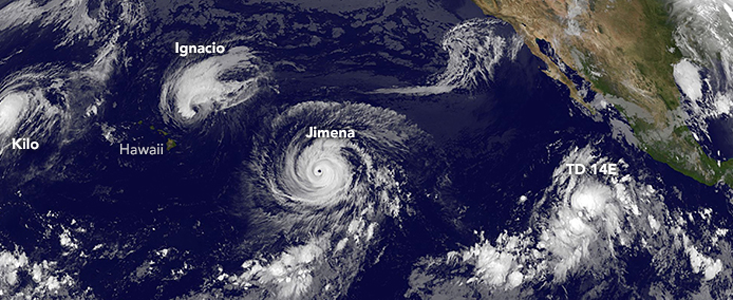

This September in Nature, Yoo-Geun Ham and Jeong-Hwan Kim from the Department of Oceanography at Chonnam National University in South Korea, and Jing-Jia Luo of the Institute for Climate and Application Research in China, announced the success of a new deep learning algorithm to predict the occurrence of El Niño events up to 18 months in advance. El Niño, which refers to the warm phase of an oscillation between warm and cool sea surface temperatures in the eastern Pacific Ocean, has posed a longstanding problem for intermediate-term global weather forecasts. During an El Niño year, normal weather patterns are thrown out of whack, with some parts of the world seeing wetter conditions and more severe storms while others experience prolonged droughts. The greatest impacts occur on the western coast of South America, where heavy rainfall can cause catastrophic flooding while changes in sea temperatures threaten the fishing industry the region depends on, and on the other side of the southern Pacific, which gets a triple whammy of drier conditions, stronger winds, and higher than average temperatures, all exacerbating the risk of bushfires like the ones currently burning throughout New South Wales, Australia.

The social costs of El Niño can be enormous; it’s been linked to water shortages, famine, the spread of infectious disease, and general civil unrest. (A dip in European crop yields during the 1789-1793 El Niño may even have helped kick off the French Revolution.) So the potential benefits of better anticipating and preparing for an El Niño are equally huge. The problem is that El Niño events only occur semi-regularly. Records going back to the late 1800s, when El Niño was first described as a global phenomenon, show a typical interval of two to seven years between events but, beyond that, no real pattern. Furthermore, they range in severity and type depending on where exactly the greatest sea temperature anomalies show up, and each type produces its own idiosyncratic mix of weather phenomena. Depending on where you live, you might not even have noticed that we just finished one; the El Niño of 2019 ended this fall and was of the mild variety.

We have as much chance as beating a chess computer as winning a bench-press contest against a forklift.

Before Ham and his co-authors, the best forecasters could predict an El Niño event at most a year in advance, but there was reason to believe there was room for improvement. For one thing, sea temperatures don’t change all that rapidly, and the fluctuations are not as chaotic as individual weather systems. There’s also some periodicity to the anomalies; the warming periods of an El Niño are typically followed by cooling periods called La Niña, like a giant warm wave sloshing back and forth across the Pacific. So, even though the exact causes and timings are unknown, the warning signs might be present far in advance.

They decided to use what’s called a convolutional neural network, the same network architecture used in machine vision problems like image recognition, to see if it could discern features in the sea temperature data that could predict an El Niño event. To train their model, though, they couldn’t just rely on historical data, because there isn’t nearly enough of it to go around. Monthly records of sea temperatures only go back to 1871, and some amount of that data would need to be reserved to validate the model’s predictions outside of the sample used for training. For techniques best suited to problems of big data, the El Niño data is disappointingly small.

Here is where newfangled data science met old-fashioned climate science.

Instead of historical data, they first trained their neural network on a batch of simulations. The simulations were produced by research groups around the world and collected as part of the Coupled Model Intercomparison Project (CMIP), a large-scale initiative to share climate models and compare petabytes’-worth of predictions for what might happen under different possible futures. Some of the scenarios represent alternative pasts, like climatological historical fiction, and the stories are detailed enough to include features like El Niño events. So, the simulations represent plausible data, bounded by the constraints of the known climate models. They might be a step down from real data, but they’re realistic enough to stand in for the genuine article. Using the substitutes, Ham et al. were able to train the neural network on a data set equivalent to 2,961 years of observations. Then they used the results of all that training as a starting point for learning from the actual historical data, which they separated into a training period from 1871 to 1973 and an out-of-sample validation period from 1984 to 2017.

The results were spectacular. The neural network performed better than the previous state-of-the-art forecasts, with a gap that got wider the earlier in time the forecasts were made. Even with a lead time of 18 months, the neural network was able to see El Niño events coming, make a good estimate of their magnitude, and even classify them according to whether the temperature spikes would occur in the central or eastern Pacific. It did especially well at forecasting El Niños in the boreal spring and summer months, a “predictability barrier” previous models have run into because of the complex interactions between El Niño and monsoon season in South Asia.

The technique of training a system to perform well at one task—in this case, making El Niño predictions in a set of simulated data—before applying that knowledge to another task is called “transfer learning.” Ham’s success at forecasting El Niño shows how this method can combine the best parts of climatology and deep learning. The simulations produced by the climate models provide ample data for the neural network to learn from, in effect training it to think like the models do, but those models are naturally subject to some systematic error. Fine-tuning the network with real historical data then smooths out the error and lets the neural net find features the scientists might not have thought to look for, with the end result being better forecasts.

As a final sense check to demonstrate their model had, in fact, internalized some of the lessons of climate theory, the modelers used the neural network to do a post-mortem analysis on the 1997 to 1998 El Niño, one of the most severe in recorded history. Feeding in sea temperatures for May, June, and July of 1996, they saw neurons lighting up in particular regions, indicating favorable conditions for an El Niño event were developing. Warmer seas in the tropical western Pacific beginning in 1996 built up potential like a loaded gun, and then colder conditions in the southwestern Indian Ocean fired it to the east, resulting in an extreme El Niño about one year later. Colder-than-average conditions in the subtropical Atlantic only exacerbated things. Unlike some mysteriously oracular black box, the model had identified features as significant that were all consistent with what climatologists know about the linkages between sea temperatures in different parts of the world and the ways the climate narratives can unfold. The network had just seen it coming earlier than anyone else, perhaps early enough for people in the affected regions to better prepare for the devastating impacts had the warning system been in place.

The hybrid approach demonstrated by the hunt for El Niño may well provide a new model for applying successful machine learning techniques to theory-heavy fields like climate science. These recent breakthroughs show that, instead of a turf war, the future of research can be a joint venture that combines the best parts of traditional and data science. In another paper in Nature this year, lead author Markus Reichstein of the Department of Biogeochemical Integration at the Max Planck Institute for Biogeochemistry in Germany argued for the importance of “contextual cues,” meaning relationships in the data predicted by known theory, as a way to augment deep learning approaches in Earth science. There’s no reason to expect it to stop there.

Meanwhile, machine learning may be giving climate science a boost at just the right time, thanks to the ongoing climate catastrophe we find ourselves facing. In particular, even though the weather systems El Niño affects are expected to become more extreme in the future, there is still no scientific consensus about whether climate change will increase the frequency or severity of El Niño events. The Intergovernmental Panel on Climate Change report of 2013 said simply that it expects El Niño to continue but with very little confidence about the ways it might change. El Niño events depend on complex feedback loops between warm and cold ocean water, the atmosphere, trade winds, etc. Disrupting a part of those cycles could shut the whole thing down, make the El Niño conditions more frequent or even permanent, or have no noticeable effect either way.

Figuring out how to plan for extreme weather in the future will be like finding the optimal strategy in a chess game where the pieces keep changing. It will take all the learning power of a supercomputer combined with all the experience of a grandmaster.

Aubrey Clayton is a mathematician living in Boston. He teaches logic and philosophy of probability at the Harvard Extension School.

Lead image: Production Perig / Shutterstock

Prefer to listen?