Shaun Patel has such a tranquil voice that it’s easy to see how he convinces patients to let him experiment in the depths of their brains. On the phone, in his office at Massachusetts General Hospital (he is also on faculty at Harvard Medical School), the neuroscientist spoke about gray matter almost as if he were guiding me in meditation. Or perhaps that was just the heady effect of him detailing a paper he had recently published in Brain, showing how, using implants in his patients, he could enhance learning by stimulating the caudate nucleus, which lies near the center of the brain.1 You have to time the electric pulse just right, he told me, based on the activity of certain neurons firing during an active learning phase of a game. A perfectly timed pulse could speed up how quickly his patients made the right associations. Using similar methods, he said, he has induced people to make more financially conservative bets. His patients don’t even realize what’s happening. Their behavior feels like their own.

This sort of power is a prelude to what Patel and his Harvard colleague Charles Lieber, a nanotechnology pioneer, call “precision electronic medicine.” Patel has been working with Lieber to design a new kind of brain-machine interface, one the brain doesn’t recognize as foreign. In Nature Biotechnology, they explained how their neural network-like mesh will offer new ways to treat neurodegenerative and neuropsychiatric illnesses, control state-of-the-art prosthetics, and augment human cognition. It read to me like a page out of the playbook of Elon Musk, who, with his company Neuralink, is betting that if humans merge with machines, we won’t have to worry about what AI might have in store for us.

In my conversation with Patel, we discussed Neuralink’s new brain probe, the language of the brain, and his vision of enhancing humans with machines. Patel isn’t driven in his pursuit of new means of brain control by visions of a dystopian future. “It’s just a fundamental curiosity,” Patel said. It began in a neuroscience class on abnormal psychology. “The class was about all these interesting disorders that make humans do unusual things that are hard to imagine people doing,” he said. “The description was always that we have neurons in our brain that fire action potentials, these instantaneous changes in voltage. They’re connected to other neurons, and through this, there’s some communication that happens that ultimately results in these behaviors. But nobody could explain with any level of detail the actual mechanism by which this could happen.” Then and there, Patel knew he had his mission.

Tell us about the mesh brain implants you’ve been working on.

These are interfaces reimagined from the ground up. Nerve tissue is insanely, astronomically dense. We have neurons, astrocytes and other glia, and different compartments within those cells. These interfaces are ultra-small, on the scale of brain cells. They’re also ultra-flexible. They can remain implanted without provoking unwanted immune reactions or side effects. This fundamentally changes the way we can think about applying neural electronics as a therapy for patients with brain disorders. If you can understand the language of the brain (its code, the spatial localization of neural firing patterns), you can use electrical stimulation to artificially input information into the brain to control thoughts and behavior.

Is understanding the neural code the key to harnessing the brain’s potential?

By understanding this code, we can unlock the power of merging the brain with machines. Tissue-like electronics are one of the first technologies with the potential to dramatically expand our ability to understand the brain’s code, because they exist in harmony with the brain itself: they can last and record from a given set of neurons for more than a year.

What might happen to the brain, and our understanding of it, once humans regularly interface with mesh electronics?

The really interesting thing that will emerge is this unique opportunity to think about how you can input new types of information into the brain. Whatever you might convey through a stable neural interface, the brain will learn to identify those patterns and give them meaning for that organism, just as we find meaning in light hitting our retinas and in the vibrations of air molecules that ultimately reach our cochlea. Eyes and ears are just types of sensors (stable interfaces) that we’re built with, but you might imagine new ones that integrate with the brain as it’s developing.

The brain really doesn’t care what the input is. There’s no fixed program that wires our neurons up precisely the way we need them in order to see, for example. Different neurons identify lines and corners; others encode color, movement, disparity, and other primitive visual features. These neurons send their axons to other parts of the brain, which then combine those primitive features and begin to reassemble them to give us a perception of the visual field that we’re looking at. It’s the breakdown and reconstruction of the world. The brain does this through a kind of trial and error, and through feedback, synaptic pruning, and other developmental programs.

What would new input, processed by a novel interface, introduce into our perceptual field, or mental space?

This is definitely the dreaming, speculative side of things. But what that introduces, or rather what that reduces, is the constraint that we have: there’s only so much bandwidth. A really interesting paper published recently2 showed that the information rate of the languages it studied, drawn from around the world, is roughly the same, which is really surprising and interesting. I think it was about 39 bits per second, using the apparatus of the human body to communicate. A neural interface, on the other hand, could leverage technology (cameras or the Internet or whatever) to ease that bandwidth limitation. You can imagine having a direct connection to the information processing center of a human.

What do you make of the neural interface Elon Musk is working on with his company, Neuralink?

It’s along the lines of the kinds of technologies that people have already been developing. Elon Musk is obviously somebody who has made a reputation for tackling hard problems, and I think this is a significantly hard problem from where we’re starting today. It’s definitely taken an impressive amount of work to get where they are. There are a lot of technical details in fabricating and manufacturing these things that I think are of significant value if you want to get to a commercial scale. That’s probably not something they talk much about publicly; it’s not as interesting to outsiders. I’m curious to see where they’ll be a year or two from now, but I don’t see it as a fundamental game-changer.

The challenge is that you can’t get very deep into the brain with their probe, and it also doesn’t maintain the brain’s three-dimensional structure. You can put mesh electronics anywhere in the brain. The mesh is three-dimensional in structure, so you’re not limited in that sense. That being said, I think you can get some amount of information with their approach. The question becomes: How much do you need? What do you need for the things you’re trying to do? The simple answer is, you want a very high number of channels in a very stable neural interface. That’s the extreme, the best possible interface you can imagine: the dream interface. I think the mesh has that.

Do you think a neural interface could enhance our cognitive powers enough to make us not worry that we’re going to be overtaken by AI?

I don’t know exactly how others think about this, but if it were me, my focus wouldn’t be on making our brain as fast or as good as an AI, but rather creating a link. Let’s say I’ve already developed the AI—maybe it’s a robot, or a voice detection algorithm. What I want to do is to merge the bandwidth, from that device, to my brain. It’s not that I need to download the world’s knowledge to my brain and have my brain process it to develop an algorithm internally. We already have Google to do that. What I’d really want to do is to be able to use the sensory input that I have.

My office is right on the water in Boston, and I’m looking at this boat. Maybe I wonder what kind of boat it is, or how much it costs. Wouldn’t it be incredible if my brain could communicate with a repository through a very high-bandwidth connection and extract this information in a way that lets me intuit the answers? It would be speaking the same language my brain understands. So it’s different from looking at your phone and going through a few different websites. That is how I imagine the merging of mind and machine.

Have you implanted the mesh interface in a human yet?

We’re actually working to set up the first-in-human studies right now. As you can imagine, once you move into humans, we have to do extra work validating the safety of the materials, figuring out how to sterilize the device, and all these other things. We’re getting very close to trying them out for the first time in human subjects.

Does your interface change the way we think about brain disorders?

Yes, and it’s exciting because a lot of disorders, particularly disorders of cognition like psychiatric illness and neurodegenerative disease, could now have a non-pharmacological therapy. Giving a brain disorder a label like depression, OCD, or PTSD is a poor way to describe it, because the label doesn’t capture the richness of the symptoms. It’s just one word that attempts to categorize a complex disease.

But imagine you have this knowledge and this ability to assess all of these different cognitive functions (choosing, learning, forgetting) in terms of their neural code. If you could do that, you could describe a complex disease as a function of all of these cognitive symptoms, right? With depression, you might see some changes in your decision-making. You might see changes in your volitional actions: how much you move, explore, or exploit your environment. Slowly, we’re beginning to imagine how we can quantify these things using data rather than the DSM (the Diagnostic and Statistical Manual of Mental Disorders), which is essentially a checklist of symptoms.

What is the neural code, exactly?

It’s what the brain uses to represent information. Take a neuron. You can think of a neuron in many different ways. It’s a very complex cell, but a common way to study it is at the level of the action potential. You can think of it like a transistor in a silicon circuit. A transistor is a binary representation, zero or one, and a neuron is similar. It has more complexity and nuance, but it’s either firing or not firing. You have roughly 86 billion neurons, and at any given moment each of them is either firing or not firing. In the next moment, they’re again either firing or not firing, in a spatial and temporal pattern that reflects where each neuron sits in the brain and what it’s connected to.

The pattern of this code, this firing, is a language. It has a grammar and a vocabulary. And it’s through this code that information about the world around us is represented. This information comes from our sensory systems: our eyes and ears, our sense of touch, smell, and taste. But it also comes from the brain itself, from our memories and our experiences. All of this is manifested in the way our brain becomes connected, and it gives us our unique personality. It’s through the particular way the neurons in my brain are organized, and the way they fire (the spatiotemporal pattern of action potentials), that I’m able to represent the information around me and do all of these complex cognitive tasks.
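To make the “firing or not firing” picture concrete, here is a toy sketch that is not drawn from Patel’s work. Spike times for a few hypothetical neurons are binned into a binary raster, so each time bin yields one on/off pattern across the population, a crude stand-in for the spatiotemporal code described above. The neuron names, spike times, and 5-millisecond bin width are made-up assumptions, and real analyses of recorded neural data are far more involved.

```python
from typing import Dict, List, Sequence, Tuple


def spike_raster(spike_times_ms: Dict[str, List[int]],
                 duration_ms: int, bin_ms: int) -> Dict[str, List[int]]:
    """Bin each neuron's spike times into a 0/1 row: 1 if it fired at least once in that bin."""
    n_bins = duration_ms // bin_ms
    raster: Dict[str, List[int]] = {}
    for neuron, times in spike_times_ms.items():
        row = [0] * n_bins
        for t in times:
            idx = t // bin_ms
            if 0 <= idx < n_bins:  # ignore spikes outside the recording window
                row[idx] = 1  # several spikes in one bin still count as just "firing"
        raster[neuron] = row
    return raster


def population_pattern(raster: Dict[str, List[int]],
                       order: Sequence[str], bin_index: int) -> Tuple[int, ...]:
    """On/off state of every neuron in one time bin: one 'snapshot' of the spatiotemporal pattern."""
    return tuple(raster[n][bin_index] for n in order)


if __name__ == "__main__":
    # Hypothetical spike times (ms) for three made-up neurons over a 20 ms window.
    spikes = {"n1": [2, 14], "n2": [7], "n3": [3, 8, 19]}
    raster = spike_raster(spikes, duration_ms=20, bin_ms=5)
    order = ["n1", "n2", "n3"]
    for b in range(len(raster["n1"])):
        print(b, population_pattern(raster, order, b))
```

Run as a script, it prints one tuple per 5 ms bin: (1, 0, 1), (0, 1, 1), (1, 0, 0), and (0, 0, 1). Collapsing several spikes in a bin to a single 1 mirrors the transistor analogy, but it deliberately throws away spike counts and precise timing, which real decoding work often keeps.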

How much of the neural code do we understand?

We understand quite a lot. There have been some incredible examples of decoding human speech patterns based on neural activity. It’s reading the activity of your brain to vocalize your thoughts. There are examples of enhancing memory. There are examples of implanting memory. It’s really impressive.

Could tissue-like electronics help heal brain damage?

Yes. Because they resemble the brain so closely, some interesting properties have emerged that hadn’t been considered with neural interfaces. The human brain has two areas that are neurogenic; that is, there are two specific areas of the brain that can produce new brain cells. In order for those new cells to get to other parts of the brain, they travel along a scaffolding created by other neurons, specifically along a compartment of the neuron called the axon. Axons reach out and touch other neurons; that’s how neurons are connected to one another. That’s how we develop in utero, and how our brain cells get to all the right places: they travel on these scaffolds of other neurons.

What’s been observed is that neurons travel along the electronic mesh itself. That is incredibly interesting because it opens up a new avenue for thinking about therapeutic applications. If somebody has a stroke, a particular part of the brain is deprived of blood flow, and therefore of oxygen and nutrients, and so that region of the brain dies. If you could use this electronic mesh as a scaffold connecting the two neurogenic areas to the region damaged by the stroke, tapping into their reservoir of new neurons, you would have a new mechanism to reorganize the cells of the brain in a way that could be advantageous for therapy.

What do you think of deep brain stimulation?

Deep brain stimulation delivers electrical stimulation, much as a pacemaker does to the heart, in order to attenuate unwanted symptoms. It’s been used most successfully in movement disorders like Parkinson’s disease. But to date, that approach has been limited by the technology we use, specifically the electrode that interfaces with the brain. Most electrodes that exist today are large and rigid, and there’s a fundamental mismatch between the brain’s composition and this foreign metallic object. It also works in an open-loop fashion; that is, there’s no feedback. If you’re sleeping, the stimulation is still delivered at the set amount. It just doesn’t change. Your brain, by contrast, is highly closed-loop. When you reach for a glass of water, there are proprioceptive fibers in your muscles that feed back information about where your hand is in space. Your brain is continuously readjusting the movements to make sure that your hand ultimately reaches that target. So if you understand enough about the neural code, and how that code represents the symptoms you’re targeting, you can imagine there might be more intelligent ways to turn the stimulation on and off, or to adjust its profile, to better enhance the therapy.
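The contrast between open-loop and closed-loop stimulation can be sketched in a few lines. This is a toy illustration under loud assumptions, not a description of any clinical device or of Patel’s system: the “biomarker” is a hypothetical symptom signal, and the threshold, gain, and amplitude limits are arbitrary units chosen only for the example. The open-loop function delivers a fixed setting no matter what; the closed-loop one uses a simple proportional feedback rule, stimulating only as much as the measured signal exceeds a threshold.

```python
from typing import List


def open_loop(biomarker: List[float], amplitude: float = 2.0) -> List[float]:
    """Fixed stimulation at every time step, regardless of the measured signal."""
    return [amplitude for _ in biomarker]


def closed_loop(biomarker: List[float], threshold: float = 1.0,
                gain: float = 1.5, max_amplitude: float = 3.0) -> List[float]:
    """Proportional feedback: stimulate in proportion to how far the signal exceeds the threshold."""
    out: List[float] = []
    for x in biomarker:
        error = x - threshold  # how far the (hypothetical) symptom signal is above target
        amplitude = gain * error
        # Clamp to [0, max_amplitude]: no negative stimulation, plus a hard ceiling.
        out.append(min(max(amplitude, 0.0), max_amplitude))
    return out


if __name__ == "__main__":
    # Hypothetical signal in arbitrary units: quiet at first (say, during sleep), then rising symptoms.
    signal = [0.5, 1.0, 1.8, 3.5]
    print("open loop:  ", open_loop(signal))
    print("closed loop:", closed_loop(signal))
```

During the quiet stretch the closed-loop controller delivers nothing while the open-loop one keeps stimulating at its set level, which is the sleeping-patient problem described above; once the signal climbs, the closed-loop output rises and then hits its ceiling. A real closed-loop system would need a validated neural biomarker and much more careful control design; a proportional rule is simply the most basic feedback law.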

Brian Gallagher is the editor of Facts So Romantic, the Nautilus blog. Follow him on Twitter @BSGallagher.

References

1. Bick, S.K., et al. Caudate stimulation enhances learning. Brain 142, 2930-2937 (2019).

2. Coupé, C., Oh, Y.M., Dediu, D., & Pellegrino, F. Different languages, similar encoding efficiency: Comparable information rates across the human communicative niche. Science Advances 5 (2019).

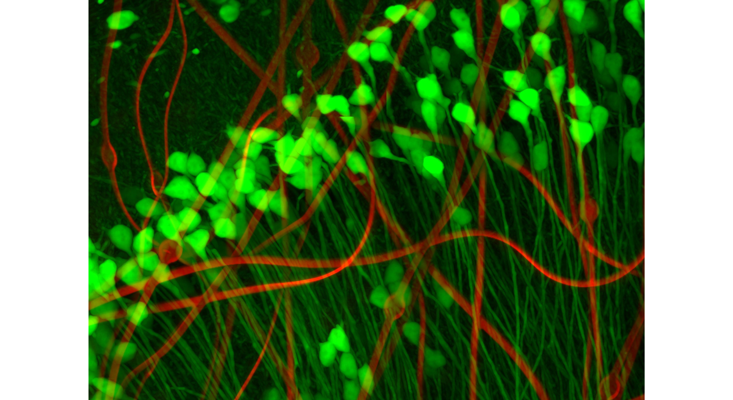

Lead image: whiteMocca / shutterstock