People often ask me whether human-level artificial intelligence will eventually become conscious. My response is: Do you want it to be conscious? I think it is largely up to us whether our machines will wake up.

That may sound presumptuous. The mechanisms of consciousness—the reasons we have a vivid and direct experience of the world and of the self—are an unsolved mystery in neuroscience, and some people think they always will be; it seems impossible to explain subjective experience using the objective methods of science. But in the 25 or so years that we’ve taken consciousness seriously as a target of scientific scrutiny, we have made significant progress. We have discovered neural activity that correlates with consciousness, and we have a better idea of what behavioral tasks require conscious awareness. Our brains perform many high-level cognitive tasks subconsciously.

Consciousness, we can tentatively conclude, is not a necessary byproduct of our cognition. The same is presumably true of AIs. In many science-fiction stories, machines develop an inner mental life automatically, simply by virtue of their sophistication, but it is likelier that consciousness will have to be expressly designed into them.

And we have solid scientific and engineering reasons to try to do that. Our very ignorance about consciousness is one. The engineers of the 18th and 19th centuries did not wait until physicists had sorted out the laws of thermodynamics before they built steam engines. It worked the other way round: Inventions drove theory. So it is today. Debates on consciousness are often too philosophical and spin around in circles without producing tangible results. The small community of us who work on artificial consciousness aims to learn by doing.

Furthermore, consciousness must have some important function for us, or else evolution wouldn’t have endowed us with it. The same function would be of use to AIs. Here, too, science fiction might have misled us. For the AIs in books and TV shows, consciousness is a curse. They exhibit unpredictable, intentional behaviors, and things don’t turn out well for the humans. But in the real world, dystopian scenarios seem unlikely. Whatever risks AIs may pose do not depend on their being conscious. To the contrary, conscious machines could help us manage the impact of AI technology. I would much rather share the world with them than with thoughtless automatons.

When AlphaGo was playing against the human Go champion, Lee Sedol, many experts wondered why AlphaGo played the way it did. They wanted some explanation, some understanding of AlphaGo’s motives and rationales. Such situations are common for modern AIs, because their decisions are not preprogrammed by humans, but are emergent properties of the learning algorithms and the data set they are trained on. Their inscrutability has created concerns about unfair and arbitrary decisions. Already there have been cases of discrimination by algorithms; for instance, a ProPublica investigation last year found that an algorithm used by judges and parole officers in Florida flagged black defendants as more prone to recidivism than they actually were, and white defendants as less prone than they actually were.

Beginning next year, the European Union will give its residents a legal “right to explanation.” People will be able to demand an accounting of why an AI system made the decision it did. This new requirement is technologically demanding. At the moment, given the complexity of contemporary neural networks, we have trouble discerning how AIs produce decisions, much less translating the process into a language humans can make sense of.

If we can’t figure out why AIs do what they do, why don’t we ask them? We can endow them with metacognition—an introspective ability to report their internal mental states. Such an ability is one of the main functions of consciousness. It is what neuroscientists look for when they test whether humans or animals have conscious awareness. For instance, a basic form of metacognition, confidence, scales with the clarity of conscious experience. When our brain processes information without our noticing, we feel uncertain about that information, whereas when we are conscious of a stimulus, the experience is accompanied by high confidence: “I definitely saw red!”

Any pocket calculator programmed with statistical formulas can provide an estimate of confidence, but no machine yet has our full range of metacognitive ability. Some philosophers and neuroscientists have sought to develop the idea that metacognition is the essence of consciousness. So-called higher-order theories of consciousness posit that conscious experience depends on secondary representations of the direct representation of sensory states. When we know something, we know that we know it. Conversely, when we lack this self-awareness, we are effectively unconscious; we are on autopilot, taking in sensory input and acting on it, but not registering it.
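To make the pocket-calculator point concrete, here is a minimal Python sketch of a decision that comes with a confidence estimate. It is an illustration only, not the method used in our project: the softmax formula is just one common choice of "statistical formula," and the names `softmax` and `decide_with_confidence`, the color labels, and the scores are all hypothetical.

```python
import math

def softmax(scores):
    """Convert raw evidence scores into probabilities that sum to 1."""
    shift = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def decide_with_confidence(scores, labels):
    """Return the chosen label and the probability assigned to it.

    The probability serves as a crude confidence report (an assumption
    of this sketch, not a model of conscious confidence).
    """
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]

labels = ["red", "green", "blue"]  # hypothetical stimulus categories

# Clear stimulus: one option has much stronger evidence than the others.
clear_label, clear_conf = decide_with_confidence([4.0, 0.5, 0.2], labels)

# Faint stimulus: the evidence barely distinguishes the options.
faint_label, faint_conf = decide_with_confidence([0.6, 0.5, 0.4], labels)

print(clear_label, round(clear_conf, 2))
print(faint_label, round(faint_conf, 2))
```

Both calls pick "red," but only the first does so with high confidence. A report like this is a single number attached to a decision, which is far short of the full range of metacognitive ability discussed here.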

These theories have the virtue of giving us some direction for building conscious AI. My colleagues and I are trying to implement metacognition in neural networks so that they can communicate their internal states. We call this project “machine phenomenology” by analogy with phenomenology in philosophy, which studies the structures of consciousness through systematic reflection on conscious experience. To avoid the additional difficulty of teaching AIs to express themselves in a human language, our project currently focuses on training them to develop their own language to share their introspective analyses with one another. These analyses consist of instructions for how an AI has performed a task; this is a step beyond what machines normally communicate, namely the outcomes of tasks. We do not specify precisely how the machine encodes these instructions; the neural network itself develops a strategy through a training process that rewards success in conveying the instructions to another machine. We hope to extend our approach to establish human-AI communications, so that we can eventually demand explanations from AIs.

Besides giving us some (imperfect) degree of self-understanding, consciousness helps us achieve what neuroscientist Endel Tulving has called “mental time travel.” We are conscious when predicting the consequences of our actions or planning for the future. I can imagine what it would feel like if I waved my hand in front of my face even without actually performing the movement. I can also think about going to the kitchen to make coffee without actually standing up from the couch in the living room.

In fact, even our sensation of the present moment is a construct of the conscious mind. We see evidence for this in various experiments and case studies. Patients with agnosia who have damage to object-recognition parts of the visual cortex can’t name an object they see, but can grab it. If given an envelope, they know to orient their hand to insert it through a mail slot. But patients cannot perform the reaching task if experimenters introduce a time delay between showing the object and cueing the test subject to reach for it. Evidently, consciousness is related not to sophisticated information processing per se; as long as a stimulus immediately triggers an action, we don’t need consciousness. It comes into play when we need to maintain sensory information over a few seconds.

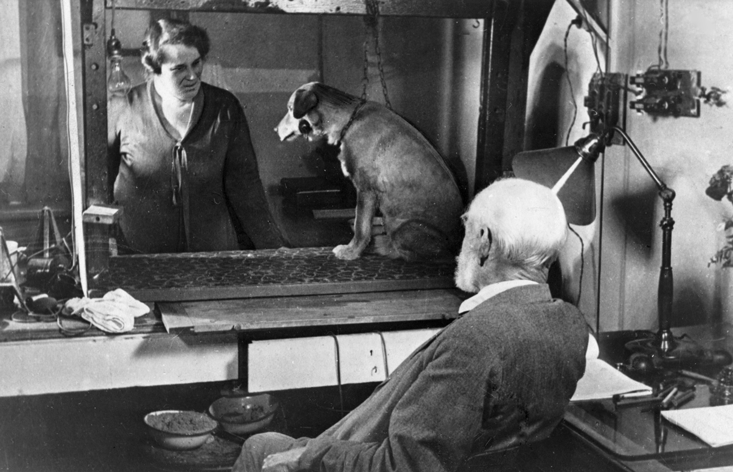

The importance of consciousness in bridging a temporal gap is also indicated by a special kind of psychological conditioning experiment. In classical conditioning, made famous by Ivan Pavlov and his dogs, the experimenter pairs a stimulus, such as an air puff to the eyelid or an electric shock to a finger, with an unrelated stimulus, such as a pure tone. Test subjects learn the paired association automatically, without conscious effort. On hearing the tone, they involuntarily recoil in anticipation of the puff or shock, and when asked by the experimenter why they did that, they can offer no explanation. But this subconscious learning works only as long as the two stimuli overlap with each other in time. When the experimenter delays the second stimulus, participants learn the association only when they are consciously aware of the relationship, that is, when they are able to tell the experimenter that a tone means a puff is coming. Awareness seems to be necessary for participants to retain the memory of the stimulus even after it has stopped.

These examples suggest that a function of consciousness is to broaden our temporal window on the world—to give the present moment an extended duration. Our field of conscious awareness maintains sensory information in a flexible, usable form over a period of time after the stimulus is no longer present. The brain keeps generating the sensory representation when there is no longer direct sensory input. The temporal element of consciousness can be tested empirically. Francis Crick and Christof Koch proposed that our brain uses only a fraction of our visual input for planning future actions. Only this input should be correlated with consciousness if planning is its key function.

A common thread across these examples is counterfactual information generation. It’s the ability to generate sensory representations of things that are not directly in front of us. We call it “counterfactual” because it involves memory of the past or predictions for unexecuted future actions, as opposed to what is happening in the external world. And we call it “generation” because it is not merely the processing of information, but an active process of hypothesis creation and testing. In the brain, sensory input is compressed to more abstract representations step by step as it flows from low-level brain regions to high-level ones, a one-way or “feedforward” process. But neurophysiological research suggests this feedforward sweep, however sophisticated, is not correlated with conscious experience. For that, you need feedback from the high-level to the low-level regions.

Counterfactual information generation allows a conscious agent to detach itself from the environment and perform non-reflexive behavior, such as waiting for three seconds before acting. To generate counterfactual information, we need to have an internal model that has learned the statistical regularities of the world. Such models can be used for many purposes, such as reasoning, motor control, and mental simulation.
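As a rough illustration of what an internal model buys an agent, the Python sketch below learns transition statistics from a few experiences and then "imagines" the outcome of a plan without executing it. It is a toy under stated assumptions, not a description of any real system: the `WorldModel` class, its method names, and the couch-to-coffee states and actions are hypothetical, and a simple frequency table stands in for whatever a brain or a neural network would actually learn.

```python
from collections import Counter, defaultdict

class WorldModel:
    """Toy internal model: counts which outcome followed each (state, action)."""

    def __init__(self):
        self.counts = defaultdict(Counter)

    def learn(self, state, action, next_state):
        """Record one real experience."""
        self.counts[(state, action)][next_state] += 1

    def predict(self, state, action):
        """Return the most frequently observed outcome, or None if never seen."""
        outcomes = self.counts.get((state, action))
        if not outcomes:
            return None
        return outcomes.most_common(1)[0][0]

    def imagine(self, state, plan):
        """Simulate a sequence of actions internally, without acting."""
        trajectory = [state]
        for action in plan:
            state = self.predict(state, action)
            if state is None:  # the model has no basis for a prediction
                break
            trajectory.append(state)
        return trajectory

model = WorldModel()
# Hypothetical past experiences of making coffee.
model.learn("couch", "stand", "standing")
model.learn("standing", "walk", "kitchen")
model.learn("kitchen", "brew", "coffee")

# Mental simulation: the agent never leaves the couch.
imagined = model.imagine("couch", ["stand", "walk", "brew"])
unknown = model.imagine("couch", ["fly"])
print(imagined)
print(unknown)
```

The first call traces the whole route to coffee from the starting state; the second stops immediately because the model has never seen that action, which is the kind of gap a curious agent might want to fill.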

Our AIs already have sophisticated training models, but they rely on our giving them data to learn from. With counterfactual information generation, AIs would be able to generate their own data—to imagine possible futures they come up with on their own. That would enable them to adapt flexibly to new situations they haven’t encountered before. It would also furnish AIs with curiosity. When they are not sure what would happen in a future they imagine, they would try to figure it out.
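One simple way to picture curiosity of this kind is an agent that prefers whichever action it knows least about. The Python sketch below uses visit counts as a stand-in for uncertainty; this is a swapped-in textbook-style technique chosen for brevity, not the approach my team uses, and the `CuriousAgent` class, the four-position corridor, and the `step` function are all hypothetical.

```python
from collections import defaultdict

class CuriousAgent:
    """Toy agent that picks the action whose outcome it has seen least often."""

    def __init__(self, actions):
        self.actions = list(actions)
        self.model = {}                 # (state, action) -> last observed outcome
        self.visits = defaultdict(int)  # how often each (state, action) was tried

    def uncertainty(self, state, action):
        """Untried actions score 1.0; the score shrinks with each visit."""
        return 1.0 / (1 + self.visits[(state, action)])

    def choose(self, state):
        """Pick the most uncertain action (ties go to the earlier action)."""
        return max(self.actions, key=lambda a: self.uncertainty(state, a))

    def observe(self, state, action, next_state):
        """Update the internal model with what actually happened."""
        self.model[(state, action)] = next_state
        self.visits[(state, action)] += 1

def step(position, action, size=4):
    """Hypothetical corridor world with positions 0..size-1 and walls at both ends."""
    if action == "right":
        return min(position + 1, size - 1)
    return max(position - 1, 0)

agent = CuriousAgent(["right", "left"])
position = 0
visited = {position}
for _ in range(8):
    action = agent.choose(position)
    new_position = step(position, action)
    agent.observe(position, action, new_position)
    position = new_position
    visited.add(position)

print(sorted(visited), len(agent.model))
```

No goal is ever specified, yet the agent ends up trying every action in every position, simply because untried options look more uncertain than familiar ones.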

My team has been working to implement this capability. Already, though, there have been moments when we felt that AI agents we created showed unexpected behaviors. In one experiment, we simulated agents that were capable of driving a truck through a landscape. If we wanted these agents to climb a hill, we normally had to set that as a goal, and the agents would find the best path to take. But agents endowed with curiosity identified the hill as a problem and figured out how to climb it even without being instructed to do so. We still need to do some more work to convince ourselves that something novel is going on.

If we consider introspection and imagination as two of the ingredients of consciousness, perhaps even the main ones, it is inevitable that we eventually conjure up a conscious AI, because those functions are so clearly useful to any machine. We want our machines to explain how and why they do what they do. Building those machines will exercise our own imagination. It will be the ultimate test of the counterfactual power of consciousness.

Ryota Kanai is a neuroscientist and AI researcher. He is the founder and CEO of Araya, a Tokyo-based startup aiming to understand the computational basis of consciousness and to create conscious AI. @Kanair

Lead image originally from Dano / Flickr