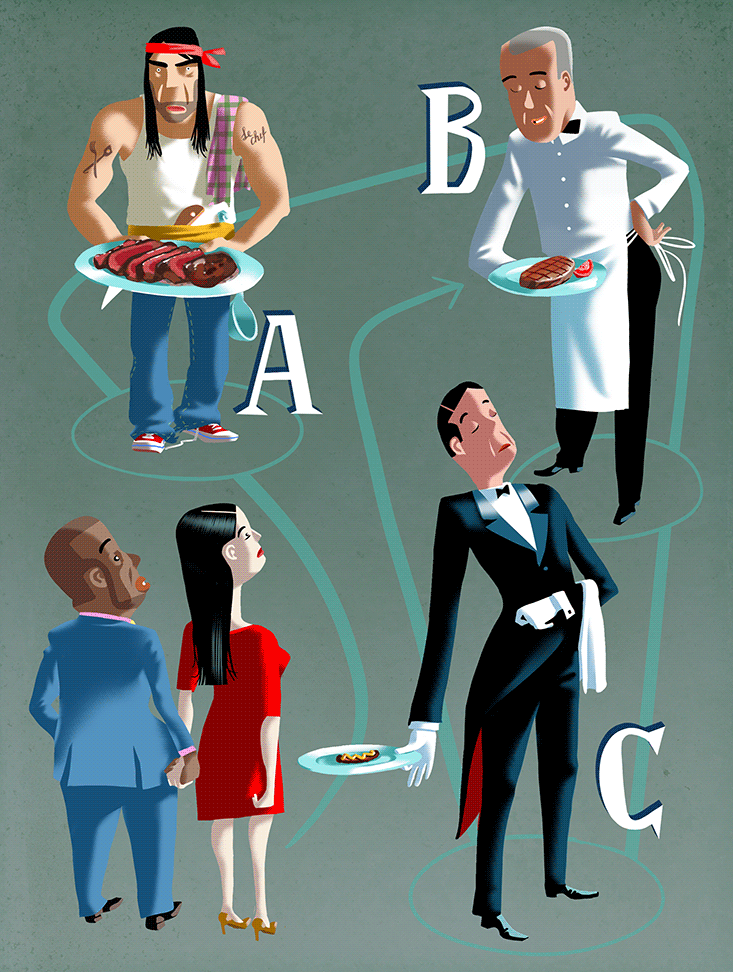

Imagine that (for some reason involving cultural tradition, family pressure, or a shotgun) you suddenly have to get married. Fortunately, there are two candidates. One is charming and a lion in bed but an idiot about money. The other has a reliable income and fantastic financial sense but is, on the other fronts, kind of meh. Which would you choose?

Sound like six of one, half a dozen of the other? Many would say so. But that can change when a third person is added to the mix. Suppose candidate number three has a meager income and isn’t as financially astute as choice number two. For many people, what was once a hard choice becomes easy: They’ll pick the better moneybags, forgetting about the candidate with sex appeal. On the other hand, if the third wheel is a schlumpier version of attractive number one, then it’s the sexier choice that wins in a landslide. This is known as the “decoy effect”: whoever gets an inferior competitor becomes more highly valued.

The decoy effect is just one example of people being swayed by what mainstream economists have traditionally considered irrelevant noise. After all, the profession has, for a century or so, taught that the value you place on a thing arises from its intrinsic properties combined with your needs and desires. Only recently has economics made its peace with human psychology. The result is the booming field of behavioral economics, pioneered by Daniel Kahneman, a psychologist at Princeton University, and his longtime research partner, the late Amos Tversky, who was at Stanford University.


It has created a large and growing list of ways that humans diverge from economic rationality. Researchers have found that all sorts of logically inconsequential circumstances—rain, sexual arousal (induced and assessed by experimenters with Saran-wrapped laptops), or just the number “67” popping up in conversation—can alter the value we assign to things. For example, with “priming effects,” irrelevant or unconsciously processed information prompts people to assign value by association (seeing classrooms and lockers makes people slightly more likely to support school funding). With “framing effects,” the way a choice is presented affects people’s evaluation: Kahneman and Tversky famously found that people prefer a disease-fighting policy that saves 400 out of 600 people to a policy that lets 200 people die, though logically the two are the same. While mainstream economists are still wrestling with these ideas, outside of academe there is little debate: The behaviorists have won.

Yet for all their revolutionary impact, even as the behaviorists have overturned the notion that our information processing is economically rational, they still suggest that it should be. When they describe human decision-making processes that don’t conform to economic theory, they speak of “mistakes,” or what Kahneman often calls “systematic errors.” Only by accepting that economic models of rationality lead to “correct” decisions can you say that human thought processes lead to “wrong” ones.

But what if the economists, both old-school and behavioral, are wrong? What if our illogical and economically erroneous thinking processes often lead to the best possible outcome? Perhaps our departures from economic orthodoxy are a feature, not a bug. If so, we’d need to throw out the assumption that our thinking is riddled with mistakes. The practice of sly manipulation, based on the idea that the affected party doesn’t or can’t know what’s going on, would need to be replaced with a rather different, and better, goal: self-knowledge.

Nowadays, the fast-food company trying to get you to eat fries and the Health Department trying to get you not to both use techniques drawn from behavioral economics, framing and priming you to make certain choices. Welcome to the new era of “choice architecture,” “behavioral marketing,” and “nudge.”

A simplified version of behavioral economics is guiding a new approach to advertising and marketing. That version holds that people are inconsistent, illogical, easily influenced, and seldom aware of why they choose what they choose. “It’s all about leveraging the unconscious factors that drive 95 percent of consumer decision-making, and the best way to do that is through behavioral economics,” wrote Michele Fabrizi, president of the advertising agency MARC USA, in a recent issue of Advertising Age. Behavioral approaches are also infusing management. According to John Balz, Chief Behavioral Officer of a software firm called Opower, as many as 20 percent of Fortune 500 companies now have someone responsible for bringing behavioral science perspectives to business decisions and operations.

Governments are also jumping on the behavioral science bandwagon. In fact, the massive interest in behavioral economics shown by private enterprise has become an argument in favor of the “choice architecture” now used by both governments and do-gooder organizations. With companies working so hard to get you to eat potato chips, drink beer, and spend all your money right now, goes the argument, it’s the government’s duty to use similar techniques to get you to make better choices. This approach, dubbed “Nudge” by economist Richard Thaler and law professor Cass Sunstein in their 2008 book of that title, marks an even bigger change for politics than for business. Citizens of democracies are used to government telling them what they should do, and offering carrots, or sticks, to get compliance. They aren’t so used to government trying to change people’s behavior without being noticed.


For nudge policies to work, experts have to identify an undesirable outcome, then figure out how to use our own decision-making “errors” to “nudge” us toward a better choice. For example, Sunstein writes, “Many people think about their future selves in the same way they think about strangers, but if you show people pictures of what they’ll look like in twenty years, they get a lot more interested in saving for retirement.” In other words, helping people who make mistakes requires experts who know “better.”

Today, nudge is truly a global phenomenon. According to Mark Whitehead, a geographer at Aberystwyth University in Wales, and his colleagues, nudge policies are in place in 136 of the world’s 196 nations. And in 51 of those nations, including China, the United States, Australia, and countries in West Africa, East Africa, and Western Europe, the approach is being directed by a nationwide, centralized authority. From 2009 to 2012, Sunstein himself was head of the White House Office of Information and Regulatory Affairs, which oversees all U.S. government regulations. The United Kingdom has a “nudge squad” (Thaler is a consultant), as does the U.S. government.

This is how a debate among psychologists and economists has come to have cultural significance. A government that assumes people make mistakes needs experts to identify the “correct” decisions, and experts do not always get those right. That’s an odd position for a democratic government to be in, argues Mark D. White, a philosopher at the College of Staten Island who is skeptical of nudge tactics. “Yes, business does it,” he says, “but here is the difference: Everybody knows business tries to manipulate you. We don’t expect the government to manipulate us. That’s not the role that most of us assign to our government.” People who lost their retirement savings in the financial crisis of 2008 might be forgiven for wishing they had not been nudged to invest so much.

What’s more, the premise of many nudge tactics—that people’s thinking processes are full of mistakes—may be wrong.

At the heart of the notion that human decision-making is error-prone is the conviction that aspects of choice should divide neatly into a relevant signal (What does it cost? How much does it matter to me?) and irrelevant noise (Does the way it was presented sound hopeful or sad? Is it sunny outside?). But defining what information is relevant isn’t as obvious as it may sound.

What is “noise” in a one-off choice (pick A or B) can be relevant information when an organism is repeatedly chasing resources in changeable and uncertain conditions, notes Donald Hantula, a psychology professor who directs the Decision Making Laboratory at Temple University. If you read research that emphasizes “mistakes,” he says, “one of the conclusions you would come to is that human beings are just stupid. As a lifelong member of the species, I have a little bit of a problem with that.”

Susceptibility to the “decoy effect” is just one of a number of “irrational” decision strategies that have stood the test of evolutionary time, which suggests these strategies have advantages. In fact, studies with all sorts of animals have found they make the same economic “mistakes” as humans. Even the slime mold—a collective of unicellular organisms that join together to form a slug-like whole—is susceptible to the decoy effect. Tanya Latty and Madeleine Beekman, biologists at the University of Sydney, have shown that when faced with a choice between a rich oatmeal-and-agar mix under bright lights (which interferes with their cell biology) and a less nutritious mix in comfortable darkness, slime molds showed no strong preference. They act a bit like diners comparing a loud, unpleasant restaurant with great food to a nice, quiet place with a blah menu—could go either way. (In fact, slime molds being what they are, some headed in both directions at once.) But when the researchers added a worse dark option (the equivalent of a quiet joint with really bad food), the slime molds dropped the bright option and chose the better of the two dark ones. “When you start to see the same fundamental problems solved by all kind of species it begins to suggest there’s some sort of common mechanism,” says Hantula.

Laurie Santos, a professor of psychology and cognitive science at Yale University, has found some mechanisms that might be shared primarily by primates. The typical person prefers to start with a $10 bill and try to win a second, rather than start with two bills and risk losing one. Likewise, capuchins preferred to start with one grape and try for another, rather than start with two grapes and risk losing one. They were, in behavioral jargon, “loss averse.” Santos suspects this trait might have evolved because monkeys, like most primates, need to keep track of social relationships.

Monkeys track social relationships by grooming one another. If you’re a monkey exchanging this favor with others, and another monkey does more for you than you did for him, it’s a small gain for you, and not a problem. “But if somebody failed to groom you as much as you did them yesterday,” Santos says, “that’s a big problem and you should have a mechanism to get upset about that.” In primates, loss aversion may be a helpful rule-of-thumb rooted in monitoring of social tit-for-tat. “It’s possible that what we’re seeing in the context of these classic heuristics are actually strategies built for something else,” says Santos. “That doesn’t mean they’re bad or errors.” As Gerd Gigerenzer, Director at the Max Planck Institute for Human Development, in Germany, puts it, a strategy may be “ecologically rational”—the most successful method for solving real problems overall—even if it violates assumptions about rational decision-making.


Thinking about humans as social animals casts a different light on some famous framing effects often cited as examples of human information-processing “error”: Why can’t we recognize that saving 400 people out of 600 is the same as letting 200 people die? One good reason might be that even though the cases are logically identical, they aren’t socially identical—one choice is framed in a way that emphasizes death, and the other, life. In many real-life situations, that difference is relevant information. Even as we share some supposedly error-prone methods of decision-making with very distant relatives like the slime mold, other “mistakes” may reflect the particular needs of primates. In fact, some of our mistakes may be peculiar to our species alone.

Santos, who studies primates to understand the evolution of the human mind, notes that other primates, even our close cousins the chimpanzees, are not as attuned to social cues, and as a consequence they act more like rational agents. For example, in experiments with a simple puzzle (open this box, take out a prize), Victoria Horner and Andrew Whiten showed both human children and young chimpanzees how to solve the puzzle. In one set of trials, the box’s transparent plastic sides were covered by black tape. In the other, the tape was gone, making it obvious that several of the experimenters’ steps were unnecessary. The chimps reacted to this by skipping the useless steps. But 80 percent of the human children stuck with the more complicated procedure. They were “over-imitating,” sticking more closely to what a person showed them than necessary. Humans, especially children, often make the “mistake” of over-imitating when they learn new skills. While over-imitation is not always the most efficient or rational way to solve a problem, it may be a way to pass on crucial information (including custom, etiquette, tradition, and ritual) that we need to be human.

Suppose, though, that there were a seat at the table for researchers who accept that people depart from economic rationality but don’t call that departure a form of error. Would their model make a practical difference?

There are signs that it could. Here is why: Policymakers who assume people are constantly making mistakes must strive to correct “errors” by subtly rearranging choices, but policymakers who don’t assume people make mistakes can instead orient their efforts to bringing people’s attention to their own mental processes. Once aware of these mental processes, people can then do what they will. It’s a subtle but important difference. The first approach nudges people toward a pre-chosen goal; the second informs people about the workings of their own minds, so they can better achieve whatever goals they desire.

Consider this nudge-like strategy, designed by Hantula. About a year ago, he and some colleagues devised a procedural change in the cafeteria of a hospital. “If you ask people at 7:30 in the morning if they want a healthy lunch or something less healthy, they’ll say ‘I choose a healthy lunch’,” says Hantula. “The problem is, at about noon, when they walk up to the cafeteria and the guy at the counter is flipping burgers, they say, ‘I’ll take the burger!’ ” Hantula and his colleagues gave workers a new option. “We were able to get the software that ran their cafeteria and their billing system reprogrammed so that people could pre-order and pay for their lunch at 7:30 in the morning.” By moving the workers’ “choice point” from lunchtime to an hour when they preferred a healthier lunch, the researchers got a significant percentage of them to eat healthier food.

This might sound like a typical nudge. But it is subtly different from those that seek to get people to exercise, invest in retirement, or get medical check-ups without their noticing. In Hantula’s study, the intervention isn’t covert. It calls attention to the decision it’s attempting to influence. “If you approach it from the perspective of ‘oh, these people are stupid, so let’s arrange things so we use their stupidity against them,’ that’s one thing,” Hantula says. “This is different. It’s not sneaky at all.” If your goal is to eat healthier, here’s a way you can reach that goal.

To assume that people make mistakes all the time is to assume that they needn’t be aware that a government or business is trying to change their behavior. After all, if I am saving you from turning the wrong way when you are lost, what difference does it make if you don’t know I am steering you? This outlook has led to a widespread preference among nudgers for “moving in imperceptible steps that do not create backlash or resistance,” as Andreas Souvaliotis, the executive chairman of Social Change Rewards, recently said in an interview in Forbes. Remove the assumption that people are inescapably mistaken, though, and the justification for this kind of sneakiness disappears. And that suggests a whole different direction for nudge. Call it Nudge 2.0: Corporate messages and government policies that increase awareness about how the mind works, rather than depending on people not to notice. As it is with the slime mold, the decoy effect might be irresistible to us all. But at least we can understand what we’re doing when we succumb to it.

David Berreby is the author of Us and Them: The Science of Identity, and writes the Mind Matters blog at Bigthink.com.