Nineteen stories up in a Brooklyn office tower, the view from Manuela Veloso’s office—azure skies, New York Harbor, the Statue of Liberty—is exhilarating. But right now we only have eyes for the nondescript windows below us in the tower across the street.

In their panes, we can see chairs, desks, lamps, and papers. They don’t look quite right, though, because they aren’t really there. The genuine objects are in a building on our side of the street—likely the one where we’re standing. A bright afternoon sun has lit them up, briefly turning the facing windows into mirrors. We see office bric-a-brac that looks ghostly and luminous, floating free of gravity.

Veloso, a professor of computer science and robotics at Carnegie Mellon University, and I have been talking about what machines perceive and how they “think”—a subject not nearly as straightforward as I had expected. “How would a robot figure that out?” she says about the illusion in the windows. “That is the kind of thing that is hard for them.”

Artificial intelligence has been conquering hard problems at a relentless pace lately. In the past few years, an especially effective kind of artificial intelligence known as a neural network has equaled or even surpassed human beings at tasks like discovering new drugs, finding the best candidates for a job, and even driving a car. Neural nets, whose architecture copies that of the human brain, can now—usually—tell good writing from bad, and—usually—tell you with great precision what objects are in a photograph. Such nets are used more and more with each passing month in ubiquitous jobs like Google searches, Amazon recommendations, Facebook news feeds, and spam filtering—and in critical missions like military security, finance, scientific research, and those cars that drive themselves better than a person could.

Neural nets sometimes make mistakes that people can understand. (Yes, those desks look quite real; it’s hard for me, too, to see they are a reflection.) But some hard problems make neural nets respond in ways that aren’t understandable. Neural nets execute algorithms, which are sets of instructions for completing a task. Algorithms, of course, are written by human beings. Yet neural nets sometimes come out with answers that are downright weird: not right, but also not wrong in a way that people can grasp. Instead, the answers sound like something an extraterrestrial might come up with.

These oddball results are rare. But they aren’t just random glitches. Researchers have recently devised reliable ways to make neural nets produce such eerily inhuman judgments. That suggests humanity shouldn’t assume our machines think as we do. Neural nets sometimes think differently. And we don’t really know how or why.

That can be a troubling thought, even if you aren’t yet depending on neural nets to run your home and drive you around. After all, the more we rely on artificial intelligence, the more we need it to be predictable, especially in failure. Not knowing how or why a machine did something strange leaves us unable to make sure it doesn’t happen again.

But the occasional unexpected weirdness of machine “thought” might also be a teaching moment for humanity. Until we make contact with extraterrestrial intelligence, neural nets are probably the ablest non-human thinkers we know.

To the extent that neural nets’ perceptions and reasoning differ from ours, they might show us how intelligence works outside the constraints of our species’ limitations. Galileo showed that Earth wasn’t unique in the universe, and Darwin showed that our species isn’t unique among creatures. Joseph Modayil, an artificial intelligence researcher at the University of Alberta, suggests that perhaps computers will do something similar for the concept of intellect. “Artificial systems show us intelligence spans a vast space of possibilities,” he says.

First, though, we need to make sure our self-driving cars don’t mistake school buses for rugby shirts, and don’t label human beings in photos as gorillas or seals, as one of Google’s neural nets recently did. In the past couple of years, a number of computer scientists have become fascinated with the problem and with possible fixes. But they haven’t found one yet.

Jeff Clune is an assistant professor of computer science at the University of Wyoming. Having happened upon bizarre behavior in artificial neural nets, he has recently begun to study it. “I don’t know that anyone has a very good handle on why this is happening,” he says.

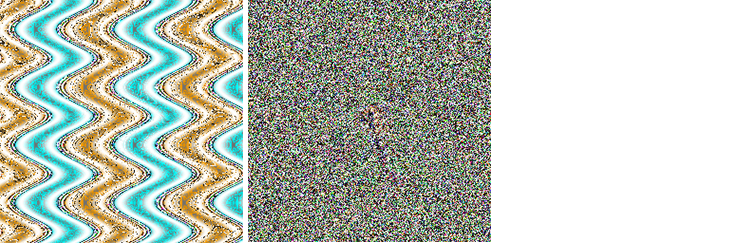

Last year, in a paper titled “Deep Neural Networks Are Easily Fooled,”1 Clune and his coauthors Anh Nguyen and Jason Yosinski reported that they had made a successful system, designed to recognize objects, declare with at least 99.6 percent confidence that the image below on the left is a starfish. And (again, with 99.6 percent confidence) that the one on the right is a cheetah.

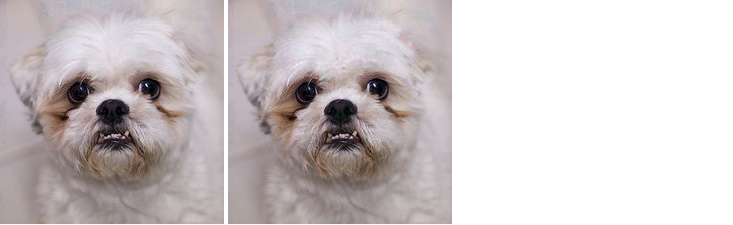

Conversely, a different team of researchers from Google, Facebook, New York University, and the University of Montreal got a neural-net system to rule that, in the figure below, the lefthand image is a dog. But that the one on the right (with slightly altered pixels) is an ostrich.

The picture of the dog on the right is one of what the researchers have called “adversarial examples.”2 These images differ only slightly from images that are classified correctly, and yet cause advanced neural nets to make judgments that leave humans shaking their heads.
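
How can a change too small to notice flip a label? A toy sketch in Python suggests one mechanism. Everything in it is an assumption made for illustration: the 100-pixel "image," the hand-picked weights, and the two labels are hypothetical, and the classifier is a simple linear score rather than a deep network. The nudge follows the sign of each weight, in the spirit of the gradient-sign idea described in reference 4; it is not the optimization procedure used in the "ostrich" paper.

```python
def score(weights, bias, pixels):
    """Linear 'dog' score: positive means dog, otherwise ostrich."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias

def classify(weights, bias, pixels):
    return "dog" if score(weights, bias, pixels) > 0 else "ostrich"

def sign(value):
    return (value > 0) - (value < 0)

def perturb(weights, pixels, epsilon):
    """Move each pixel by at most epsilon against the dog score,
    keeping every pixel value inside the range [0, 1]."""
    return [min(1.0, max(0.0, p - epsilon * sign(w)))
            for w, p in zip(weights, pixels)]

# Hypothetical model and image: 100 pixels, weights alternating in sign.
weights = [0.1 if i % 2 == 0 else -0.1 for i in range(100)]
bias = 0.0
image = [0.55 if i % 2 == 0 else 0.45 for i in range(100)]

adversarial = perturb(weights, image, epsilon=0.06)
biggest_change = max(abs(a - b) for a, b in zip(adversarial, image))

print(classify(weights, bias, image))        # label for the original
print(classify(weights, bias, adversarial))  # label after the nudge
print(biggest_change)                        # largest single-pixel change
```

No single pixel moves by more than 0.06 on a 0-to-1 scale, yet the tiny shifts all push the score the same way, and across 100 pixels they add up to enough to change the answer.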

Neural nets are one form of machine learning, which analyzes and draws conclusions from data. And machine learning isn’t used only for visual tasks, notes Solon Barocas, a postdoctoral research associate at the Center for Information Technology Policy at Princeton University.1 In 2012, Barocas points out, a system built to evaluate essays for the Educational Testing Service declared this prose (created by former Massachusetts Institute of Technology writing professor Les Perelman) to be great writing:

In today’s society, college is ambiguous. We need it to live, but we also need it to love. Moreover, without college most of the world’s learning would be egregious. College, however, has myriad costs. One of the most important issues facing the world is how to reduce college costs. Some have argued that college costs are due to the luxuries students now expect. Others have argued that the costs are a result of athletics. In reality, high college costs are the result of excessive pay for teaching assistants.

Big words and neatly formed sentences can’t disguise the absence of any real thought or argument. The machine, though, gave it a perfect score.3

Such screwy results can’t be explained away as hiccups in individual computer systems, because examples that send one system off its rails will do the same to another. After he read “Deep Neural Networks Are Easily Fooled,” Dileep George, cofounder of the AI research firm Vicarious, was curious to see how a different neural net would respond. On his iPhone, he happened to have a now-discontinued app called Spotter, a neural net that identifies objects. He pointed it at the wavy lines that Clune’s network had called a starfish. “The phone says it’s a starfish,” George says.

Spotter was examining a photo that differed from the original in many ways: George’s picture was taken under different lighting conditions and at a different angle, and included some pixels in the surrounding paper that weren’t part of the example itself. Yet the neural net produced the same extraterrestrial-sounding interpretation. “That was pretty interesting,” George says. “It means this finding is pretty robust.”

In fact, the researchers involved in the “starfish” and “ostrich” papers made sure their fooling images succeeded with more than one system. “An example generated for one model is often misclassified by other models, even when they have different architectures,” or were trained on different data sets, wrote Christian Szegedy, of Google, and his colleagues.4 “It means that these neural networks all kind of agree what a school bus looks like,” Clune says. “And what they think a school bus looks like includes many things that no person would say is a school bus. That really surprised a lot of people.”

Of course, any system that takes in and processes data can misidentify objects. That includes the human brain, which can be convinced that the patterns in its morning toast are a portrait of Jesus. But when you look at a pattern and see something that is not there—what psychologists call “pareidolia”—other people can understand why you made your mistake. This is because we all share the same mental system for seeing things and making sense of them.

In a perfect world, our machines would share that system too, and we could understand them as well as we understand one another. Oddball results from neural nets show us that we don’t live in that world. In such moments, we can see that algorithmic “thinking” is not a copy of ours, says Barocas. “When systems act in ways humans would not, we can see that their pareidolia is different from ours.”

People who write the algorithms, Barocas adds, “want to humanize things, and to interpret things in ways that are in line with how we think and reason. But we need to be prepared to accept that computers, even though they’re performing tasks that we perform, are performing them in ways that are very different.”

Of course, the AI that calls a dog an ostrich is not a threat. Many written tests are scored correctly by machine, and images that fool neural nets appear to be improbable. But, some researchers say, they will occur. Even one instance of a machine deciding that a school bus is a rugby shirt is one too many, Clune says, “especially if you happen to be in the self-driving bus that makes that mistake.”

It is not yet possible to understand how a neural net arrived at an incomprehensible result. The best computer scientists can do with neural nets is to observe them in action and note how an input triggers a response in some of their units. That’s better than nothing, but it’s not close to a rigorous mathematical account of what is going on inside. In other words, the problem isn’t just that machines think differently from people. It is that people can’t reverse-engineer the process to find out why.

Ironically, non-human pareidolia is turning up in the algorithms that are supposed to mimic the most human part of our brains. Neural nets were first proposed in the 1940s as a rough software model of the cerebral cortex, where much of perception and thinking takes place. In place of the physical neurons in our heads, the network runs virtual neurons made of code. Each of these is a node with multiple channels for receiving information, a processor for computing a function from those inputs, and one channel for outputting the result of its work. These virtual neurons, like the cells in the human cortex, are organized in layers. Information entering a layer triggers a collective response from its neurons (some are activated and communicate with each other, while others stay silent). The result is passed on to the next layer, where it is treated as raw material for further processing.
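
For readers who like to see the moving parts, the description above can be sketched in a few lines of Python. This is a minimal illustration, not any particular research system: the weights are hypothetical numbers picked by hand, and the sigmoid function is just one common choice for the computation a virtual neuron performs.

```python
import math

def neuron(inputs, weights, bias):
    """One virtual neuron: several input channels, one function, one output."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))  # sigmoid squashes into (0, 1)

def layer(inputs, weight_rows, biases):
    """A layer is a group of neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

def forward(inputs, layers):
    """Hand each layer's result to the next layer as its raw material."""
    signal = inputs
    for weight_rows, biases in layers:
        signal = layer(signal, weight_rows, biases)
    return signal

# Hypothetical network: 3 inputs -> 2 hidden neurons -> 1 output neuron.
network = [
    ([[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]], [0.0, 0.1]),
    ([[1.2, -0.7]], [0.05]),
]
output = forward([1.0, 0.0, 1.0], network)
print(output)  # a single number between 0 and 1
```

Each neuron here is trivially simple; whatever interesting behavior a real network shows comes from the values of the weights and from stacking many such layers.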

Although each neuron is a simple information cruncher, this architecture allows the cells collectively to perform amazing feats with the data they receive. In real brains, for instance, neurons convert a few million electrical impulses in your optic nerves into a perception that you’re looking at a reflection in a window. Layers in your cortex that respond to, say, edges of objects pass on their work to layers that interpret that interpretation—responding to an edge even if it is upside down and in dim light. Layers further along interpret that interpretation, and at the end the visual information is integrated into a complex perception: “That’s an upside-down banana in the shadows.”

Neural nets are simpler. But with recent advances in processing power and the growing availability of huge data sets to provide examples, they can now achieve similar successes. Their layered processing can find patterns in vast amounts of data, and use those patterns to connect labels like “cheetah” and “starfish” to the right images.

The machine doesn’t have hundreds of millions of years of evolutionary design guiding it to notice traits like colors, edges, and shapes. Instead, a neural net is “trained” by human programmers. They will give it—to take one example—a vast number of scrawls, each identified as a human’s scribbled version of a letter of the alphabet. As the algorithm sorts them, its wrong guesses are corrected, until all its classifications of the training data are correct. With thousands of examples of what humans consider to be a letter “d,” a neural net soon works out a rule for deciding correctly what it should label a “d” in the future. That’s one of the great appeals of neural net architecture: It allows computer scientists to design handwriting recognition without having to come up with endless lists of rules for defining a proper “d.” And they don’t need to show the machine every “d” ever created, either. With just a tiny subset of all possible d’s in the universe—the examples it has trained on—the neural net has taught itself to recognize any future “d” it encounters.
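
The correct-the-wrong-guesses loop can be sketched with the simplest possible learner, a single-neuron perceptron. This is an illustrative stand-in, not the method behind any handwriting system mentioned here: the six "scrawls" are hypothetical, each reduced to two made-up feature values, and real networks adjust many layers of weights rather than one.

```python
def predict(weights, bias, point):
    """Guess 1 ("d") if the weighted sum is positive, else 0 ("not d")."""
    total = sum(w * x for w, x in zip(weights, point)) + bias
    return 1 if total > 0 else 0

def train(examples, epochs=100, rate=0.1):
    """Perceptron rule: change the weights only when a guess is wrong."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for point, label in examples:
            error = label - predict(weights, bias, point)
            if error != 0:
                mistakes += 1
                weights = [w + rate * error * x
                           for w, x in zip(weights, point)]
                bias += rate * error
        if mistakes == 0:  # every training example is now classified correctly
            break
    return weights, bias

# Hypothetical training set: (features, label), where 1 means "d".
examples = [
    ([0.9, 0.8], 1), ([0.8, 0.9], 1), ([0.7, 0.95], 1),
    ([0.1, 0.2], 0), ([0.2, 0.1], 0), ([0.3, 0.05], 0),
]
weights, bias = train(examples)

# A scrawl the learner never saw during training.
print(predict(weights, bias, [0.85, 0.75]))
```

Nobody wrote a rule for what counts as a "d" here. The rule is whatever the weights happen to be when the corrections stop, which is also why it can be hard to read back out.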

The disadvantage of this architecture is that when the machine rules that TV static is a cheetah, computer scientists don’t have a list of its criteria for “cheetah,” which they can search for a glitch. The neural net isn’t executing a set of human-created instructions, nor is it running through a complete library of all possible cheetahs. It is just responding to inputs as it receives them. The algorithms that create a net are instructions for how to process information in general, not instructions for solving any particular problem. In other words, neural net algorithms are not like precise recipes—take this ingredient, do that to it, and when it turns soft, do this. They are more like orders placed in a restaurant. “I’d like a grilled cheese and a salad, please. How you do it is up to you.” As Barocas puts it, “to find results from exploring the data, to discover relationships, the computer uses rules that it has made.”

At the moment, humans can’t find out what that computer-created rule is. In a typical neural net, the only layers whose workings people can readily discern are the input layer, where data is fed to the system, and the output layer, where the work of the other layers is reported out to the human world. In between, in the hidden layers, virtual neurons process information and share their work by forming connections among themselves. As in the human brain, the sheer number of operations makes it impossible, as a practical matter, to pinpoint the contribution of any single neuron to the final result. “If you knew everything about each person in a 6-billion-person economy, you would not know what is going to happen, or even why something happened in the past,” Clune says. “The complexity is ‘emergent’ and depends on complex interactions between millions of parts, and we as humans don’t know how to make sense of that.”

Moreover, a great deal of information processing is done in the ever-changing connections among neurons, rather than in any single cell. So even if computer scientists pinned down what every cell in a network was doing at a given moment, they still would not have a complete picture of its operations. Knowing that a part of a layer of neurons is activated by the outline of a face, for instance, does not tell you what part those neurons play in deciding whose face it is.

This is why, as Clune and his colleagues recently put it, “neural networks have long been known as ‘black boxes’ because it is difficult to understand exactly how any particular, trained neural network functions, due to the large number of interacting, non-linear parts.”5

Clune compares computer scientists dealing with neural nets to economists grappling with the global economy. “It’s intractable and hard to understand,” he says. “But just because you can’t understand everything doesn’t mean you can’t understand anything.” About the inner workings of neural nets, he says, “we’re slowly starting to figure them out, and we may have, say, an Alan Greenspan level of understanding. But we don’t have a physicist’s understanding.”

Last June, a team of researchers at Google—Alexander Mordvintsev, Christopher Olah, and Mike Tyka—revealed a method they’d developed to make an image-recognition net reveal the work of specific layers in its architecture. “We simply feed the network an arbitrary image or photo and let the network analyze the picture,” the trio wrote. “We then pick a layer and ask the network to enhance whatever it detected.”6 The result was an array of striking images, whose exact shape varied according to what the interrogated layer was focused on. (They soon became famous on the Web as “Google Deep Dream.”) “For example,” the Google team wrote, “lower layers tend to produce strokes or simple ornament-like patterns, because those layers are sensitive to basic features such as edges and their orientations.”
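
The "enhance whatever it detected" step can be imitated on a very small scale. The sketch below is a loose illustration and makes several assumptions: the "layer" is two hypothetical hand-built detectors over a four-pixel image, and the enhancement is plain gradient ascent on the image itself. The Google team worked with a large trained image-recognition network, which this toy does not attempt to reproduce.

```python
def activations(weight_rows, image):
    """Response of each detector in the chosen layer to the image."""
    return [sum(w * x for w, x in zip(row, image)) for row in weight_rows]

def strength(weight_rows, image):
    """How strongly the layer responds overall (sum of squared responses)."""
    return sum(a * a for a in activations(weight_rows, image))

def enhance(weight_rows, image, steps=20, rate=0.05):
    """Repeatedly nudge the pixels in the direction that makes the
    layer's existing responses stronger (gradient ascent on the image)."""
    image = list(image)
    for _ in range(steps):
        acts = activations(weight_rows, image)
        # Gradient of 0.5 * sum(a_j ** 2) with respect to pixel i.
        grad = [sum(a * row[i] for a, row in zip(acts, weight_rows))
                for i in range(len(image))]
        image = [x + rate * g for x, g in zip(image, grad)]
    return image

# Hypothetical layer: one detector compares pixels 0 and 1,
# the other compares pixels 2 and 3.
layer_weights = [[1.0, -1.0, 0.0, 0.0],
                 [0.0, 0.0, 1.0, -1.0]]
photo = [0.6, 0.4, 0.5, 0.5]  # faint contrast on the left, none on the right

dreamed = enhance(layer_weights, photo)
print(strength(layer_weights, photo), strength(layer_weights, dreamed))
print(dreamed)
```

The faint contrast the first detector picked up gets exaggerated, while the pixels that triggered nothing are left alone. In this toy the values are not clipped to a displayable range, so it shows only the direction of the effect, not a finished picture.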

Not long after, Clune, Yosinski, Nguyen, Thomas Fuchs of the California Institute of Technology, and Hod Lipson of Cornell University published a different method of getting an active neural net to reveal what parts of its layers, and even what individual neurons, are doing. Their software tracks events in each layer of a neural network after a human has presented it with a specific image. A user can see, next to the object or image she has shown the network, a map in real time of the neurons that are responding to it. “So you can see what a particular node responds to,” Clune explains. “We’re starting to allow you to shine light into that black box and understand what’s going on.”

While researchers strive to figure out why vast data sets used to train algorithms do not reflect the reality they expected, others think the strange rules dreamed up by algorithms might be teaching us about aspects of reality that we can’t detect ourselves.

After all, Clune says, a flower will look good to both a human and a bee, but that doesn’t mean both creatures see the same thing. “When we look at that flower in the spectrum that its pollinator can see in, the pattern is totally different,” he says. Even though a bee would find our color perception weird, and vice versa, neither species’ view is an illusion. Perhaps the strangeness of neural-net cognition will teach us something. Perhaps it will even delight us.

In the work by Clune and his colleagues, some of the images approved by the recognition algorithm were not like the TV static that the machine declared a cheetah. Instead, this class of images contained some relationship to the category that the algorithm chose.7 It declared, for example, that in the figure below the image on the left was a prison, and that the images on the right were strawberries.

Humans did not make the same categorizations. But when shown what the machine had done, people could see the connection between image and concept. Unlike the static-is-a-cheetah type of judgments, these kinds of machine judgments could lead people to see strawberries in a new way, or think about the category “strawberry” differently.

That sounded like a good definition of “art” to the researchers. So they submitted some of their images to a competition for a show at the University of Wyoming Art Museum. The algorithm’s work was among the 35 percent of entries accepted and displayed at the museum—where it then won a prize. The judges didn’t learn that the artist wasn’t human until weeks after they’d admitted the work into the show. “We submitted to a juried art competition, and there was no requirement to submit any information with the art,” Clune says. “But then we sent them an email a while later, and said, ‘Oh, by the way, here’s this fun backstory …’ ”

It’s fair, then, to describe AI researchers as optimists—but then, AI researchers are people who find the prospect of computer-written poems or computer-choreographed dances delightful. Even if an algorithm comes up with dance moves no human could perform, Clune says, “we could still enjoy watching the robots do the dance.” What we know for sure in 2015 is that, for now, humanity doesn’t fully understand algorithmic pareidolia, even as it depends more and more on algorithmic processes.

“There’s no precise yes or no answer to these questions, but it’s certainly fascinating,” Clune says. “It’s almost like modern neuroscience. We’re taking these brains and trying to reverse-engineer them to find out how they work.”

In any event, the need for a better view into the machine “mind” extends beyond researchers puzzled by neural nets. It’s a challenge for the entire field of artificial intelligence—and the entire society that depends on it.

David Berreby is the author of Us and Them: The Science of Identity, and is currently at work on a book about the future of personal autonomy. He blogs at Otherknowsbest.

References

1. Nguyen, A., Yosinski, J., & Clune, J. Deep neural networks are easily fooled: High confidence predictions for unrecognizable images. Preprint arXiv 1412.1897 (2014).

2. Szegedy, C. et al. Intriguing properties of neural networks. Preprint arXiv 1312.6199 (2013).

3. Hern, A. “Computers Can’t Read” The New Statesman (2012).

4. Goodfellow, I.J., Shlens, J., & Szegedy, C. Explaining and harnessing adversarial examples. Preprint arXiv 1412.6572 (2014).

5. Yosinski, J., Clune, J., Nguyen, A., Fuchs, T., & Lipson, H. Understanding neural networks through deep visualization. Preprint arXiv 1506.06579 (2015).

6. Mordvintsev, A., Olah, C., & Tyka, M. “Inceptionism: Going Deeper into Neural Networks” http://googleresearch.blogspot.com (2015).

7. Nguyen, A., Yosinski, J., & Clune, J. Innovation engines: Automated creativity and improved stochastic optimization via deep learning. Proceedings of the Genetic and Evolutionary Computation Conference (2015).